HS119: Securing 2026: How AI, Quantum, and the AI-Powered Browser are Driving Enterprise Defense (Sponsored)

Anand Oswal, Executive Vice President at Palo Alto Networks, joins Johna Johnson and John Burke for a wide-ranging exploration of two emerging focal points of enterprise risk: cryptographically relevant quantum computing, and browser-mediated agentic AI. The looming arrival of quantum computers that can break legacy encryption has already created the threat of “harvest now, decrypt...

NB554: AWS, Google Link Public Clouds; Trading Data Center Has Zero Chill

Take a Network Break! We start with listener follow-up on Fortinet’s vulnerability numbering, and sound a red alert about an authentication bypass vulnerability in ASUS’s AiCloud service. AWS and Google announce a joint cross-cloud interconnect offering (other cloud providers are invited to play), Microsoft and Ciena pitch a new design to boost optical network resiliency,...

Multi-Pod EVPN Troubleshooting (Part 3)

Last week, we fixed the mismatched route targets in our sample multi-pod EVPN fabric. With the route targets corrected, every PE device should see every other PE device as a remote VTEP for ingress replication. We got that working on Site-A (AS 65001) but not on Site-B (AS 65002); let’s see what else is broken.
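One way to check the full-mesh expectation is to compare, per PE, the remote VTEPs it actually lists against the VTEP addresses of every other PE. The Python sketch that follows assumes you have already collected that data (for example, from the EVPN Type-3 routes each PE received); the function name, PE names, and addresses are hypothetical and not taken from the lab topology.

```python
"""Sketch: find PEs whose ingress-replication list is missing remote VTEPs.

Assumption: for each PE you already have the set of remote VTEP addresses
it currently lists. All names and addresses here are hypothetical.
"""


def missing_vteps(
    vtep_ip: dict[str, str], observed: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return {pe: missing remote VTEP IPs} for every PE with a gap.

    vtep_ip:  PE name -> its own VTEP (loopback) address
    observed: PE name -> remote VTEP addresses that PE currently lists
    """
    all_vteps = set(vtep_ip.values())
    gaps: dict[str, set[str]] = {}
    for pe, own_ip in vtep_ip.items():
        # Full mesh: every PE should list every other PE, never itself.
        expected = all_vteps - {own_ip}
        missing = expected - observed.get(pe, set())
        if missing:
            gaps[pe] = missing
    return gaps


if __name__ == "__main__":
    # Hypothetical two-site fabric: a1/a2 in Site-A, b1/b2 in Site-B.
    vteps = {"a1": "10.0.0.1", "a2": "10.0.0.2", "b1": "10.0.0.3", "b2": "10.0.0.4"}
    seen = {
        "a1": {"10.0.0.2", "10.0.0.3", "10.0.0.4"},
        "a2": {"10.0.0.1", "10.0.0.3", "10.0.0.4"},
        "b1": {"10.0.0.4"},
        "b2": {"10.0.0.3"},
    }
    for pe, ips in sorted(missing_vteps(vteps, seen).items()):
        print(pe, "is missing", sorted(ips))
```

With this made-up data, only the Site-B PEs are reported, each missing the two Site-A VTEPs, which is the kind of asymmetry that points at a per-site problem rather than a fabric-wide one.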

Note: This is the fifth blog post in the Multi-Pod EVPN series. If you stumbled upon it, start with the design overview and troubleshooting overview posts. More importantly, familiarize yourself with the topology we’ll be using; it’s described in the Multi-Pod EVPN Troubleshooting: Fixing Next Hops post.

Ready? Let’s go. Here’s our network topology:

Computer Network Design Complexity and Tradeoffs Training

I’m teaching a “one-off” special event class on O’Reilly’s platform (via Pearson) this coming Friday, the 5th of December. From the description:

Join networking engineer and infrastructure expert Russ White for this exclusive, one-time event exploring the critical role of tradeoffs in network design. We’ll begin by unpacking how complexity shapes the decisions architects and designers must make, and how tradeoffs are often an unavoidable part of navigating that complexity. Through real-world examples, you’ll learn how different network design choices impact overall system complexity, and how to approach these decisions with greater clarity and confidence. We’ll wrap up with an in-depth discussion of unintended consequences—how they arise, how to anticipate them, and how they relate to designing in complex, adaptive environments.

As always, if you register for the course, you can watch the recording later.

How to Upgrade Windows 11 on Unsupported Hardware

Upgrading Windows 11 can be challenging if your computer does not meet Microsoft’s strict hardware […]

The post How to Upgrade Windows 11 on Unsupported Hardware first appeared on Brezular's Blog.

Tech Bytes: Bringing AI Reasoning to Infrastructure with Itential FlowAI (Sponsored)

Itential has announced FlowAI, a new offering that brings agentic AI to Itential’s network automation platform. On today’s Tech Bytes podcast, Ethan Banks talks with Peter Sprygada, Chief Architect at Itential, about how FlowAI works, its components, and how Itential uses the Model Context Protocol (MCP). They also dig into how FlowAI supports AI-driven orchestration...

netlab as the Universal Configuration Translator

Dan Partelly, a heavy netlab user (and an active contributor), sent me this interesting perspective on how one might want to use netlab without ever building a lab with it. All I added was a bit of AI-assisted editing; my comments are on a grey background.

In every podcast and interview I’ve listened to, netlab was described as a “lab management solution”. That’s misleading: thanks to its device abstraction, it’s also a translator that can easily generate perfectly usable configurations for devices or technologies you’ve never worked with.

Why Replicate is joining Cloudflare

We're happy to announce that as of today Replicate is officially part of Cloudflare.

When we started Replicate in 2019, OpenAI had just open sourced GPT-2, and few people outside of the machine learning community paid much attention to AI. But for those of us in the field, it felt like something big was about to happen. Remarkable models were being created in academic labs, but you needed a metaphorical lab coat to be able to run them.

We made it our mission to get research models out of the lab into the hands of developers. We wanted programmers to creatively bend and twist these models into products that the researchers would never have thought of.

We approached this as a tooling problem. Just as Heroku made it possible to run websites without managing web servers, we wanted to build tools for running models without having to understand backpropagation or deal with CUDA errors.

The first tool we built was Cog: a standard packaging format for machine learning models. Then we built Replicate as the platform to run Cog models as API endpoints in the cloud. We abstracted away both the low-level machine learning and the complicated...

An $8 Price Tag, Mounting Criticism: McDonald’s Faces an Online Storm Amid a Pricing Crisis

Fast-food giant McDonald’s is trying to offer value, but its latest promotion has sparked a storm instead. The company promoted an $8 McNugget meal, and consumers felt the price made no sense. The incident points to a deeper problem: the company is struggling to maintain its image of affordability. As a result, the online criticism has been loud.

The $8 Promotion That Sparked Online Anger

Earlier this month, McDonald’s announced a limited-time promotion: 10 McNuggets, fries, and a drink. The company positioned it as a good-value offer. However, the response on social media was strongly negative. Many people complained under the company’s posts, voicing their dissatisfaction openly.

For example, one commenter questioned the value of the promotion: “Since when is $8 a good price for nuggets?” Other complaints focused on quality and service, and drive-thru wait times also came under scrutiny. As a result, the post was flooded with hundreds of negative reviews.

The company tried to respond by asking users to send their contact information so the issues could be resolved privately. However, the effort did not calm public anger, and the storm of online criticism continued unabated. The situation shows the gap between perception...

Fun Reading: From XML to LLMs

In his latest blog post (Systems design 3: LLMs and the semantic revolution), Avery Pennarun claims that LLMs might solve a problem we have consistently failed to solve at scale for the last 60 (or so) years: automated B2B data exchange.

You may or may not agree with him (an accountant or two might get upset about hallucinated invoice items), but his articles are always a fun read.

KubeCon NA 2025: Three Core Kubernetes Trends and a Calico Feature You Should Use Now

The Tigera team recently returned from KubeCon + CloudNativeCon North America and CalicoCon 2025 in Atlanta, Georgia. It was great, as always, to attend these events, feel the energy of our community, and hold in-depth discussions at the booth and in our dedicated sessions that revealed specific, critical shifts shaping the future of cloud-native platforms.

We pulled together observations from our Tigera engineers and product experts in attendance to identify three key trends that are directly influencing how organizations manage Kubernetes today.

Trend 1: Kubernetes is Central to AI Workload Orchestration

A frequent and significant topic of conversation was the role of Kubernetes in supporting Artificial Intelligence and Machine Learning (AI/ML) infrastructure.

The consensus is clear: Kubernetes is becoming the standard orchestration layer for these specialized workloads. This requires careful consideration of networking and security policies tailored to high-demand environments, and it underscores the need for robust, high-performance CNI solutions designed for the future of specialized computing.

Trend 2: Growth in Edge Deployments Increases Complexity

Conversations pointed to a growing and tangible expansion of Kubernetes beyond central data centers and...

Best of the Hedge: Episode 5 on DoH (Part 1)

In this episode of the Hedge, Geoff Huston joins Tom Ammon and Russ White to discuss the ideas behind DNS over HTTPS (DoH), and to consider the implications of its widespread adoption. Is it time to bow to our new overlords?

Lab: IS-IS Route Redistribution

Route redistribution into IS-IS seems even easier than its OSPFv2/OSPFv3 counterparts: there are no additional LSAs/LSPs, and the redistributed prefixes are included in the router LSP. Things get much more interesting once you start looking into the gory details and exploring how different implementations use (or ignore) the various metric bits and TLVs.
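To make “metric bits and TLVs” concrete, here is a minimal Python sketch that encodes and decodes IPv4 reachability entries. It assumes the field layouts from RFC 1195 (narrow metrics, where the default-metric byte carries a 6-bit metric and an internal/external bit) and RFC 5305 (wide metrics in TLV 135, with a 32-bit metric and a control byte holding the up/down bit, the sub-TLV bit, and the prefix length). The helper names are hypothetical; this is an illustration of the wire format, not code from netlab or any router implementation.

```python
"""Sketch: encode/decode IS-IS IPv4 reachability entries to show the metric bits.

Layouts follow RFC 1195 (narrow metrics) and RFC 5305 (TLV 135).
Function names are hypothetical helpers.
"""
import ipaddress

# RFC 5305 TLV 135 control byte
UP_DOWN_BIT = 0x80      # set when the prefix was leaked down from level 2 into level 1
SUB_TLV_BIT = 0x40      # set when sub-TLVs follow the prefix
PREFIX_LEN_MASK = 0x3F  # low 6 bits carry the prefix length

# RFC 1195 default-metric byte (TLV 128/130)
NARROW_IE_BIT = 0x40    # 0 = internal metric type, 1 = external metric type


def narrow_default_metric(metric: int, external_type: bool = False) -> int:
    """Build the narrow default-metric byte: 6-bit metric plus the I/E bit."""
    if not 0 <= metric <= 63:
        raise ValueError("narrow metrics are limited to 0..63")
    return metric | (NARROW_IE_BIT if external_type else 0)


def encode_tlv135_entry(prefix: str, metric: int, down: bool = False) -> bytes:
    """Encode one extended IP reachability entry (without sub-TLVs)."""
    net = ipaddress.IPv4Network(prefix)
    if not 0 <= metric <= 0xFFFFFFFF:
        raise ValueError("wide metric must fit in 32 bits")
    control = net.prefixlen & PREFIX_LEN_MASK
    if down:
        control |= UP_DOWN_BIT
    # Only the significant prefix octets are carried on the wire.
    octets = (net.prefixlen + 7) // 8
    return metric.to_bytes(4, "big") + bytes([control]) + net.network_address.packed[:octets]


def decode_tlv135_entry(data: bytes) -> tuple[str, int, bool, bool]:
    """Return (prefix, metric, up/down bit, sub-TLV bit) for one entry."""
    metric = int.from_bytes(data[0:4], "big")
    control = data[4]
    plen = control & PREFIX_LEN_MASK
    octets = (plen + 7) // 8
    addr = data[5:5 + octets] + bytes(4 - octets)  # pad back to 4 bytes
    prefix = f"{ipaddress.IPv4Address(addr)}/{plen}"
    return prefix, metric, bool(control & UP_DOWN_BIT), bool(control & SUB_TLV_BIT)


if __name__ == "__main__":
    entry = encode_tlv135_entry("10.1.0.0/16", 20)
    print(entry.hex(), decode_tlv135_entry(entry))
    print(hex(narrow_default_metric(10, external_type=True)))
```

Note the asymmetry the sketch exposes: the narrow-metric byte has an explicit internal/external bit, while the TLV 135 control byte does not, so how an implementation marks or interprets redistributed prefixes with wide metrics is exactly the kind of detail worth checking in the LSP database.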

You’ll find more details (and the opportunity to explore the LSP database contents in a safe environment) in the IS-IS Route Redistribution lab exercise.

You can start the lab in your browser using GitHub Codespaces (or set up your own lab infrastructure). After starting the lab environment, change to the feature/7-redistribute directory and execute netlab up.

NANOG 95

NANOG held its 95th meeting in Arlington, Texas, in October 2025. Here's my take on a few presentations that caught my attention during this three-day meeting.

UET Relative Addressing and Its Similarities to VXLAN

Relative Addressing

As described in the previous section, applications use endpoint objects as their communication interfaces for data transfer. To write data from local memory to a target memory region on a remote GPU, the initiator must authorize the local UE-NIC to fetch data from local memory and describe where that data should be written on the remote side.

To route the packet to the correct Fabric Endpoint (FEP), the application and the UET provider must supply the FEP’s IP address (its Fabric Address, FA). To determine where in the remote process’s memory the received data belongs, the UE-NIC must also know:

- Which job the communication belongs to

- Which process within that job owns the target memory

- Which Resource Index (RI) table should be used

- Which entry in that table describes the exact memory location

This indirection model is called relative addressing.
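The lookup chain above can be modeled as a nested table on the receiving UE-NIC. The Python sketch that follows is a simplified illustration under stated assumptions: the class, field, and function names (MemoryRegion, RelativeAddress, resolve, and the job/PID/RI-table/entry keys) are hypothetical and are not taken from the UET specification. It only shows the indirection idea: the sender names indices, and the receiver translates them into an absolute memory address and checks bounds.

```python
"""Sketch of relative addressing: resolving a write target on the receiving UE-NIC.

All names are hypothetical illustrations of the lookup chain
(job -> process -> RI table -> entry); they are not UET spec identifiers.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryRegion:
    base_addr: int  # start of the registered buffer in the target process
    length: int     # size of the buffer in bytes


@dataclass(frozen=True)
class RelativeAddress:
    fabric_address: str  # FA: IP address of the target FEP (used for routing)
    job_id: int          # which job the communication belongs to
    pid: int             # which process within that job owns the memory
    ri_table: int        # which Resource Index table to use
    ri_entry: int        # which entry in that table describes the memory
    offset: int = 0      # byte offset within the described region


# job_id -> pid -> RI table -> RI entry -> MemoryRegion
NicState = dict[int, dict[int, dict[int, dict[int, MemoryRegion]]]]


def resolve(local_fa: str, state: NicState, addr: RelativeAddress, nbytes: int) -> int:
    """Translate a relative address into an absolute local memory address."""
    if addr.fabric_address != local_fa:
        raise LookupError("packet is not addressed to this FEP")
    try:
        region = state[addr.job_id][addr.pid][addr.ri_table][addr.ri_entry]
    except KeyError as exc:
        raise LookupError(f"no registered memory for {addr}") from exc
    # Never let a remote writer step outside the registered region.
    if addr.offset < 0 or addr.offset + nbytes > region.length:
        raise ValueError("write would fall outside the registered region")
    return region.base_addr + addr.offset


if __name__ == "__main__":
    # Hypothetical receiver: rank 0 (PID 0) behind FEP 10.0.1.11 in job 42.
    state = {42: {0: {1: {7: MemoryRegion(base_addr=0x7F000000, length=4096)}}}}
    target = RelativeAddress("10.0.1.11", job_id=42, pid=0, ri_table=1, ri_entry=7, offset=128)
    print(hex(resolve("10.0.1.11", state, target, nbytes=64)))  # prints 0x7f000080
```

In this sketch, the initiator never learns the receiver’s absolute addresses; it only carries indices, and the receiving NIC owns both the translation and the bounds check.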

How Relative Addressing Works

Figure 5-6 illustrates the concept. Two GPUs participate in distributed training. A process on GPU 0 with global rank 0 (PID 0) receives data from GPU 1 with global rank 1 (PID 1). The UE-NIC determines the target Fabric Endpoint (FEP) based on the destination IP address (FA = 10.0.1.11). This...

OSPFv3 Router ID Documentation on Arista EOS

When I published a blog post making fun of the ridiculously incorrect Cisco IOS/XE OSPFv3 documentation, an engineer working for Cisco quickly sent me an email saying, “Well, the other vendors are not much better.”

Let’s see how well Arista EOS is doing; this is their description of the router-id command (taken from EOS 4.35.0F documentation; unchanged for at least a dozen releases):

How to Turbocharge Your Kubernetes Networking With eBPF

When your Kubernetes cluster handles thousands of workloads, every millisecond counts. And that pressure is no longer the exception; it is the norm. According to a recent CNCF survey, 93% of organizations are using, piloting, or evaluating Kubernetes, revealing just how pervasive it has become.

Kubernetes has grown from a promising orchestration tool into the backbone of modern infrastructure. As adoption climbs, so does pressure to keep performance high, networking efficient, and security airtight.

However, widespread adoption brings a difficult reality. As organizations scale to thousands of interconnected workloads, traditional networking and security layers begin to strain. Keeping clusters fast, observable, and protected becomes increasingly challenging.

Innovation at the lowest level of the operating system—the kernel—can provide faster networking, deeper system visibility, and stronger security. But developing programs at this level is complex and risky. Teams running large Kubernetes environments need a way to extend the Linux kernel safely and efficiently, without compromising system stability.

Why eBPF Matters for Kubernetes Networking

Enter eBPF (extended Berkeley Packet Filter), a powerful technology that allows small, verified programs to run safely inside the kernel. It gives Kubernetes platforms a way to move critical logic closer to where packets actually flow, providing sharper visibility...

NAN107: How AI is Changing the Networking Landscape

The world of networking is changing at lightning speed thanks to AI. Today Eric sits down with Chris Kane to explore this new reality for network engineers. Together, they dive deeper into some of the changes that will be coming next, breaking down the technical demands and mindset shifts of intellectual curiosity and humility necessary...

TCG063: Constraint Drives Innovation with John Capobianco

Recorded live at AutoCon4, William Collins and Eyvonne Sharp join forces with John Capobianco for some in-the-moment thoughts and reflections on the AutoCon experience – from the in-person connections to the workshops to the stage presentations. John gives us the inside story on his very own workshop and the latest version releases in...

Testing IP Multicast with netlab

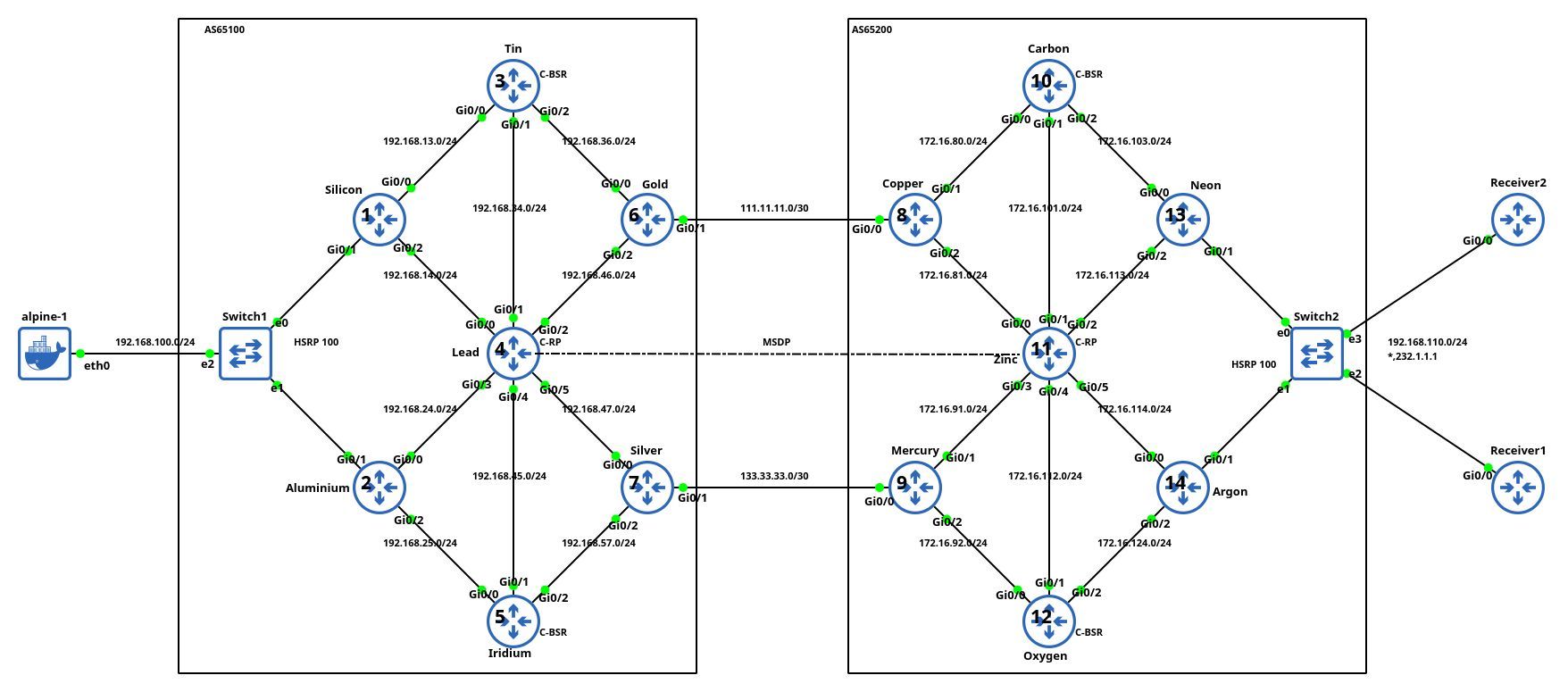

Aleksandr Albin built a large (almost 20-router) lab topology (based on an example from Jeff Doyle’s Routing TCP/IP Volume 2) that he uses to practice inter-AS IP multicast. He also published the topology file (and additional configuration templates) on GitHub and documented his experience in a LinkedIn post.

Lab topology, copied with permission from Aleksandr Albin

It’s so nice to see engineers using your tool in real-life scenarios. Thanks a million, Aleksandr, for sharing it.
