BGP Session Security: Be Very Skeptical

A while ago I explained how Generalized TTL Security Mechanism could be used to prevent denial-of-service attacks on routers running EBGP. Considering the results published in Analyzing the Security of BGP Message Parsing presentation from DEFCON 31 I started wondering how well GTSM implementations work.

TL&DR summary:

BGP Session Security: Be Very Skeptical

A while ago I explained how Generalized TTL Security Mechanism could be used to prevent denial-of-service attacks on routers running EBGP. Considering the results published in Analyzing the Security of BGP Message Parsing presentation from DEFCON 31 I started wondering how well GTSM implementations work.

TL&DR summary:

WPA2 Cracking On NVIDIA with CUDA

In the previous tutorial "Cracking WPA/WPA2 Pre-shared Key Using GPU", we showed how to crack […]

The post WPA2 Cracking On NVIDIA with CUDA first appeared on Brezular's Blog.

Worth Reading: Flow Distribution Across ECMP Paths

Dip Singh wrote another interesting article describing how ECMP load balancing implementations work behind the scenes. Absolutely worth reading.

Worth Reading: Flow Distribution Across ECMP Paths

Dip Singh wrote another interesting article describing how ECMP load balancing implementations work behind the scenes. Absolutely worth reading.

Worth Reading: Single-Port LAGs

Lindsay Hill described an excellent idea: all ports on your switches routers should be in link aggregation groups even when you have a single port in a group. That approach allows you to:

- Upgrade the link speed without changing any layer-3 configuration

- Do link maintenance without causing a routing protocol flap

It also proves RFC 1925 rule 6a, but then I guess we’re already used to that ;)

Worth Reading: Single-Port LAGs

Lindsay Hill described an excellent idea: all ports on your switches routers should be in link aggregation groups even when you have a single port in a group. That approach allows you to:

- Upgrade the link speed without changing any layer-3 configuration

- Do link maintenance without causing a routing protocol flap

It also proves RFC 1925 rule 6a, but then I guess we’re already used to that ;)

Heavy Networking 703: Integrating ZTNA And SASE With Palo Alto Networks (Sponsored)

Today’s Heavy Networking, sponsored by Palo Alto Networks, discusses Zero Trust Network Access (ZTNA) across the Secure Access Service Edge (SASE). You could think of ZTNA, as VPN evolved and SASE as SD-WAN evolved. Not only do you get an overlay connectivity fabric, but you also get cloud-hosted security services. We talk about how it all works together, the role of client software, Palo Alto Networks' architecture, integrating an IDP, and more.

The post Heavy Networking 703: Integrating ZTNA And SASE With Palo Alto Networks (Sponsored) appeared first on Packet Pushers.

Heavy Networking 703: Integrating ZTNA And SASE With Palo Alto Networks (Sponsored)

Today’s Heavy Networking, sponsored by Palo Alto Networks, discusses Zero Trust Network Access (ZTNA) across the Secure Access Service Edge (SASE). You could think of ZTNA, as VPN evolved and SASE as SD-WAN evolved. Not only do you get an overlay connectivity fabric, but you also get cloud-hosted security services. We talk about how it all works together, the role of client software, Palo Alto Networks' architecture, integrating an IDP, and more.Hedge 196: What’s up with Ethernet? (with Peter Jones)

Ethernet is the technology used to move most of the world’s data at the physical layer. What has been going on for the last few years in Ethernet, and what is coming? Peter Jones joins Tom Ammon and Russ White to talk about current and future work in Ethernet, AI, and other odds and ends.

La cryptographie post-quantique passe en disponibilité générale

Au cours des douze derniers mois, nous avons parlé de la nouvelle référence en matière de chiffrement sur Internet : la cryptographie post-quantique. Durant la Semaine anniversaire, l'année dernière, nous avons annoncé que notre version bêta de Kyber était disponible à des fins de test, et que Cloudflare Tunnel pouvait être mis en œuvre avec la cryptographie post-quantique. Au début de l'année, nous avons clairement indiqué que nous estimons que cette technologie fondamentale devait être accessible à tous, gratuitement et pour toujours.

Aujourd'hui, nous avons franchi une étape importante, après six ans et 31 articles de blog : nous lançons le déploiement de la prise en charge de la cryptographie post-quantique en disponibilité générale1 pour nos clients, nos services et nos systèmes internes ; nous le décrivons plus en détail ci-dessous. Ce déploiement inclut des produits tels que Pingora pour la connectivité aux serveurs d'origine, 1.1.1.1, R2, le routage intelligent Argo, Snippets et bien d'autres.

Il s'agit d'une étape importante pour Internet. Nous ne savons pas encore quand les ordinateurs quantiques deviendront suffisamment puissants pour briser la cryptographie actuelle, mais les avantages qu'offre l'adoption de la cryptographie post-quantique sont aujourd'hui manifestes. Des connexions rapides et Continue reading

Post-Quanten-Kryptographie allgemein verfügbar

In den letzten zwölf Monaten haben wir über die neue Grundlage der Verschlüsselung im Internet gesprochen: Post-Quanten-Kryptographie. Während der Birthday Week im letzten Jahr haben wir angekündigt, dass unsere Beta-Version von Kyber zu Testzwecken verfügbar ist und dass Post-Quanten-Kryptografie für Cloudflare Tunnel aktiviert werden kann. Anfang dieses Jahres haben wir uns klar dafür ausgesprochen, dass diese grundlegende Technologie für alle kostenlos und dauerhaft verfügbar sein sollte.

Heute haben wir nach sechs Jahren und 31 Blog-Beiträgen einen Meilenstein erreicht: Wir führen die allgemeine Verfügbarkeit der Unterstützung für Post-Quanten-Kryptographie für unsere Kunden, Dienste und internen Systeme ein, wie im Folgenden genauer beschrieben. Dazu gehören Produkte wie Pingora für Konnektivität von Ursprungsservern, 1.1.1.1, R2, Argo Smart Routing, Snippets und viele mehr.

Dies ist ein Meilenstein für das Internet. Wir wissen noch nicht, wann Quantencomputer leistungsstark genug sein werden, um die heutige Kryptographie zu knacken, aber die Vorteile, jetzt auf Post-Quanten-Kryptographie umzusteigen, liegen auf der Hand. Schnelle Verbindungen und vorausschauende Sicherheit sind dank der Fortschritte von Cloudflare, Google, Mozilla, den National Institutes of Standards and Technology (NIST) in den USA, der Internet Engineering Task Force und zahlreichen akademischen Einrichtungen heute möglich.

Was bedeutet Allgemeine Verfügbarkeit (GA)? Im Oktober 2022 aktivierten wir Continue reading

Disponibilidad general de la criptografía poscuántica

Durante los últimos 12 meses, hemos estado hablando sobre la nueva línea base de la encriptación en Internet: la criptografía poscuántica. El año pasado, durante la Semana aniversario anunciamos la disponibilidad de nuestra versión beta de Kyber para fines de prueba, y que Cloudflare Tunnel se podría activar con la criptografía poscuántica. Este mismo año, dejamos clara nuestra postura de que creemos que esta tecnología fundamental debería estar disponible para todos de forma gratuita, siempre.

Hoy, tras seis años y 31 publicaciones del blog, hemos alcanzado un hito importante: estamos empezando a implementar la disponibilidad general del soporte de la criptografía poscuántica para nuestros clientes, servicios y sistemas internos, tal como se describe más detalladamente a continuación. Esto incluye productos como Pingora para la conectividad de origen, 1.1.1.1, R2, Argo Smart Routing, Snippets y muchos más.

Esto es un hito para Internet. No sabemos aún cuándo los ordenadores cuánticos alcanzarán la escala suficiente para descifrar la criptografía actual, pero las ventajas de actualizar ahora a la criptografía cuántica son evidentes. Las conexiones rápidas y una seguridad preparada para el futuro son posibles hoy gracias a los avances logrados por Cloudflare, Google, Mozilla, el Instituto Nacional de Continue reading

后量子加密正式发布

在过去的十二个月里,我们一直在讨论互联网上的新加密基准:后量子加密。去年生日周期间,我们宣布我们的 Kyber 测试版可供测试,且 Cloudflare Tunnel 可启用后量子加密。今年早些时候,我们明确表示这项基础技术应该永久免费提供给所有人使用。

经过六年的努力和 31 篇博客文章的酝酿,我们今天正式发布对我们客户、服务和内部系统的后量子加密支持,详情如下。支持的产品包括 Pingora (源连接)、1.1.1.1、R2、Argo Smart Routing、Snippets 等等。

这对互联网来说是一个里程碑。我们目前还不知道量子计算机何时会强大到足以破解现今的加密技术,但是现在升级到后量子加密的好处是显而易见的。由于 Cloudflare、Google、Mozilla、美国国家标准与技术研究院、互联网工程任务组以及众多学术机构取得的进展,快速连接和面向未来的安全性在今天才成为可能。

正式发布意味着什么?2022 年 10 月,我们为通过 Cloudflare 服务的所有网站和 API 启用 X25519+Kyber 测试版。然而,一个巴掌拍不响:只有浏览器也支持后量子加密,才能确保连接的安全。2023 年 8 月起,Chrome逐渐默认启用 X25519+Kyber。

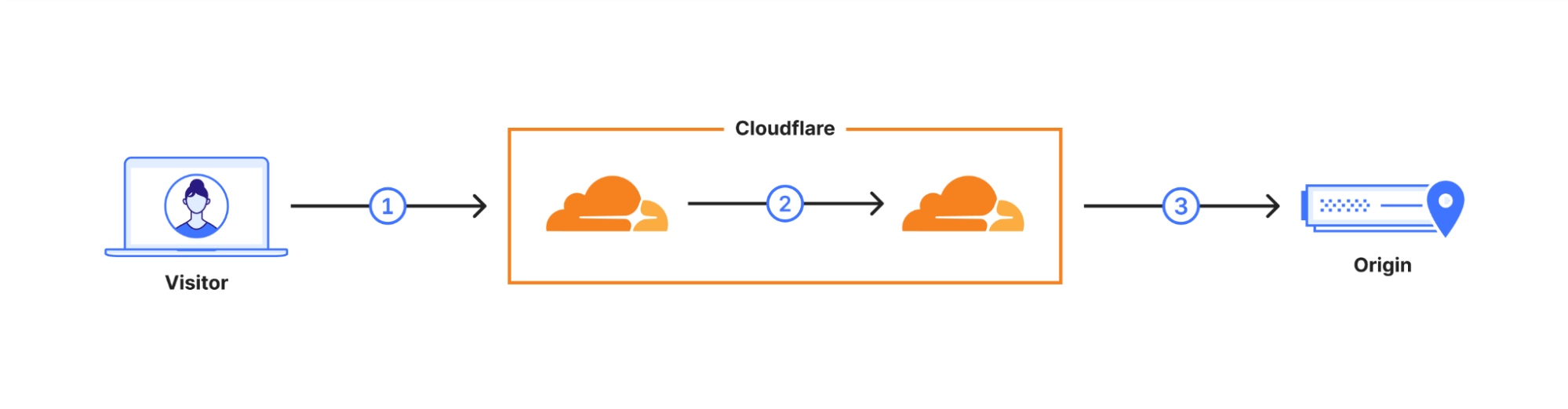

用户的请求通过 Cloudflare 的网络进行路由(2)。我们已经升级了许多这些内部连接以使用后量子加密,并预计到 2024 年底将完成所有内部连接的升级。这使得连接(3)成为我们与源服务器之间的最后一环。

我们很高兴能正式推出对大部分入站和出站连接的 X25519+Kyber 支持,适用于源服务器和 Cloudflare Workers fetch()。

| 计划 | 后量子加密出站连接支持 |

|---|---|

| 免费 | 开始推出。目标是 10 月底前 100% 覆盖 |

| Pro 和 Business | 目标是年底前 100% 覆盖 |

| Enterprise | 2024 年 2 月开始推出2024 年 3 月 100% 覆盖 |

对于我们的 Enterprise 客户,我们将在接下来的六个月内定期发出更多信息,以帮助您准备迎接这一推出。Pro、Business 和 Enterprise 客户可以跳过这一推出,提前使用我们中相关博客文章描述的 API,在您的区域内选择加入或者退出。在 2024 年 2 月为 Enterprise 客户推出之前,我们将在仪表板上添加一个切换按钮,以选择退出。

如果您希望马上开始,请查看我们的博客文章以了解技术细节,并通过 API 启用后量子加密支持!

包括什么?下一步是什么?

对于如此大规模的升级,我们希望首先专注于我们最常用的产品,然后扩展以覆盖边缘用例。因此本次推出包括如下产品和系统:

| 1.1.1.1 |

| AMP |

| API GATEWAY |

| Argo Smart Routing |

| Auto Minify |

| 自动平台优化 |

| Automatic Signed Exchanges |

| Cloudflare Egress |

| Cloudflare Images |

| Cloudflare Rulesets |

| Cloudflare Snippets |

| Cloudflare Tunnel |

| 自定义错误页 |

| 基于流的监测 |

| 运行状况检查 |

| Hermes |

| Host Head Checker |

| Magic Firewall |

| Magic Network Monitoring |

| Network Error Logging |

| Project Flame |

| Quicksilver |

| R2 储存 |

| Request Tracer |

| Rocket Loader |

| Speed on Cloudflare Dash |

| SSL/TLS |

| 流量管理器 |

| WAF, Managed Rules |

| Waiting Room |

| Web Analytics |

如果您使用的产品或服务未在此处列出,那么我们尚未开始为其推出后量子加密支持。我们正在积极推出对所有产品和服务的后量子加密支持,包括我们的 Zero Trust 产品。在我们实现所有系统的后量子加密支持之前,我们将在每个创新周发布更新博客文章,介绍我们已经为哪些产品推出了后量子加密,下一步将支持的产品以及未来的计划。

我们将在不久后为以下产品提供后量子加密支持:

| Cloudflare Gateway |

| Cloudflare DNS |

| Cloudflare Load Balancer |

| Cloudflare Access |

| Always Online |

| Zaraz |

| 日志记录 |

| D1 |

| Cloudflare Workers |

| Cloudflare WARP |

| Bot管理 |

为什么现在要推出这项服务?

正如我们今年早些时候宣布的那样,后量子加密将免费包含在所有能够支持的 Cloudflare 产品和服务中。最好的加密技术应该对每个人免费开放,以帮助支持全球的隐私和人权。

正如我们在 3 月所提到的:

“曾经的实验性前沿已经变成了现代社会的基础结构。它运行在我们最关键的基础设施中,例如电力系统、医院、机场和银行。我们将最珍贵的记忆托付给它。我们把秘密托付给它。这就是为什么互联网需要默认是私密的。它需要默认是安全的。”

我们对后量子加密技术的研究是基于这样一个论点,即量子计算机可以破解传统密码学,产生类似于“千年虫”的问题。我们知道未来会出现一个问题,可能会给用户、企业甚至国家带来灾难性的后果。这一次的区别在于,我们不知道这种计算范式的突破将会在何年何月发生。更糟糕的是,今天捕获的任何流量在未来可能会被解密。我们今天就需要为应对这种威胁做好准备。

我们很高兴每个人都能在他们的系统中采用后量子加密技术。要了解我们部署后量子加密和第三方客户端/服务器支持的最新进展, 请访问 pq.cloudflareresearch.com 并关注本博客。

1我们正在使用 Kyber (NIST 选择的后量子密钥协商算法)的一个初步版本。Kyber 尚未最终定稿。我们预计最终标准将在 2024 年以 ML-KEM 的名称发布,届时我们将迅速采用该标准,并停止支持 X25519Kyber768Draft00。

ポスト量子暗号が一般利用可能に

過去12か月間、当社はインターネット上の暗号化の新しいベースラインであるポスト量子暗号について議論してきました。昨年のバースデーウィークの間、Kyberのベータ版がテスト用に利用可能であること、そしてCloudflare Tunnelがポスト量子暗号を使用して、有効にできることを発表しました。今年初め、当社は、この基礎的なテクノロジーを誰もが永久に無料で利用できるべきだというスタンスを明確にしました。

今日、当社は6年を経てマイルストーンを達成し、31件のブログ記事を作成中です。以下に、より詳細に説明されているように、お客様、サービス、および内部システムへのポスト量子暗号サポートの一般提供のロールアウトを開始しています。これには、オリジン接続用のPingora、1.1.1.1、R2、Argo Smart Routing、Snippetsなどの製品が含まれます。

これはインターネットのマイルストーンです。量子コンピュータが今日の暗号を破るのに十分な規模を持つかは、まだ分かりませんが、今すぐポスト量子暗号にアップグレードするメリットは明らかです。Cloudflare、Google、Mozilla、米国国立標準技術研究所、インターネットエンジニアリングタスクフォース、および多数の学術機関による進歩により、高速接続と、将来も保証されるセキュリティはすべて今日可能になっています

一般提供とはどういう意味ですか?2022年10月当社はX25519+KyberをCloudflareを介して提供されるすべてのWebサイトとAPIに対し、ベータ版として有効にしました。しかし、タンゴを踊るには二人必要です。ブラウザがポスト量子暗号もサポートしている場合にのみ、接続が保護されます。2023年8月から、ChromeはデフォルトでX25519+Kyberを徐々に有効にします。

ユーザーのリクエストは、Cloudflareのネットワークを介してルーティングされます(2)。当社はこれらの内部接続の多くをポスト量子暗号を使用するようにアップグレードしており、2024年末までにすべての内部接続のアップグレードが完了する予定です。これにより、最終リンクとして、当社と配信元サーバー間の接続(3)が残ります。

配信元サーバーやCloudflare Workersのフェッチを含む利用に対し、ほとんどのインバウンドおよびアウトバウンド接続向けX25519+Kyberのサポートを使用の一般提供としてロールアウトしていることをお知らせできて嬉しく思います。

| プラン | ポスト量子アウトバウンド接続のサポート |

|---|---|

| Free | ロールアウトを開始。 10月末までに100%を目指す。 |

| ProプランおよびBusinessプラン | 年末までに100%を目指す。 |

| Enterprise | ロールアウト開始は2024年2月。 2024年3月までに100%。 |

Enterpriseのお客様には、今後6ヶ月にわたり、ロールアウトの準備に役立つ追加情報を定期的にお送りする予定です。Pro、Business、Enterpriseをご利用のお客様は、ロールアウトをスキップして、現在ご利用のゾーン内でオプトインすることも、コンパニオンブログ記事で説明されているAPIを使用して事前にオプトアウトすることもできます。2024年2月にEnterprise向けにロールアウトする前に、ダッシュボードにオプトアウト用のトグルを追加する予定です。

今すぐ始めたいという方は、技術的な詳細を記載したブログをチェックし、APIを介したポスト量子暗号サポートを有効にしてください!

何が含まれ、次に何があるのでしょうか?

この規模のアップグレードでは、まず最もよく使用される製品に焦点を当て、次に外側に拡張しエッジケースを把握したいと考えました。 このプロセスにより、このロールアウトには以下の製品とシステムを含めることになりました。

| 1.1.1.1 |

| AMP |

| API Gateway |

| Argo Smart Routing |

| Auto Minify |

| プラットフォームの自動最適化 |

| Automatic Signed Exchanges |

| Cloudflareエグレス |

| Cloudflare Images |

| Cloudflareルールセット |

| Cloudflare Snippets |

| Cloudflare Tunnel |

| カスタムエラーページ |

| フローベースのモニタリング |

| ヘルスチェック |

| ヘルメス |

| ホストヘッドチェッカー |

| Magic Firewall |

| Magic Network Monitoring |

| ネットワークエラーログ |

| プロジェクトフレーム |

| クイックシルバー |

| R2ストレージ |

| リクエストトレーサー |

| Rocket Loader |

| Cloudflare Dashの速度 |

| SSL/TLS |

| Traffic Manager(トラフィックマネージャー) |

| WAF、管理ルール |

| Waiting Room |

| Web Analytics |

ご利用の製品またはサービスがここに記載されていない場合は、まだポスト量子暗号の展開を開始していません。当社は、Zero Trust製品を含むすべての製品とサービスにポスト量子暗号を展開することに積極的に取り組んでいます。 すべてのシステムでポスト量子暗号のサポートが完了するまで、どの製品にポスト量子暗号をロールアウトしたか、次にロールアウトする製品、まだ、近々予定の製品を網羅する更新ブログをイノベーションウィークごとに公開する予定です。

ポスト量子暗号サポートを導入しようと取り組んでいる製品はまもなくです。

| Cloudflare Gateway |

| Cloudflare DNS |

| Cloudflareロードバランサー |

| Cloudflare Access |

| Always Online |

| Zaraz |

| ログ |

| D1 |

| Cloudflare Workers |

| Cloudflare WARP |

| ボット管理 |

なぜ、今なのでしょうか?

今年初めにお知らせしたように、ポスト量子暗号は、それをサポートできるすべてのCloudflare製品とサービスの中に無料で含まれます。最高の暗号化技術は誰もがアクセスできる必要があり、プライバシーと人権を世界的にサポートするのに役立ちます。

3月に述べたように:

「かつては実験的なフロンティアだったものが、現代社会の根底にある構造へと変わりました。これは、電力システム、病院、空港、銀行などの最も重要なインフラストラクチャで実行されます。私たちは最も貴重なメモリをそれに託しています。私たちは機密をそれに託しています。インターネットが、デフォルトでプライベートである必要があるのはそのためです。デフォルトで安全である必要があります。」

ポスト量子暗号に関する私たちの研究は、従来の暗号を破ることができる量子コンピューターが西暦2000年のバグと同様の問題を引き起こすという論文によって推進されています。将来的には、ユーザー、企業、さらには国家に壊滅的な結果をもたらす可能性のある問題が発生することは、わかっています。今回の違いは、計算パラダイムのこの破壊がいつどのように発生するかがわからないことです。 さらに悪いことに、今日捕捉されたトラフィックは将来解読される可能性があります。この脅威に備えるために、今日から準備をする必要があります。

当社は、ポスト量子暗号を皆さんのシステムに導入することを楽しみにしています。ポスト量子暗号の導入とサードパーティのクライアント/サーバーサポートの最新情報をフォローするには、pq.cloudflareresearch.comをチェックして、このブログに注目してください。

1当社は、ポスト量子鍵合意に関して、NISの選択であるKyberの暫定版を使用しています。Kyberはまだ完成していません。最終基準は、2024年にML-KEMという名前で公開される予定であり、X25519Kyber768Draft00のサポートを廃止しつつ、速やかに採用する予定です。

Encrypted Client Hello – the last puzzle piece to privacy

Today we are excited to announce a contribution to improving privacy for everyone on the Internet. Encrypted Client Hello, a new proposed standard that prevents networks from snooping on which websites a user is visiting, is now available on all Cloudflare plans.

Encrypted Client Hello (ECH) is a successor to ESNI and masks the Server Name Indication (SNI) that is used to negotiate a TLS handshake. This means that whenever a user visits a website on Cloudflare that has ECH enabled, no one except for the user, Cloudflare, and the website owner will be able to determine which website was visited. Cloudflare is a big proponent of privacy for everyone and is excited about the prospects of bringing this technology to life.

Browsing the Internet and your privacy

Whenever you visit a website, your browser sends a request to a web server. The web server responds with content and the website starts loading in your browser. Way back in the early days of the Internet this happened in 'plain text', meaning that your browser would just send bits across the network that everyone could read: the corporate network you may be browsing from, the Internet Service Provider that offers Continue reading

Cloudflare now uses post-quantum cryptography to talk to your origin server

Quantum computers pose a serious threat to security and privacy of the Internet: encrypted communication intercepted today can be decrypted in the future by a sufficiently advanced quantum computer. To counter this store-now/decrypt-later threat, cryptographers have been hard at work over the last decades proposing and vetting post-quantum cryptography (PQC), cryptography that’s designed to withstand attacks of quantum computers. After a six-year public competition, in July 2022, the US National Institute of Standards and Technology (NIST), known for standardizing AES and SHA, announced Kyber as their pick for post-quantum key agreement. Now the baton has been handed to Industry to deploy post-quantum key agreement to protect today’s communications from the threat of future decryption by a quantum computer.

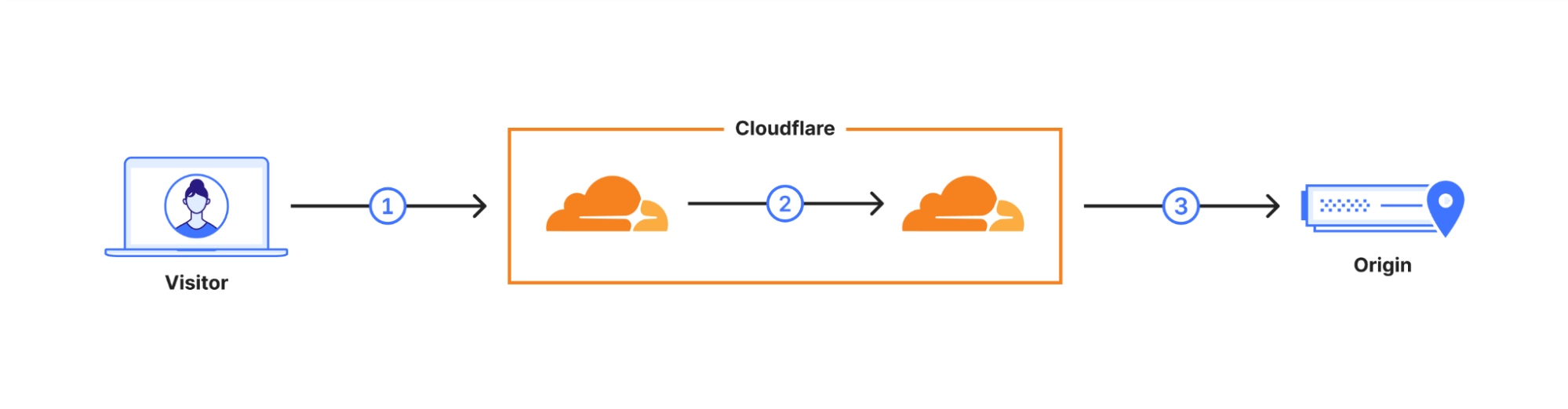

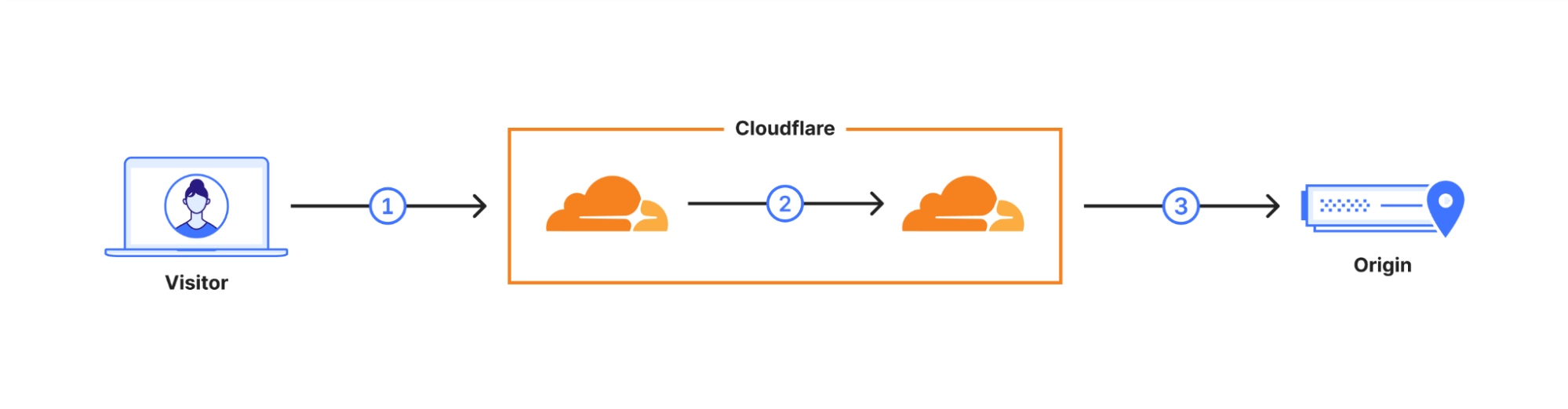

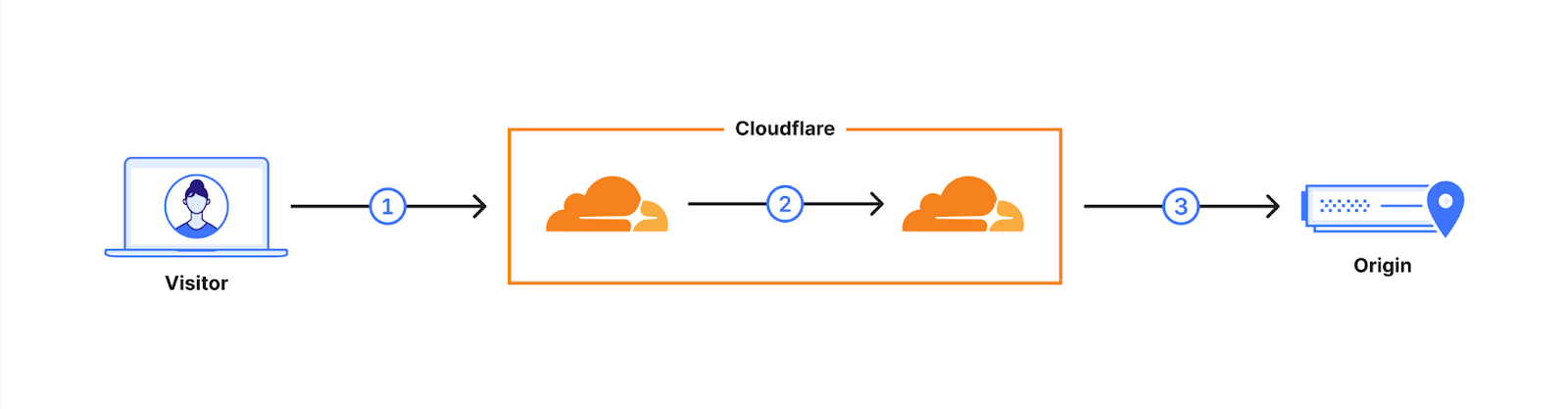

Cloudflare operates as a reverse proxy between clients (“visitors”) and customers’ web servers (“origins”), so that we can protect origin sites from attacks and improve site performance. In this post we explain how we secure the connection from Cloudflare to origin servers. To put that in context, let’s have a look at the connection involved when visiting an uncached page on a website served through Cloudflare.

The first connection is from the visitor’s browser to Cloudflare. In October 2022, we enabled X25519+Kyber Continue reading

Privacy-preserving measurement and machine learning

In 2023, data-driven approaches to making decisions are the norm. We use data for everything from analyzing x-rays to translating thousands of languages to directing autonomous cars. However, when it comes to building these systems, the conventional approach has been to collect as much data as possible, and worry about privacy as an afterthought.

The problem is, data can be sensitive and used to identify individuals – even when explicit identifiers are removed or noise is added.

Cloudflare Research has been interested in exploring different approaches to this question: is there a truly private way to perform data collection, especially for some of the most sensitive (but incredibly useful!) technology?

Some of the use cases we’re thinking about include: training federated machine learning models for predictive keyboards without collecting every user’s keystrokes; performing a census without storing data about individuals’ responses; providing healthcare authorities with data about COVID-19 exposures without tracking peoples’ locations en masse; figuring out the most common errors browsers are experiencing without reporting which websites are visiting.

It’s with those use cases in mind that we’ve been participating in the Privacy Preserving Measurement working group at the IETF whose goal is to develop systems Continue reading

Network performance update: Birthday Week 2023

We constantly measure our own network’s performance against other networks, look for ways to improve our performance compared to them, and share the results of our efforts. Since June 2021, we’ve been sharing benchmarking results we’ve run against other networks to see how we compare.

In this post we are going to share the most recent updates since our last post in June, and tell you about our tools and processes that we use to monitor and improve our network performance.

How we stack up

Since June 2021, we’ve been taking a close look at every single network and taking actions for the specific networks where we have some room for improvement. Cloudflare was already the fastest provider for most of the networks around the world (we define a network as country and AS number pair). Taking a closer look at the numbers; in July 2022, Cloudflare was ranked #1 in 33% of the networks and was within 2 ms (95th percentile TCP Connection Time) or 5% of the #1 provider for 8% of the networks that we measured. For reference, our closest competitor on that front was the fastest for 20% of networks.

As of August 30, Continue reading