Hyperdrive: making databases feel like they're global

Hyperdrive gives you ultra-fast access to your existing databases from Cloudflare Workers, wherever they are running. You connect Hyperdrive to your database, change one line of code to connect through Hyperdrive, and that's it: connections and queries get faster (spoiler: you can use it today).

In a nutshell, Hyperdrive uses our global network to speed up queries to your existing databases, whether they sit in a legacy cloud provider or with your favorite serverless database provider; dramatically reduces the latency incurred by repeatedly setting up new database connections; and caches the most popular read queries against your database, often avoiding the need to go back to your database at all.

Without Hyperdrive, that core database (the one holding your user profiles, your product inventory, or running your critical web apps), located in the us-east1 region of your legacy cloud provider, will be very slow to access for users in Paris, Singapore and Dubai, and slower than it should be for users in Los Angeles Continue reading
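To make the idea of caching popular read queries concrete, here is a minimal sketch of a read-through cache keyed by SQL text and parameters. It is not how Hyperdrive is implemented: the `ReadQueryCache` class, the fixed TTL policy, and the synchronous `QueryFn` signature are all assumptions made for illustration (a real database call would be asynchronous).

```typescript
// Illustrative only: a tiny in-memory cache for read queries.
// Names, the TTL policy, and the synchronous query function are assumptions.

type Row = Record<string, unknown>;
type QueryFn = (sql: string, params: unknown[]) => Row[];

interface CacheEntry {
  rows: Row[];
  expiresAt: number; // epoch milliseconds
}

class ReadQueryCache {
  private entries = new Map<string, CacheEntry>();
  private runQuery: QueryFn;
  private ttlMs: number;
  private now: () => number;

  constructor(runQuery: QueryFn, ttlMs: number, now: () => number = Date.now) {
    this.runQuery = runQuery;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  query(sql: string, params: unknown[] = []): Row[] {
    // Only SELECT statements are cached; everything else goes to the database.
    if (!/^\s*select\b/i.test(sql)) {
      return this.runQuery(sql, params);
    }
    const key = JSON.stringify([sql, params]);
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) {
      return hit.rows; // served from cache, no trip to the database
    }
    const rows = this.runQuery(sql, params);
    this.entries.set(key, { rows, expiresAt: this.now() + this.ttlMs });
    return rows;
  }
}
```

Repeating the same `SELECT` within the TTL is answered from memory, which is the effect described above: the request never has to travel back to the database. This sketch does not invalidate entries on writes; a production cache would need a policy for that.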

Hyperdrive: making databases feel global

Hyperdrive makes access to your existing databases from Cloudflare Workers hyper-fast, wherever they are running. You connect Hyperdrive to your database, change one line of code to connect through Hyperdrive, and voilà: connections and queries get faster (and spoiler: you can use it today).

In short: Hyperdrive uses our global network to speed up queries to your existing databases, whether they sit with a legacy cloud provider or with your preferred serverless database provider. The latency caused by repeatedly setting up new database connections is dramatically reduced, and the most popular read queries against your database are cached, so you often do not need to go back to your database at all.

If your core database (with your user profiles, your product inventory, or your critical web app) sits in the us-east1 region of a legacy cloud provider, then without Hyperdrive access will be very slow for users in Paris, Singapore and Dubai, and slower than it should be even for users in Los Angeles or Vancouver. With each round trip taking up to 200 ms, the multiple round trips needed just to set up a connection can easily take up to a second (or more!); and that is before you even Continue reading
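The round-trip arithmetic above can be sketched in a few lines. The 200 ms figure comes from the text; the breakdown into five round trips is an assumption for illustration only, since the real count depends on the TLS version, the database driver, and the authentication method.

```typescript
// Assumed handshake breakdown (hypothetical; real counts vary by setup).
interface HandshakeStep {
  name: string;
  roundTrips: number;
}

const assumedSteps: HandshakeStep[] = [
  { name: "TCP handshake", roundTrips: 1 },
  { name: "TLS negotiation", roundTrips: 2 },
  { name: "database authentication", roundTrips: 2 },
];

// Total connection setup time: sum of round trips times the per-trip latency.
function connectionSetupMs(roundTripMs: number, steps: HandshakeStep[]): number {
  const totalRoundTrips = steps.reduce((sum, step) => sum + step.roundTrips, 0);
  return totalRoundTrips * roundTripMs;
}

// 5 assumed round trips at 200 ms each come to 1000 ms before any query runs.
const setupMs = connectionSetupMs(200, assumedSteps);
```

Under these assumptions a fresh connection costs a full second, which is why reusing already-established connections matters so much for distant users.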

Hyperdrive: making databases feel like they're global

Hyperdrive gives you very fast access to your existing databases from Cloudflare Workers, wherever they are running. All you need to do is connect Hyperdrive to your database and change one line of code to connect through Hyperdrive, and voilà: connections and queries are accelerated (and spoiler: you can use it today).

In a nutshell, Hyperdrive relies on our global network to speed up queries to your existing databases, whether they sit with a legacy cloud provider or with your favorite serverless database provider. It considerably reduces the latency caused by repeatedly establishing new connections to the database. It also caches the most popular read queries sent to your database, which often avoids having to go back to your database at all.

Without Hyperdrive, access to your core database (the one that holds your user profiles, your product inventory, or runs your critical web app), hosted in the us-east1 region of a legacy cloud provider, will prove very slow for users located Continue reading

Race ahead with Cloudflare Pages build caching

Today, we are thrilled to release a beta of Cloudflare Pages support for build caching! With build caching, we are offering a supercharged Pages experience by helping you cache parts of your project to save time on subsequent builds.

For developers, time is not just money – it’s innovation and progress. When every second counts in crunch time before a new launch, the “need for speed” becomes critical. With Cloudflare Pages’ built-in continuous integration and continuous deployment (CI/CD), developers count on us to drive fast. We’ve already taken great strides in making sure we’re enabling quick development iterations for our users by making solid improvements on the stability and efficiency of our build infrastructure. But we always knew there was more to our build story.

Quick pit stops

Build times can feel like a developer's equivalent of a time-out, a forced pause in the creative process—the inevitable pit stop in a high-speed formula race.

Long build times not only break the flow of individual developers, but can also create a ripple effect across the team. They can slow down iterations and push back deployments. In the fast-paced world of CI/CD, these delays can drastically impact productivity and the delivery Continue reading

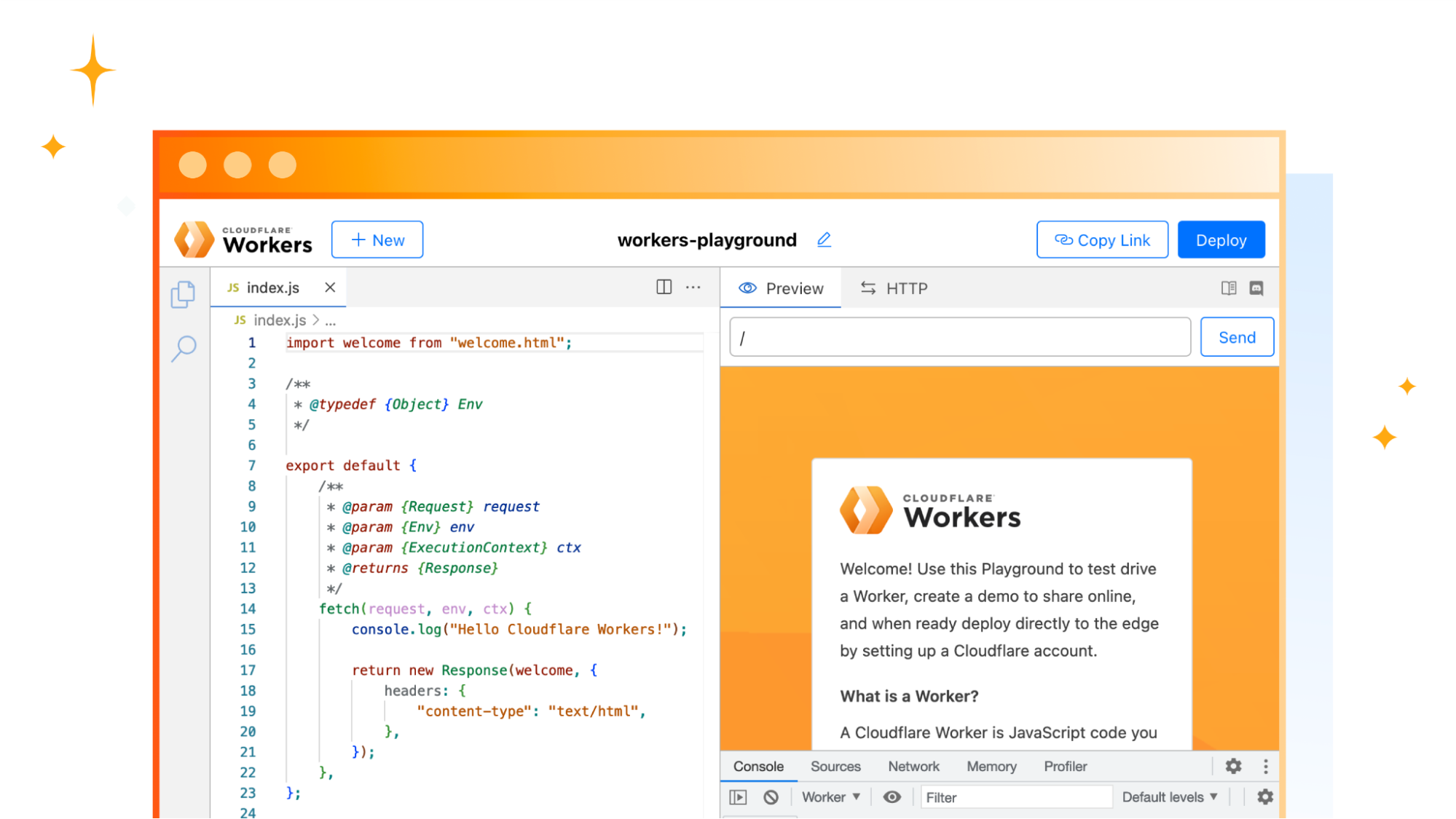

Re-introducing the Cloudflare Workers Playground

Since the initial announcement of Cloudflare Workers, we’ve provided a playground, motivated by the belief that users should have a convenient, low-commitment way to play around with and learn more about Workers.

Over the last few years, while Cloudflare Workers and our Developer Platform have changed and grown, the original playground has not. Today, we’re proud to announce a revamp of the playground that demonstrates the power of Workers, along with new development tooling, and the ability to share your playground code and deploy instantly to Cloudflare’s global network.

A focus on origin Workers

When Workers was first introduced, many of the examples and use-cases centered around middleware, where a Worker intercepts a request to an origin and does something before returning a response. This includes modifying headers, redirecting traffic, helping with A/B testing, or caching. Ultimately, the Worker isn’t acting as an origin in these cases; it sits between the user and the destination.

While Workers are still great for these types of tasks, for the updated playground, we decided to focus on the Worker-as-origin use-case. This is where the Worker receives a request and is responsible for returning the full response. In Continue reading
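The difference between the two styles can be sketched with two small functions. The function names, the `x-handled-by` header, and the `/hello` route are hypothetical, and the sketch assumes a runtime that provides the standard `Request` and `Response` classes (as Workers does).

```typescript
// Middleware style: decorate a response that some other origin produced.
function addHeaderMiddleware(originResponse: Response): Response {
  // Copy status and headers so the headers can be modified safely.
  const headers = new Headers(originResponse.headers);
  headers.set("x-handled-by", "worker-middleware"); // hypothetical header
  return new Response(originResponse.body, {
    status: originResponse.status,
    statusText: originResponse.statusText,
    headers,
  });
}

// Origin style: the Worker builds the entire response itself.
function originHandler(request: Request): Response {
  const url = new URL(request.url);
  if (url.pathname === "/hello") {
    return new Response(JSON.stringify({ message: "Hello from the Worker" }), {
      status: 200,
      headers: { "content-type": "application/json" },
    });
  }
  return new Response("Not found", { status: 404 });
}
```

In a deployed Worker, either function would be called from the module's `fetch` handler: the middleware version after fetching from the upstream origin, the origin version directly with the incoming request.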

Running Serverless Puppeteer with Workers and Durable Objects

Last year, we announced the Browser Rendering API, which lets users run Puppeteer, a browser automation library, directly in Workers. Puppeteer is one of the most popular libraries used to interact with a headless browser instance to accomplish tasks like taking screenshots, generating PDFs, crawling web pages, and testing web applications. We’ve heard from developers that configuring and maintaining their own serverless browser automation systems can be quite painful.

The Workers Browser Rendering API solves this. It makes the Puppeteer library available directly in your Worker, connected to a real web browser, without the need to configure and manage infrastructure or keep browser sessions warm yourself. You can use @cloudflare/puppeteer to run the full Puppeteer API directly on Workers!

We’ve seen so much interest from the developer community since launching last year. While the Browser Rendering API is still in beta (sign up to our waitlist to get access), we wanted to share a way to get more out of our current limits by using the Browser Rendering API with Durable Objects. We’ll also be sharing pricing for the Rendering API, so you can build knowing exactly what you’ll pay for.

Building a responsive web design testing tool with the Continue reading

A Socket API that works across JavaScript runtimes — announcing a WinterCG spec and Node.js implementation of connect()

Earlier this year, we announced a new API for creating outbound TCP sockets — connect(). From day one, we’ve been working with the Web-interoperable Runtimes Community Group (WinterCG) community to chart a course toward making this API a standard, available across all runtimes and platforms — including Node.js.

Today, we’re sharing that we’ve reached a new milestone in the path to making this API available across runtimes — engineers from Cloudflare and Vercel have published a draft specification of the connect() sockets API for review by the community, along with a Node.js compatible implementation of the connect() API that developers can start using today.

This implementation helps both application developers and maintainers of libraries and frameworks:

- Maintainers of existing libraries that use the node:net and node:tls APIs can use it to more easily add support for runtimes where node:net and node:tls are not available.

- JavaScript frameworks can use it to make connect() available in local development, making it easier for application developers to target runtimes that provide connect().

Why create a new standard? Why connect()?

As we described when we first announced connect(), to-date there has not been a standard API across JavaScript runtimes for creating and Continue reading

Cloudflare Integrations Marketplace introduces three new partners: Sentry, Momento and Turso

Building modern full-stack applications requires connecting to many hosted third party services, from observability platforms to databases and more. All too often, this means spending time doing busywork, managing credentials and writing glue code just to get started. This is why we’re building out the Cloudflare Integrations Marketplace to allow developers to easily discover, configure and deploy products to use with Workers.

Earlier this year, we introduced integrations with Supabase, PlanetScale, Neon and Upstash. Today, we are thrilled to introduce our newest additions to Cloudflare’s Integrations Marketplace – Sentry, Turso and Momento.

Let's take a closer look at some of the exciting integration providers that are now part of the Workers Integration Marketplace.

Improve performance and reliability by connecting Workers to Sentry

When your Worker encounters an error you want to know what happened and exactly what line of code triggered it. Sentry is an application monitoring platform that helps developers identify and resolve issues in real-time.

The Workers and Sentry integration automatically sends errors, exceptions and console.log() messages from your Worker to Sentry with no code changes required. Here’s how it works:

- You enable the integration from the Cloudflare Dashboard.

- The credentials from the Sentry project of Continue reading

Cloudflare is now powering Microsoft Edge Secure Network

From third-party cookies that track your activity across websites to highly targeted advertising based on your IP address and browsing data, it's no secret that today’s Internet browsing experience isn’t as private as it should be. Here at Cloudflare, we believe everyone should be able to browse the Internet free of persistent tracking and prying eyes.

That’s why we’re excited to announce that we’ve partnered with Microsoft Edge to provide a fast and secure VPN, right in the browser. Users don’t have to install anything new or understand complex concepts to get the latest in network-level privacy: Edge Secure Network VPN is available on the latest consumer version of Microsoft Edge in most markets, and automatically comes with 5 GB of data. Just enable the feature by going to [Microsoft Edge Settings & more (…) > Browser essentials, and click Get VPN for free]. See Microsoft’s Edge Secure Network page for more details.

Cloudflare’s Privacy Proxy platform isn’t your typical VPN

To take a step back: a VPN is a way in which the Internet traffic leaving your device is tunneled through an intermediary server operated by a provider – in this case, Cloudflare! There are many important pieces that Continue reading

D1: open beta is here

D1 is now in open beta, and the theme is “scale”: with higher per-database storage limits and the ability to create more databases, we’re unlocking the ability for developers to build production-scale applications on D1. Any developers with an existing paid Workers plan don’t need to lift a finger to benefit: we’ve retroactively applied this to all existing D1 databases.

If you missed the last D1 update back during Developer Week, the multitude of updates in the changelog, or are just new to D1 in general: read on.

Remind me: D1? Databases?

D1 is our native serverless database, which we launched into alpha in November last year: the queryable database complement to Workers KV, Durable Objects and R2.

When we set out to build D1, we knew a few things for certain: it needed to be fast, it needed to be incredibly easy to create a database, and it needed to be SQL-based.

That last one was critical, so that developers could a) avoid learning another custom query language and b) more easily connect existing query builders, ORM (object relational mapper) libraries and other tools to D1 with minimal effort. From this, we’ve seen a Continue reading

New Workers pricing — never pay to wait on I/O again

Today we are announcing new pricing for Cloudflare Workers and Pages Functions, where you are billed based on CPU time, and never for the idle time that your Worker spends waiting on network requests and other I/O. Unlike other platforms, when you build applications on Workers, you only pay for the compute resources you actually use.

Why is this exciting? To date, all large serverless compute platforms have billed based on how long your function runs — its duration or “wall time”. This is a reflection of a new paradigm built on a leaky abstraction — your code may be neatly packaged up into a “function”, but under the hood there’s a virtual machine (VM). A VM can’t be paused and resumed quickly enough to execute another piece of code while it waits on I/O. So while a typical function might take 100ms to run, it might typically spend only 10ms doing CPU work, like crunching numbers or parsing JSON, with the rest of the time spent waiting on I/O.

This status quo has meant that you are billed for this idle time, while nothing is happening.
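The gap between the two billing models can be worked through with the numbers from the example above (100 ms of wall time, 10 ms of CPU time). The `Invocation` type and function names below are hypothetical, and no actual prices are assumed; the sketch only compares billable milliseconds.

```typescript
// Hypothetical model of a single function invocation.
interface Invocation {
  wallTimeMs: number; // total duration, including time spent waiting on I/O
  cpuTimeMs: number;  // time actually spent executing code
}

type BillingModel = "duration" | "cpu";

// Milliseconds that would be billed under each model.
function billableMs(invocation: Invocation, model: BillingModel): number {
  return model === "duration" ? invocation.wallTimeMs : invocation.cpuTimeMs;
}

// Time spent idle, waiting on network requests and other I/O.
function idleMs(invocation: Invocation): number {
  return invocation.wallTimeMs - invocation.cpuTimeMs;
}

const typical: Invocation = { wallTimeMs: 100, cpuTimeMs: 10 };

const billedByDuration = billableMs(typical, "duration"); // 100 ms
const billedByCpu = billableMs(typical, "cpu");           // 10 ms
const idle = idleMs(typical);                             // 90 ms of waiting
```

For this invocation, duration-based billing charges for ten times as many milliseconds as CPU-based billing, and 90 of the 100 billed milliseconds are idle time.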

With this announcement, Cloudflare is the first and only global serverless platform to Continue reading

How GitHub Saved My Day

I always tell networking engineers who aspire to be more than VLAN-munging CLI jockeys to get fluent with Git. I should also be telling them that while doing local version control is the right thing to do, you should always have backups (in this case, a remote repository).

I’m eating my own dog food – I’m using a half dozen Git repositories in ipSpace.net production. If they break, my blog stops working, and I cannot publish new documents.

Now for a fun fact: Git is not transactionally consistent.

Day Two Cloud 212: Cloud Essentials – Object, File, And Block Storage

Day Two Cloud continues the Cloud Essentials series with cloud storage. We focus specifically on AWS's offerings, which include object, file, and block storage options. We also discuss special file systems, file caching, instance stores, and more. We cover use cases for the major storage options and their costs. We also touch briefly on storage services including data migration, hybrid cloud storage, and disaster recovery and backup.

The post Day Two Cloud 212: Cloud Essentials – Object, File, And Block Storage appeared first on Packet Pushers.