Summer Break 2023

Long story short: it’s time for another summer break, as people reporting my bloopers – THANK YOU!!! – know only too well. I plan to be back in early autumn rolling out tons of new content.

I’ll do my best to reply to support requests (it will take longer than usual), and probably won’t be able to resist publishing a few lightweight netlab-related blog posts. If you get bored there’s still over 400 hours of existing content, over 100 podcast episodes, and thousands of blog posts.

In the meantime, get away from work, turn off the Internet, and enjoy a few days in your favorite spot with your loved ones!

Geo-blocking at the BGP Level

The post Geo-blocking at the BGP Level appeared first on Noction.

Case study: Calico helps Upwork migrate legacy system to Kubernetes on AWS and enforce zero-trust security

Upwork is a freelancing platform that connects a global base of clients to freelancers via job postings. Since going public on the New York Stock Exchange in 2019, the company has become one of the leading freelance platforms worldwide and was named on Time’s list of the 100 Most Influential Companies of 2022.

Upwork’s platform team was running containerized workloads on Consul and Spring Cloud, which required service owners to manually switch to a new code library each time Upwork’s platform team had a new release, and vice versa. This manual switching happened as often as every two months, which was inefficient for a company with over 800 microservices. Also, service owners were not adopting new libraries immediately and could not add upstream and downstream dependencies as needed without going through a review process. Combined, these problems meant that service owners and the cloud engineering and InfoSec teams lacked visibility, were highly susceptible to zero-day attacks and had a slow incident mitigation response.

To solve these problems, Upwork needed to adopt a distributed architecture from the application layer to the network layer. To do this, they required Kubernetes. The switch to Kubernetes meant Upwork’s containers needed to adhere to cloud-native Continue reading

Iperf3 vs. Iperf3: Just Start Measuring

Tony Fortunato of The Technology Firm walks you through testing scenarios using iPerf3 on different devices.

Cloudflare Snippets is now available in alpha

Today we are excited to announce that Cloudflare Snippets is available in alpha. In the coming weeks we will be opening access to our waiting list.

What are Snippets?

Over the past two years we have released a number of new rules products such as Transform Rules, Cache Rules, Origin Rules, Config Rules and Redirect Rules. These new products give more control to customers on how we process their traffic as it flows through our global network. The feedback on these products so far has been overwhelmingly positive. However, our customers still occasionally need the ability to do more than the out-of-the-box functionality allows. Not just adding an HTTP header - but performing some advanced calculation to create the output.

For these cases, Cloudflare Snippets comes to the rescue. Snippets are small pieces of user created JavaScript that are run by Cloudflare before your website, API or application is served to the user. If you're familiar with Cloudflare Workers, our robust developer platform, then you'll find Snippets to be a familiar addition. For those who are not, Snippets are designed to be easily created, tested, and deployed. Providing you with the ability to deploy your custom JavaScript Snippet to Continue reading

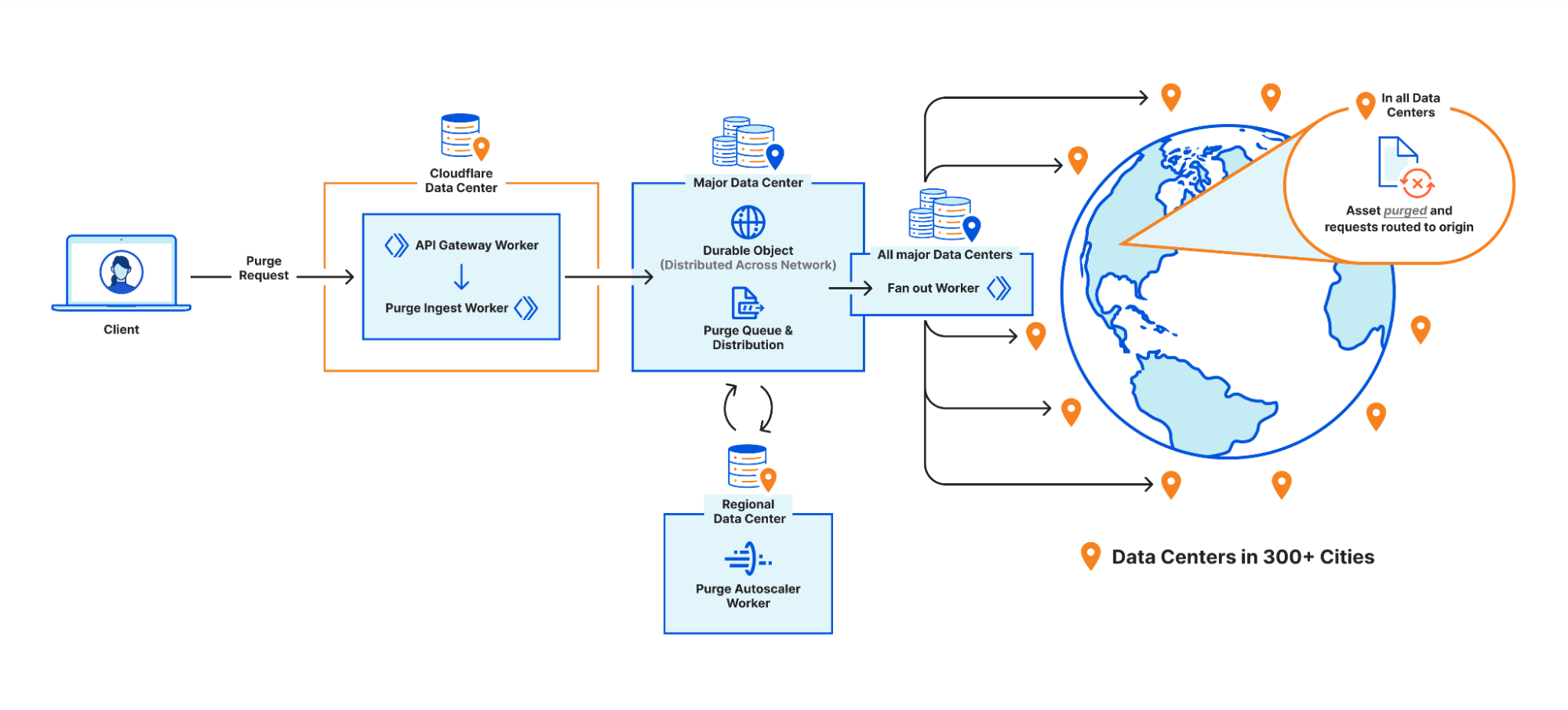

Part 2: Rethinking cache purge with a new architecture

In Part 1: Rethinking Cache Purge, Fast and Scalable Global Cache Invalidation, we outlined the importance of cache invalidation and the difficulties of purging caches, how our existing purge system was designed and performed, and we gave a high level overview of what we wanted our new Cache Purge system to look like.

It’s been a while since we published the first blog post and it’s time for an update on what we’ve been working on. In this post we’ll be talking about some of the architecture improvements we’ve made so far and what we’re working on now.

Cache Purge end to end

We touched on the high level design of what we called the “coreless” purge system in part 1, but let’s dive deeper into what that design encompasses by following a purge request from end to end:

Step 1: Request received locally

An API request to Cloudflare is routed to the nearest Cloudflare data center and passed to an API Gateway worker. This worker looks at the request URL to see which service it should be sent to and forwards the request to the appropriate upstream backend. Most endpoints of the Cloudflare API are currently handled by Continue reading
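The URL-based dispatch described in Step 1 can be illustrated with a minimal sketch. This is not Cloudflare's API Gateway code: the route table, the backend names, and the `pick_backend` helper are all hypothetical, and the only assumption taken from the text is that the request URL decides which upstream backend receives the request.

```python
from urllib.parse import urlparse

# Hypothetical route table (not from the original post):
# path prefix -> name of the upstream backend that should handle it.
ROUTES = {
    "/api/purge": "purge-backend",
    "/api/dns": "dns-backend",
}
# Hypothetical fallback for every path without a dedicated route.
DEFAULT_BACKEND = "core-backend"


def pick_backend(url: str) -> str:
    """Return the backend name for a request URL using prefix matching."""
    path = urlparse(url).path
    # Check longer prefixes first so that more specific routes win.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        # Match the prefix itself or a sub-path, but not "/api/dnssec"
        # for the "/api/dns" route.
        if path == prefix or path.startswith(prefix + "/"):
            return ROUTES[prefix]
    return DEFAULT_BACKEND
```

Matching on whole path segments rather than raw string prefixes keeps an unrelated path that merely shares leading characters with a route from being forwarded to the wrong backend.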

Spotlight on Zero Trust: We’re fastest and here’s the proof

In January and in March we posted blogs outlining how Cloudflare performed against others in Zero Trust. The conclusion in both cases was that Cloudflare was faster than Zscaler and Netskope in a variety of Zero Trust scenarios. For Speed Week, we’re bringing back these tests and upping the ante: we’re testing more providers against more public Internet endpoints in more regions than we have in the past.

For these tests, we tested three Zero Trust scenarios: Secure Web Gateway (SWG), Zero Trust Network Access (ZTNA), and Remote Browser Isolation (RBI). We tested against three competitors: Zscaler, Netskope, and Palo Alto Networks. We tested these scenarios from 12 regions around the world, up from the four we’d previously tested with. The results are that Cloudflare is the fastest Secure Web Gateway in 42% of testing scenarios, the most of any provider. Cloudflare is 46% faster than Zscaler, 56% faster than Netskope, and 10% faster than Palo Alto for ZTNA, and 64% faster than Zscaler for RBI scenarios.

In this blog, we’ll provide a refresher on why performance matters, do a deep dive on how we’re faster for each scenario, and we’ll talk about how we measured performance for each product.

Continue reading

It’s never been easier to migrate thanks to Cloudflare’s new Migration Hub

We understand the pain points associated with CDN migrations. That's why in late 2021 we introduced Turpentine, a project to automate the process of translating the old Varnish Configuration Language (VCL) into Cloudflare Workers with just a push of a button. After nearly two years of testing and user feedback, we’ve tailored the migration processes for different user groups.

Today, we are thrilled to relaunch Turpentine, and introduce Cloudflare's new Migration Hub. The Migration Hub serves as a one-stop-shop for all migration needs, featuring brand-new migration guides that bring transparency and simplicity to the process.

We also know that a large number of customers aren't comfortable doing migrations themselves. Years of built-up business logic make unpacking and translating CDN configurations between different vendors difficult and lock businesses into subpar products and services. To help these customers we have established a Professional Services group to ensure smooth migrations for customers transitioning to Cloudflare’s first-class products. Going forward, we plan to continue to invest resources in Turpentine to ensure that moving to any part of Cloudflare is easy and you have the help you need.

Why choose Cloudflare?

Cloudflare has gained immense popularity among businesses seeking to improve website performance, security, Continue reading

Workers KV is faster than ever with a new architecture

We’re excited to announce a significant performance improvement coming to Workers KV, focused on dramatically improving cold read performance and reducing latency, even for long tail access patterns.

Developers using KV have seen great performance on hot reads, but ask why their 95th percentile latency — often on a key (or set of keys) that hadn’t been accessed recently or in that region — was higher than expected. We took this feedback to heart: we’ve been working feverishly on a new caching layer for KV behind the scenes, which enables customers to achieve much more frequent hot reads, reduced worst case latency times, better flexibility and control over cache TTLs, and much faster consistency over our previous iterations, and it’s now live for all KV users.

The best part? Developers using KV don’t need to change anything to benefit from this increased performance.

What is Workers KV?

Workers KV is a key value store designed for read heavy use-cases and applications powered by Cloudflare’s network. KV’s focus on read-heavy use-cases allows it to serve hot (cached) reads in milliseconds, which makes it ideal for storing per-application or customer configuration data, routing configuration, multivariate (A/B testing) configurations, and even small asset Continue reading
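The difference between hot (cached) and cold reads can be sketched with a generic read-through cache that expires entries after a TTL. This is only an illustration of the general technique, not the Workers KV implementation or its API: the `ReadThroughCache` class, the `fetch_origin` callback, and the injectable `clock` are assumptions made for the example.

```python
import time
from typing import Callable, Dict, Tuple


class ReadThroughCache:
    """Toy read-through cache with a per-entry TTL (illustrative only)."""

    def __init__(
        self,
        fetch_origin: Callable[[str], str],  # hypothetical slow origin lookup
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_origin
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (value, absolute expiry time)
        self._entries: Dict[str, Tuple[str, float]] = {}
        self.hot_reads = 0
        self.cold_reads = 0

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            # Hot read: served from the local cache without touching the origin.
            self.hot_reads += 1
            return entry[0]
        # Cold read: the key is missing or expired, so go back to the origin
        # and cache the result for the next ttl_seconds.
        value = self._fetch(key)
        self._entries[key] = (value, now + self._ttl)
        self.cold_reads += 1
        return value
```

In this sketch a longer TTL turns more reads into hot reads at the cost of serving older values, which is the trade-off that cache TTL controls expose.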

How Kinsta used Workers and Workers KV to improve cache hit rates by 56%

This is a guest post by Kinsta about their use of our platform.

At Kinsta, we’re obsessed with speed: Our Application Hosting, Database Hosting and Managed WordPress Hosting services all run on the Google Cloud Platform’s fastest Premium Tier Network and C2 Machines, and we rely on Cloudflare to keep the pedal to the metal for tens of thousands of customers who want to deliver their content around the world with speed and security.

While making that happen, we’ve learned a thing or two about using Cloudflare Workers and Workers KV to provide optimized caching rules for static and dynamic content.

In early 2023, we doubled down on Cloudflare cache wrangling, making caches more responsive to client-side configuration changes while also shifting the heavy lifting behind broadcasting feature updates away from our admins on the backend and into Cloudflare Workers. A key result was a dramatic increase in the share of customer data successfully cached, increasing 56.3% between October 2022 and March 2023.

Cloudflare Workers and Workers KV allow us to programmatically customize every request and response with minimal effort and lower latency. We no longer need to deploy changes to hundreds of thousands of containers when we Continue reading

Use FRR Containers to Learn Routing Protocol Fundamentals

An anonymous commenter asked this highly relevant question about my Internet routing security lab:

What are the smallest hardware requirements to run the lab?

TL&DR: 2 GB RAM, 2 vCPU

Now for the more precise answer (aka “it depends”).
