IPv4 QoS Markings Calculator

This is a quick calculator I came up with for use in the CCIE lab to translate between various IPv4 header QoS markings. As long as I could remember how to draw out the calculator, all I had to do was some basic math and I could translate between markings quite easily.
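The excerpt does not reproduce the calculator itself, so the following is only a minimal sketch of the arithmetic such a translation usually involves, assuming the standard DiffServ layout: DSCP occupies the top six bits of the ToS byte, IP precedence is the top three bits, and AFxy maps to DSCP 8x + 2y. The function names are hypothetical and are not taken from the original post.

```python
def dscp_to_tos(dscp: int) -> int:
    """Return the ToS byte value for a DSCP, with the two ECN bits left at zero."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2  # DSCP sits in the top six bits of the byte


def dscp_to_precedence(dscp: int) -> int:
    """Return the IP precedence (top three bits) implied by a DSCP value."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp >> 3


def af_to_dscp(af_class: int, drop: int) -> int:
    """Return the DSCP for AF<class><drop>, i.e. 8 * class + 2 * drop."""
    if af_class not in (1, 2, 3, 4) or drop not in (1, 2, 3):
        raise ValueError("AF class must be 1-4 and drop precedence 1-3")
    return 8 * af_class + 2 * drop


def dscp_name(dscp: int) -> str:
    """Return the common per-hop-behavior name for a DSCP, or the number as text."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    if dscp == 46:
        return "EF"
    cls, low = dscp >> 3, dscp & 0b111
    if low == 0:
        return f"CS{cls}"  # class selectors: CS0 (default) through CS7
    if 1 <= cls <= 4 and low in (2, 4, 6):
        return f"AF{cls}{low >> 1}"
    return str(dscp)


if __name__ == "__main__":
    dscp = af_to_dscp(3, 1)
    print(dscp_name(dscp), dscp, dscp_to_tos(dscp), dscp_to_precedence(dscp))
```

For AF31 this gives DSCP 26, a ToS byte of 104, and IP precedence 3, which is the kind of three-way translation the calculator is meant to make quick.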

Establishing the Big Data Connection

Many network vendors will tell you that their network equipment is built for Big Data. However, once deployed, do you have enough Big Data

Are We The Problem With Wearables?

Something, Something, Apple Watch.

Oh, yeah. There needs to be substance in a wearable blog post. Not just product names.

Wearables are the next big product category that is driving innovation. The advances being made in screen clarity, battery life, and component miniaturization are being felt across the rest of the device market. I doubt Apple would have been able to make the new MacBook logic board as small as it is without a few things learned from trying to cram transistors into a watch case. But are we the ones sending the wrong messages about wearable technology?

The Little Computer That Could

If you look at the biggest driving factor behind technology today, it comes down to size. Technology companies are making things smaller and lighter with every iteration. If the words thinnest and lightest don’t appear in your presentation at least twice then you aren’t on the cutting edge. But is this drive because tech companies want to make things tiny? Or is it more that consumers are driving them that way?

Yes, people the world over are now complaining that technology should have other attributes besides size and weight. A large contingent says that battery life is Continue reading

Response: Open Compute Project (OCP) Formally Accepts Open Network Linux (ONL) | Big Switch Networks, Inc.

This is good news. Big Switch has contributed Open Network Linux to the Open Compute Project, and it has been accepted as the standard operating system for whitebox Ethernet. Analysis: Customers now have a number of operating system choices for their whitebox switches. I know of the following: Open Network Linux, Cumulus Linux, and PicOS from Pica8. This is base […]

The post Response: Open Compute Project (OCP) Formally Accepts Open Network Linux (ONL) | Big Switch Networks, Inc. appeared first on EtherealMind.

OSPF Design Test

I shared three OSPF questions below, similar to the BGP Design Test published earlier. These are design related, so please take your time before giving your answers. In return, I want you to share this test on social media so more people can benefit from it. If you have a problem or any confusion with the answers I provided, let’s discuss… Read More »

The post OSPF Design Test appeared first on Network Design and Architecture.

Show 227 – OpenStack Neutron Overview with Kyle Mestery

Today's Packet Pushers adventure is piloted by hosts Ethan Banks and Greg Ferro. They are joined by guide Kyle Mestery on a tour of OpenStack Neutron.

The post Show 227 – OpenStack Neutron Overview with Kyle Mestery appeared first on Packet Pushers Podcast and was written by Ethan Banks.

Apple Watch: Function Over Form Otherwise It’s Just a Fad

by Kris Olander, Sr. Technical Marketing Engineer - March 10, 2015

So yet another technology company wants to put some jewelry on my wrist. Good luck. You know, it’s not that I don’t want the Apple Watch to succeed. It’s just that I’ve been down this path already.

This past weekend I was reading a piece in the San Francisco Chronicle by Thomas Lee, “Why we need Apple to fail.” While I’m not really buying Mr. Lee’s line of thinking - my ego is not going to be bruised one way or the other should Apple fail or succeed with this product - it did start me thinking.

My father was an employee at Hewlett Packard back in the good old days. One of the latest and greatest products of those days was the desktop calculator. It was a time when each successive year found significantly more computational power in much smaller footprints. Eventually someone said, “Hey, we could make a calculator as small as a watch now.” So they did.

I don’t know how many watches HP produced over the lifetime of the product Continue reading

Open Hardware that Just Runs

When you buy a server, you don’t worry whether or not Windows will run on the server. You know it will. That’s because the server industry has a comprehensive solution to a hard problem: rapid, standard integration between the OS and underlying open hardware. They’ve made it ubiquitous and totally transparent to you.

This is not the case for embedded systems, where you have to check whether an OS works on a particular hardware platform, and oftentimes you find out that it’s not supported yet. Bare metal switches are a good example of this.

It’s time to change that. We need the same transparent model on switches that we have on servers.

To make open networking ubiquitous — and to give customers choice among a wide variety of designs, port configurations and manufacturers — the integration between the networking OS and bare metal networking hardware must be standardized, fast, and easy to validate. We need open hardware.

The Starting Point

Today, a bare-metal networking hardware vendor supplies their hardware spec to the NOS (network OS) provider. The NOS team reads the spec, interprets it and writes drivers/scripts to manage the device components (sensors, LEDs, fans and so forth). Then Continue reading

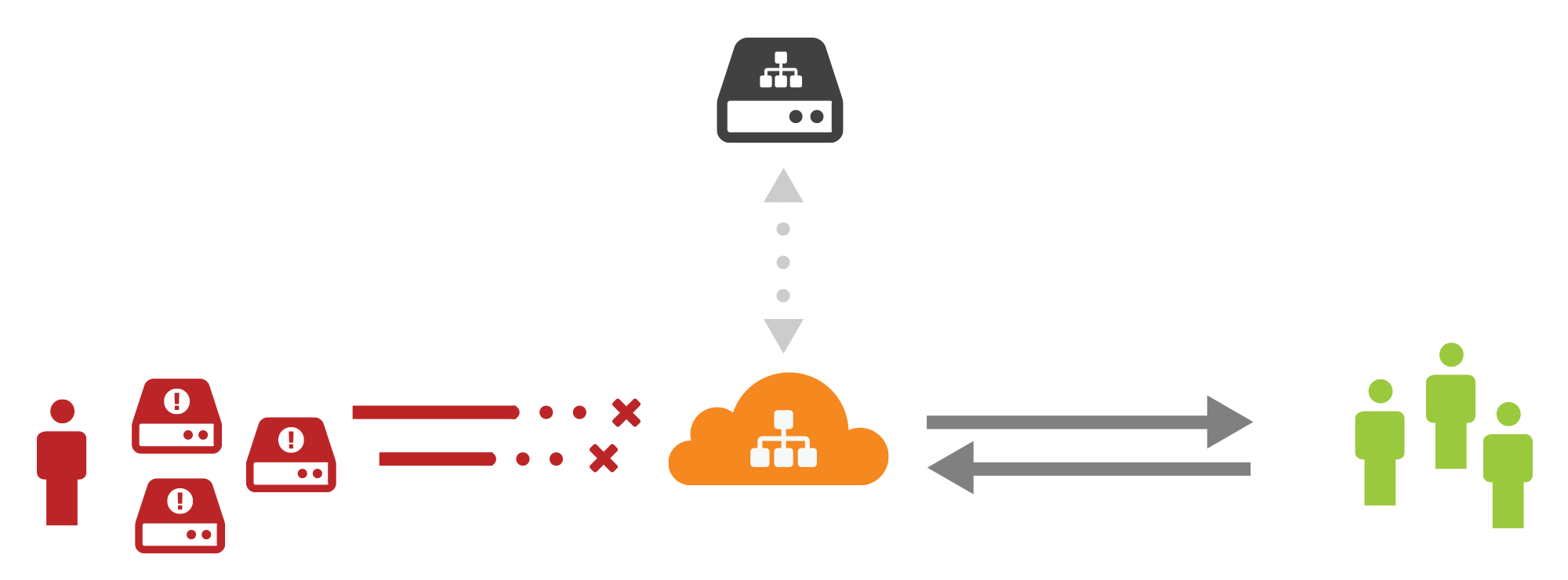

Announcing Virtual DNS: DDoS Mitigation and Global Distribution for DNS Traffic

It’s 9am and CloudFlare has already mitigated three billion malicious requests for our customers today. Six out of every one hundred requests we see are malicious, and increasingly, more of those are targeting DNS nameservers.

DNS is the phone book of the Internet and fundamental to the usability of the web, but is also a serious weak link in Internet security. One of the ways CloudFlare is trying to make DNS more secure is by implementing DNSSEC, cryptographic authentication for DNS responses. Another way is Virtual DNS, the authoritative DNS proxy service we are introducing today.

Virtual DNS provides CloudFlare’s DDoS mitigation and global distribution to DNS nameservers. DNS operators need performant, resilient infrastructure, and we are offering ours, the fastest of any provider, to any organization’s DNS servers.

Many organizations have legacy DNS infrastructure that is difficult to change. The hosting industry is a key example of this. A host may have given thousands of clients a set of nameservers but now realize that they don't have the performance or defensibility that their clients need.

Virtual DNS means that the host can get the benefits of a global, modern DNS infrastructure without having to contact every customer Continue reading