How to Make Friends as an Adult

Making friends as an adult can seem daunting, but it doesn’t have to be. There are many ways to meet new people and build lasting relationships.

Ways to Find Friends as an Adult

Making friends as an adult can be challenging, but it can also be rewarding. With a little effort, you can develop lasting relationships with the people around you. Here are a few tips to get you started:

Join a club or group that aligns with your interests

One of the best ways to meet new friends is by joining a club or group that aligns with your interests. This could be anything from a book club to a hiking group to a cooking class. Not only will you have something in common with the other members, but you’ll also have the opportunity to bond over shared activities.

Attend local events and festivals

Another great way to meet new people is by attending local events and festivals. These gatherings are usually good occasions for socializing, and you never know who you might meet. You might even make some new friends who live right in your own neighborhood.

Volunteer for a cause that’s important to you

If you’re looking […]

Cloudflare blocks 15M rps HTTPS DDoS attack

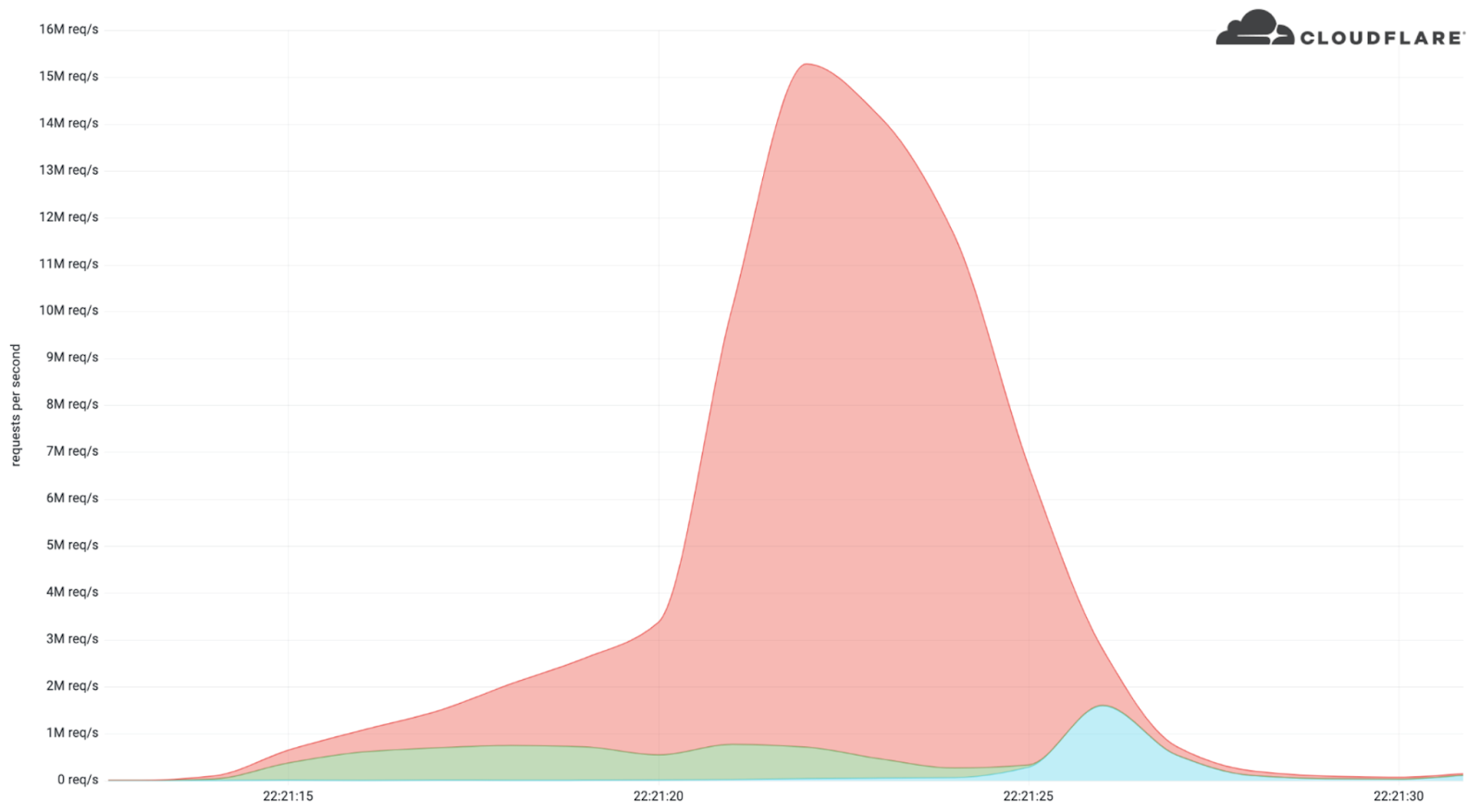

Earlier this month, Cloudflare’s systems automatically detected and mitigated a 15.3 million request-per-second (rps) DDoS attack — one of the largest HTTPS DDoS attacks on record.

While this isn’t the largest application-layer attack we’ve seen, it is the largest we’ve seen over HTTPS. HTTPS DDoS attacks are more expensive in terms of required computational resources because of the higher cost of establishing a secure, TLS-encrypted connection. It therefore costs more both for the attacker to launch the attack and for the victim to mitigate it. We’ve seen very large attacks over (unencrypted) HTTP in the past, but this attack stands out because of the resources required to launch it at this scale.

The attack, lasting less than 15 seconds, targeted a Cloudflare customer on the Professional (Pro) plan operating a crypto launchpad. Crypto launchpads are used to surface Decentralized Finance projects to potential investors. The attack was launched by a botnet that we’ve been observing; we’ve already seen attacks as large as 10M rps matching the same attack fingerprint.

Cloudflare customers are protected against this botnet and do not need to take any action.

The attack

What’s interesting is that the attack mostly came from data centers. We’re […]

Practical Python For Networking: 6.2 – Package Examples – Video

This lesson walks through basic examples of packages. Course files are in a GitHub repository: https://github.com/ericchou1/pp_practical_lessons_1_route_alerts

Eric Chou is a network engineer with 20 years of experience, including managing networks at Amazon AWS and Microsoft Azure. He’s the founder of Network Automation Nerds and has written the books Mastering Python Networking and Distributed Denial Of […]

The post Practical Python For Networking: 6.2 – Package Examples – Video appeared first on Packet Pushers.
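Packages can also nest: a subpackage is simply a directory with its own `__init__.py` inside a package, and a module in one subpackage can reach another subpackage with a relative import. The sketch below is not taken from the course files. It builds a hypothetical `labpkg` package (with made-up `parsers` and `reports` subpackages) in a temporary directory so that it runs as a single script, then imports a module that uses `from ..parsers.bgp import ...`.

```python
import importlib
import sys
import tempfile
import textwrap
from pathlib import Path

# Hypothetical package layout, written to disk at runtime:
#   labpkg/__init__.py
#   labpkg/parsers/__init__.py
#   labpkg/parsers/bgp.py
#   labpkg/reports/__init__.py
#   labpkg/reports/summary.py  -> imports from ..parsers.bgp
FILES = {
    "labpkg/__init__.py": "",
    "labpkg/parsers/__init__.py": "",
    "labpkg/parsers/bgp.py": """
        def parse_prefixes(text):
            # Treat each non-empty line as one prefix.
            return [line.strip() for line in text.splitlines() if line.strip()]
    """,
    "labpkg/reports/__init__.py": "",
    "labpkg/reports/summary.py": """
        # Relative import: '..' is the parent package (labpkg).
        from ..parsers.bgp import parse_prefixes

        def count_prefixes(text):
            return len(parse_prefixes(text))
    """,
}


def write_tree(root: Path) -> None:
    """Create every file in FILES under root, including parent directories."""
    for rel_path, body in FILES.items():
        path = root / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(textwrap.dedent(body))


with tempfile.TemporaryDirectory() as tmp:
    write_tree(Path(tmp))
    sys.path.insert(0, tmp)        # make labpkg importable
    importlib.invalidate_caches()  # files were created after startup
    # Importing the submodule also imports labpkg and labpkg.reports first.
    summary = importlib.import_module("labpkg.reports.summary")
    sys.path.remove(tmp)           # loaded modules stay in sys.modules

print(summary.count_prefixes("10.0.0.0/8\n\n192.0.2.0/24\n"))
```

In a real project you would create these files on disk and rely on the working directory or an installed distribution, rather than editing `sys.path` at runtime.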

Is X.25 Still Alive?

Enrique Vallejo asked an interesting question a while ago:

When was X.25 officially declared dead? Note that Wikipedia claims that it is still in use in parts of the world.

Wikipedia is probably right, and I’ve had several encounters with X.25 that would corroborate that claim. If you happen to have more up-to-date information, please leave a comment.

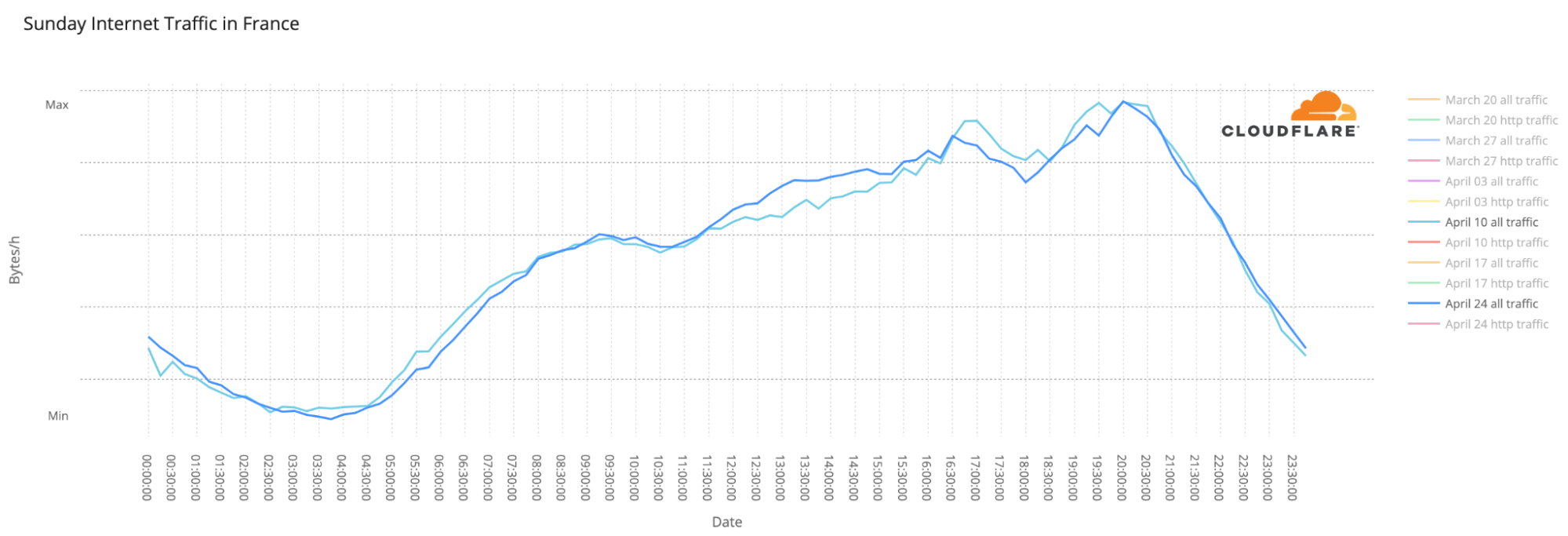

Two voting days, a debate and a polling rule in France impact the Internet

We blogged previously about some trends concerning the first round of the 2022 French presidential election, held on April 10. Here we take a look at the run-off election this Sunday, April 24, that ended up re-electing Emmanuel Macron as President of France.

First, the two main trends: French-language news sites outside France were clearly impacted by the local rule that states that exit polls can only be published after 20:00.

Second, Internet traffic was similar on both election days (April 10 and 24), including the increase in mobile device use and the interest in news websites; there we also saw clear interest in the Macron-Le Pen debate on April 20.

We have discussed before that election days usually don’t have a major impact on overall Internet traffic. Let’s compare April 10 with 24, the two Sundays when the elections were held. The trends throughout the day are incredibly similar (with a slight increase in traffic on April 24), even with a two-week gap between them.

Another election-day trend is the use of mobile devices to access the Internet, mainly at night. The largest spikes in the number of requests made using mobile devices in […]

Two voting days, a debate and an election regulation in France impact the Internet

We published a blog post on some trends concerning the first round of the 2022 French presidential election, held on April 10. Here we look at the second round of the election, which took place on Sunday, April 24, and resulted in the re-election of Emmanuel Macron as President of France.

First, the two main trends: French-language news sites located outside France were clearly affected by the local regulation, which states that projections can only be published after 20:00.

Internet traffic was similar on both election days (April 10 and 24), including the increase in mobile device use and the interest in news sites; there, too, we saw clear interest in the Macron-Le Pen debate of April 20.

We have already noted that election days generally don’t have a major impact on overall Internet traffic. Let’s compare April 10 and 24, the two Sundays on which the elections took place. The trends throughout the day are incredibly similar (with a slight increase in traffic on the 24th […]

How To Work With A Sponsor For Your IT Blog

For many years, I’ve been working with B2B IT vendors who sponsor content with my company to market their offerings. My co-founder and I have learned many lessons, some the hard way, about dealing with these vendors and the content they create with us.

In this article, I’ll focus on handling a specific scenario. You’ve got a niche blog where you write as a deeply technical expert in an IT field such as cloud, networking, storage, development, or security. Your audience is made up of fellow nerds in similar orbits. You’ve been writing for years, and have developed a faithful audience who reads most of your stuff. After all this time, a real-deal vendor appears, wanting to place a sponsored blog post on your hallowed site. Now what?

You might think the sponsored content itself would be the most complicated part, and that once you hit publish, you’re mostly done. Not really. Back-end logistics will likely take up more of your time than the content itself. There are other considerations, too. Consider them carefully before trying to monetize your blogging hobby.

Mark Sponsored Content As Sponsored

If this is your first sponsored post, you might feel weird about it. The temptation can be to hide […]

BGP Remotely Triggered Blackhole (RTBH)

The earlier article "DDoS attacks and BGP Flowspec responses" describes how to simulate and mitigate common DDoS attacks. This article builds on the previous examples to show how BGP Remotely Triggered Blackhole (RTBH) controls can be applied in situations where BGP Flowspec is not available, or is unsuitable as a mitigation response.

Start Containerlab.

docker run --rm -it --privileged --network host --pid="host" \
  -v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \
  -v ~/clab:/home/clab -w /home/clab \
  ghcr.io/srl-labs/clab bash

Download the Containerlab topology file.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/ddos.yml

Change the mitigation policy for IP Flood attacks from Flowspec filter to RTBH.

sed -i "s/\\.ip_flood\\.action=filter/\\.ip_flood\\.action=drop/g" ddos.yml

Deploy the topology.

containerlab deploy -t ddos.yml

Access the DDoS Protect screen at http://localhost:8008/app/ddos-protect/html/

Launch an IP Flood attack.

docker exec -it clab-ddos-attacker hping3 \
  --flood --rawip -H 47 192.0.2.129

The DDoS Protect dashboard shows that as soon as the ip_flood attack traffic reaches the threshold, a control is implemented and the attack traffic is immediately dropped. The entire process, from the attack being launched to its detection and mitigation, happens within a second, ensuring minimal impact on network capacity and services.

docker exec -it clab-ddos-sp-router vtysh -c "show running-config"

See […]
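In RTBH, the mitigation is expressed as an ordinary BGP route: the attacked host's address is announced as a host route tagged with a blackhole community, and routers that honor that community discard traffic toward it. The Python sketch below illustrates only that detect-then-announce decision; it opens no BGP session. The community value 65535:666 is the well-known BLACKHOLE community from RFC 7999. The threshold, the `Announcement` type, and `check_and_blackhole` are hypothetical and are not part of DDoS Protect, sFlow-RT, or FRR, and the lab above may use a different community or next-hop mechanism.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Union

# Well-known BLACKHOLE community defined in RFC 7999.
BLACKHOLE_COMMUNITY = "65535:666"

# Hypothetical detection threshold, in packets per second.
THRESHOLD_PPS = 10_000

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Announcement:
    """Hypothetical description of a route the controller would announce."""
    prefix: Network
    community: str


def check_and_blackhole(target: str, observed_pps: int,
                        threshold: int = THRESHOLD_PPS) -> Optional[Announcement]:
    """Return an RTBH announcement once traffic to target reaches threshold."""
    if observed_pps < threshold:
        return None  # below threshold: no control is applied
    addr = ipaddress.ip_address(target)
    # Blackhole only the attacked host: /32 for IPv4, /128 for IPv6.
    host_route = ipaddress.ip_network(f"{addr}/{addr.max_prefixlen}")
    return Announcement(prefix=host_route, community=BLACKHOLE_COMMUNITY)


print(check_and_blackhole("192.0.2.129", 500))
print(check_and_blackhole("192.0.2.129", 250_000))
```

The trade-off is that a blackhole route drops all traffic to the host, legitimate traffic included, whereas a Flowspec filter can match only the attack traffic.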

Full Stack Journey 065: Developer Tools And Practices Other IT Disciplines Can Adopt

Today's Full Stack Journey asks: Are there tools, techniques, or practices common to software development that other IT disciplines should consider adopting? Can these tools and practices help other IT disciplines improve automation, operations, and daily tasks? Guests Adeel Ahmad and Kurt Seifried join host Scott Lowe to discuss.

The post Full Stack Journey 065: Developer Tools And Practices Other IT Disciplines Can Adopt appeared first on Packet Pushers.

Practical Python For Networking: 6.1 Python Packages – Introduction To Packages – Video

This lesson introduces packages, which let you bundle together different Python modules to reuse and share. Course files are in a GitHub repository: https://github.com/ericchou1/pp_practical_lessons_1_route_alerts

Additional Resources:
Packages Tutorial: https://docs.python.org/3/tutorial/modules.html#packages
Python Modules And Packages: An Introduction: https://realpython.com/python-modules-packages/

Eric Chou is a network engineer with 20 years of experience, including managing networks at Amazon AWS and Microsoft […]

The post Practical Python For Networking: 6.1 Python Packages – Introduction To Packages – Video appeared first on Packet Pushers.
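At its simplest, a package is a directory of modules containing an `__init__.py` file, and `__init__.py` can re-export names so that callers import them from the package directly. The following minimal sketch writes a hypothetical `mynetpkg` package (with made-up `routes` and `alerts` modules) to a temporary directory and imports it; none of these names come from the course repository.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_package(root: Path) -> None:
    """Write a hypothetical 'mynetpkg' package with two modules under root."""
    pkg = root / "mynetpkg"
    pkg.mkdir()
    # __init__.py marks the directory as a regular package; here it also
    # re-exports one function from each module for convenient importing.
    (pkg / "__init__.py").write_text(
        "from .routes import is_default_route\n"
        "from .alerts import format_alert\n"
    )
    (pkg / "routes.py").write_text(
        "def is_default_route(prefix):\n"
        "    return prefix in ('0.0.0.0/0', '::/0')\n"
    )
    (pkg / "alerts.py").write_text(
        "def format_alert(prefix, event):\n"
        "    return f'[ALERT] {prefix}: {event}'\n"
    )


with tempfile.TemporaryDirectory() as tmp:
    build_package(Path(tmp))
    sys.path.insert(0, tmp)        # make the new package importable
    importlib.invalidate_caches()  # files were created after startup
    mynetpkg = importlib.import_module("mynetpkg")
    sys.path.remove(tmp)           # loaded modules stay in sys.modules

print(mynetpkg.is_default_route("0.0.0.0/0"))
print(mynetpkg.format_alert("10.0.0.0/8", "withdrawn"))
```

With the package on the import path, both `import mynetpkg.routes` and `from mynetpkg import format_alert` work; the re-exports in `__init__.py` are a convenience, not a requirement.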

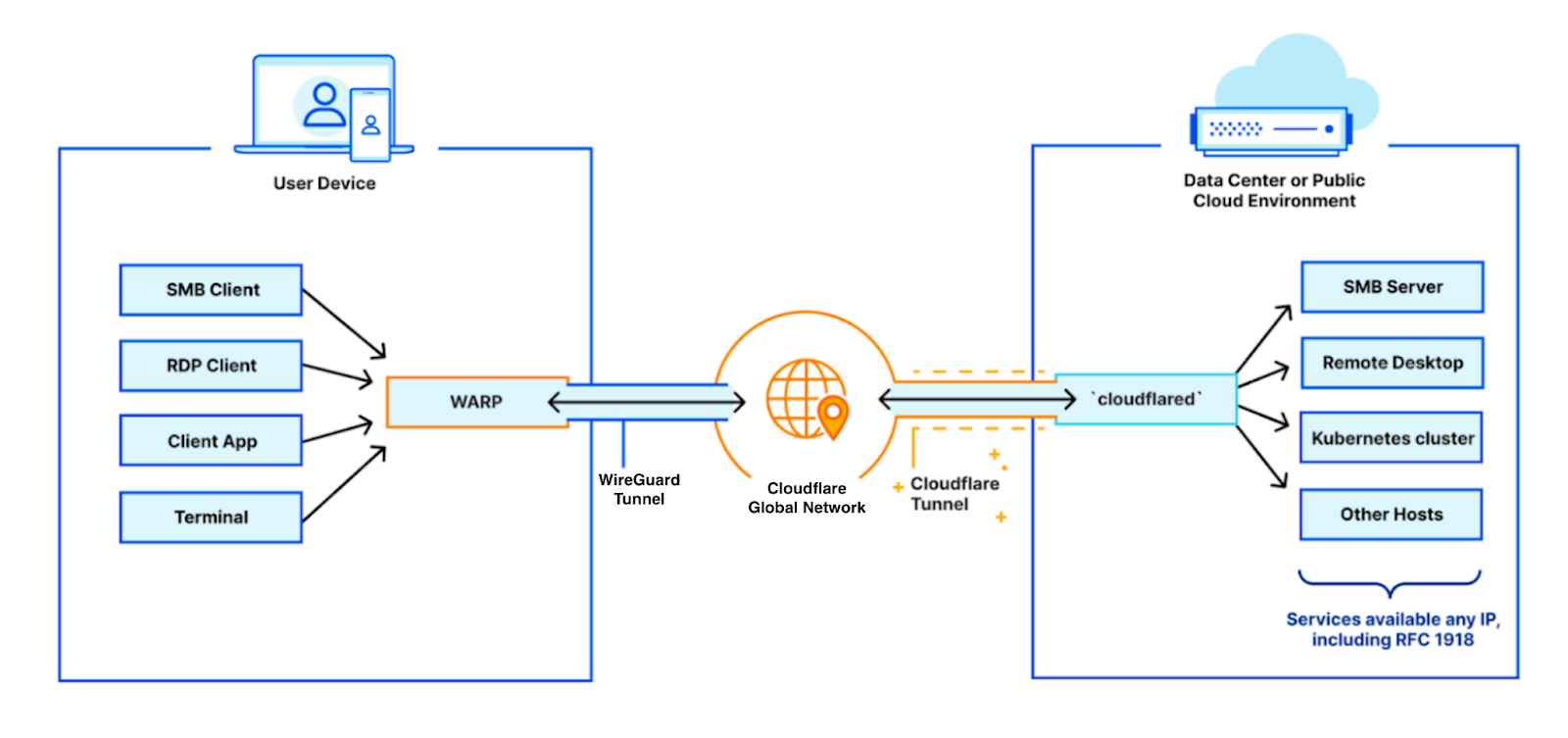

Building many private virtual networks through Cloudflare Zero Trust

We built Cloudflare’s Zero Trust platform to help companies rely on our network to connect their private networks securely, while improving performance and reducing operational burden. Until now, it let you build a single virtual private network, in which all your connected private networks had to be uniquely identifiable.

Starting today, we are thrilled to announce that you can start building many segregated virtual private networks over Cloudflare Zero Trust, beginning with virtualized connectivity for the connectors Cloudflare WARP and Cloudflare Tunnel.

Connecting your private networks through Cloudflare

Consider your team, with various services hosted across distinct private networks, and employees accessing those resources. More than ever, those employees may be roaming, remote, or actually in a company office. Regardless, you need to ensure only they can access your private services. Even then, you want to have granular control over what each user can access within your network.

This is where Cloudflare can help you. We make our global, performant network available to you, acting as a virtual bridge between your employees and private services. With your employees’ devices running Cloudflare WARP, their traffic egresses through Cloudflare’s network. On the other side, your private services are behind Cloudflare Tunnel, accessible […]
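With a single virtual network, every connected private network had to be uniquely identifiable; segregating them into separate virtual networks means that, assuming routes are scoped per virtual network, the same address range can appear in more than one. The sketch below illustrates only that idea: routes are keyed by a (virtual network, CIDR) pair, so 10.0.0.0/24 can lead to different tunnels depending on which virtual network the user is connected to. `ROUTES`, `lookup`, and the network and tunnel names are hypothetical; this is not Cloudflare's implementation or API.

```python
import ipaddress
from typing import Optional

# Hypothetical routes keyed by (virtual network, CIDR). Both virtual
# networks reuse 10.0.0.0/24, which a single shared network could not allow.
ROUTES = {
    ("production", ipaddress.ip_network("10.0.0.0/24")): "tunnel-prod",
    ("production", ipaddress.ip_network("10.0.0.0/16")): "tunnel-prod-core",
    ("staging", ipaddress.ip_network("10.0.0.0/24")): "tunnel-staging",
}


def lookup(vnet: str, destination: str) -> Optional[str]:
    """Pick the tunnel for destination, considering only routes in vnet.

    Among matching routes, the most specific (longest) prefix wins.
    """
    addr = ipaddress.ip_address(destination)
    best = None
    for (route_vnet, network), tunnel in ROUTES.items():
        if route_vnet != vnet or addr not in network:
            continue
        if best is None or network.prefixlen > best[0].prefixlen:
            best = (network, tunnel)
    return best[1] if best is not None else None


print(lookup("production", "10.0.0.5"))
print(lookup("staging", "10.0.0.5"))
```

Scoping the lookup to one virtual network first is what keeps the overlapping ranges from colliding; longest-prefix matching then applies only within that network.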

Marcelo Affonso and Rebecca Weekly: Why we joined Cloudflare

Marcelo Affonso (VP of Infrastructure Operations) and Rebecca Weekly (VP of Hardware Systems) recently joined our team. Here they share their journey to Cloudflare, what motivated them to join us, and what they are most excited about.

Marcelo Affonso - VP of Infrastructure Operations

I am thrilled to join Cloudflare and lead our global infrastructure operations. My focus will be building, expanding, optimizing, and accelerating Cloudflare’s fast-growing infrastructure presence around the world.

Recently, I have found myself reflecting on how central the Internet has become to the lives of people all over the world. We use the Internet to work, to connect with families and friends, and to get essential services. Communities, governments, businesses, and cultural institutions now use the Internet as a primary communication and collaboration layer.

But on its own, the Internet wasn’t architected to support that level of use. It needs better security protections, faster and more reliable connectivity, and more support for various privacy preferences. What’s more, those benefits can’t just be available to large businesses. They need to be accessible to a full range of communities, governments, and individuals who now rely on the Internet. And they need to be accessible in various ways to […]

$4M for SONIC services