How to Balance Cloud-Scale Efficiencies Against Business Realities

The widespread deployment of cloud infrastructure has led IT teams to demand freedom of choice, but that might not always be what’s best for the organization as a whole.

Oracle Brings ‘Star Wars Cyber Defenses’ and Robots to Cloud Security

The company's new cloud architecture features "impenetrable barriers" that block threats and autonomous robots that find threats and kill them, said CTO Larry Ellison.

Some notes for journalists about cybersecurity

The recent Bloomberg article about Chinese hacking motherboards is a great opportunity to talk about problems with journalism.

Journalism is about telling the truth, not a close approximation of the truth, but the true truth. Journalists don't do a good job at this in cybersecurity.

Take, for example, a recent incident where the Associated Press fired a reporter for photoshopping his shadow out of a photo. The AP took a scorched-earth approach, not simply firing the photographer, but removing all his photographs from their library.

That's because there is a difference between truth and near truth.

Now consider Bloomberg's story, such as a photograph of a tiny chip. Is that a photograph of the actual chip the Chinese inserted into the motherboard? Or is it another chip, representing the size of the real chip? Is it truth or near truth?

Or consider the technical details in Bloomberg's story. They are garbled, as this discussion shows. Something like what Bloomberg describes is certainly plausible, but something exactly like what Bloomberg describes is impossible. Again there is the question of truth vs. near truth.

There are other near truths involved. For example, we know that supply chains often replace high-quality expensive components with cheaper, Continue reading

Pulumi Code Dev Platform Adds Premium Team Tier, Scores $15M Series A

The firm's SaaS platform acts as a translation layer between the language a developer is using to write code and where that code is being sent.

What Makes a Security Company?

When you think of a “security” company, what comes to mind? Is it a software house making leaps in technology to save us from DDoS attacks or malicious actors? Maybe it’s a company that makes firewalls or intrusion detection systems that stand guard to keep the bad people out of places they aren’t supposed to be. Or maybe it’s something else entirely.

Tradition Since Twenty Minutes Ago

What comes to mind when you think of a traditional security company? What kinds of technology do they make? Maybe it’s a firewall. Maybe it’s an anti-virus program. Or maybe it’s something else that you’ve never thought of.

Is a lock company like Schlage a security company? Perhaps they aren’t a “traditional” IT security company, but you can guarantee that you’ve seen their products protecting data centers and IDF closets. What about a Halon system manufacturer? They may not be a first thought for security, but you can believe that a fire in your data center is going to cause security issues. Also, I remember that I learned more about Halon and wet/dry pipe fire sprinkler systems from my CISSP study than anywhere else.

The problem with classifying security companies as “traditional” or “non-traditional” Continue reading

TCP/IP, Sockets, and SIGPIPE

An example of why this bug persists is the well-known college textbook "Unix Network Programming" by Richard Stevens. In section 5.13, he correctly describes the problem.

When a process writes to a socket that has received an RST, the SIGPIPE signal is sent to the process. The default action of this signal is to terminate the process, so the process must catch the signal to avoid being involuntarily terminated.
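The behavior in that passage can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not code from the book or from the original post: the helper names `ignore_sigpipe`, `send_or_report`, and `demo` are hypothetical, and a local `socket.socketpair()` with one end closed stands in for a TCP peer that has sent an RST. Rather than catching the signal, the sketch ignores it, so a write to the dead socket comes back as an error instead of terminating the process.

```python
import signal
import socket
import threading


def ignore_sigpipe():
    """Ask the OS not to kill this process when it writes to a dead socket."""
    # signal.signal() may only be called from the main thread.
    if threading.current_thread() is threading.main_thread():
        signal.signal(signal.SIGPIPE, signal.SIG_IGN)


def send_or_report(sock, data):
    """Return True if data was sent, False if the peer has gone away."""
    try:
        sock.sendall(data)
        return True
    except (BrokenPipeError, ConnectionResetError):
        # With SIGPIPE ignored, the failed write surfaces as an
        # ordinary error (EPIPE or ECONNRESET) that we can handle.
        return False


def demo():
    """Write to a socket whose peer is already closed (stand-in for an RST)."""
    ignore_sigpipe()
    ours, theirs = socket.socketpair()
    theirs.close()
    try:
        return send_or_report(ours, b"hello")
    finally:
        ours.close()
```

Calling `demo()` returns `False` rather than ending the process. CPython already ignores SIGPIPE at interpreter startup, so the explicit call mainly documents the intent. A C program has to make the equivalent `signal(SIGPIPE, SIG_IGN)` call itself, or catch the signal as the quoted passage says.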

Election interference from Uber and Lyft

Almost nothing can escape the taint of election interference. A good example is the announcements by Uber and Lyft that they'll provide free rides to the polls on election day. This well-meaning gesture nonetheless calls into question how this might influence the election.

"Free rides" to the polls are a common thing. Taxi companies have long offered such services for people in general. Political groups have long offered such services for their constituencies in particular. Political groups target retirement communities to get them to the polls, and black churches have long had their "Souls to the Polls" program across the 37 states that allow early voting on Sundays.

But with Uber and Lyft getting into this we now have concerns about "big data", "algorithms", and "hacking".

As the various Facebook controversies have taught us, these companies have a lot of data on us that can reliably predict how we are going to vote. If their leaders wanted to, these companies could use this information to get those on one side of an issue to the polls. In hotly contested elections, it wouldn't take much to swing the result to one side.

Even if they don't do this consciously, their Continue reading

App Micro-segmentation How To’s: Informatica, Oracle and SAP

consolidated posts from the VMware on VMware blog

Are you someone that prefers a blank sheet of paper or an empty text pad screen? Do you get the time to have that thought process to create the words, images or code to fill that empty space? Yes to both — I’m impressed! Creating something from scratch is an absolutely magical feeling especially once it gets to a point of sharing or usefulness. However, many of us spend a bit more of our time editing, building upon or debugging. Fortunately, that can be pretty interesting as well.

In the case of setting up micro-segmentation with VMware NSX Data Center, you have a couple of options for getting started quickly:

- NSX Micro-segmentation: Day 1 Guide

- NSX Micro-segmentation: Day 2 Guide

- (blog) Rapid Micro-segmentation using Application Rule Manager Recommendation Engine

- (blog) Securing Native Cloud Workloads with VMware NSX Cloud Blog Series – Part 1: Getting Started

Those resources and more are great jumping-off points, especially since you likely have more than just Informatica, Oracle and SAP apps in your environments.

Now, should you have those Informatica, Oracle and SAP apps, then here’s the next level of details. I’m Continue reading

When It Comes to IoT, We Must Work Together to #SecureIt

My first ever rendezvous with the word “IoT” was at a college conference during my final year, when a prominent regional start-up figure dispensed an oblique reference to it. I learned that IoT was the next big thing veering towards the mass market, which would eventually change the course of everyday human existence by making our way of life more convenient. What caught my attention was the term “things” in IoT – an unbounded category which could be anything from the bed you sleep on, the clothes you drape, or even the personal toiletries you use.

The Internet of Things (IoT) is a class of devices that “can monitor their environment, report their status, receive instructions, and even take action based on the information they receive.” IoT connotes not just the device but also the complex network connected to the device. Multiple studies have revealed that there are more connected devices than people on the planet. Although combining computers and networks with devices has existed for a long time, they were not previously integrated into consumer devices and durable goods used in ordinary day-to-day life. Furthermore, IoT being an evolving concept, exhibiting a range of ever-changing features, Continue reading

Huawei Shakes Up the AI Status Quo

Google, nVidia, and IBM face a formidable new AI challenger in Huawei.

10 Security Startups that Investors Are Funding

These 10 security companies raised more than $499 million in just the last month as security remains a top priority for many enterprises.

McAfee Uncovers New Security Threat Linked to China

McAfee also added new products to its Mvision enterprise security portfolio including endpoint detection and response and an integrated data loss prevention policy engine across endpoints, networks, and the cloud.

Encrypt that SNI: Firefox edition

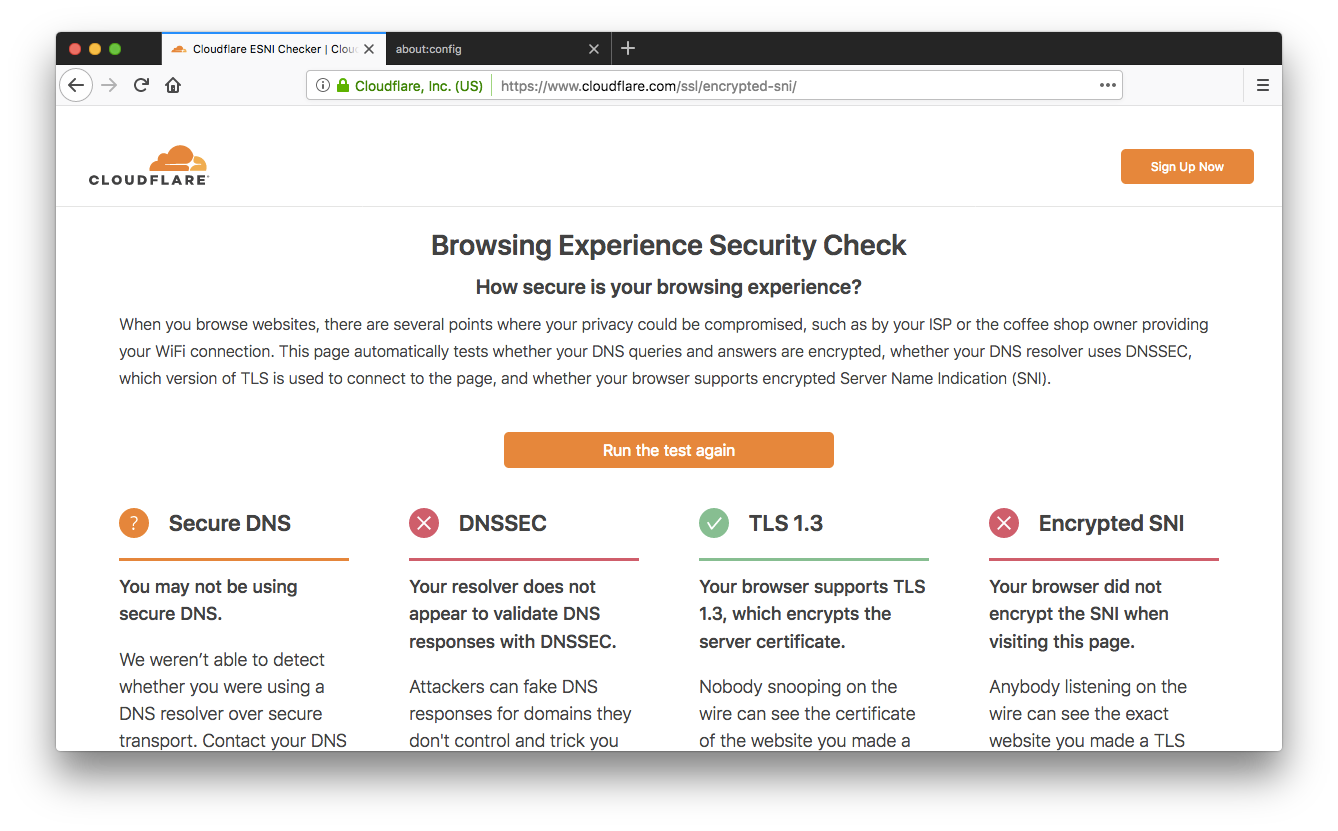

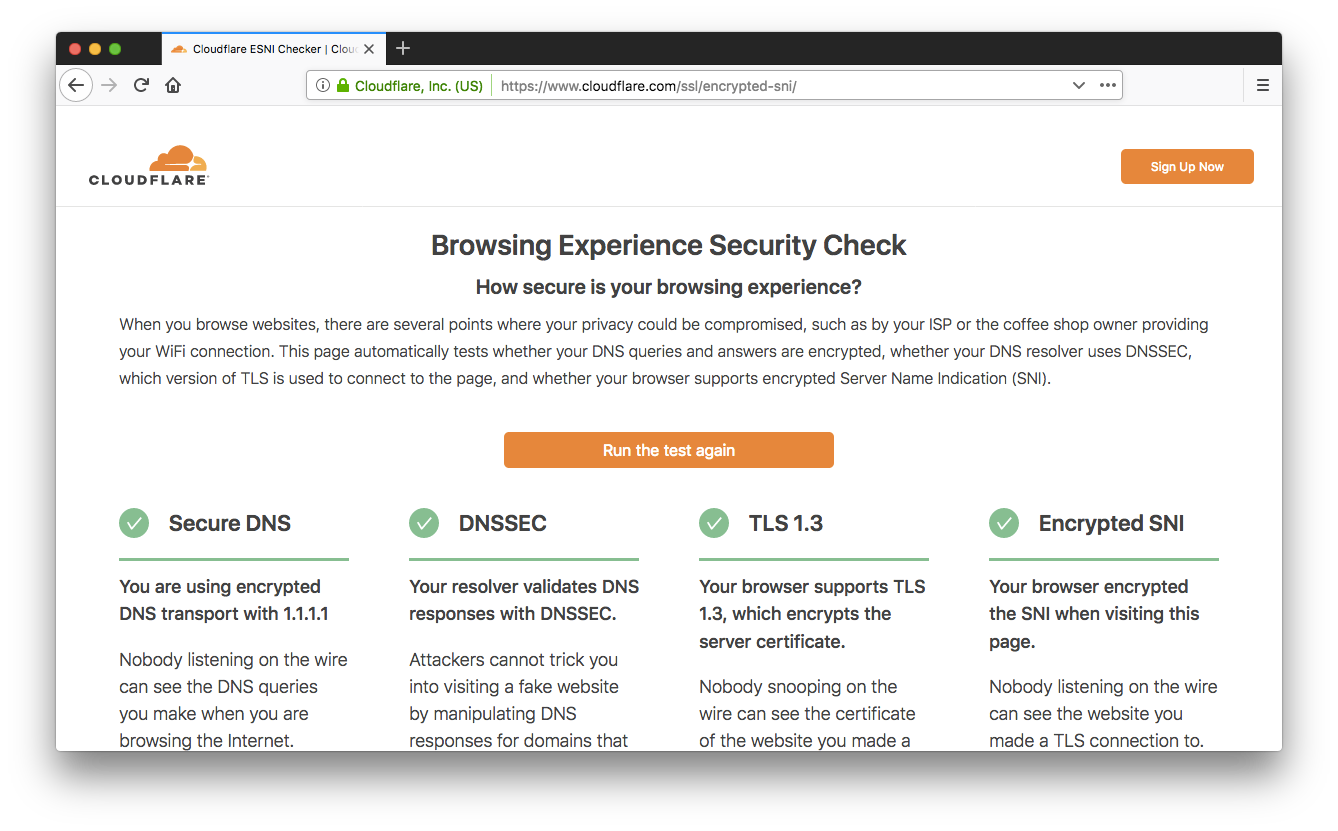

A couple of weeks ago we announced support for the encrypted Server Name Indication (SNI) TLS extension (ESNI for short). As promised, our friends at Mozilla landed support for ESNI in Firefox Nightly, so you can now browse Cloudflare websites without leaking the plaintext SNI TLS extension to on-path observers (ISPs, coffee-shop owners, firewalls, …). Today we'll show you how to enable it and how to get full marks on our Browsing Experience Security Check.

Here comes the night

The first step is to download and install the very latest Firefox Nightly build, or, if you have Nightly already installed, make sure it’s up to date.

When we announced our support for ESNI we also created a test page, https://encryptedsni.com, that you can point your browser to. It checks whether your browser / DNS configuration is providing a more secure browsing experience by using secure DNS transport, DNSSEC validation, TLS 1.3 & ESNI itself when it connects to our test page. Before you make any changes to your Firefox configuration, you might well see a result something like this:

So, room for improvement! Next, head to the about:config page and look for the network.security.esni.enabled Continue reading

How SD-WAN as a Service Solves MPLS Limitations

Having your SD-WAN delivered as-a-service helps simplify the network and free up IT resources to focus on growth-generating opportunities.

Cato Networks Adds Self-Healing Capability to Its SD-WAN

It also released an SD-WAN device built specifically for data centers. Previously, it only had a branch device that couldn’t fully meet data centers’ needs.

Dell EMC Dips Its Toes Into Security With Cyber Recovery Software

The new software shows how the lines between traditional backup and recovery and data security are being blurred.

Alibaba and Fortanix Forge Cloud Security Partnership

Fortanix’s key management and security software ensures that even cloud providers don’t have access to the customer’s encrypted data.

MUST READ: Operational Security Considerations for IPv6 Networks

A team of IPv6 security experts I highly respect (including my good friends Enno Rey, Eric Vyncke and Merike Kaeo) put together a lengthy document describing security considerations for IPv6 networks. The document is a 35-page overview of things you should know about IPv6 security, listing over a hundred relevant RFCs and other references.

No wonder enterprise IPv6 adoption is so slow – we managed to make a total mess.