VMware SDDC with NSX Expands to AWS

I previously shared this post on the LinkedIn publishing platform and my personal blog at HumairAhmed.com. There has been a lot of interest in the VMware Cloud on AWS (VMC on AWS) service since its announcement and general availability. The response to this brief introductory post confirmed the interest and value consumers see in this new service, and I hope to share more details in several follow-up posts.

VMware Software Defined Data Center (SDDC) technologies like vSphere ESXi, vCenter, vSAN, and NSX have been leveraged by thousands of customers globally to build reliable, flexible, agile, and highly available data center environments running thousands of workloads. I’ve also previously discussed how partners leverage VMware vSphere products and NSX to offer cloud environments/services to customers. In the VMworld Session NET1188BU: Disaster Recovery Solutions with NSX, I discussed how VMware Cloud Providers like iLand and IBM use NSX to provide cloud services like DRaaS. In 2016, VMware and AWS announced a strategic partnership, and at VMworld this year, general availability of VMC on AWS was announced. This new service, and how NSX is an integral component of it, is the focus of this post.

Enable nested virtualization on Google Cloud

Google Cloud Platform introduced nested virtualization support in September 2017. Nested virtualization is especially interesting to network emulation research since it allows users to run unmodified versions of popular network emulation tools like GNS3, EVE-NG, and Cloonix on a cloud instance.

Google Cloud supports nested virtualization using the KVM hypervisor on Linux instances. It does not support other hypervisors like VMware ESX or Xen, and it does not support nested virtualization for Windows instances.

In this post, I show how I set up nested virtualization in Google Cloud and I test the performance of nested virtual machines running on a Google Cloud VM instance.
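Since nested virtualization here relies on KVM, a quick sanity check on any Linux instance is to look for the hardware virtualization CPU flags. The following is a minimal sketch, not part of the original procedure: it assumes the standard `/proc/cpuinfo` layout, and the function names are my own.

```python
def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags (vmx/svm) found in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # vmx = Intel VT-x, svm = AMD-V; KVM needs one of them exposed to the VM.
            found.update(flag for flag in value.split() if flag in {"vmx", "svm"})
    return found


def supports_kvm(cpuinfo_text):
    """True when at least one CPU advertises vmx or svm."""
    return bool(virtualization_flags(cpuinfo_text))


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("Virtualization flags:", sorted(virtualization_flags(f.read())))
    except FileNotFoundError:
        print("/proc/cpuinfo not found; this check only works on Linux")
```

If the set comes back empty inside a cloud instance, nested virtualization is most likely not enabled for that instance.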

Create Google Cloud account

Sign up for a free trial on Google Cloud. Google offers a generous $300 credit that is valid for one year, so you pay nothing until either you have consumed $300 worth of services or one year has passed. I have been hacking on Google Cloud for one month, using relatively large VMs, and I have consumed only 25% of my credits.

If you already use Google services like Gmail, then you already have a Google account and adding Google Cloud to your account is easy. Continue reading

Customizing the host deploy process

In the 4.2 release we introduced the ability to customize the host-deploy process by running Ansible post-tasks after the host-deploy process finishes successfully.

The reason

Prior to the oVirt 4.2 release, administrators could customize a host's firewall rules using the engine-config option IPTablesConfigSiteCustom.

Unfortunately, writing custom iptables rules into a string value for engine-config was very user-unfriendly, and using engine-config to provide custom firewalld rules would be even worse. Because of this, we introduced Ansible integration as part of the host-deploy flow, which allows administrators to add any custom actions to be executed on the host during host deploy.

Special tasks file

As part of this role we also include additional tasks, which can be written by the user, to modify the host-deploy process, for example to open additional firewalld ports.

Those additional tasks can be added to the following file:

/etc/ovirt-engine/ansible/ovirt-host-deploy-post-tasks.yml

This post-tasks file is executed as part of the host-deploy process, just before setup networks is invoked.
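To give a feel for what such a post-tasks file might contain, here is a small sketch that renders Ansible tasks using the standard `firewalld` module. The helper names and the port number are placeholders of mine, and the output is only an illustration of the idea, not a copy of the example file shipped with ovirt-engine.

```python
def firewalld_port_task(port, protocol="tcp"):
    """Render one Ansible task (as YAML text) that opens a port with the firewalld module."""
    return "\n".join([
        f"- name: Open {port}/{protocol} in firewalld",
        "  firewalld:",
        f'    port: "{port}/{protocol}"',
        "    permanent: yes",
        "    immediate: yes",
        "    state: enabled",
    ])


def render_post_tasks(ports):
    """Render a complete tasks file body for a list of (port, protocol) pairs."""
    tasks = [firewalld_port_task(port, proto) for port, proto in ports]
    return "---\n" + "\n\n".join(tasks) + "\n"


if __name__ == "__main__":
    # Port 12345/tcp is a placeholder chosen for illustration only.
    print(render_post_tasks([(12345, "tcp")]))
```

The printed YAML could then be reviewed by hand before being saved as the post-tasks file.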

Example

An example post-tasks file is provided by the ovirt-engine installation at the following location:

/etc/ovirt-engine/ansible/ovirt-host-deploy-post-tasks.yml.example

This is just an example file. To add a task to the host-deploy flow, you need to create the file mentioned below Continue reading

oVirt roles Ansible Galaxy integration

In the 4.2 release we split our oVirt Ansible roles into separate RPM packages and separate git repositories, so users can install a specific role either from Ansible Galaxy or as an RPM package.

The reason

The main reason for splitting the roles into separate packages and git repositories was their use from AWX/Ansible Tower. Since Ansible Galaxy is only integrated with GitHub, each Ansible role must be stored in its own git repository in order to appear as a separate role in Galaxy. Previously we used a single repository for all the roles, which meant manual configuration was required to make those roles usable in AWX/Ansible Tower. As you can see in the image below, we now have many roles in Ansible Galaxy under the oVirt user:

How to install the roles

There are still two ways to install the roles: either using Ansible Galaxy or using the RPM packages available from the oVirt repositories.

Ansible Galaxy

You can now install just a single role rather than all of them at once, as in previous versions. For example, to install just the oVirt cluster upgrade role, you have to run Continue reading

Introduction to OVN and Red Hat Virtualization

Hi folks, recently my friend and colleague Tony James prepared and delivered an excellent webinar internally at Red Hat on how to configure Open Virtual Networking (OVN) in Red Hat Virtualization. For those of you who are unfamiliar with OVN, or what it offers, allow me to provide you with the proper illumination.

Background

Way back in the dark ages, the only way that mere mortals could get encapsulation, segmentation, and other benefits of SDN in RHV was via third-party integration, or via an OpenStack deployment that could be tapped into through the RHV Neutron integration. Now, though, native SDN (via OVN) is available as a Tech Preview in RHV 4.1, and I’m going to spend the next few posts going over the basics.

NOTE – Tech Preview is Red Hat’s way of providing the software bits for folks to try out, but there is no support for software in Tech Preview. The official statement is here. In short, the more interest and bugs filed against Tech Preview, the sooner it gets put in production.

The current fully supported virtual networking in RHV is built around “Linux Bridging”. It’s solid and it’s simple. That is to say that Continue reading

LVM Configuration the Easy Way

Now oVirt features a simple way to prevent a host from scanning and then activating logical volumes that are not required directly by the host. In particular, the solution addresses logical volumes on shared storage managed by oVirt, and logical volumes created by a guest in oVirt raw volumes. Why is a solution required? Because scanning and activating other logical volumes may cause data corruption, slow boot, and other issues.

The solution is configuring an LVM filter on each host, which allows LVM on a host to scan only the logical volumes required directly by the host. To achieve this, we have introduced a vdsm-tool command, config-lvm-filter, that will configure the host for you.

The new command, vdsm-tool config-lvm-filter, analyzes the current LVM configuration to decide whether a filter should be configured. Then, if the LVM filter has yet to be configured, the command generates an LVM filter option for this host and adds the option to the LVM configuration.
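To make the idea of an LVM filter more concrete, here is a rough model of how LVM evaluates one: each entry is an accept (`a`) or reject (`r`) regular expression, the first entry that matches a device path decides, and a device that matches nothing is accepted. The filter entries and device paths below are hypothetical and are not output from vdsm-tool.

```python
import re


def parse_filter(entries):
    """Parse entries such as 'a|^/dev/sda2$|' into (action, compiled_regex) pairs."""
    rules = []
    for entry in entries:
        if len(entry) < 3 or entry[0] not in "ar":
            raise ValueError(f"invalid filter entry: {entry!r}")
        delimiter = entry[1]
        if not entry.endswith(delimiter):
            raise ValueError(f"missing closing delimiter: {entry!r}")
        rules.append((entry[0], re.compile(entry[2:-1])))
    return rules


def device_allowed(device, rules):
    """First matching rule wins; a device that matches no rule is accepted."""
    for action, regex in rules:
        if regex.search(device):
            return action == "a"
    return True


if __name__ == "__main__":
    # Hypothetical filter: accept only the local PV, reject everything else.
    rules = parse_filter(["a|^/dev/sda2$|", "r|.*|"])
    for dev in ("/dev/sda2", "/dev/mapper/shared-lun"):
        print(dev, "scanned" if device_allowed(dev, rules) else "ignored")
```

With a filter of this shape, logical volumes on shared storage are never scanned by the host, which is the behavior the post describes.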

Scenario 1: An Unconfigured Host

On a host yet to be configured, the command automatically configures the LVM filter once the user confirms the operation:

# vdsm-tool config-lvm-filter

Analyzing host...

Found these mounted logical volumes on this host:

logical volume: /dev/mapper/vg0-lv_home

Continue reading

Using Mininet to test OpenStack Firewall drivers

OpenStack FWaaS project will be supporting a Layer 2 firewall based on OVS flow rules. While working on the OVS driver, I felt the need to do some quick tests to check if the flow rules are programmed correctly on the OVS bridge. Although we can run a complete devstack system within a VM, to … Continue reading

LLDP Information Now Available via the Administration Portal

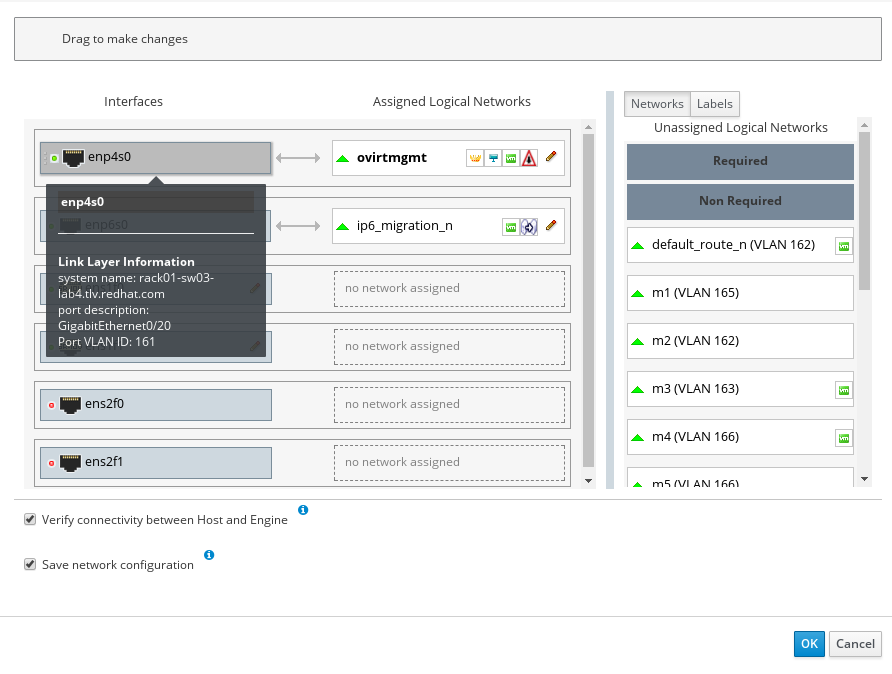

In oVirt 4.2 we have introduced support for the Link Layer Discovery Protocol (LLDP). It is used by network devices for advertising the identity and capabilities to neighbors on a LAN. The information gathered by the protocol can be used for better network configuration. Learn more about LLDP.
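For readers curious about what LLDP actually carries, the sketch below decodes the TLV (type-length-value) units inside an LLDP payload as defined by IEEE 802.1AB: a 16-bit header holding a 7-bit type and a 9-bit length, followed by the value. It has nothing to do with how oVirt collects the data; the function names are mine and the sample switch name is just borrowed from the example in this post.

```python
import struct

# TLV type numbers from IEEE 802.1AB.
TLV_NAMES = {
    0: "End of LLDPDU",
    1: "Chassis ID",
    2: "Port ID",
    3: "Time To Live",
    4: "Port Description",
    5: "System Name",
    6: "System Description",
    7: "System Capabilities",
    8: "Management Address",
    127: "Organizationally Specific",
}


def make_tlv(tlv_type, value):
    """Build one TLV: 7-bit type and 9-bit length packed into a 16-bit header."""
    return struct.pack("!H", (tlv_type << 9) | len(value)) + value


def parse_tlvs(payload):
    """Return (type, value) pairs, stopping at the End of LLDPDU TLV."""
    tlvs = []
    offset = 0
    while offset + 2 <= len(payload):
        (header,) = struct.unpack_from("!H", payload, offset)
        tlv_type, length = header >> 9, header & 0x01FF
        offset += 2
        value = payload[offset:offset + length]
        if len(value) < length:
            raise ValueError("truncated TLV")
        offset += length
        if tlv_type == 0:
            break
        tlvs.append((tlv_type, value))
    return tlvs


if __name__ == "__main__":
    payload = make_tlv(5, b"rack01-sw03-lab4") + make_tlv(0, b"")
    for tlv_type, value in parse_tlvs(payload):
        print(TLV_NAMES.get(tlv_type, "Unknown"), value)
```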

Why do you need LLDP?

When adding a host to an oVirt cluster, the network administrator usually needs to attach various networks to it. However, a modern host can have multiple interfaces, each with a non-descriptive name.

Examples

In the screenshot below, taken from the Administration Portal, a network administrator has to know to which interface to attach the network named m2 with VLAN_ID 162. Should it be interface enp4s0, ens2f0 or even ens2f1? With oVirt 4.2, the administrator can hover over enp4s0 and see that this interface is connected to peer switch rack01-sw03-lab4, and learn that this peer switch does not support VLAN 162 on this interface. By looking at every interface, the administrator can choose which interface is the right option for network m2.

A similar situation arises with the configuration of mode 4 bonding (LACP). Configuring LACP usually starts with the network administrator defining a port group Continue reading

Engine XML Brings a Smoother Flow of Data Into oVirt

On November 8, oVirt 4.2 saw the introduction of an important behind-the-scenes enhancement.

The change is associated with the exchange of information between the engine and the VDSM. It addresses the issue of multiple abstraction layers, with each layer needing to convert its input into a suitably readable format in order to report to the next layer.

This change improves data communication between the engine and Libvirt - the tool that manages platform virtualization.

Background

Previously, the configuration file for a newly created virtual machine (VM) originated in the engine as a map or dictionary. Then, in the VDSM, it was converted into an XML file that was readable by Libvirt. This process required a greater coding effort which in turn slowed down the development process.
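To illustrate the kind of conversion described above, here is a deliberately tiny sketch that turns a VM description held in a dictionary into a libvirt-style domain XML document. The dictionary keys and the function name are invented for this example; the real VDSM conversion handled far more elements and is not reproduced here.

```python
import xml.etree.ElementTree as ET


def vm_map_to_domain_xml(vm):
    """Convert a minimal VM dictionary into a libvirt-style <domain> XML string."""
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = vm["name"]
    # libvirt accepts a unit attribute on <memory>; MiB is used here for readability.
    memory = ET.SubElement(domain, "memory", unit="MiB")
    memory.text = str(vm["memory_mib"])
    ET.SubElement(domain, "vcpu").text = str(vm["vcpus"])
    return ET.tostring(domain, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical VM description; the keys are assumptions made for this sketch.
    print(vm_map_to_domain_xml({"name": "demo-vm", "memory_mib": 1024, "vcpus": 2}))
```

Every field added to the map needed matching conversion code like this, which is the extra coding effort the post refers to.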

What's changed?

Now, this map or dictionary has been replaced by engine XML, an XML configuration file that complies with the Libvirt API. VDSM now simply routes this Libvirt-readable file to/from Libvirt, in VM lifecycle (virt) related flows.

As an oVirt user, it’s business as usual.

However, if you are a developer dealing with debugging issues that involve running a VM, simply be aware that the Domain XML is now generated by ovirt-engine, Continue reading

Setting up multiple networks is going to be much faster in oVirt 4.2

Assume you have an oVirt cluster with hundreds of VM networks. Now you add a new host to the cluster. In order for it to move to the Operational state, it must have all required networks attached to it. The easiest way to do this is to attach the networks to a label, and then place that label on a NIC of the added host. However, if there are too many networks, Engine could fail to set them all up at once. This is caused by a slow VDSM setupNetworks call that is not able to finish within Engine's 180-second vdsTimeout.

The VDSM performance changes will be included in ovirt-4.2 and are currently in ovirt-master. The initscripts performance patch is targeted for EL 7.5.

The following table shows the maximum number of networks that can be handled within the vdsTimeout. The measured setupNetworks command handles one network with a static IP and N VLAN+bridge networks with no IP. The edit measurement covers moving all networks from one NIC to another.

Please note that the given numbers are for reference only.

| Installed | N (networks) | Add | Edit | Delete |
|---|---|---|---|---|
| ovirt-4.2 | 190 | 180s | 127s | 67s |
| ovirt-4.2 and patched initscripts | 350 | 138s | 176s | 89s |
| ovirt-4.1 | 150 | Continue reading |
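As a rough way to read the two complete rows above, the sketch below checks each measurement against the 180-second vdsTimeout and works out the average time per network. The numbers are copied from the table; the helper names are mine, and the averages ignore the one extra static-IP network, so treat them as ballpark figures only.

```python
VDS_TIMEOUT_SECONDS = 180

# Values copied from the two complete rows of the table above.
MEASUREMENTS = {
    "ovirt-4.2": {"networks": 190, "add": 180, "edit": 127, "del": 67},
    "ovirt-4.2 and patched initscripts": {"networks": 350, "add": 138, "edit": 176, "del": 89},
}


def fits_in_timeout(row, timeout=VDS_TIMEOUT_SECONDS):
    """True when add, edit and delete all finish within the timeout."""
    return all(row[op] <= timeout for op in ("add", "edit", "del"))


def seconds_per_network(row, operation):
    """Average seconds spent per network for the given operation."""
    return row[operation] / row["networks"]


if __name__ == "__main__":
    for name, row in MEASUREMENTS.items():
        avg = seconds_per_network(row, "add")
        print(f"{name}: fits={fits_in_timeout(row)}, add ~ {avg:.2f} s/network")
```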

Introducing oVirt 4.2.0 Beta

On October 31st, the oVirt project released version 4.2.0 Beta, available for Red Hat Enterprise Linux 7.4, CentOS Linux 7.4, or similar.

Since the release of oVirt 4.2.0 Alpha a month ago, a substantial number of stabilization fixes have been introduced.

What's new in this release?

Support for LLDP, a protocol for network devices for advertising identity and capabilities to neighbors on a LAN. LLDP information can now be displayed in both the UI and via the API. The information gathered by the protocol can be used for better network configuration.

oVirt 4.2.0 Beta features Gluster 3.12.

oVirt's hyperconverged solution now enables a single replica Gluster deployment.

OVN (Open Virtual Network) is now fully supported and recommended for isolated overlay networks. OVN is automatically deployed on the host, and made available for VM connectivity.

Snapshots can now be uploaded and downloaded via the REST API (and the SDKs).

An improvement has been introduced to the self-hosted engine. Now, the self-hosted-engine will connect to all IPs discovered, allowing both higher performance via multiple paths as well as high availability in the event that one of the targets fails.

A new Continue reading

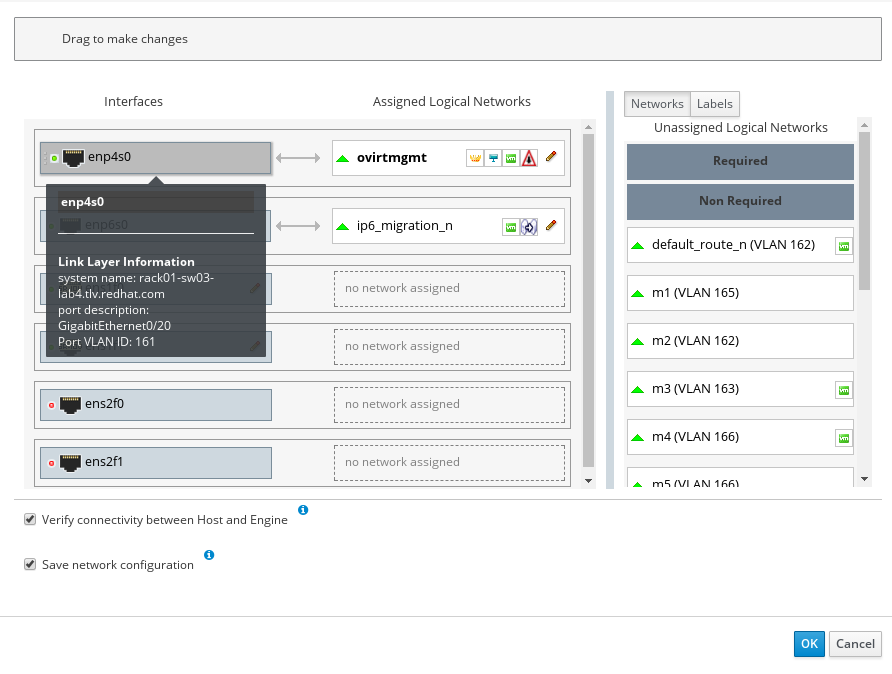

2018 FOSDEM & DEVCONF Events – Call for Proposals

On behalf of oVirt and the Xen Project, we are excited to announce that the call for proposals is now open for the Virtualization & IaaS devroom at the upcoming FOSDEM 2018.

This year will mark FOSDEM’s 18th anniversary as one of the longest-running free and open source software developer events, attracting thousands of developers and users from all over the world. FOSDEM will take place in Brussels, Belgium, February 3 & 4, 2018.

Also coming up is DEVCONF, the 10th annual, free community conference for developers, admins, and users of free and open source Linux and JBoss technologies. DEVCONF will take place in Brno, Czech Republic, January 26-28, 2018.

About FOSDEM

This Virtualization & IaaS devroom at FOSDEM is a collaborative effort, organized by dedicated folks from projects such as OpenStack, Xen Project, oVirt, QEMU, and Foreman. Featured sessions will include topics such as open source hypervisors and virtual machine managers such as Xen Project, KVM, bhyve, and VirtualBox, and Infrastructure-as-a-Service projects such as Apache CloudStack, OpenStack, oVirt, QEMU, OpenNebula, and Ganeti.

This devroom will host presentations that focus on topics of shared interest, such as KVM; libvirt; shared storage; virtualized networking; cloud security; clustering and high availability; interfacing with Continue reading

Upgrading Virtual Appliances

In every SDDC workshop I tried to persuade the audience that virtual appliances (particularly per-application instances of virtual appliances) are the way to go. I usually got questions along the lines of “who will manage and audit all these instances?” but once someone asked “and how will we upgrade them?”

Short answer: you won’t.

Read more ...

Demo: Multi-site Active-Active with NSX, F5 Networks GSLB, and Palo Alto Networks Security

I previously wrote this post on my personal blog at HumairAhmed.com. You can also see many of my earlier blogs on multisite and Cross-vCenter NSX here on the VMware Network Virtualization blog site. This post expands on my earlier post, Multi-site Active-Active Solutions with NSX-V and F5 BIG-IP DNS. Specifically, this post demonstrates deploying applications in an Active-Active model across data centers where ingress/egress is always at the data center local to the client; in other words, localized ingress/egress. Continue reading

Set up a dedicated virtualization server on Packet.net

Packet is a hardware-as-a-service vendor that provides dedicated servers on demand at very low cost. For me and my readers, Packet offers a solution to the problem of using cloud services to run complex network emulation scenarios that require hardware-level support for virtualization. Packet users may access powerful servers that empower them to perform activities they could not run on a normal personal computer.

In this post, I will describe the procedure to set up an on-demand bare metal server and to create and maintain persistent data storage for applications. I will describe a generic procedure that can be applied to any application and that works for users who access Packet services from a laptop computer running any of the common operating systems: Windows, Mac, and Linux. In a future post, I will describe how I run network emulation scenarios on a Packet server.

Introducing High Performance Virtual Machines

Bringing high performance virtual machines to oVirt!

Introducing a new VM type in oVirt 4.2.0 Alpha. A newly added checkbox in the all-new Administration Portal delivers the highest possible virtual machine performance, very close to bare metal.

What does it do?

Some of the magic includes:

- Enable Pass-Through Host CPU

- Enable IO Threads, Num Of IO Threads = 1

- Set the IO and Emulator threads pinning topology

For the full feature set, see the very detailed High Performance VM feature page
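For a sense of how the three settings listed above could look at the libvirt level, here is a sketch that builds the corresponding domain XML fragment. The element names (`cpu mode="host-passthrough"`, `iothreads`, `iothreadpin`, `emulatorpin`) are standard libvirt ones, but the mapping, the function name and the CPU sets are my assumptions, not the XML that oVirt actually generates.

```python
import xml.etree.ElementTree as ET


def high_performance_fragment(iothread_cpus, emulator_cpus):
    """Build a <domain> fragment with host CPU pass-through, one IO thread, and pinning."""
    domain = ET.Element("domain", type="kvm")
    # Pass-through host CPU: the guest sees the host CPU model as-is.
    ET.SubElement(domain, "cpu", mode="host-passthrough")
    # A single IO thread, matching "Num Of IO Threads = 1".
    ET.SubElement(domain, "iothreads").text = "1"
    # Pin the IO thread and the emulator threads to the given host CPUs.
    cputune = ET.SubElement(domain, "cputune")
    ET.SubElement(cputune, "iothreadpin", iothread="1", cpuset=iothread_cpus)
    ET.SubElement(cputune, "emulatorpin", cpuset=emulator_cpus)
    return domain


if __name__ == "__main__":
    # The CPU sets "0" and "0-1" are placeholders for illustration.
    fragment = high_performance_fragment("0", "0-1")
    print(ET.tostring(fragment, encoding="unicode"))
```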

Count me in! How do I set it up?

Simple. Go to the Administration Portal and from the vertical menu select Compute > Virtual machines. Click the New VM tab to open up the New Virtual Machine dialog box. In the General tab next to the Optimized for field, click the drop down menu and select High Performance. Click OK. Depending on your current configurations, a smart pop-up may open with a list of additional recommended manual configurations, specific to your setup. To address these recommended changes, click Cancel.

New Virtual Machine dialog box with the High Performance VM type highlighted

How VM is started

Starting up a virtual machine (VM) is not an easy task: a lot goes on hidden from plain sight, and studying it on your own is challenging. The goal of this post is to simplify the process of learning how the oVirt hypervisor works. The oVirt concept is illustrated through the process of starting up a VM, which covers everything from top to bottom.

Disclaimer: I am an engineer working close to the host part of oVirt, therefore my knowledge of the engine is limited.

Architecture

Let me start by explaining the main parts of the oVirt hypervisor architecture. It will help a lot in understanding the overall process of the VM startup flow. The following is a simplified architecture, where I omit auxiliary components and support scripts. This will allow me to focus on the core concept without distractions.

The architecture comprises three main components: 1) web UI and engine, 2) VDSM, 3) guest agent.

The web UI is where the user makes the first contact with the oVirt hypervisor. The web UI is the main command center of the engine and allows a user to control all aspects and states of Continue reading

Introducing oVirt 4.2.0 Alpha

On September 28, the oVirt project released version 4.2.0 Alpha, available for Red Hat Enterprise Linux 7.4, CentOS Linux 7.4, or similar.

This pre-release version should not be used in production, and is not feature complete.

oVirt is the open source virtualization solution that provides an awesome KVM management interface for multi-node virtualization. This maintenance version is super stable and there are some nice new features.

What's new in oVirt 4.2.0?

Here's an overview of the new main features:

The Administration Portal has been redesigned from scratch using Patternfly, a widely adopted standard in web application design that promotes consistency and usability across IT applications, through UX patterns and widgets. The result is a cleaner, more intuitive and user-friendly user interface. The old horizontal menu has been replaced by a two-level vertical menu. The system tree is gone, and its functionality has been integrated into the vertical menus. Here are some screenshots:

Dashboard

Virtual Machines View

Adding a New Virtual Machine

Storage View

An all new VM Portal for non-admin users - designed with React-based UI and Patternfly principles - replaces the existing User Portal. Built with performance and ease of use in mind, Continue reading