Comments: VMware Cloud on AWS

VMware started talking about VMware Cloud on AWS a while ago, and my first response was “yeah, it’s just vCloud Air but they wanted to get rid of CapEx, so it’s running on someone else’s servers”.

Last week Frank Denneman published a technical overview of the solution and I was mostly correct.

oVirt Now Supports OVS-DPDK

DPDK (Data Plane Development Kit) provides high-performance packet processing libraries and user space drivers. Open vSwitch uses the DPDK libraries to process packets entirely in user space. According to Intel ONP performance tests, using OVS with DPDK can substantially improve performance, increasing network packet throughput and reducing latency.

OVS-DPDK has been added to the oVirt Master branch as an experimental feature. The following post describes the OVS-DPDK installation and configuration procedures.

Please note: Accessing the OVS-DPDK feature requires installing the oVirt Master version. In addition, OVS-DPDK cannot access any features located within the Linux kernel. This includes Linux bridge, tun/tap devices, iptables, etc.

Requirements

In order to achieve the best performance, please follow the instructions at: http://dpdk.org/doc/guides-16.11/linux_gsg/nic_perf_intel_platform.html

The network card must be on the supported vendor matrix, located here: http://dpdk.org/doc/nics

Ensure that your system supports 1GB hugepages:

grep -q pdpe1gb /proc/cpuinfo && echo "1GB supported"
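The one-line check above can be extended into a small sketch that also reports 2MB support. It assumes the convention described in the DPDK getting-started guide, where the `pse` CPU flag indicates 2MB hugepages and `pdpe1gb` indicates 1GB hugepages. The function name `hugepage_support` and its optional file argument are hypothetical, added only so the check can be run against a sample file.

```shell
#!/bin/sh
# hugepage_support [CPUINFO_FILE]
# Hypothetical helper: report the largest hugepage size the CPU flags advertise.
# CPUINFO_FILE defaults to /proc/cpuinfo; passing another file allows offline checks.
hugepage_support() {
    cpuinfo_file="${1:-/proc/cpuinfo}"
    # -w matches whole words only, so "pse36" is not mistaken for "pse".
    if grep -qw pdpe1gb "$cpuinfo_file"; then
        echo "1GB supported"
    elif grep -qw pse "$cpuinfo_file"; then
        echo "2MB only"
    else
        echo "no hugepage flags found"
    fi
}

hugepage_support
```

If the function reports anything other than 1GB support, the `default_hugepagesz=1GB` kernel parameter used later in this post will not apply to that host.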

- Hardware support: Make sure VT-d / AMD-Vi is enabled in BIOS

- Kernel support: When adding a new host via the oVirt GUI, go to Hosts -> Edit -> Kernel, and add the following parameters to the kernel command line:

iommu=pt intel_iommu=on default_hugepagesz=1GB hugepagesz=1G hugepages=N

In the event that 1GB …

VMware’s Platform Revolves Around ESXi, Except Where It Can’t

Building a platform is hard enough, and there are very few companies that can build something that scales, supports a diversity of applications, and, in the case of either cloud providers or software or whole system sellers, can be suitable for tens of thousands, much less hundreds of thousands or millions, of customers.

But if building a platform is hard, keeping it relevant is even harder, and those companies who demonstrate the ability to adapt quickly and to move to new ground while holding old ground are the ones that get to make money and wield influence in the datacenter. …

VMware’s Platform Revolves Around ESXi, Except Where It Can’t was written by Timothy Prickett Morgan at The Next Platform.

VMWare Networking to Openstack Networking to AWS Networking

Understanding the networking constructs in OpenStack, VMware and AWS will help in making these networking design decisions. I will try to compare and equate network constructs in these three cloud technologies below:

| OpenStack | Amazon AWS | VMware (vSwitch / DVSwitch) |
|---|---|---|
| Virtual Network … | | |

Optimize Data Center Infrastructure: Virtualize Network Services

We’re almost done with our data center infrastructure optimization journey. In this step, we’ll virtualize the network services.

How to use oVirt Ansible roles

The recent post, An Introduction to Ansible Roles, discussed the new roles that were introduced in the oVirt 4.1.6 release. This follow-up post explains how to set up and use Ansible roles, using either Ansible Galaxy or the oVirt Ansible Roles RPM.

Ansible Galaxy

To make life easier, Ansible Galaxy stores multiple Ansible roles, including oVirt Ansible roles. To install the roles on your local machine, run the following command:

$ ansible-galaxy install ovirt.ovirt-ansible-roles

This will install your roles into the directory /etc/ansible/roles/ovirt.ovirt-ansible-roles/.

By default, Ansible only searches for roles in the /etc/ansible/roles/ directory and in your current working directory.

To change the directories where Ansible looks for roles, modify the roles_path option in the [defaults] section of the ansible.cfg configuration file.

The default location of this file is /etc/ansible/ansible.cfg.

$ sed -i 's|#roles_path = /etc/ansible/roles|roles_path = /etc/ansible/roles:/etc/ansible/roles/ovirt.ovirt-ansible-roles/roles|' /etc/ansible/ansible.cfg

For more information on changing the directories where Ansible searches for roles, see the Ansible documentation pages.
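As a more cautious variant of the sed edit above, the following sketch keeps a backup of the configuration file before rewriting roles_path. The function name `set_roles_path` and the `.bak` suffix are assumptions made for illustration, not part of Ansible or the oVirt roles. The sketch also assumes the new path contains no `|` or `&` characters, since it is substituted into a sed expression.

```shell
#!/bin/sh
# set_roles_path CFG NEW_PATH
# Hypothetical helper: back up CFG, then set (or uncomment) its roles_path line.
set_roles_path() {
    cfg="$1"
    new_path="$2"
    # Keep the original next to the edited file.
    cp "$cfg" "$cfg.bak" || return 1
    # Rewrite a commented or active roles_path line; other lines pass through unchanged.
    sed "s|^#* *roles_path *=.*|roles_path = $new_path|" "$cfg.bak" > "$cfg" || return 1
    # Print the resulting line; a non-zero status means no roles_path line was found.
    grep '^roles_path' "$cfg"
}

# Example (run as root on a host with Ansible installed):
# set_roles_path /etc/ansible/ansible.cfg \
#     "/etc/ansible/roles:/etc/ansible/roles/ovirt.ovirt-ansible-roles/roles"
```

Printing the final line makes it easy to confirm the change took effect, and the backup allows a quick rollback if a playbook stops finding its roles.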

Copy one of the examples from the directory /etc/ansible/roles/ovirt.ovirt-ansible-roles/examples/ into your working directory, then modify the needed variables and run the playbook.

oVirt Ansible Roles RPM

In the latest oVirt repositories …

SecureNet: Simulating a Secure Network with Mininet

I have been working with OpenStack (devstack) for a while and I must say it is quite convenient to bring up a test setup using devstack. At times, I still feel it is overkill to use devstack for a quick test to verify your understanding of the network/security rules/routing etc. This is where Mininet shines. …

The search for the killer app of unikernels

When a radically different technology comes along it usually takes time before we figure out how to apply it. When we had steam engines running factories there was one engine in each factory with a giant driveshaft running through the whole factory. When the electric engine came along people started replacing the giant steam engine with a giant electric motor. It took time before people understood that they could deploy several small motors in different parts of the factory and connect electric cables rather than having a common driveshaft. It takes time to understand the technology and its applicability.

The situation with unikernels is similar. We have this new thing and to some extent we’re using it to replace some general purpose operating system workloads. But we’re still very much limited by how we think about operating systems and computers.

Unikernels are radically different. Naturally the question of the killer app has come up on a number of occasions. As unikernels are quite different from the dominant operating systems of today it isn’t as easy to spot what it will be. Here I’ll try to answer why it’s hard to spot the killer app.

Defining characteristics of unikernels

Let’s start …

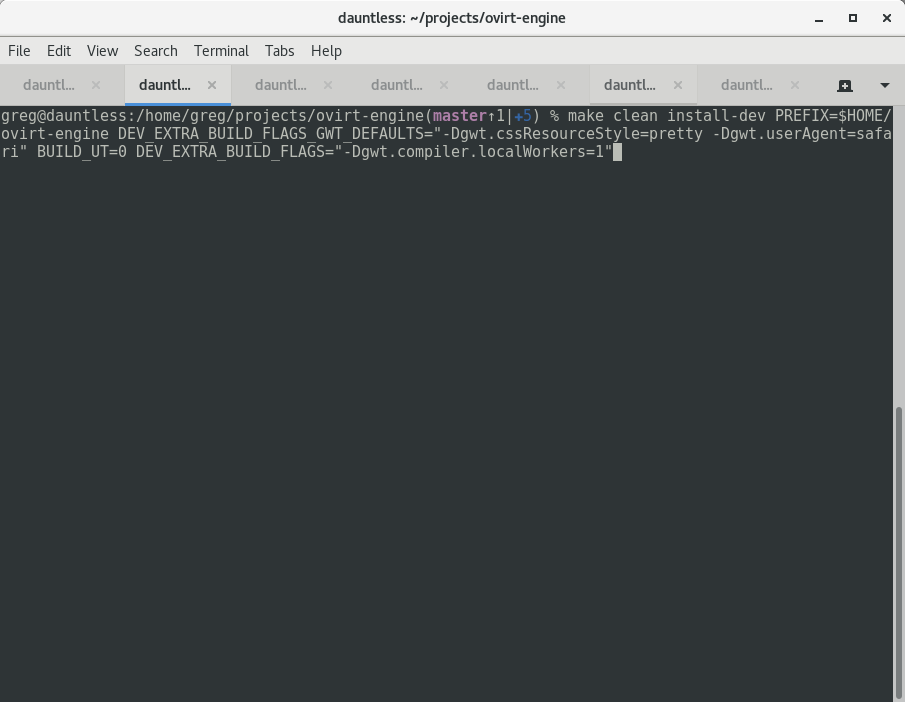

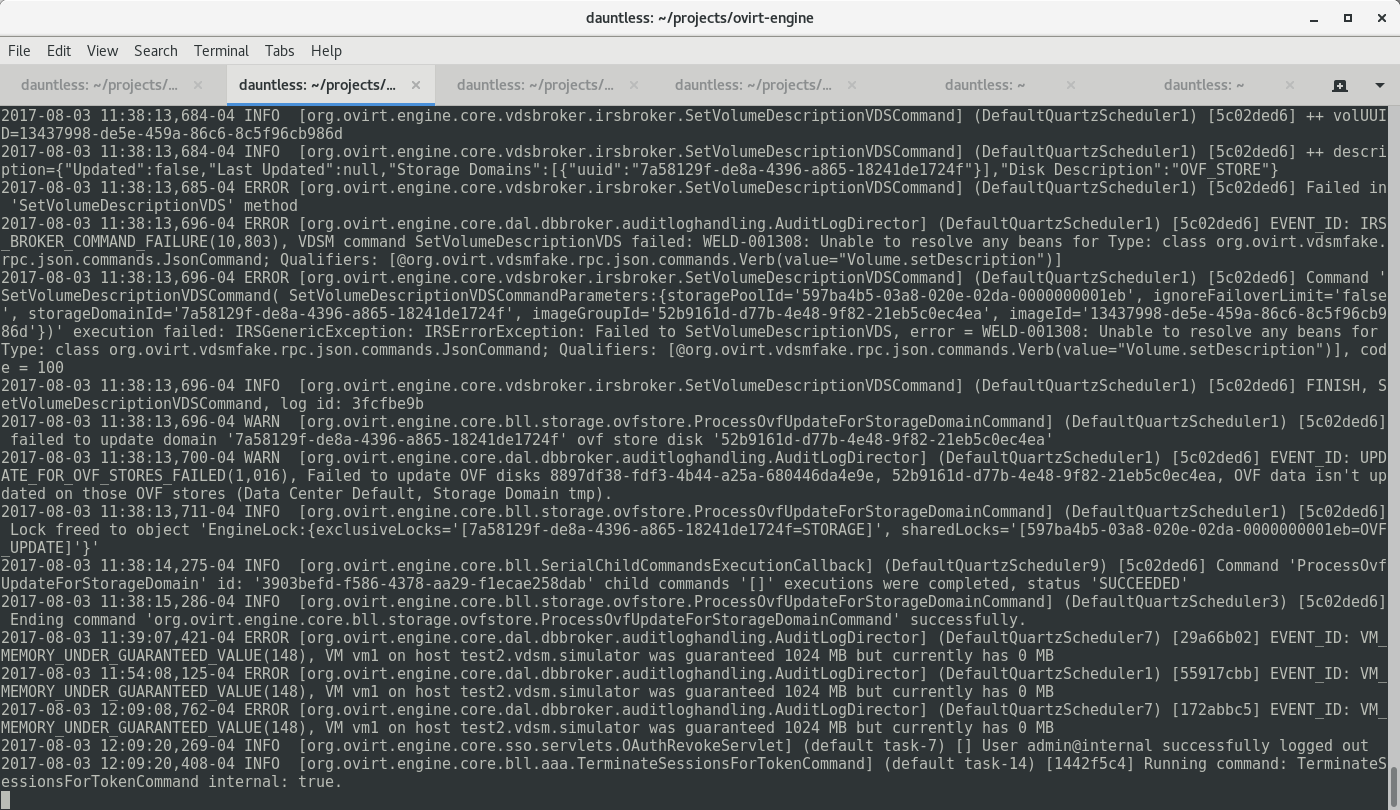

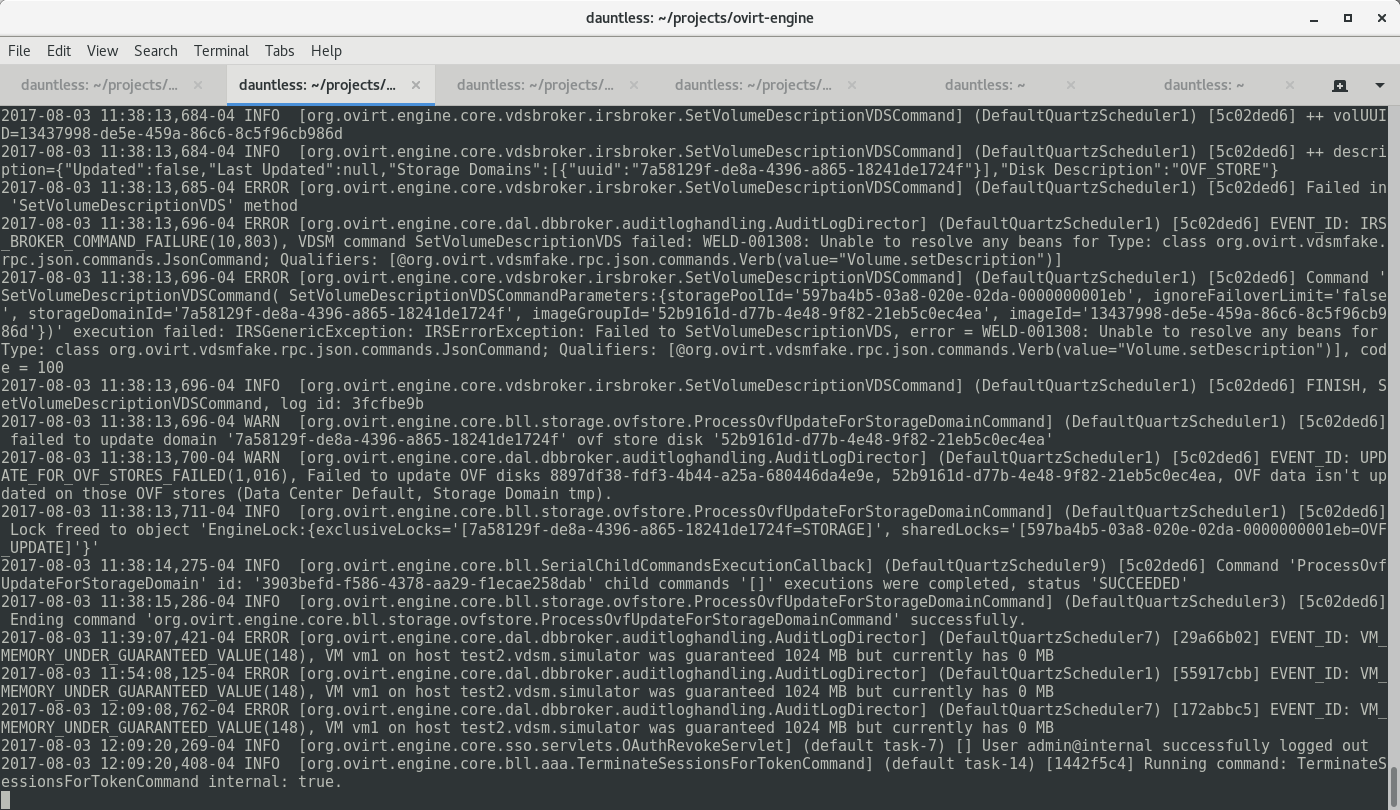

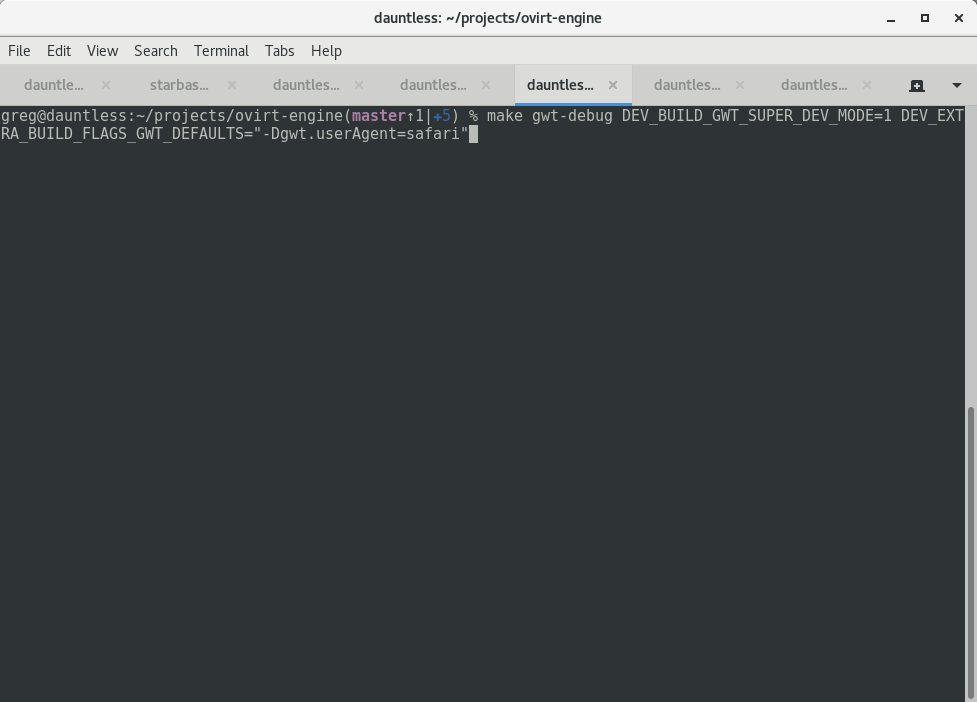

oVirt Webadmin GWT Debug Quick Refresh

As a developer, one drawback of using Google Web Toolkit (GWT) for the oVirt Administration Portal (aka webadmin) is that the GWT compile process takes an exceptionally long time. If you make a change in some code and rebuild the ovirt-engine project using make install-dev ..., you'll be waiting several minutes to test your change. In practice, such a long delay in the usual code-compile-refresh-test cycle would be unbearable.

Luckily, we can use GWT Super Dev Mode ("SDM") to start up a quick refresh-capable instance of the application. With SDM running, you can make a change in GWT and test the refreshed change within seconds.

If you want to step through code and use the Chrome debugger, oVirt and SDM don't work well together for debugging due to the oVirt Administration Portal's code and source map size. Therefore, below we demonstrate how to disable source maps.

Demo (40 seconds)

Steps

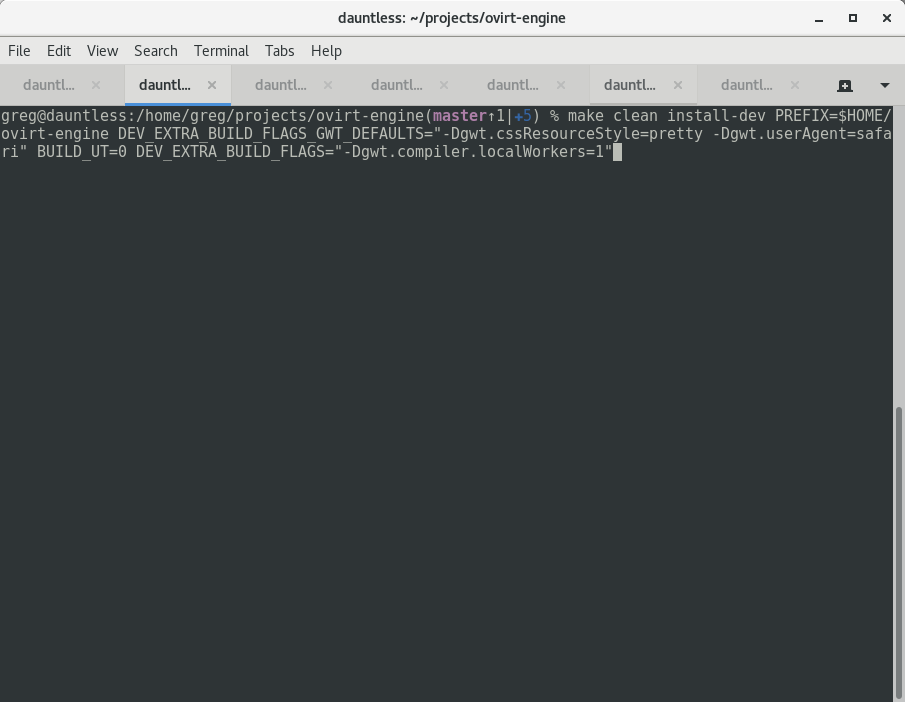

-

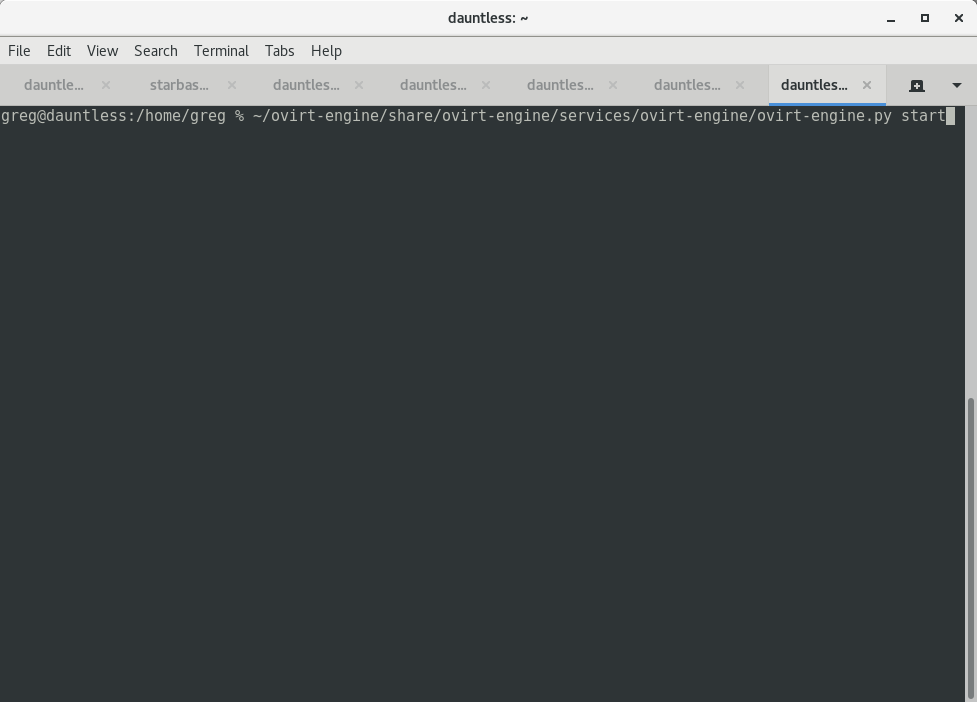

Open a terminal, build the engine normally, and start it.

``` make clean install-dev PREFIX=$HOME/ovirt-engine DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.cssResourceStyle=pretty -Dgwt.userAgent=safari" BUILD_UT=0 DEV_EXTRA_BUILD_FLAGS="-Dgwt.compiler.localWorkers=1"

…

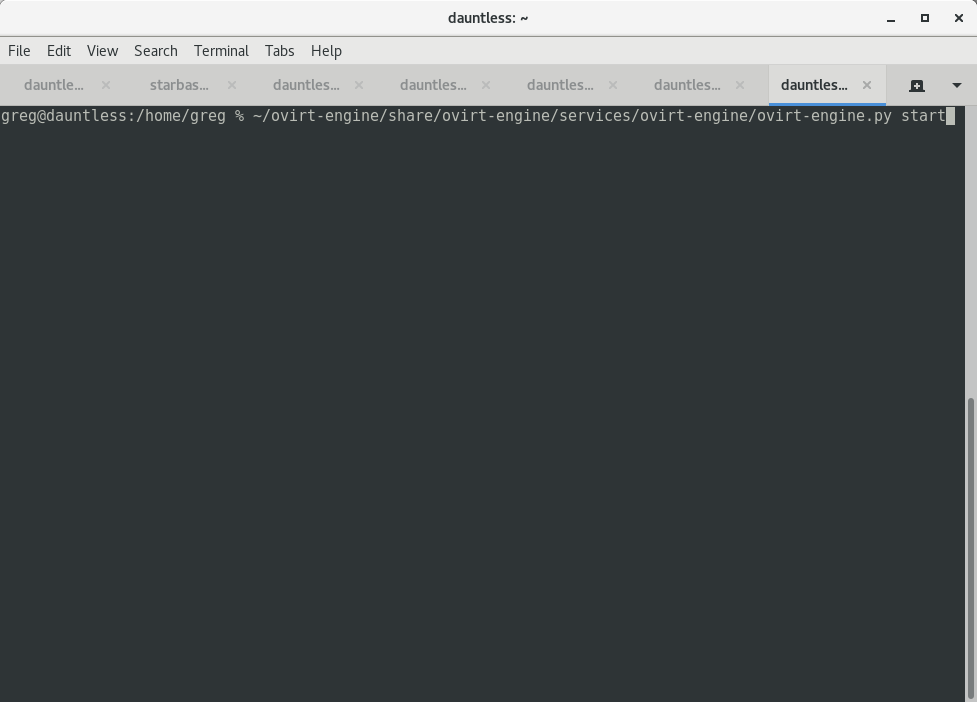

$HOME/ovirt-engine/share/ovirt-engine/services/ovirt-engine/ovirt-engine.py start

```

-

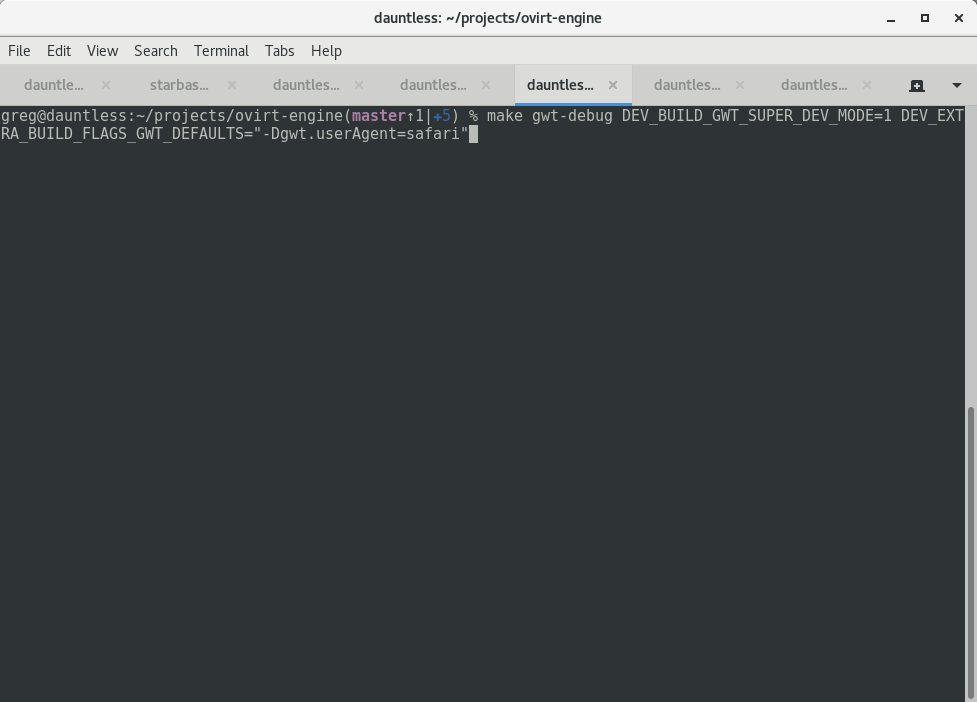

In a second terminal, run:

Chrome:

make gwt-debug DEV_BUILD_GWT_SUPER_DEV_MODE=1 DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.userAgent=safari"or

Firefox:

make gwt-debug DEV_BUILD_GWT_SUPER_DEV_MODE=1 DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.userAgent=gecko1_8"

Wait about two minutes …

Virtual networking optimization and best practices

Network optimization is a critical component of scalability and efficiency. Without solid network optimization, an organization will be confronted with rapidly growing overhead and vastly reduced efficiency. Network optimization helps a business make the most of its technology, reducing costs and even improving security. Through virtualization, businesses can leverage their technology more effectively; they just need to follow a few virtual networking best practices.

Prioritize the most important applications

Some applications are optional; others are critical. The critical ones should be given priority for system resources. These are generally cyber security suites, firewalls, and monitoring services. Optional applications may still be useful for business operations, but because they aren’t critical they can be allowed to run slowly in the event of system-wide issues.

Prioritizing security applications is especially important as there are many cyber security exploits that operate with the express purpose of flooding the system until security elements fail. When security apps are prioritized, the risk of this type of exploit is greatly reduced.

Install application monitoring services

Application monitoring services will be able to automatically detect when …

VMware NSX is something something awesome

At times I have trouble focusing on writing articles for some of the presentations I am exposed to at Tech Field Day. Because of that, I really wanted to try something different. This article is more of my free-formed thoughts about NSX and why I’m excited to deploy it at my current $job. From the time I heard that the NSX team was going to be presenting at TFD15 for 4 hours, I knew that I would be writing this article because. Unfortunately it took me far too long to gather up this half formed thought.

First things first – NSX and Micro-Segmentation

I love the concept of Micro-Segmentation that NSX enables. Think of NSX as a virtual distributed firewall that is integrated with your hypervisor, but it really is so much more. This allows you to connect a security policy directly to the vNIC of your guest VMs. Attaching it to the VM allows that policy to follow the VM anywhere and everywhere it goes. You don’t have to worry about inter- or intra-VLAN segmentation as all of that is done on each vNIC. On top of that, NSX’s firewall is PCI DSS 3.2 compliant! Another rather compelling …

Best Practices for RHV 4

Hi folks, one of the many things that I’ve been working on behind the scenes has finally seen the light of day: Best Practices for Red Hat Virtualization 4. This takes over where the product documentation leaves off. What I mean by that is this:

The product documentation is (mostly) great about telling you “how” to do the many activities related to deploying Red Hat Virtualization.

This new document tells you “why” to do many of the activities related to deploying Red Hat Virtualization. It does NOT have code examples, but it DOES have lots of things to consider. Things like:

- “Standard” deployment or “Hosted Engine” deployment

- RHEL host or RHV host

- NAS or SAN

- Lager or Ale (just kidding)

In other words, when you go to plan out your deployment, this is the document that you want to read before you paint yourself into a corner. Many of the items are best practices, like “don’t turn off SELinux”. Others are more considerations and implications, like “NAS or SAN”.

If this is something you’re interested in, you can download it here:

Best Practices for Red Hat Virtualization 4

Hope this helps,

Captain KVM

An Introduction to oVirt Ansible Roles

Today I would like to share with you some of the integration work with Ansible 2.3 that was done in the latest oVirt 4.1 release. The Ansible integration work was quite extensive and included Ansible modules that can be utilized for automating a wide range of oVirt tasks, including tiered application deployment and virtualization infrastructure management.

While Ansible has multiple levels of integrations, I would like to focus this article on oVirt Ansible roles. As stated in the Ansible documentation: “Roles in Ansible build on the idea of include files and combine them to form clean, reusable abstractions – they allow you to focus more on the big picture and only dive into the details when needed.”

We used the above logic as a guideline for developing the oVirt Ansible roles. We will cover three of the many Ansible roles available for oVirt:

For each example, I will describe the role's purpose and how it is used.

oVirt Infra

The purpose of this role is to automatically configure and manage an oVirt datacenter. It will take a newly deployed, but not yet configured, oVirt engine (RHV-M for RHV users), hosts, and storage and …

Hyper-converged infrastructure – Part 1 : Is it a real thing ?

Recently I was lucky enough to play with Cisco Hyperflex in a lab, and since it was fun to play with, I decided to write a basic blog post about the hyper-converged infrastructure concept (experts, you can move on and read something else). It has really piqued my interest. I know I may be […]

The post Hyper-converged infrastructure – Part 1 : Is it a real thing ? appeared first on VPackets.net.

Unikernels are secure. Here is why.

Per Buer is the CEO of IncludeOS. IncludeOS is a clean-slate unikernel written in C++ with performance and security in mind. Per Buer is the founder and previous CEO/CTO of Varnish Software.

We’ve created a video that explains this in 7 minutes, so you’ll have the option of watching it instead of reading it.

Various arguments have been put forth for why unikernels are the better choice security-wise, along with some contradictory opinions on why they are a disaster. I believe that from a security perspective unikernels can offer a level of security that is unprecedented in mainstream computing.

A smaller codebase

Classic operating systems are nothing if not generic. They support everything and the kitchen sink. Since they ship in their compiled form and since users cannot be expected to compile functionality as it is needed, everything needs to come prebuilt and activated. Case in point: your Windows laptop might come with various services activated (Bluetooth, file sharing, name resolution, and similar services). You might not use them but they are there. Go to some random security conference and these services will likely be the attack vector that is used to break into your laptop, even …

How To: Setting up VPN (IPSec tunnel) to an AWS VPC

- Amazon's VPN connection service that uses the customer gateway and virtual private gateway.

- Using a VPN appliance that acts as a gateway terminating the IPSec tunnel.

AWS side configuration

- Create a Virtual Private Gateway. This does not take any settings except a tag/name.

- Create a Customer Gateway.

- Make sure the Customer Gateway mimics your external / gateway router in your infrastructure (WAN IP). Select BGP or non-BGP according to your router config.

- Create a new VPC, say 10.0.0.0/16.

- Connect the Virtual Private Gateway to this VPC (VPG -> Attach VPC -> Select your VPC).

- Open the route table for this VPC and enable route propagation (VPC -> Route table -> Route Propagation -> Yes).

- Create a new VPN.

- Choose the specific VPG to associate, along with the Customer Gateway. You can create a Customer Gateway when creating a VPN if you haven't already done so.

- Set routing options. Dynamic if your gateway router …
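The console steps above map roughly onto AWS CLI calls. The sketch below prints the command sequence as a dry run rather than executing it. The variable names, the placeholder IDs (vpc-EXAMPLE, vgw-EXAMPLE, cgw-EXAMPLE, rtb-EXAMPLE), the WAN IP 203.0.113.10, and the ASN 65000 are all assumptions for illustration. In a real run each ID would come from the output of the preceding create call, so treat this as a checklist, not a turnkey script.

```shell
#!/bin/sh
# Dry run by default: every command is printed instead of executed.
# Setting AWS_CMD=aws would run the calls for real (needs configured credentials
# and real resource IDs in the variables below).
AWS_CMD="${AWS_CMD:-echo aws}"

# Hypothetical placeholder values; replace with the IDs returned by each create call.
WAN_IP="${WAN_IP:-203.0.113.10}"   # public IP of the on-premises gateway router
BGP_ASN="${BGP_ASN:-65000}"        # private ASN, assumed
VPC_ID="${VPC_ID:-vpc-EXAMPLE}"
VGW_ID="${VGW_ID:-vgw-EXAMPLE}"
CGW_ID="${CGW_ID:-cgw-EXAMPLE}"
RTB_ID="${RTB_ID:-rtb-EXAMPLE}"

vpn_setup() {
    # Virtual Private Gateway and Customer Gateway
    $AWS_CMD ec2 create-vpn-gateway --type ipsec.1
    $AWS_CMD ec2 create-customer-gateway --type ipsec.1 --public-ip "$WAN_IP" --bgp-asn "$BGP_ASN"
    # VPC, gateway attachment, and route propagation
    $AWS_CMD ec2 create-vpc --cidr-block 10.0.0.0/16
    $AWS_CMD ec2 attach-vpn-gateway --vpn-gateway-id "$VGW_ID" --vpc-id "$VPC_ID"
    $AWS_CMD ec2 enable-vgw-route-propagation --route-table-id "$RTB_ID" --gateway-id "$VGW_ID"
    # VPN connection tying the two gateways together
    $AWS_CMD ec2 create-vpn-connection --type ipsec.1 --customer-gateway-id "$CGW_ID" --vpn-gateway-id "$VGW_ID"
}

vpn_setup
```

Keeping the dry run as the default makes it safe to review the sequence before anything is created in the account.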