Palo Alto Auto-Tagging to Automate Security Actions

Auto-tagging allows the firewall to tag a policy object when it receives a log that matches specific criteria, creating an IP-to-tag or user-to-tag mapping. For example, when the firewall generates a traffic or threat log, you can configure it to tag the source IP address or user associated with that log using a specific tag name. These tags can then automatically populate policy objects such as Dynamic User Groups or Dynamic Address Groups, which in turn automate security actions within security policies.

For example, let's say I have a policy that denies traffic from the Internet to the firewall's public IP or subnet whenever someone attempts to access random ports. This policy blocks the traffic and generates a traffic log. Now, if someone tries to target our public IP on port 22 (SSH), we might want to add them to a blacklist, which is a Dynamic Address Group. We can then create another policy that references this Dynamic Address Group to block any further traffic from this IP address.
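The flow described above (matching log, then tag, then Dynamic Address Group membership) can be modelled in a few lines of Python. This is only a conceptual sketch, not PAN-OS configuration or its API: the tag name `ssh-scanner`, the log field names, and the helper functions are all hypothetical and chosen for illustration.

```python
from collections import defaultdict

# Hypothetical tag name; on a real firewall you would pick your own.
BLACKLIST_TAG = "ssh-scanner"


def matches(log):
    """Match criteria: traffic that was denied and targeted port 22 (SSH)."""
    return log["action"] == "deny" and log["dport"] == 22


def auto_tag(logs, registry=None):
    """Build an IP-to-tag mapping from the logs that match the criteria."""
    registry = defaultdict(set) if registry is None else registry
    for log in logs:
        if matches(log):
            registry[log["src"]].add(BLACKLIST_TAG)
    return registry


def dynamic_address_group(registry, tag):
    """Group membership is simply every IP that currently carries the tag."""
    return sorted(ip for ip, tags in registry.items() if tag in tags)


# Made-up traffic log entries using documentation address ranges.
logs = [
    {"src": "203.0.113.9", "dport": 22, "action": "deny"},
    {"src": "198.51.100.7", "dport": 8080, "action": "deny"},
    {"src": "192.0.2.10", "dport": 22, "action": "allow"},
]

registry = auto_tag(logs)
blocked = dynamic_address_group(registry, BLACKLIST_TAG)
print(blocked)
```

Only the source that was denied on port 22 ends up in the group; a second policy that references the group would then block everything else from that address.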

A Realistic Use Case

A realistic use case is when you want to block a source IP after multiple failed authentication attempts to GlobalProtect. Typically, you can use Continue reading

Tech Bytes: Converge Networking and Security with Fortinet APs and Switches (Sponsored)

Today on the Tech Bytes podcast we talk Ethernet switches and wireless APs with sponsor Fortinet. Yes, Fortinet. Best known for firewalls and other security products, Fortinet has a full line of switches for the campus and data center, as well as a robust portfolio of wireless APs. Fortinet is here to make its case... Read more »

Anthropic And OpenAI Show Why Musk Should Build A Cloud

If Amazon is going to make you pay for the custom AI advantage that it wants to build over rivals Google and Microsoft, then it needs to have the best models possible running on its homegrown accelerators. …

Anthropic And OpenAI Show Why Musk Should Build A Cloud was written by Timothy Prickett Morgan at The Next Platform.

Topology aware flow analytics with NVIDIA NetQ

NVIDIA Cumulus Linux 5.11 for AI / ML describes how NVIDIA 400/800G Spectrum-X switches combined with the latest Cumulus Linux release deliver enhanced real-time telemetry that is particularly relevant to the AI / machine learning workloads that Spectrum-X switches are designed to handle.

This article shows how to extract topology from an NVIDIA fabric in order to perform advanced fabric-aware analytics, for example: detect flow collisions, trace flow paths, and de-duplicate traffic.

In this example, we will use NVIDIA NetQ, a highly scalable, modern network operations toolset that provides visibility, troubleshooting, and validation of your Cumulus and SONiC fabrics in real time.

netq show lldp json

For example, the NetQ Link Layer Discovery Protocol (LLDP) service simplifies the task of gathering neighbor data from switches in the network, and with the json option, makes the output easy to process with a Python script, for example, lldp-rt.py.
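To illustrate the kind of processing such a script performs, here is a minimal sketch that collapses LLDP neighbor records into a set of links. It is not the lldp-rt.py script itself. The input field names (`hostname`, `ifname`, `peerHostname`, `peerIfname`) and the output layout (`links` keyed by link name, each with `node1`, `port1`, `node2`, `port2`) are assumptions made for this example, so check them against the actual NetQ output and the sFlow-RT topology documentation before relying on them.

```python
import json


def lldp_to_links(records):
    """Collapse per-direction LLDP neighbor records into undirected links."""
    links = {}
    seen = set()
    for rec in records:
        local = (rec["hostname"], rec["ifname"])
        peer = (rec["peerHostname"], rec["peerIfname"])
        # Each link is reported once from each end; sort the two endpoints
        # so both reports produce the same key.
        key = tuple(sorted([local, peer]))
        if key in seen:
            continue
        seen.add(key)
        (node1, port1), (node2, port2) = key
        links[f"L{len(links) + 1}"] = {
            "node1": node1,
            "port1": port1,
            "node2": node2,
            "port2": port2,
        }
    return {"links": links}


# Made-up records: the same leaf1/spine1 link seen from both ends.
sample = [
    {"hostname": "leaf1", "ifname": "swp1",
     "peerHostname": "spine1", "peerIfname": "swp1"},
    {"hostname": "spine1", "ifname": "swp1",
     "peerHostname": "leaf1", "peerIfname": "swp1"},
]

topology = lldp_to_links(sample)
print(json.dumps(topology, indent=2))
```

De-duplicating by sorted endpoint pair keeps one entry per physical link, which is what fabric-aware analytics such as path tracing need.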

The simplest way to try sFlow-RT is to use the pre-built sflow/topology Docker image that packages sFlow-RT with additional applications that are useful for monitoring network topologies.

docker run -p 6343:6343/udp -p 8008:8008 sflow/topology

Configure Cumulus Linux to stream sFlow telemetry to sFlow-RT on UDP port 6343 (the default for Continue reading

EVPN Designs: EVPN IBGP over IPv4 EBGP

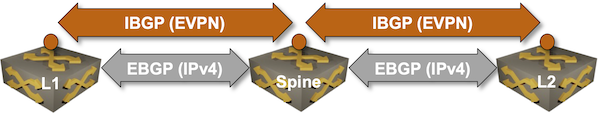

We’ll conclude the EVPN designs saga with the “most creative” design promoted by some networking vendors: running an IBGP session (carrying EVPN address family) between loopbacks advertised with EBGP IPv4 address family.

Oversimplified IBGP-over-EBGP design

There’s just a tiny gotcha in the above Works Best in PowerPoint diagram. IBGP assumes the BGP neighbors are in the same autonomous system while EBGP assumes they are in different autonomous systems. The usual way out of that OMG, I painted myself into a corner situation is to use BGP local AS functionality on the underlay EBGP session:

From Python to Go 004. Arrays, Lists, Slices.

Hello my friend,

In this post we will start exploring how to store multiple values in a single variable. There are multiple ways to achieve this in both Python and Go (Golang). The first one we’ll cover is an ordered collection of elements. We say “collection” because the name differs between the languages: Python calls it a list, whilst Go (Golang) uses the term slice. Let’s see what is similar and what is not between Python and Go (Golang).
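As a quick refresher on the Python side, here is a minimal sketch of list basics; the device names are made up for illustration.

```python
# A Python list is an ordered, mutable collection of elements.
devices = ["leaf1", "leaf2", "spine1"]

# Elements are accessed by zero-based index.
first = devices[0]

# Lists grow dynamically.
devices.append("spine2")

# Slicing a list returns a new list (a shallow copy of that range).
leaves = devices[0:2]
leaves.append("leaf3")

print(first)
print(devices)
print(leaves)
```

Note that appending to `leaves` does not change `devices`, because a Python slice expression copies the selected elements. A Go slice, by contrast, is a view onto an underlying array, so this is one of the places where the two languages behave differently.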

Who Else Deals With Network Automation?

Just this week there was a massive event, AutoCon2, which is the biggest gathering of network automation enthusiasts from all over the world. People from different industries shared their experience, success stories and challenges. Whilst many of those insights are different, all of them revolve around the central idea that network automation is a must.

We started delivering network automation training before it became mainstream, and we constantly update the content with new material. Start your training today to board the train, which is just leaving the platform:

We offer the following training programs in network automation for you:

Cisco CML Free Tier (No Kidding)

I first saw on LinkedIn that Cisco is introducing a free tier of its CML platform (starting from version 2.8). My initial reaction was, 'Cisco giving something away for free? That can't be true!' But it turns out, it is. I couldn't wait to try it. I believe it’s limited to five nodes, but I’ll take it.

I could never justify paying $199 per year when there are free alternatives available (EVE-NG, GNS3, Containerlab). If it were a one-time purchase, I might have gone for it, but $199 every year is just too much for me.

In this post, I'll cover how to get the free version and how to install it on VMware Workstation.

Downloading the Image

Head over to the Cisco download page and select CML version 2.8 Free Tier. As I mentioned earlier, you need a Cisco account, which is easy to create. Depending on your installation method, you can choose either the bare-metal or OVA Continue reading

Ford Lead Says HPC, GPUs, AI Are Keys to Driving Progress

Like all auto manufacturers, Ford is pursuing what can only be described as a profound digital transformation. …

Ford Lead Says HPC, GPUs, AI Are Keys to Driving Progress was written by Nicole Hemsoth Prickett at The Next Platform.

Microsoft Is First To Get HBM-Juiced AMD CPUs

Intel was the first of the major CPU makers to add HBM stacked DRAM memory to a CPU package, with the “Sapphire Rapids” Max Series Xeon 5 processors. With the “Granite Rapids” Xeon 6, however, Intel abandoned HBM memory in favor of what it hoped would be more mainstream MCR DDR5 main memory, which has multiplexed ranks to boost bandwidth by nearly 2X over regular DDR5 memory. …

Microsoft Is First To Get HBM-Juiced AMD CPUs was written by Timothy Prickett Morgan at The Next Platform.

NSF Comes to SC24 With Money Map, AI Blueprint

You’d be forgiven for thinking the annual Supercomputing Conference (SC24) was a global AI-specific event this year. …

NSF Comes to SC24 With Money Map, AI Blueprint was written by Nicole Hemsoth Prickett at The Next Platform.

HN 759: Deploying the BGP Monitoring Protocol (BMP) at ISP Scale

The BGP Monitoring Protocol, or BMP, is an IETF standard. With BMP you can send BGP prefixes and updates from a router to a collector before any policy filters are applied. Once collected, you can analyze this routing data without any impact on the router itself. On today’s Heavy Networking, we talk with Bart Dorlandt,... Read more »

Hedge 251: Regulating Local Data Centers

What impact do local regulations have on our ability to build and operate new data centers in the United States? What impact do these regulations have on local economies? Juan Londoño, from the Taxpayers Protection Alliance, joins Ned Bellavance and Russ White to discuss yet another part of the network engineering world.

TNO009: From Network Monitoring to Observability: Make the Leap for Better NetOps

Traditional network monitoring was built around SNMP and logs. And while there’s still a role for these sources, network observability aims to incorporate more data to help you build a holistic picture of the network and its behavior and performance. These sources can include flows, streaming telemetry, APIs, NETCONF, the CLI, deep packet inspection, synthetic... Read more »

Dynamic BGP Peers

You might have an environment where a route reflector (or a route server) has dozens or hundreds of BGP peers. Configuring them by hand is a nightmare; you should either build a decent automation platform or use dynamic BGP neighbors – a feature you can practice in the next lab exercise.

Click here to start the lab in your browser using GitHub Codespaces (or set up your own lab infrastructure). After starting the lab environment, change the directory to session/9-dynamic and execute netlab up.
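The idea behind dynamic BGP neighbors is that the router accepts sessions from any address within a configured prefix instead of from individually listed peers. The following is a conceptual sketch of that acceptance check, not the implementation of any particular network operating system: the listen range, the AS number, and the function name are hypothetical.

```python
import ipaddress

# Hypothetical listen ranges: prefix -> AS number expected from peers in it.
LISTEN_RANGES = {
    ipaddress.ip_network("10.0.0.0/24"): 65000,
}


def accept_dynamic_peer(peer_ip, peer_as):
    """Accept a session only if the peer address falls inside a configured
    range and announces the AS number expected for that range."""
    addr = ipaddress.ip_address(peer_ip)
    for prefix, expected_as in LISTEN_RANGES.items():
        if addr in prefix and peer_as == expected_as:
            return True
    return False


print(accept_dynamic_peer("10.0.0.42", 65000))
print(accept_dynamic_peer("10.0.1.42", 65000))
print(accept_dynamic_peer("10.0.0.42", 65001))
```

With a single range entry, a route reflector can serve every client in the prefix without a per-neighbor configuration line.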

SC24 Over 10 Terabits per Second of WAN Traffic

The SC24 WAN Stress Test chart shows 10.3 Terabits per second of WAN traffic to The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24), held this week in Atlanta. The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

SC24 Real-time RoCEv2 traffic visibility describes a demonstration of wide area network bulk data transmission using RDMA over Converged Ethernet (RoCEv2) flows typically seen in AI/ML data centers. In the example, sustained transmissions of 3.2Tbits/second from sources geographically distributed around the United States were demonstrated.

SC24 Dropped packet visibility demonstration shows how the sFlow data model integrates three telemetry streams: counters, packet samples, and packet drop notifications. Each type of data is useful on its own, but together they provide the comprehensive network-wide observability needed to drive automation. Real-time network visibility is particularly relevant to AI / ML data center networks, where congestion and dropped packets can result in serious performance degradation. In this screen capture you can see multiple 400Gbits/s RoCEv2 flows.

SC24 SCinet traffic describes the architecture of the real-time monitoring system used to Continue reading

What is Network Automation?

Ever since AutoCon1, I've been trying to define Network Automation, at least in my own mind. The thinking is that we need to define terms before we can tackle solutions. In a jet-lagged, sleep-deprived moment, it occurred to me that NAF is trying to help us go from a single-celled organism to a READ MORE

The post What is Network Automation? appeared first on The Gratuitous Arp.