AMD Boasts First Mainstream 64-Core Processor at CES

AMD CEO Lisa Su described the Ryzen Threadripper 3990X as the “very first 64-core processor in...

From architecture to operations, 5G networks have the potential to drive industries towards digital...

Company President Cristiano Amon said the product will be “deployed commercially at scale in the...

Ten years ago, when Cloudflare was created, the Internet was a place that people visited. People still talked about ‘surfing the web’ and the iPhone was less than two years old, but on July 4, 2009, large-scale DDoS attacks were launched against websites in the US and South Korea.

Those attacks highlighted how fragile the Internet was and how all of us were becoming dependent on access to the web as part of our daily lives.

Fast forward ten years and the speed, reliability and safety of the Internet are paramount as our private and work lives depend on it.

We started Cloudflare to solve one half of every IT organization's challenge: how do you ensure the resources and infrastructure that you expose to the Internet are safe from attack, fast, and reliable. We saw that the world was moving away from hardware and software to solve these problems and instead wanted a scalable service that would work around the world.

To deliver that, we built one of the world's largest networks. Today our network spans more than 200 cities worldwide and is within milliseconds of nearly everyone connected to the Internet. We have built the capacity to stand...

Your experience using the Internet has continued to improve over time. It’s gotten faster, safer, and more reliable. However, you probably have to use a different, worse equivalent of it when you do your work. While the Internet kept getting better, businesses and their employees were stuck using their own private networks.

In those networks, teams hosted their own applications, stored their own data, and protected all of it by building a castle and moat around that private world. This model hid internally managed resources behind VPN appliances and on-premise firewall hardware. The experience was awful, for users and administrators alike. While the rest of the Internet became more performant and more reliable, business users were stuck in an alternate universe.

That legacy approach was less secure and slower than teams wanted, but the corporate perimeter mostly worked for a time. However, that began to fall apart with the rise of cloud-delivered applications. Businesses migrated to SaaS versions of software that previously lived in that castle and behind that moat. Users needed to connect to the public Internet to do their jobs, and attackers made the Internet unsafe in sophisticated, unpredictable ways - which opened up every business to a...

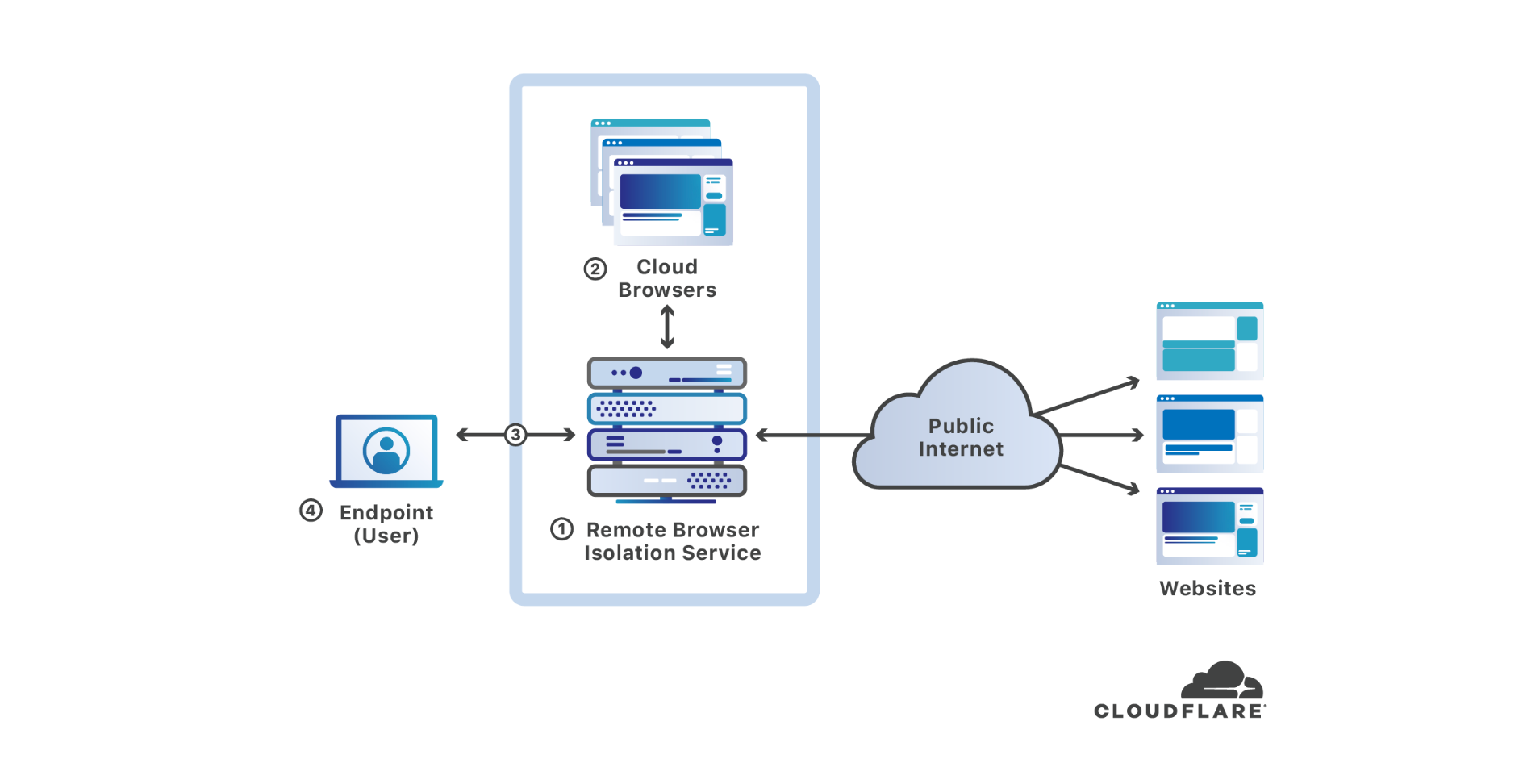

Cloudflare announced today that it has purchased S2 Systems Corporation, a Seattle-area startup that has built an innovative remote browser isolation solution unlike any other currently in the market. The majority of endpoint compromises involve web browsers — by putting space between users’ devices and where web code executes, browser isolation makes endpoints substantially more secure. In this blog post, I’ll discuss what browser isolation is, why it is important, how the S2 Systems cloud browser works, and how it fits with Cloudflare’s mission to help build a better Internet.

It’s been more than 30 years since Tim Berners-Lee wrote the project proposal defining the technology underlying what we now call the world wide web. What Berners-Lee envisioned as being useful for “several thousand people, many of them very creative, all working toward common goals”[1] has grown to become a fundamental part of commerce, business, the global economy, and an integral part of society used by more than 58% of the world’s population[2].

The world wide web and web browsers have unequivocally become the platform for much of the productive work (and play) people do every day. However, as the pervasiveness...

The certification, developed by the quasi-standards body MEF, aims to help service providers...

The number of layer-2 tricks we use to make enterprise networking work never ceases to amaze me - from shared IP addresses used by various clustering solutions (because it’s too hard to read the manuals and configure DNS) to shared MAC addresses used by first-hop router redundancy protocols (because it would be really hard to send a Gratuitous ARP message on failover) and all sorts of shenanigans we’re forced to engage in to enable running servers to be moved willy-nilly around the Earth.

The deal marks the first cybersecurity acquisition of 2020, and it’s the largest-ever enterprise...

There are at least two – and possibly more – paths to make Arm processors competitive with the Intel and now AMD X86 incumbent processors in the datacenter. …

The Other Way To Bring Arm CPUs To Servers was written by Timothy Prickett Morgan at The Next Platform.