Day Two Cloud 015: How To Prepare For And Run Azure Stack

What exactly are Day Two operations for Azure Stack? Does your company have the skill sets to properly manage and support your newly deployed hybrid cloud infrastructure? Today's episode, with guest Kristopher Turner, examines all the things you have to account for when planning and then running this integrated system.

The post Day Two Cloud 015: How To Prepare For And Run Azure Stack appeared first on Packet Pushers.

Working Together for an Internet for Women

How can we get more girls and young women involved in the Internet?

Since 2017, the Internet Society’s Women SIG has developed global actions to promote gender equality and to develop digital skills and leadership among girls and young women.

With the support of several Chapters of the Internet Society, we’ve organized global face-to-face and virtual events on security and privacy issues focused on girls and women. But this work can’t be done alone, which is why we’ve promoted collaboration within organizations, government, civil society, companies, academia, and the technical community to organize events that have a positive impact on the Internet community. (We also collaborate with EQUALS Global Partnership.)

This year, to commemorate the International Day of Girls in ICT, we organized a series of workshops focused on digital skills for girls. The day, promoted by the International Telecommunication Union (ITU), aims to reduce the digital gender gap and to encourage and motivate girls to pursue tech careers. The main node was organized in conjunction with the Internet Society Chapter in Guatemala and the Spain Cultural Center in Guatemala.

We also had a global celebration in El Salvador, Mexico, Panama, Honduras, Hong Kong, Burkina Faso, Senegal, Armenia, Continue reading

Tigera Secure 2.5 – Implement Kubernetes Network Security Using Your Firewall Manager

We are excited to announce the general availability of Tigera Secure 2.5. With this release, security teams can now create and enforce security controls for Kubernetes using their existing firewall manager.

Container and Kubernetes adoption is gaining momentum in enterprise organizations. Gartner estimates that 30% of organizations are running containerized applications today, and they expect that number to grow to 75% by 2022. That’s tremendous growth considering the size and complexity of enterprise IT organizations. It’s difficult to put exact metrics on the growth in Kubernetes adoption; however, KubeCon North America attendance is a good proxy. KubeCon NA registrations grew from 1,139 in 2016 to over 8,000 in 2018 and are expected to surpass 12,000 this December, and the share of corporate registrations has increased dramatically.

Despite this growth, Kubernetes is a tiny percentage of the overall estate the security team needs to manage; sometimes less than 1% of total workloads. Security teams are stretched thin and understaffed, so it’s no surprise that they don’t have time to learn the nuances of Kubernetes and rethink their security architecture, workflow, and tools for just a handful of applications. That leads to stalled deployments and considerable friction between the application, infrastructure, Continue reading

IPv6 SLAAC Host OS Address Allocation

While rebuilding my v6 lab with a variety of different host OSes, I found that there is no single approach to address generation in IPv6 SLAAC networks.

I've recorded my findings below for future reference, and also as a way to delve deeper into the murky world of IPv6 address generation and shine a light on just what all this stuff in our 'ifconfig/ip add' output actually is.

TL;DR

The table below summarizes my observations:

| OS | Address Generation | Temporary Address |
|---|---|---|
| macOS 10.14.6 | Stable-privacy | Yes |
| Ubuntu 18.04 | Stable-privacy | Yes |
| Debian 10 | EUI-64 👈👀 | Yes |
| Fedora 30 | Stable-privacy | No 👈👀 |
| Windows 10 1903 | Randomized IID | Yes |
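Of the schemes in the table, EUI-64 is the one that can be worked out by hand from the interface's MAC address: flip the universal/local bit of the first octet and insert `ff:fe` in the middle. The following is a minimal sketch of that derivation, not something taken from the lab itself. The function names, the MAC address, and the `2001:db8::/64` documentation prefix are all illustrative assumptions.

```python
import ipaddress


def eui64_interface_id(mac: str) -> bytes:
    """Build the 64-bit modified EUI-64 interface identifier from a 48-bit MAC."""
    octets = bytearray(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    # Flip the universal/local bit (0x02) of the first octet.
    octets[0] ^= 0x02
    # Insert ff:fe between the OUI half and the device half of the MAC.
    return bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])


def slaac_eui64_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the EUI-64 interface identifier."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("this sketch only handles /64 prefixes")
    iid = int.from_bytes(eui64_interface_id(mac), "big")
    return ipaddress.IPv6Address(int(network.network_address) | iid)


# Example values only: a made-up MAC and the IPv6 documentation prefix.
print(slaac_eui64_address("2001:db8::/64", "00:1a:2b:3c:4d:5e"))
# prints 2001:db8::21a:2bff:fe3c:4d5e
```

Because the identifier is derived directly from the MAC, the same host ends up with the same lower 64 bits on every network it joins, which is why the Debian row stands out.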

Lab Setup

I'm running a basic LAN topology with a combination of hardware (Windows 10 and macOS), plus virtual machines for the Linux OSes.

- Virtualization platform: Hyper-V on Windows Server 2019
- Host OS:
  - Windows 10 running on an HP ZBook
  - macOS version 10.14.6 running on a 2017 MBP
  - Ubuntu 18.04.3
  - Fedora 30
  - Debian 10 Stable
- Gateway: ArubaOS-Switch 2930F-8G-PoE+-2SFP+ running WC.16.07.0002

Gateway configuration:

ipv6 Continue reading

Real World APIs: Snagging a Global Entry Interview

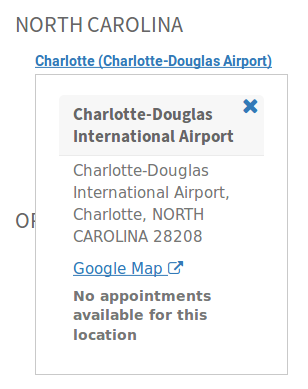

As my new job will have me traveling a bit more often, I finally bit the bullet and signed up for Global Entry (which is similar to TSA PreCheck but works for international travel as well). A few days after submitting my application and payment, I was conditionally approved. The next step was to schedule an “interview,” which is essentially a 10-minute appointment where they ask a few questions and take biometrics. The interview must be done in person at one of relatively few CBP locations.

Here in Raleigh, North Carolina, my two closest locations are Richmond and Charlotte. Unfortunately, CBP’s scheduling portal indicated no availability for new appointments at either location. No additional context is provided, so I have no idea whether I should keep trying every few days, or attempt to schedule an appointment at a remote location to coincide with future travel.

My only hope at this point is that spots will eventually open up as other applicants cancel their appointments or CBP adds sufficient staff to meet demand. But that means manually logging into the portal, completing two-factor authentication, and checking both of my desired appointment locations each and every time.

Sounds like a great use Continue reading
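The repeated manual check described above could be sketched as a small function that asks for open slots at each desired location and reports only the ones with availability. This is a rough illustration under stated assumptions, not the author's script: `find_open_slots` and `fake_fetch` are hypothetical names, the appointment time is made up, and no real CBP endpoint or response format is assumed. The lookup is passed in as a callable so a real client could be swapped in.

```python
from typing import Callable, Dict, Iterable, List


def find_open_slots(
    locations: Iterable[str],
    fetch_slots: Callable[[str], List[str]],
) -> Dict[str, List[str]]:
    """Return only the locations that currently report open appointment slots."""
    open_slots: Dict[str, List[str]] = {}
    for location in locations:
        slots = fetch_slots(location)  # hypothetical lookup supplied by the caller
        if slots:
            open_slots[location] = slots
    return open_slots


def fake_fetch(location: str) -> List[str]:
    """Stand-in for a real lookup; the data here is invented for the example."""
    canned = {"Richmond": [], "Charlotte": ["2019-09-03T10:00"]}
    return canned[location]


print(find_open_slots(["Richmond", "Charlotte"], fake_fetch))
# prints {'Charlotte': ['2019-09-03T10:00']}
```

Running such a check on a schedule and sending a notification when the result is non-empty would replace the log-in-and-look routine.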

Sponsored Post: Educative, PA File Sight, Etleap, PerfOps, InMemory.Net, Triplebyte, Stream, Scalyr

Who's Hiring?

- Triplebyte lets exceptional software engineers skip screening steps at hundreds of top tech companies like Apple, Dropbox, Mixpanel, and Instacart. Make your job search O(1), not O(n). Apply here.

- Need excellent people? Advertise your job here!

Cool Products and Services

- Grokking the System Design Interview is a popular course on Educative.io (taken by 20,000+ people) that's widely considered the best System Design interview resource on the Internet. It goes deep into real-world examples, offering detailed explanations and useful pointers on how to improve your approach. There's also a no questions asked 30-day return policy. Try a free preview today.

- PA File Sight - Actively protect servers from ransomware, audit file access to see who is deleting files, reading files or moving files, and detect file copy activity from the server. Historical audit reports and real-time alerts are built-in. Try the 30-day free trial!

- For heads of IT/Engineering responsible for building an analytics infrastructure, Etleap is an ETL solution for creating perfect data pipelines from day one. Unlike older enterprise solutions, Etleap doesn’t require extensive engineering work to set up, maintain, and scale. It automates most ETL setup and Continue reading

2019 Indigenous Connectivity Summit Training: Empowering Communities to Create Connections on Their Own Terms

Indigenous communities across North America are working to bridge the digital divide.

Each year the Indigenous Connectivity Summit (ICS) brings together community leaders, network operators, policymakers, and others to talk about new and emerging networks and the policies that impact them. During the two-day Summit, people from across the United States, Canada, and the rest of the world share best practices, challenges, and success stories – and learn how they can work together when they return home to solve connectivity challenges in Indigenous communities.

This year, we’ll be in Hilo, Hawaii from November 12-15.

But that’s not nearly enough time to cover everything, especially with close to 1,000 amazing participants (200 in-person and 700 online) ready to share their stories and create new connections.

So we’re trying something new. As we’ve done before, the ICS will still be split into two parts: a two-day training and a two-day event. But this year, participants can also attend a series of two distinct virtual training sessions before the event in Hawaii: Community Networks and Policy and Advocacy.

These sessions will allow participants to spend time over the course of several weeks getting in-depth information about two of the topics we spend Continue reading

Talking High Bandwidth With IBM’s Power10 Architect

As the lead engineer on the Power10 processor, Bill Starke already knows what most of us have to guess about Big Blue’s next iteration in a processor family that has been in the enterprise market in one form or another for nearly three decades. …

Talking High Bandwidth With IBM’s Power10 Architect was written by Timothy Prickett Morgan.

Network Break 246: Cloudflare Dumps 8chan; Cisco Settles Whistleblower Suit

This week's Network Break examines Cloudflare's decision to drop 8Chan, analyzes Cisco's settlement of a security-related whistleblower suit the company fought for eight years, discusses a new VMware/Google cloud partnership, reviews the latest financial news from tech vendors, and more.

The post Network Break 246: Cloudflare Dumps 8chan; Cisco Settles Whistleblower Suit appeared first on Packet Pushers.

Packet Pushers 2019 Audience Survey

The Packet Pushers audience survey is a necessary, pithy (and privacy protected!) survey to help us get things right.

The post Packet Pushers 2019 Audience Survey appeared first on EtherealMind.

Paving The Way For Two System Architecture Paths

The IT industry is all about evolution, building on what’s been done in the past to address the demands of the future. …

Paving The Way For Two System Architecture Paths was written by Jeffrey Burt.

Stratix 10 SX At The Heart Of Intel’s Most Powerful FPGA Accelerator

Intel has started shipping a new FPGA accelerator card based on the high-end Stratix 10 SX FPGA. …

Stratix 10 SX At The Heart Of Intel’s Most Powerful FPGA Accelerator was written by Michael Feldman.