7 Enterprise Storage Trends for 2018

Companies plan to ramp up their storage infrastructure, according to Interop ITX research.

So let’s learn about NETCONF, but first a bit of history and perspective. Everyone in the networking business has heard of SNMP (Simple Network Management Protocol), the go-to protocol for monitoring network devices, and has probably wondered how cool it would be if you could not only monitor your network with it but actively configure it (sort of an “SDN wannabe”). For that purpose, however, SNMP was not really useful: it supported some write operations, but they were so generic and incomplete that configuring devices with it was not really feasible. That is where NETCONF came in, becoming a standard around 2011 (it existed before, but its RFC 6241 was ratified then). It changed the game in favor of configuring any device, while not restricting vendors from declaring their own NETCONF data structures to fit their features. But let’s check the protocol before diving into the data structures.

NETCONF is an RPC (remote procedure call) based protocol that uses XML-encoded payloads and the YANG language for data modeling (the part that tells you what XML to send to configure something).
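To make the RPC idea concrete, here is a minimal Python sketch (standard library only) that builds a `<get-config>` request for the running datastore and wraps it in the two message framings defined in RFC 6242: the NETCONF 1.0 `]]>]]>` end-of-message marker and NETCONF 1.1 chunked framing. The helper names and the message-id value are illustrative assumptions, and the sketch does not open the SSH `netconf` subsystem or perform the `<hello>` capability exchange that a real session needs first.

```python
import xml.etree.ElementTree as ET

# Base namespace for NETCONF protocol operations (RFC 6241).
NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"


def build_get_config(message_id: str, datastore: str = "running") -> str:
    """Return a NETCONF <get-config> RPC for the given datastore as an XML string."""
    # The xmlns attribute is set directly so the serialized XML uses a default namespace.
    rpc = ET.Element("rpc", {"xmlns": NETCONF_NS, "message-id": message_id})
    get_config = ET.SubElement(rpc, "get-config")
    source = ET.SubElement(get_config, "source")
    ET.SubElement(source, datastore)  # e.g. <running/>, <candidate/>, <startup/>
    return ET.tostring(rpc, encoding="unicode")


def frame_eom(payload: str) -> bytes:
    """NETCONF 1.0 framing: the message followed by the ]]>]]> end-of-message marker."""
    return payload.encode("utf-8") + b"]]>]]>"


def frame_chunked(payload: str) -> bytes:
    """NETCONF 1.1 chunked framing (RFC 6242), sending the whole message as one chunk."""
    data = payload.encode("utf-8")
    return b"\n#%d\n" % len(data) + data + b"\n##\n"


if __name__ == "__main__":
    # Illustrative only: SSH transport and the <hello> exchange are not shown.
    request = build_get_config("101")
    print(request)
    print(frame_chunked(request))
```

Which framing applies is decided during the `<hello>` exchange: chunked framing is used only when both peers advertise the base:1.1 capability.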

OK, let’s get to the point: in our exercise I will be focused on the …

Last week, I presented MANRS to the IX.BR community. My presentation was part of a bigger theme – the launch of an ambitious program in Brazil to make the Internet safer.

While there are many threats to the Internet that must be mitigated, a common challenge for many of them is that the efficacy of mitigation relies on collaboration between independent and sometimes competing parties. Finding ways to incentivize and reward such collaboration is therefore at the core of the solutions.

MANRS tries to do that by increasing the transparency of a network operator’s security posture and its commitment to a more secure and resilient Internet. The operator can then leverage its improved security posture, signaling it to potential customers and thus differentiating itself from its competitors.

MANRS also helps build a community of security-minded operators with a common purpose – an important factor that improves accountability, facilitates better peering relationships, and improves coordination in preventing and mitigating incidents.

I ran an interactive poll with four questions to provide a more quantitative answer. More than 100 people participated, which makes the results …

For the first two sessions of the Building Network Automation Solutions online course I got awesome guest speakers, and it seems we’ll have another fantastic lineup in the Spring 2018 course:

Most network automation solutions focus on device configuration driven by user requests, such as creating a service or changing the data model that describes the network. Another very important but often ignored aspect is automatic response to external events, and that’s what David Gee will describe in his presentation.
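Event-driven automation, at its simplest, means mapping incoming events to actions. The following is a minimal Python sketch of that idea under stated assumptions, not a description of David Gee’s approach: the event kinds, device and interface names, and the `bounce_interface` handler are hypothetical, and a real handler would push changes via NETCONF, an API, or Ansible instead of returning a string.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    """A hypothetical external event, e.g. parsed from a syslog message or SNMP trap."""
    kind: str
    device: str
    detail: str = ""


Handler = Callable[[Event], str]


@dataclass
class EventDispatcher:
    """Maps event kinds to the handlers that should react to them."""
    handlers: Dict[str, List[Handler]] = field(default_factory=dict)

    def on(self, kind: str, handler: Handler) -> None:
        # Register a handler; several handlers may react to the same event kind.
        self.handlers.setdefault(kind, []).append(handler)

    def dispatch(self, event: Event) -> List[str]:
        # Run every handler registered for this kind; unknown kinds trigger nothing.
        return [handler(event) for handler in self.handlers.get(event.kind, [])]


def bounce_interface(event: Event) -> str:
    # Hypothetical remediation: a real handler would push config to the device.
    return f"bounce {event.detail} on {event.device}"


if __name__ == "__main__":
    dispatcher = EventDispatcher()
    dispatcher.on("interface_down", bounce_interface)
    print(dispatcher.dispatch(Event("interface_down", "leaf1", "Ethernet1")))
```

Keeping the event-to-action mapping in data rather than in if/else chains makes it easy to add new reactions without touching the dispatcher itself.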

Here’s a quote from one of my friends who spent years working with Ansible playbooks:

Debugging Ansible is one of the most terrible experiences one can endure…

It’s not THAT bad, particularly if you have a good debugging toolbox. I described mine in the Debugging Ansible Playbooks part of the Ansible for Networking Engineers online course.

Please note that the Building Network Automation Solutions online course includes all material from the Ansible online course.

Fig 1.1: Arista CloudVision

This blog post by Ethan Banks totally describes my (bad) Inbox habits. If you’re anything like me, you might find Ethan’s ideas useful (I do... following them is a different story though).

For anyone who would like to pick up a copy of Ethan’s and my latest work, it’s currently on a prepublication special over at InformIT. Click on the image below for the sale price.

I co-authored Computer Networking Problems And Solutions with Russ White. The nice folks at InformIT.com are accepting pre-orders of the book and ebook at 40% off until December 16, 2017. Go get yourself a copy of this short 832-page read via this link containing all of InformIT’s titles coming soon.

Or, if you use the book’s product page instead of the “coming soon” link above, use code PREORDER to get the discount.

All “coming soon” titles on sale at InformIT: http://informit.com/comingsoon

Product Page for Computer Networking Problems & Solutions: http://www.informit.com/store/computer-networking-problems-and-solutions-an-innovative-9781587145049

West Midlands Police believe it is the first time the high-tech crime has been caught on camera. Relay boxes can receive signals through walls, doors and windows but not metal. The theft took just one minute and the Mercedes car, stolen from the Elmdon area of Solihull on 24 September, has not been recovered. @BBC

The Supreme Court will hear oral arguments in Carpenter v. United States on November …

Putting compute and storage at the edge reduces latency.
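Part of that reduction is plain physics: propagation delay grows with distance. A rough Python sketch, assuming light travels through optical fiber at about 200,000 km/s (roughly two thirds of its vacuum speed) and ignoring queuing, processing, and indirect fiber routes; the distances are hypothetical:

```python
# Assumption: light in optical fiber travels at roughly 2e8 m/s (about 2/3 of c).
FIBER_SPEED_M_PER_S = 2.0e8


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over a fiber path, in milliseconds.

    Ignores queuing, serialization, processing and indirect fiber routes,
    so real-world latency will be higher.
    """
    one_way_s = (distance_km * 1000.0) / FIBER_SPEED_M_PER_S
    return 2.0 * one_way_s * 1000.0


if __name__ == "__main__":
    # Hypothetical distances: a distant central data center vs. a nearby edge site.
    for label, km in [("central DC", 1000.0), ("edge site", 10.0)]:
        print(f"{label}: {km:.0f} km -> {propagation_rtt_ms(km):.2f} ms RTT")
```

Under these assumptions a 1,000 km path costs about 10 ms of round-trip propagation delay, while a 10 km path to an edge site costs about 0.1 ms.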

ETSI launches AR group; Canonical and Rancher Labs build joint platform; VeloCloud extends to AWS.

Kubernetes has quickly become a key technology in the emerging containerized application environment since Google engineers first announced it just over three years ago. It has caught hold as the primary container orchestration tool used by hyperscalers, HPC organizations, and enterprises, overshadowing similar tools like Docker Swarm, Mesos, and OpenStack.

Born from earlier internal Google projects Borg and Omega, the open-source Kubernetes has been embraced by top cloud providers and growing numbers of enterprises, and support is growing among datacenter infrastructure software vendors.

Red Hat has built out its OpenShift cloud application platform based on both …

No Slowdown in Sight for Kubernetes was written by Nicole Hemsoth at The Next Platform.

CloudCenter also compares costs of running applications in public and private clouds.

Hey, it's HighScalability time:

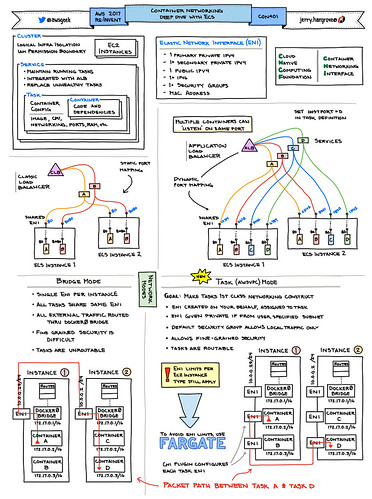

AWS Geek creates spectacular visual summaries.

If you like this sort of Stuff then please support me on Patreon. And please recommend my new book—Explain the Cloud Like I'm 10—to those looking to understand the cloud. I think they'll like it.