Public Videos: Graph Algorithms in Networks (Part 1)

The first half of the Graph Algorithms in Networks webinar by Rachel Traylor is now available without a valid ipSpace.net account; it discusses algorithms dealing with trees, paths, and finding centers of graphs. Enjoy!
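The webinar covers finding graph centers. As a rough illustration (not taken from the webinar), here is a minimal Python sketch for an unweighted, connected graph. It runs a breadth-first search from every node to get that node's eccentricity, which is the hop count to its farthest node. It then keeps the nodes with the smallest eccentricity. The router names and topology are hypothetical.

```python
from collections import deque


def eccentricity(graph: dict[str, list[str]], source: str) -> int:
    """Longest shortest-path distance (in hops) from source, computed with BFS."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    if len(dist) != len(graph):
        raise ValueError("graph is not connected")
    return max(dist.values())


def graph_center(graph: dict[str, list[str]]) -> list[str]:
    """Return every node whose eccentricity is minimal (the graph center)."""
    ecc = {node: eccentricity(graph, node) for node in graph}
    best = min(ecc.values())
    return sorted(node for node, e in ecc.items() if e == best)


# Hypothetical five-router chain: r1 - r2 - r3 - r4 - r5
chain = {
    "r1": ["r2"],
    "r2": ["r1", "r3"],
    "r3": ["r2", "r4"],
    "r4": ["r3", "r5"],
    "r5": ["r4"],
}
print(graph_center(chain))  # ['r3']
```

Running BFS from every node costs O(V·(V+E)). That is fine for lab-sized topologies. Weighted graphs would need Dijkstra or Floyd-Warshall instead of BFS.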

When to Use BGP, VXLAN, or IP-in-IP: A Practical Guide for Kubernetes Networking

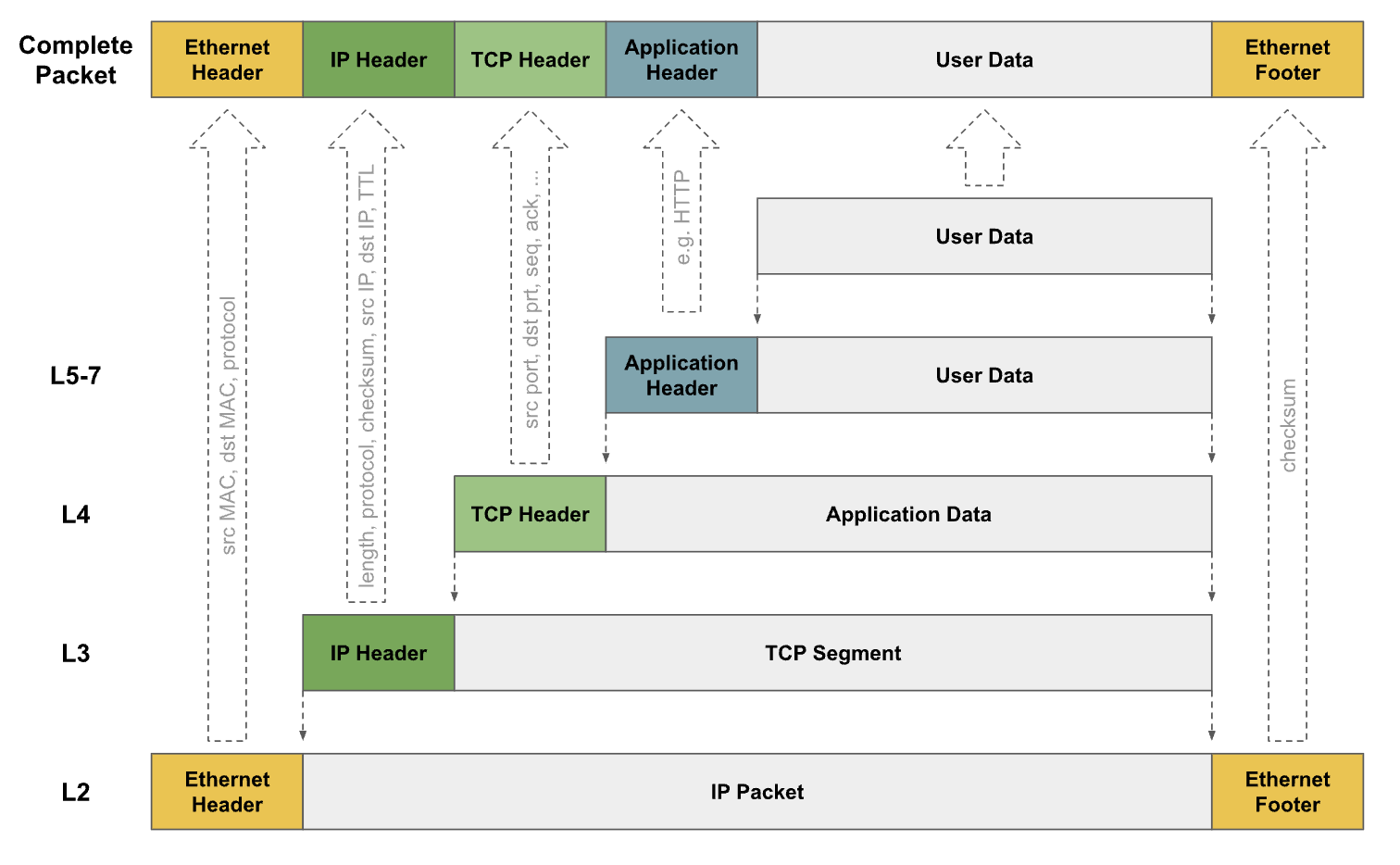

When deploying a Kubernetes cluster, a critical architectural decision is how pods on different nodes communicate. The choice of networking mode directly impacts performance, scalability, and operational overhead. Selecting the wrong mode for your environment can lead to persistent performance issues, troubleshooting complexity, and scalability bottlenecks.

The core problem is that pod IPs are virtual. The underlying physical or cloud network has no native awareness of how to route traffic to a pod’s IP address, such as 10.244.1.5; it only knows how to route traffic between the nodes themselves. This gap is precisely what the Container Network Interface (CNI) plugin must bridge.
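To make the gap concrete, here is a minimal Python sketch of the lookup the network ultimately has to perform: given a pod IP, which node hosts it? It assumes each node owns one pod CIDR carved out of 10.244.0.0/16. The node names and CIDRs are hypothetical. In routed (BGP) mode, this mapping is advertised to the fabric as routes. In overlay mode, the CNI plugin uses it to decide which node to tunnel the packet to.

```python
import ipaddress
from typing import Optional

# Hypothetical per-node pod CIDRs carved out of a 10.244.0.0/16 pod network.
NODE_POD_CIDRS = {
    "node-a": ipaddress.ip_network("10.244.0.0/24"),
    "node-b": ipaddress.ip_network("10.244.1.0/24"),
    "node-c": ipaddress.ip_network("10.244.2.0/24"),
}


def node_for_pod(pod_ip: str) -> Optional[str]:
    """Return the node whose pod CIDR contains pod_ip, or None if no node owns it."""
    addr = ipaddress.ip_address(pod_ip)
    for node, cidr in NODE_POD_CIDRS.items():
        if addr in cidr:
            return node
    return None


print(node_for_pod("10.244.1.5"))    # node-b
print(node_for_pod("192.168.0.10"))  # None (not a pod address)
```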

A CNI plugin typically uses one of two methods to solve this problem:

- Overlay Networking (Encapsulation): This method wraps a pod’s packet inside another packet that the underlying network understands. The outer packet is addressed between nodes, effectively creating a tunnel. VXLAN and IP-in-IP are common encapsulation protocols.

- Underlay Networking (Routing): This method teaches the network fabric itself how to route traffic directly to pods. It uses a routing protocol like BGP to advertise pod IP routes to the physical …
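One practical consequence of the choice is the MTU available to pods. The sketch below assumes IPv4 outer headers and a 1500-byte node MTU, and computes how much room each mode leaves for pod packets:

- IP-in-IP adds a 20-byte outer IPv4 header.
- VXLAN adds 50 bytes in total: an outer IPv4 header, a UDP header, the 8-byte VXLAN header, and the encapsulated inner Ethernet header.
- Natively routed (BGP) traffic adds nothing.

The mode labels are only dictionary keys chosen for illustration.

```python
# Bytes each mode consumes inside the node's IP MTU (IPv4 outer headers assumed).
ENCAP_OVERHEAD = {
    "bgp-routed": 0,            # no encapsulation: pod packets are forwarded natively
    "ip-in-ip": 20,             # outer IPv4 header
    "vxlan": 20 + 8 + 8 + 14,   # outer IPv4 + UDP + VXLAN header + inner Ethernet header
}


def pod_mtu(node_mtu: int, mode: str) -> int:
    """Largest pod IP packet that fits in one node-to-node packet without fragmentation."""
    return node_mtu - ENCAP_OVERHEAD[mode]


for mode in ENCAP_OVERHEAD:
    print(f"{mode:>10}: pod MTU {pod_mtu(1500, mode)}")
```

If pod interfaces stay at 1500 bytes in an encapsulated mode, full-size packets must be fragmented or dropped. That is one way a poorly matched networking mode turns into hard-to-troubleshoot performance problems.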

PP083: A CISO’s Perspective on Model Context Protocol (MCP)

Model Context Protocol (MCP) is an open-source protocol that enables AI agents to connect to data, tools, workflows, and other agents both within and outside of enterprise borders. As organizations dive head-first into AI projects, MCP and other agentic protocols are being quickly adopted. And that means security and network teams need to understand how...

HW063: Designing a Wireless-First Office

A wireless-first office is a sensible goal these days, when most laptops don’t have an Ethernet port and lots of devices use Wi-Fi. Wireless and network architect Phil Sosaya led the transition to wireless-first offices at sites across the globe. He details his design approach, including why he doesn’t bother with site survey software. He...

OSPF Router ID and Loopback Interface Myths

Daniel Dib wrote a nice article describing the history of the loopback interface [1], triggering an inevitable mention of the role of a loopback interface in OSPF and a related flood of ancient memories on my end.

Before going into the details, let’s get one fact straight: an OSPF router ID was always (at least since OSPFv1, described in RFC 1131) just a 32-bit identifier, not an IPv4 address [2]. Straight from RFC 1131: …
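A router ID is just a 32-bit number that happens to be written in dotted-decimal notation. Tools that handle router IDs should therefore compare them as integers, not strings. OSPF, for example, uses the numerically higher router ID as the tiebreaker in DR election. Below is a minimal Python sketch of the conversion; the function names are hypothetical.

```python
def router_id_to_int(rid: str) -> int:
    """Convert a dotted-decimal router ID to its 32-bit integer value."""
    parts = rid.split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four dotted-decimal octets: {rid!r}")
    value = 0
    for part in parts:
        octet = int(part)
        if not 0 <= octet <= 255:
            raise ValueError(f"octet out of range in {rid!r}")
        value = (value << 8) | octet
    return value


def int_to_router_id(value: int) -> str:
    """Render a 32-bit integer in the usual dotted-decimal router ID notation."""
    if not 0 <= value <= 0xFFFFFFFF:
        raise ValueError("router ID must fit in 32 bits")
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


ids = ["9.255.255.255", "10.0.0.2"]
print(max(ids))                        # 9.255.255.255 (string comparison: wrong)
print(max(ids, key=router_id_to_int))  # 10.0.0.2 (numeric comparison)
print(int_to_router_id(1))             # 0.0.0.1, a perfectly valid router ID
```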

“Polaris” AmpereOne M Arm CPUs Sighted In Oracle A4 Instances

Japanese tech conglomerate SoftBank announced a $6.5 billion acquisition of Arm server CPU upstart Ampere Computing back in March, and that deal has not yet closed. …

“Polaris” AmpereOne M Arm CPUs Sighted In Oracle A4 Instances was written by Timothy Prickett Morgan at The Next Platform.

NB548: Broadcom Brings Chips to Wi-Fi 8 Party; Attorneys General Scrutinize HPE/Juniper Settlement

Take a Network Break! On today’s coverage, F5 releases an emergency security update after state-backed threat actors breach internal systems, and North Korean attackers use the blockchain to host and hide malware. Broadcom is shipping an 800G NIC aimed at AI workloads, and Broadcom joins the Wi-Fi 8 party early with a sampling of pre-standard...

AI / ML network performance metrics at scale

The charts above show data from a GPU cluster running an AI / ML training workload. The 244 nodes in the cluster are connected by 100G links to a single large switch. The two trend charts show industry-standard sFlow telemetry from the switch. They are generated by the sFlow-RT real-time analytics engine and updated every 100 ms.

- Per Link Telemetry shows RoCEv2 traffic on 5 randomly selected links from the cluster. Each trend is computed from sFlow random packet samples collected on the link: the packet header in each sample is decoded, and the metric is computed for packets identified as RoCEv2.

- Combined Fabric-Wide Telemetry combines the signals from all the links to create a fabric-wide metric. The signals are highly correlated because the AI training compute / exchange cycle is synchronized across all compute nodes in the cluster. Combining data from all the links reinforces the shared signal (constructive interference), suppressing the noise in each individual signal and clearly showing the traffic pattern for the cluster.
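The per-link metric described above can be approximated the usual way for sampled data. With a 1-in-N sampling rate, each sampled packet stands for roughly N packets, so the sample's frame length is scaled by N. The Python sketch below is not sFlow-RT's implementation, and the `Sample` record is hypothetical. It assumes samples have already been decoded into `Sample` records and identifies RoCEv2 by its IANA-assigned UDP destination port, 4791. It then sums the per-link estimates into a fabric-wide figure for one 100 ms interval.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

ROCEV2_UDP_PORT = 4791  # IANA-assigned UDP destination port for RoCEv2


@dataclass
class Sample:
    """Hypothetical, already-decoded sFlow packet sample."""
    link: str
    frame_length: int            # bytes on the wire for the sampled packet
    udp_dst_port: Optional[int]  # None if the packet is not UDP
    sampling_rate: int           # the link samples 1 in N packets


def rocev2_bps_per_link(samples: Iterable[Sample], interval_s: float) -> dict[str, float]:
    """Estimate RoCEv2 bits per second on each link over one interval."""
    estimate: dict[str, float] = {}
    for s in samples:
        if s.udp_dst_port != ROCEV2_UDP_PORT:
            continue  # only packets identified as RoCEv2 contribute
        scaled_bits = s.frame_length * s.sampling_rate * 8
        estimate[s.link] = estimate.get(s.link, 0.0) + scaled_bits / interval_s
    return estimate


def fabric_bps(per_link: dict[str, float]) -> float:
    """Fabric-wide metric: the sum of the per-link estimates."""
    return sum(per_link.values())


samples = [
    Sample("port1", 1000, ROCEV2_UDP_PORT, 1000),
    Sample("port1", 1000, ROCEV2_UDP_PORT, 1000),
    Sample("port2", 1000, ROCEV2_UDP_PORT, 1000),
    Sample("port2", 1000, None, 1000),  # not UDP, so not RoCEv2: ignored
]
per_link = rocev2_bps_per_link(samples, interval_s=0.1)
for link, bps in sorted(per_link.items()):
    print(f"{link}: {bps / 1e6:.0f} Mbit/s")  # port1: 160, port2: 80
print(f"fabric: {fabric_bps(per_link) / 1e6:.0f} Mbit/s")  # fabric: 240
```

Any single 100 ms estimate built from a handful of samples is noisy. Summing across many links whose traffic rises and falls together is what makes the fabric-wide trend clean.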

Using Git Pre-Commit Hooks

A while ago I wrote an article about linting Markdown files with markdownlint. In that article, I presented the use case of linting the Markdown source files for this site. While manually running linting checks is fine—there are times and situations when this is appropriate and necessary—this is the sort of task that is ideally suited for a Git pre-commit hook. In this post, I’ll discuss Git pre-commit hooks in the context of using them to run linting checks.

Before moving on, a disclaimer: I am not an expert on Git hooks. This post shares my limited experience and provides an example based on what I use for this site. I have no doubt that my current implementation will improve over time as my knowledge and experience grow.

What is a Git Hook?

As this page explains, a hook is a program “you can place in a hooks directory to trigger actions at certain points in git’s execution.” Generally, a hook is a script of some sort. Git supports different hooks that get invoked in response to specific actions in Git; in this particular instance, I’m focusing on the pre-commit hook. This hook gets invoked by git-commit (i.e. …
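Here is a minimal sketch, not the author's actual hook. A pre-commit hook can be any executable saved as `.git/hooks/pre-commit`, and if it exits with a non-zero status, Git aborts the commit. The Python version below asks Git for the staged Markdown files and runs them through markdownlint. It assumes the markdownlint-cli `markdownlint` command is installed and on your `PATH`, and its function names are hypothetical.

```python
#!/usr/bin/env python3
"""Sketch of a .git/hooks/pre-commit script that lints staged Markdown files."""
import subprocess
import sys
from pathlib import Path


def staged_files() -> list[str]:
    """Added, copied, or modified files in the index (what is about to be committed)."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


def markdown_only(paths: list[str]) -> list[str]:
    """Keep only Markdown files."""
    return [p for p in paths if p.lower().endswith((".md", ".markdown"))]


def main() -> int:
    files = markdown_only(staged_files())
    if not files:
        return 0  # nothing to lint; allow the commit
    # Assumes markdownlint-cli is on PATH; it exits non-zero when it finds lint errors.
    result = subprocess.run(["markdownlint", *files])
    if result.returncode != 0:
        print("pre-commit: markdownlint failed; commit aborted.", file=sys.stderr)
    return result.returncode


# Run only when Git invokes this file as the installed hook, so the helpers
# can also be imported (and tested) without touching a repository.
if __name__ == "__main__" and Path(sys.argv[0]).name == "pre-commit":
    sys.exit(main())
```

Make the file executable (`chmod +x .git/hooks/pre-commit`); `git commit --no-verify` skips the hook when you really need to. One caveat: markdownlint reads the working-tree copies of the files, so a partially staged file is checked in a slightly different state than the one being committed.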

netlab: Embed Files in a Lab Topology

Today, I’ll focus on another feature of the new files plugin – you can use it to embed any (hopefully small) file in a lab topology (configlets are just a special case in which the plugin creates the relative file path from the configlets dictionary data).

You could use this functionality to include configuration files for Linux containers, custom reports, or even plugins in the lab topology, and share a complete solution as a single file that can be downloaded from a GitHub repository.
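As a hedged illustration of the idea of one topology file carrying several embedded files, the Python sketch below unpacks an already-parsed list of files into a lab directory. This is not how the netlab files plugin is implemented. The `files`, `path`, and `content` keys and the `write_embedded_files` function are assumptions, not netlab's documented schema. The sketch also refuses paths that would escape the lab directory, a sensible precaution when running a topology downloaded from someone else's repository.

```python
import tempfile
from pathlib import Path

# Hypothetical topology fragment, as it might look after YAML parsing.
# The "files", "path", and "content" keys are illustrative assumptions.
topology = {
    "files": [
        {"path": "configs/h1/motd.txt", "content": "Welcome to h1\n"},
        {"path": "reports/summary.j2", "content": "Lab: {{ name }}\n"},
    ]
}


def write_embedded_files(topo: dict, lab_dir: Path) -> list[Path]:
    """Write every embedded file under lab_dir and return the paths written."""
    base = lab_dir.resolve()
    written = []
    for entry in topo.get("files", []):
        target = (base / entry["path"]).resolve()
        # Refuse absolute or "../" paths that would land outside the lab directory.
        if base not in target.parents:
            raise ValueError(f"path escapes lab directory: {entry['path']}")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(entry["content"])
        written.append(target)
    return written


with tempfile.TemporaryDirectory() as tmp:
    lab = Path(tmp).resolve()
    for path in write_embedded_files(topology, lab):
        print(path.relative_to(lab).as_posix())  # configs/h1/motd.txt, reports/summary.j2
```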

HN801: Will a Natural Language Interface (NLI) Replace Your CLI?

Could an LLM or some kind of AI-driven language model, such as a natural language interface, someday replace our beloved CLI? That is, instead of needing to understand the syntax of a specific vendor’s CLI, could a language model allow network operators to use plain language to get the information they need or the...

The Third Time Will Be The Charm For Broadcom Switch Co-Packaged Optics

If Broadcom says that co-packaged optics is ready for prime time and can compete with other ways of linking switch ASICs to fiber optic cables, then it is very unlikely that Broadcom is wrong. …

The Third Time Will Be The Charm For Broadcom Switch Co-Packaged Optics was written by Timothy Prickett Morgan at The Next Platform.

How HPC Is Igniting Discoveries In Dinosaur Locomotion – And Beyond

In the basement of Connecticut’s Beneski Museum of Natural History sit the fossilized footprints of a small, chicken-sized dinosaur. …

How HPC Is Igniting Discoveries In Dinosaur Locomotion – And Beyond was written by Timothy Prickett Morgan at The Next Platform.

TNO046: Prisma AIRS: Securing the Multi-Cloud and AI Runtime (Sponsored)

Multi-cloud, automation, and AI are changing how modern networks operate and how firewalls and security policies are administered. In today’s sponsored episode with Palo Alto Networks, we dig into offerings such as CLARA (Cloud and AI Risk Assessment) that help ops teams gain more visibility into the structure and workflows of their multi-cloud networks. We...

Hedge 284: Netops and Corporate Culture

We all know netops, NRE, and devops can increase productivity, increase Mean Time Between Mistakes (MTBM), and decrease MTTR, but how do we deploy and use these tools? We often think of the technical hurdles of deploying them, but most of the blockers are actually cultural. Chris Grundemann, Eyvonne, Russ, and Tom discuss the cultural issues with deploying netops on this episode of the Hedge.


Load Balancing Monitor Groups: Multi-Service Health Checks for Resilient Applications

Modern applications are not monoliths. They are complex, distributed systems where availability depends on multiple independent components working in harmony. A web server might be running, but if its connection to the database is down or the authentication service is unresponsive, the application as a whole is unhealthy. Relying on a single health check is like knowing the “check engine” light is not on, but not knowing that one of your tires has a puncture. It’s great your engine is going, but you’re probably not driving far.

As applications grow in complexity, so does the definition of "healthy." We've heard from customers, big and small, that they need to validate multiple services to consider an endpoint ready to receive traffic. For example, they may need to confirm that an underlying API gateway is healthy and that a specific ‘/login’ service is responsive before routing users there. Until now, this required building custom, synthetic services to aggregate these checks, adding operational overhead and another potential point of failure.

Today, we are introducing Monitor Groups for Cloudflare Load Balancing. This feature provides a new way to create sophisticated, multi-service health assessments directly on our platform. With Monitor Groups, you can bundle …
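Conceptually, a monitor group turns several health checks into a single health verdict for an endpoint. The Python sketch below shows one possible aggregation rule. It assumes each check is either critical (it must pass for the endpoint to receive traffic) or informational. This is not Cloudflare's API or evaluation logic, and the `MonitorResult` type and its `critical` flag are hypothetical. The example mirrors the scenario above: an API gateway plus a `/login` service.

```python
from dataclasses import dataclass


@dataclass
class MonitorResult:
    name: str
    healthy: bool
    critical: bool = True  # hypothetical flag: must this check pass to receive traffic?


def endpoint_healthy(results: list[MonitorResult]) -> bool:
    """Healthy only if there is at least one critical check and all critical checks pass."""
    critical = [r for r in results if r.critical]
    return bool(critical) and all(r.healthy for r in critical)


checks = [
    MonitorResult("api-gateway", healthy=True),
    MonitorResult("/login", healthy=False),
    MonitorResult("latency-probe", healthy=False, critical=False),  # informational only
]
print(endpoint_healthy(checks))  # False: /login is down
checks[1].healthy = True
print(endpoint_healthy(checks))  # True: the informational failure does not gate traffic
```

The function fails closed. A group with no critical checks is reported unhealthy rather than silently passing, which is one defensible choice among several.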

Lab: Hide Transit Subnets in IS-IS Networks

Sometimes you want to assign IPv4/IPv6 subnets to transit links in your network (for example, to identify interfaces in traceroute outputs), but don’t need those subnets in the IP routing tables throughout the whole network. Like OSPF, IS-IS has a nerd knob you can use to exclude transit subnets from the link-state PDUs (LSPs) a router originates.
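The effect of the knob can be modeled as a filter on the prefixes a router advertises. In the hypothetical Python sketch below, transit-link prefixes are dropped from the advertisement while loopback and stub prefixes stay. The interface names and addresses are made up, and this is not any vendor's implementation. The addresses remain configured on the interfaces, so traceroute can still display them; they just don't appear in remote routing tables.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Interface:
    name: str
    address: str   # interface address with prefix length, e.g. "10.1.0.1/30"
    transit: bool  # True for router-to-router links


def advertised_prefixes(interfaces: list[Interface], hide_transit: bool) -> list[str]:
    """Prefixes a router would advertise, optionally omitting transit subnets."""
    prefixes = []
    for intf in interfaces:
        if hide_transit and intf.transit:
            continue  # subnet stays configured on the interface but is not advertised
        prefixes.append(str(ipaddress.ip_interface(intf.address).network))
    return prefixes


router = [
    Interface("lo0", "10.0.0.1/32", transit=False),
    Interface("eth1", "10.1.0.1/30", transit=True),
    Interface("eth2", "2001:db8:1::1/64", transit=True),
    Interface("eth3", "172.16.0.1/24", transit=False),
]
print(advertised_prefixes(router, hide_transit=False))
# ['10.0.0.1/32', '10.1.0.0/30', '2001:db8:1::/64', '172.16.0.0/24']
print(advertised_prefixes(router, hide_transit=True))
# ['10.0.0.1/32', '172.16.0.0/24']
```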

Want to check how that feature works with your favorite device? Use the Hide Transit Subnets in IS-IS Networks lab exercise.

Click here to start the lab in your browser using GitHub Codespaces (or set up your own lab infrastructure). After starting the lab environment, change the directory to feature/4-hide-transit and execute netlab up.