AWS Summit London – Come For The IaaS, Stay For the PaaS

I attended the AWS Summit in London yesterday. Here are some observations in no particular order. Come for the IaaS, Stay for the PaaS. AWS made a strong case for their portfolio of software services such as AWS Lambda, CloudFormation, KMS and CloudTrail. It's no longer just compute, storage and networking; it's all about the […]

The post AWS Summit London – Come For The IaaS, Stay For the PaaS appeared first on EtherealMind.

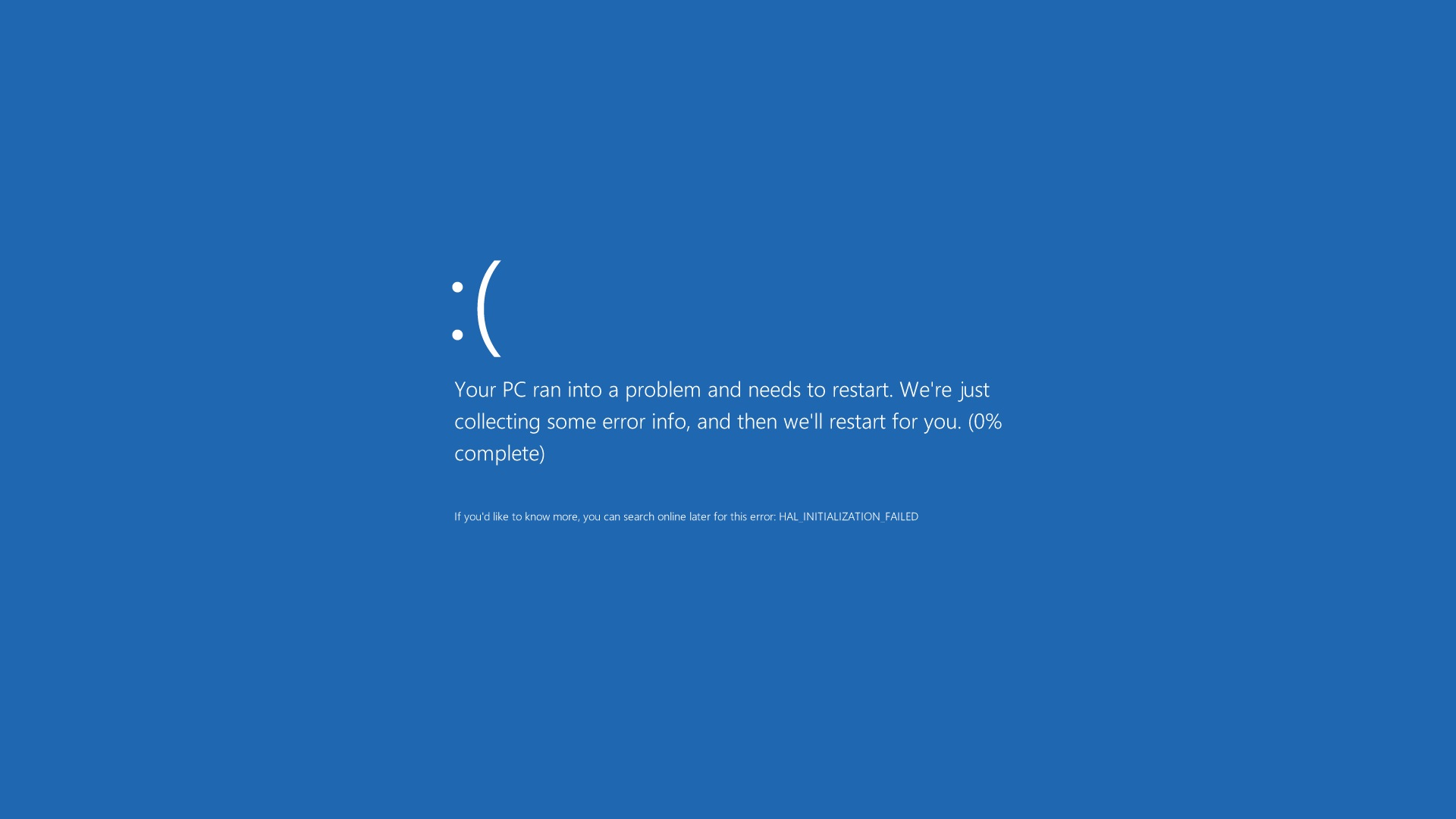

Protection against critical Windows vulnerability (CVE-2015-1635)

A few hours ago, more details surfaced about the MS15-034 vulnerability. Simple PoC code has been widely published that will hang a Windows web server if sent a request with an HTTP Range header containing large byte offsets.

We have rolled out a WAF rule that blocks these requests.
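
As a rough illustration of the idea (not CloudFlare's actual WAF rule, which is not published here), a filter could flag any Range header whose byte offsets are far larger than typical file sizes. The sketch below assumes a hypothetical helper named `range_looks_oversized` and an arbitrary threshold of 16 digits; the second sample value is simply the largest unsigned 64-bit integer, chosen as an obviously oversized offset.

```shell
# Hypothetical helper: succeeds (exit 0) when a Range header value contains
# a byte offset of 16 or more digits. The 16-digit threshold is an assumption
# made for this sketch, not a documented limit.
range_looks_oversized() {
    printf '%s' "$1" | grep -Eq '[0-9]{16,}'
}

# Classify an ordinary range and one with a very large offset.
for value in 'bytes=0-1023' 'bytes=0-18446744073709551615'; do
    if range_looks_oversized "$value"; then
        echo "block: $value"
    else
        echo "allow: $value"
    fi
done
```

A real rule would likely parse the header properly rather than pattern-match digits, so treat this only as a way to picture what "large byte offsets" means.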

Customers on a paid plan who have the WAF enabled are automatically protected against this problem. We highly recommend that you patch IIS and your Windows servers as soon as possible; in the meantime, any requests coming into CloudFlare that try to exploit this DoS/RCE will be blocked.

Nokia to Acquire Alcatel-Lucent for $16.6 Billion

Well, that was quick. Two days after rumors started, Nokia and Alcatel-Lucent announce a $16.6B hookup.

Handy Link For SCP Commands

Putting this here because I’m constantly forgetting it.

Originally from here: http://www.hypexr.org/linux_scp_help.php

Copy the file “foobar.txt” from a remote host to the local host:

$ scp your_username@remotehost.edu:foobar.txt /some/local/directory

Copy the file “foobar.txt” from the local host to a remote host:

$ scp foobar.txt your_username@remotehost.edu:/some/remote/directory
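
Both commands share the same shape: the remote side is always written as `user@host:path`, and only its position (source or destination) changes. A small sketch of that pattern, assuming a hypothetical helper `remote_spec` and placeholder user and host values that are not from the original post; it prints the commands rather than running them, so nothing is actually copied:

```shell
# Hypothetical helper: builds the "user@host:path" form that scp expects
# for the remote side of a copy.
remote_spec() {
    printf '%s@%s:%s' "$1" "$2" "$3"
}

# Placeholder values (assumptions for illustration only).
user="alice"
host="example.com"

# Remote to local: the remote spec comes first.
echo "scp $(remote_spec "$user" "$host" foobar.txt) /some/local/directory"

# Local to remote: the remote spec comes last.
echo "scp foobar.txt $(remote_spec "$user" "$host" /some/remote/directory)"
```

Dropping the `echo` and the surrounding quotes would run the copies for real.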