Dropped packet notifications with Cisco 8000 Series Routers

Cisco IOS XR Release 25.1.1 brings sFlow dropped packet notification support to Cisco 8000 Series routers. Capturing and analyzing packets dropped at router ingress helps in understanding which types of traffic are being blocked, identifying potential security threats, and optimizing network performance.

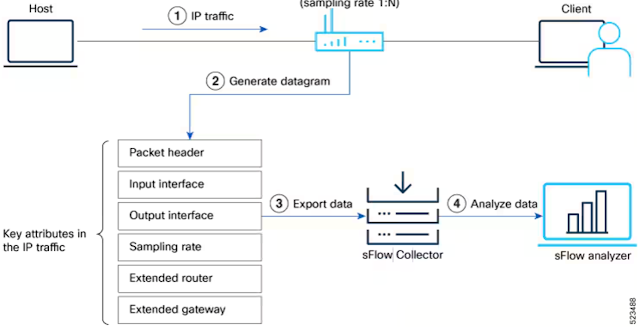

sFlow Configuration for Traffic Monitoring and Analysis describes the steps to enable sFlow and configure packet sampling and interface counter export from a Cisco 8000 Series router to a remote sFlow analyzer.

Note: Devices using NetFlow or IPFIX must transition to sFlow for regular sampling before utilizing the dropped packet feature, ensuring compatibility and consistency in data analysis.

    Router(config)#monitor-session monitor1
    Router(config)#destination sflow EXP-MAP
    Router(config)#forward-drops rx

Configure a monitor-session with the new destination sflow option to export dropped packet notifications (which include ingress interface, drop reason, and header of dropped packet) to the configured sFlow analyzer.
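Once notifications reach the analyzer, a natural first step is to tally them by ingress interface and drop reason. The following is a minimal sketch of that idea, not the output format of any particular tool: it assumes each notification has already been decoded into a dictionary, and the keys `ingress_interface`, `drop_reason`, and `header`, along with the sample values, are hypothetical placeholders.

```python
from collections import Counter
from typing import Iterable, Mapping, Tuple


def summarize_drops(
    notifications: Iterable[Mapping[str, object]],
) -> "Counter[Tuple[object, object]]":
    """Count notifications per (ingress interface, drop reason) pair."""
    counts: Counter = Counter()
    for note in notifications:
        # Hypothetical key names; a real decoder may label these differently.
        key = (
            note.get("ingress_interface", "unknown"),
            note.get("drop_reason", "unknown"),
        )
        counts[key] += 1
    return counts


# Made-up records for illustration only; not captured from a router.
sample_notifications = [
    {"ingress_interface": "if-1", "drop_reason": "reason-a", "header": b""},
    {"ingress_interface": "if-1", "drop_reason": "reason-a", "header": b""},
    {"ingress_interface": "if-2", "drop_reason": "reason-b", "header": b""},
    {"drop_reason": "reason-b", "header": b""},  # interface missing
]

drop_counts = summarize_drops(sample_notifications)

# List the most frequent (interface, reason) pairs first.
for (interface, reason), count in drop_counts.most_common():
    print(f"{interface} {reason} {count}")
```

Grouping on the pair rather than on the reason alone keeps a noisy interface from hiding a drop reason that is rare overall but concentrated on a single port.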

Cisco lists the following benefits of streaming dropped packets in the configuration guide:

- Enhanced Network Visibility: Captures and forwards dropped packets to an sFlow collector, providing detailed insights into packet loss and improving diagnostic capabilities.

- Comprehensive Analysis: Allows for simultaneous analysis of regular and dropped packet flows, offering a holistic view of network performance.

- Troubleshooting: Empowers ...