0

This is a guest post by Videet Parekh, Abelardo Lopez-Lagunas, Sek Chai at Latent AI.

Edge networks present a significant opportunity for Artificial Intelligence (AI) performance and applicability. AI technologies already make it possible to run compelling applications like object and voice recognition, navigation, and recommendations.

AI at the edge presents a host of benefits. One is scalability—it is simply impractical to send all data to a centralized cloud. In fact, one study has predicted a global scope of 90 zettabytes generated by billions of IoT devices by 2025. Another is privacy—many users are reluctant to move their personal data to the cloud, whereas data processed at the edge are more ephemeral.

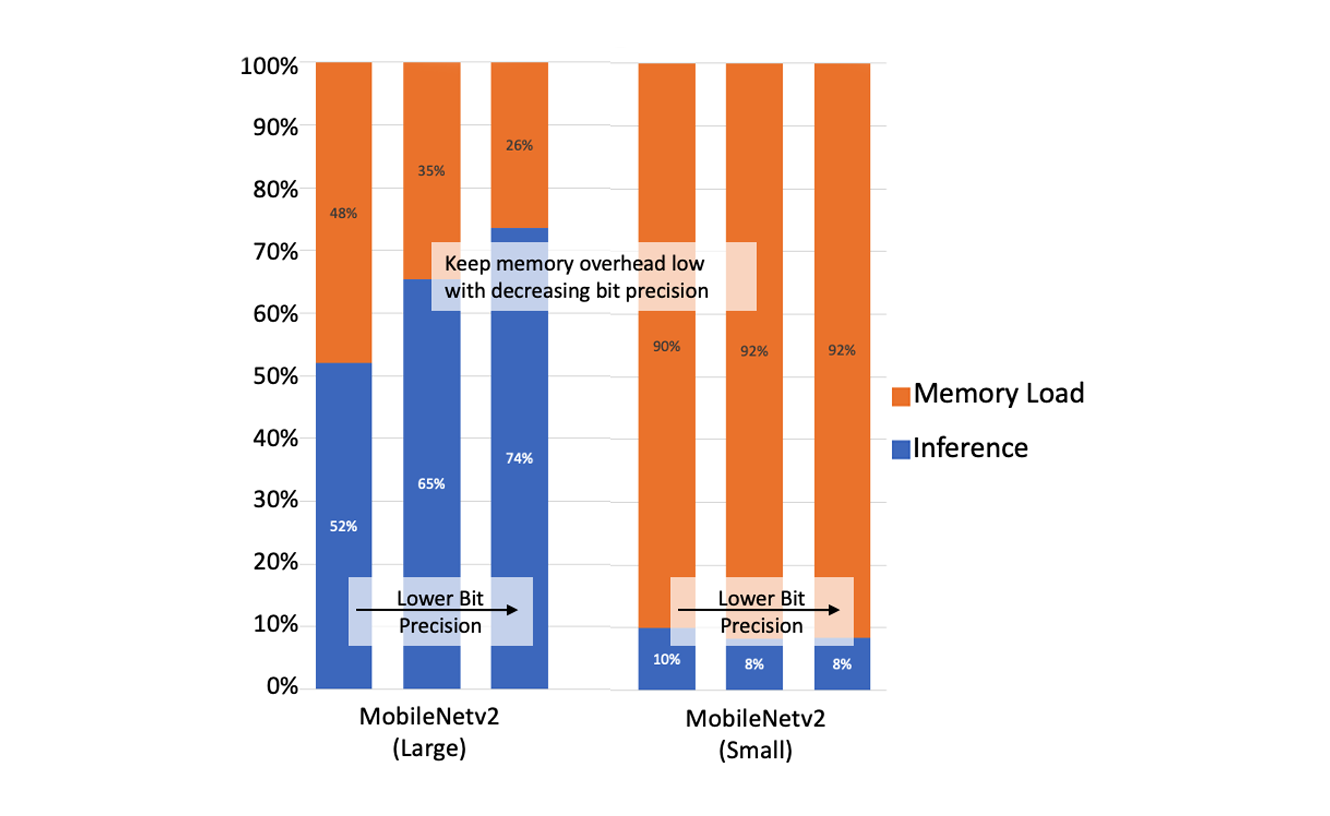

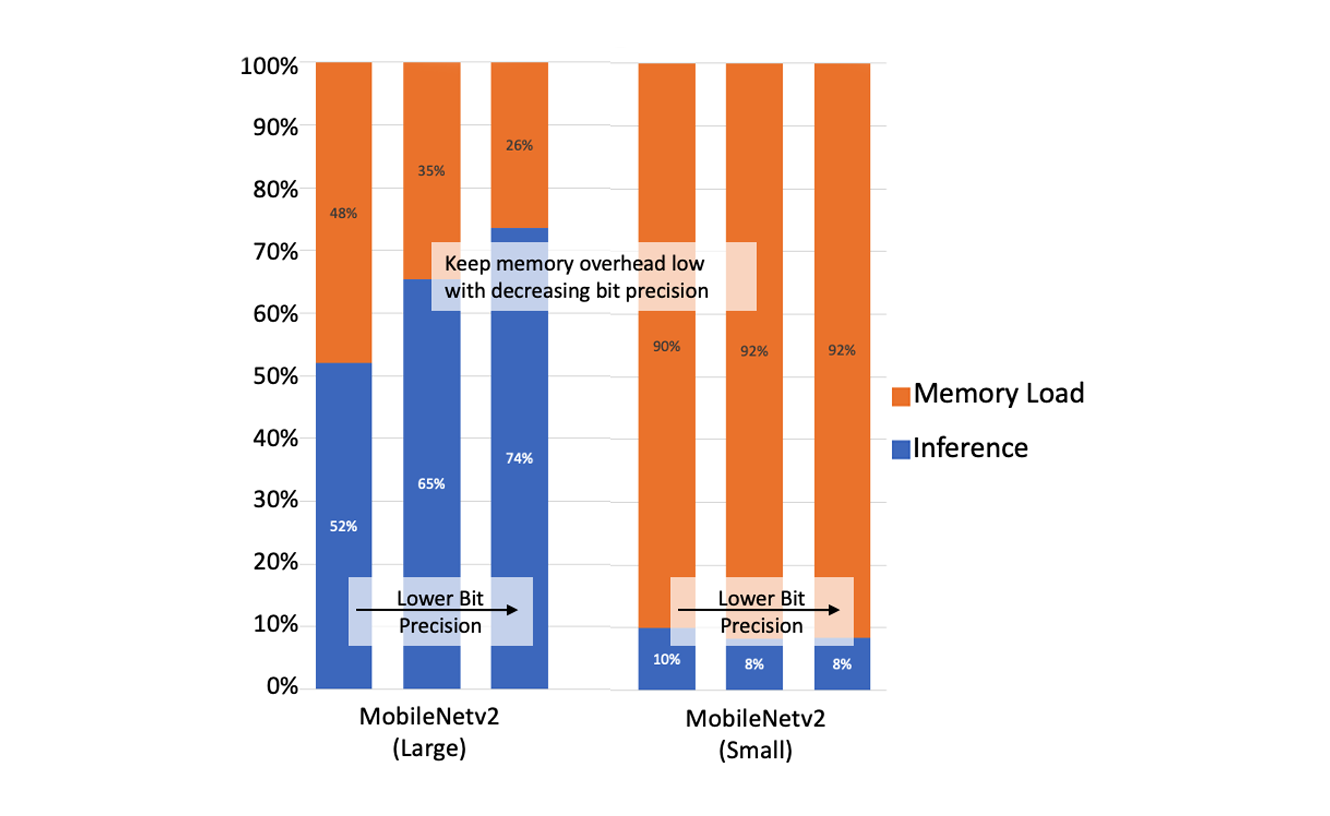

When AI services are distributed away from centralized data centers and closer to the service edge, it becomes possible to enhance the overall application speed without moving data unnecessarily. However, there are still challenges to make AI from the deep-cloud run efficiently on edge hardware. Here, we use the term deep-cloud to refer to highly centralized, massively-sized data centers. Deploying edge AI services can be hard because AI is both computational and memory bandwidth intensive. We need to tune the AI models so the computational latency and bandwidth Continue reading