Weekly Wrap: Nutanix Furloughs 25% of Workforce Citing COVID-19

SDxCentral Weekly Wrap for May 8, 2020: Nutanix to furlough 25% of its workforce; IBM wears Red Hat...

Steven Wood, Cisco’s principal engineer of enterprise architectures and SD-WAN, made the...

Microsoft called Amazon’s latest JEDI protest “yet another attempt to force a re-do because...

If you don’t already know that I’m a co-host of a great podcast we do at Gestalt IT, here’s a great way to jump in. This episode was a fun one to record and talk about licensing:

Sometimes I have to play the role of the genial host and I don’t get to express my true opinion on things. After all, a good podcast host is really just there to keep the peace and ensure the guests get to say their words, right?

I once said that every random feature in a certain network operating system somehow came from a million-dollar PO that needed to be closed. It reflects my personal opinion that sometimes the things we see in code don’t always reflect reality. But how do you decide what to build if you’re not listening to customers?

It’s a tough gamble to take. You can guess at what people are going to want to include and hope that you get it right. Other times you’re going to goof and put something in your code that no one uses. It’s a delicate balance. One of the biggest traps that a company can fall into is waiting for their Continue reading

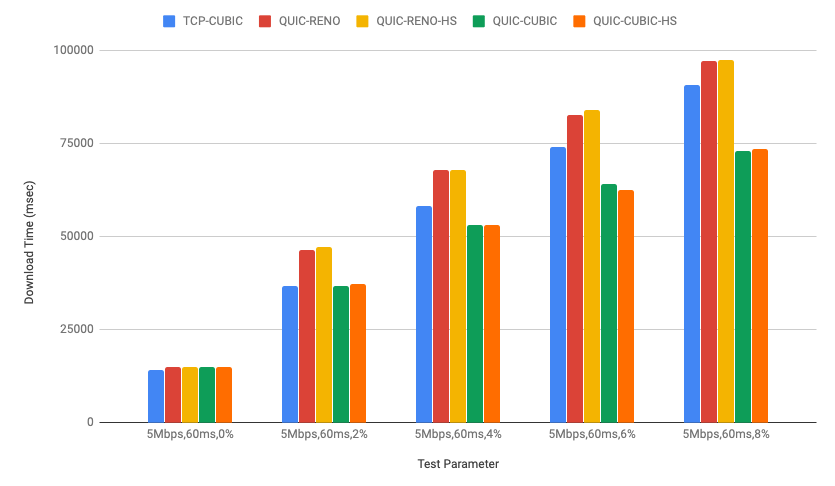

quiche, Cloudflare's IETF QUIC implementation, has been running CUBIC congestion control in our production environment for a while (as mentioned in Comparing HTTP/3 vs. HTTP/2 Performance). Recently we also added HyStart++ to the congestion control module for further improvements.

In this post, we will talk briefly about QUIC congestion control and loss recovery, and then about CUBIC and HyStart++ in the quiche congestion control module. We will also discuss lab test results and how to visualize them using qlog, which was recently added to the quiche library as well.

In network transport, congestion control decides how much data a connection can send into the network. It matters because a sender must not overrun the link, yet it also has to play nicely with other connections sharing the same network so that the overall network, the Internet, doesn’t collapse. In essence, congestion control tries to detect the current capacity of the link and tune itself in real time, and it is one of the core algorithms that keep the Internet running.
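To make the idea of "tuning itself in real time" concrete, here is a minimal sketch of the CUBIC window growth function as defined in RFC 8312. It is an illustration only, not quiche's actual code: the function names `cubic_k` and `cubic_window` are hypothetical, and the constants are the RFC's suggested values, assumed here rather than taken from the quiche source.

```python
# Constants suggested by RFC 8312 (an assumption; not read from quiche).
C = 0.4      # scaling constant that controls how aggressively the window grows
BETA = 0.7   # multiplicative decrease factor applied on a loss event


def cubic_k(w_max: float) -> float:
    """Seconds needed for the window to climb back to w_max after a loss."""
    return (w_max * (1 - BETA) / C) ** (1.0 / 3.0)


def cubic_window(t: float, w_max: float) -> float:
    """Congestion window (in packets) t seconds after the last loss event.

    w_max is the window size just before that loss.
    """
    k = cubic_k(w_max)
    return C * (t - k) ** 3 + w_max


if __name__ == "__main__":
    w_max = 100.0
    # The window starts at BETA * w_max, flattens out near w_max,
    # then probes for more capacity beyond it.
    for t in range(0, 9, 2):
        print(f"t={t}s  cwnd={cubic_window(t, w_max):.1f} packets")
```

Right after a loss the window restarts at `BETA * w_max`, grows quickly, slows down as it approaches the previous maximum, and then accelerates again to probe for spare capacity. That concave-then-convex shape is what lets the sender settle near the link's capacity without repeatedly overshooting it.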

QUIC congestion control has been written based on many years of TCP Continue reading

It’s amazing how many people assume that The Internet is a thing, whereas in reality it’s a mishmash of interconnected independent operators running mostly on goodwill, misplaced trust in other people’s competence, and (sometimes) pure dumb luck.

I described a few consequences of this sad reality in the Internet Has More than One Administrator video (part of the How Networks Really Work webinar), and Nick Buraglio and Elisa Jasinska provided even more details in their Surviving the Internet Default-Free Zone webinar.

The Cisco Adaptive Security Appliance alone has more than 1 million deployments globally, according...

The ToIP Foundation aims to establish a common standard for consumers and businesses to ensure data...

Fortinet Q1 earnings remained strong amidst pandemic; Microsoft eyed a $170M security buy; and...

Any company that holds more than a quarter of a market – by money or shipments – is doing pretty well. …

This Switcheroo Doesn’t Get Old was written by Timothy Prickett Morgan at The Next Platform.

Kamran Amini was an executive at IBM in the mid-2000s when the company first put AMD’s then-relatively new Opteron processors into some of its System x servers. …

Lenovo Goes Double Barrel With AMD “Rome” Epycs was written by Jeffrey Burt at The Next Platform.

As our enterprise customers build out large, multi-cluster Kubernetes environments, they are encountering an entirely new set of security challenges, requiring solutions that operate at scale and can be deployed both on-premises and across multiple clouds.

Today we are thrilled to announce the release of Calico Enterprise 3.0 and the availability of our Global Network Security Center, a game-changing solution that provides a central management plane for network security across every Kubernetes cluster in your organization.

The Calico Enterprise Global Network Security Center for Kubernetes is a centralized management plane and single point of control for multi-cluster and multi-cloud environments. Calico Enterprise’s centralized control simplifies and speeds routine maintenance, leaving more time for your platform team to address other important tasks.

For example, instead of logging in to 50 clusters one-at-a-time to make a policy change, with a single log-in to Calico Enterprise you can apply policy changes consistently across all 50 clusters. You can also automatically apply existing network security controls to new clusters as they are added.

Calico Enterprise also includes centralized log management, troubleshooting with Flow Visualizer, and cluster-wide IDS (intrusion detection). GNSC provides compliance reporting, and alerts on non-compliance Continue reading

T-Mobile US expanded its 5G network running on 600 MHz spectrum by activating 1,600 sites during...

“Our ability to directly manage our supply chain and shipping logistics allowed us to quickly...

By Susan Wu, Senior Product Marketing Manager and Yasen Simeonov, Senior Technical Product Manager, Networking and Security Business Unit

Kubernetes has become mainstream in the enterprise. In the latest Cloud Native Computing Foundation (CNCF) survey [1], 78% of the companies surveyed use Kubernetes in production. Containers are not only the norm but are running at scale with 34% of the organizations using 1,000 containers or more.

Despite the rise in deployment, challenges remain as organizations attempt to operationalize Kubernetes.

With the latest release of VMware NSX-T and the NSX Container Plugin (NCP) we continue to address our customers’ top challenges such as security, complexity, and networking.

NSX provides the full stack networking and security across container orchestration platforms including VMware vSphere 7 with Kubernetes, Tanzu, OpenShift and upstream Kubernetes. NSX-T automates network services (distributed switching, routing, firewalling, load balancing/ingress, IPAM), and applies associated firewall policies directly at the pod level as soon as the cluster is spun up using standard Kubernetes commands. This level of simplicity and automation helps manage Kubernetes and the underlying software-defined data center (SDDC) infrastructure providing a common framework for virtualization admins and developers.

This is a guest post from Docker Captain Bret Fisher, a long-time DevOps sysadmin and speaker who teaches container skills through his popular courses (Docker Mastery, Kubernetes Mastery, Docker for Node.js, and Swarm Mastery) and weekly YouTube Live shows. Bret also consults with companies adopting Docker. Join Bret and other Docker Captains at DockerCon LIVE 2020 on May 28th, where they’ll be live all day hanging out, answering questions and having fun.

When Docker announced in December that it was continuing its DockerCon tradition, albeit virtually, I was super excited and disappointed at the same time. It may sound cliché but truly, my favorite part of attending conferences is seeing old friends and fellow Captains, meeting new people, making new friends, and seeing my students in real life.

Can a virtual event live up to its in-person version? My friend Phil Estes was honest about his experience on Twitter and I agree… it’s not the same. Online events shouldn’t be one-way information dissemination. As attendees, we should be able to *do* something, not just watch.

Well, challenge accepted. We’ve been working hard for months to pull together a great event for you – and Continue reading

Really, 50%? Say it’s not true.

The post 50% of Firefox Users Still Have Flash installed appeared first on EtherealMind.