The Twin Engine Strategy That Propels AWS Is Working Well

Like Google and Meta Platforms, Amazon knows exactly how to infuse AI into its business operations such as online retail, transportation, advertising, and even the Amazon Web Services cloud. …

The Twin Engine Strategy That Propels AWS Is Working Well was written by Timothy Prickett Morgan at The Next Platform.

Fragments of an adolescent web

I have unearthed a few old articles typed during my adolescence, between 1996 and 1998. Unremarkable at the time, these pages now compose, three decades later, the chronicle of a vanished era.

The word “blog” does not exist yet. Wikipedia remains to come. Google has not been born. AltaVista reigns over searches, while already struggling to embrace the nascent immensity of the web. To meet someone, you had to agree in advance and prepare your route on paper maps. 🗺️

The web is taking off. The CSS specification has just emerged, and HTML tables still serve for page layout. Cookies and advertising banners are making their appearance. Pages are adorned with music and videos, forcing browsers to arm themselves with plugins. Netscape Navigator sits on 86% of the territory, but Windows 95 now bundles Internet Explorer to quickly catch up. Facing this offensive, Netscape open-sources its browser.

France falls behind. Outside universities, Internet access remains expensive and laborious. Minitel still reigns, offering phone directory, train tickets, remote shopping. This was not yet possible with the Internet: buying a CD online was a pipe dream. Encryption suffers from inappropriate regulation: the DES algorithm is capped at 40 bits and Continue reading

Multicast PIM Sparse Mode (IV)

In the previous post, we covered PIM Dense Mode and mentioned that it is not widely used in production because of its flood and prune behaviour. Every router in the network receives the multicast traffic first, and then routers without interested receivers have to send prune messages. This is inefficient, especially in large networks.

In this post, we will look at PIM Sparse Mode, which takes the opposite approach. Instead of flooding traffic everywhere and pruning where it is not needed, Sparse Mode only sends traffic to parts of the network that explicitly request it. Routers with interested receivers send Join messages and only then does the multicast traffic start flowing. This makes Sparse Mode much more efficient and scalable, which is why it is the preferred mode in most production networks today.
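The explicit-join behaviour described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the topology, the router names, and the `parent` map (each router's upstream neighbor toward a single tree root) are hypothetical, and real PIM Sparse Mode involves timers, a rendezvous point, and other mechanisms that are not modeled here.

```python
def routers_on_tree(parent, receivers):
    """Return the set of routers that end up forwarding multicast traffic.

    parent:    maps each router to its upstream neighbor toward the root
               (hypothetical topology; the root itself has no entry).
    receivers: routers with interested receivers; each one sends a Join.
    """
    on_tree = set()
    for router in receivers:
        node = router
        # A Join travels hop by hop toward the root and stops as soon as
        # it reaches a router that is already on the tree.
        while node is not None and node not in on_tree:
            on_tree.add(node)
            node = parent.get(node)
    return on_tree


# Hypothetical six-router topology rooted at "ROOT".
parent = {"R1": "ROOT", "R2": "R1", "R3": "R1", "R4": "ROOT", "R5": "R4"}
all_routers = set(parent) | {"ROOT"}

sparse = routers_on_tree(parent, ["R2"])
print(sorted(sparse))      # routers that carry traffic with explicit joins
print(len(all_routers))    # routers a flood-and-prune model would touch first
```

With a single receiver behind R2, only R2, R1, and the root carry traffic in this model, whereas a flood-and-prune approach would initially deliver the stream to all six routers.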

PIM Sparse Mode Overview

In Dense Mode, we saw two main problems. Multicast traffic is flooded everywhere, and every router has to maintain state for every multicast group, even if all its interfaces are pruned. Sparse Mode Continue reading

HN813: What Should Networkers Know About Software Development (and Vice Versa)?

What should network engineers know about software development? What should software developers know about networking? Ethan and Drew sit down with Chris Rapier and Nick Buraglio to discuss why crossing these silos can improve outcomes for everyone. They break down why being a little curious about the infrastructure can help software developers write better code,... Read more »

Lab: Routing Between VXLAN Segments

In the previous EVPN/VXLAN lab exercises, we covered the basics of Ethernet bridging over VXLAN and the use of the EVPN control plane to build layer-2 segments.

It’s time to move up the protocol stack. Let’s see how you can route between VXLAN segments, this time using unique unicast IP addresses on the layer-3 switches.

You can run the lab on your own netlab-enabled infrastructure (more details), but also within a free GitHub Codespace or even on your Apple-silicon Mac (installation, using Arista cEOS container, using VXLAN/EVPN labs).

Hedge 294: Resource Constrained Environments

The future of network design and architecture, based on current trends, is going to be working with and around resource constraints. How would resource constraints impact the way we design and manage networks? Mike Bushong joins Tom, Eyvonne, and Russ to ponder network engineering in a resource-constrained world.

Migrating from NGINX Ingress to Calico Ingress Gateway: A Step-by-Step Guide

From Ingress NGINX to Calico Ingress Gateway

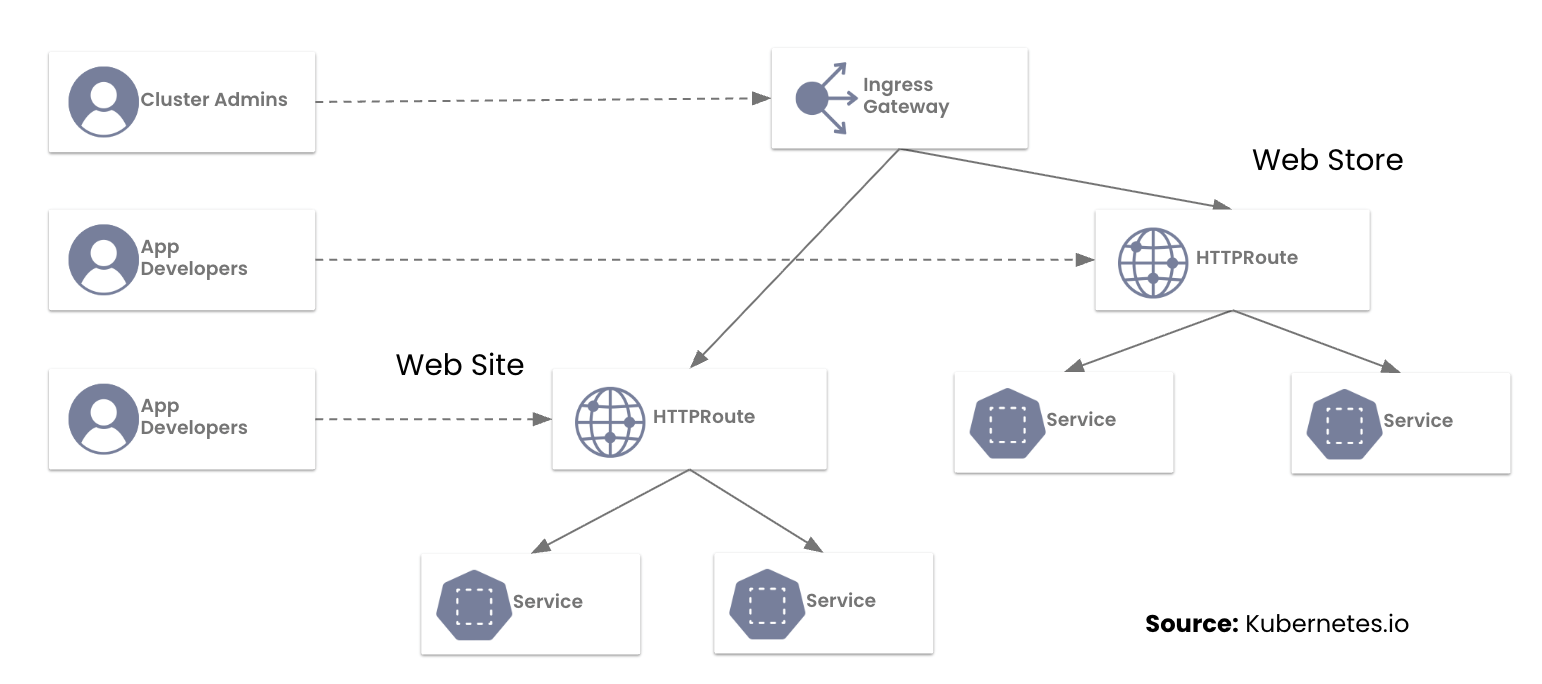

In our previous post, we addressed the most common questions platform teams are asking as they prepare for the retirement of the NGINX Ingress Controller. With the March 2026 deadline fast approaching, this guide provides a hands-on, step-by-step walkthrough for migrating to the Kubernetes Gateway API using Calico Ingress Gateway. You will learn how to translate NGINX annotations into HTTPRoute rules, run both models side by side, and safely cut over live traffic.

A Brief History

The announced retirement of the NGINX Ingress Controller has created a forced migration path for the many teams that relied on it as the industry standard. While the Ingress API is not yet officially deprecated, the Kubernetes SIG Network has designated the Gateway API as its official successor. Legacy Ingress will no longer receive enhancements and exists primarily for backward compatibility.

Why the Industry is Standardizing on Gateway API

While the Ingress API served the community for years, it reached a functional ceiling. Calico Ingress Gateway implements the Gateway API to provide:

- Role-Oriented Design: Clear separation between the infrastructure (managed by SREs) and routing logic (managed by Developers).

- Native Expressiveness: Features like URL rewrites and header manipulation Continue reading

N4N048: QoS Fundamentals

Quality of Service (QoS) is a huge topic with a punishingly large group of acronyms. Join Ethan and Holly as they help you build a mental framework of what QoS is and what it solves. Not only do they break down essential acronyms, they also discuss QoS fundamentals, define the major groups of QoS tools,... Read more »

IPB193: IPv6 Basics – Troubleshooting

Are you struggling to get IPv6 working, whether in a lab or even a pilot deployment? Ed, Nick, and Tom walk through the essentials of IPv6 troubleshooting, revealing the non-negotiable differences between IPv4 and IPv6 that can trip up even experienced network engineers. They break down why blocking all ICMP, like in v4, will instantly... Read more »

2025 Q4 DDoS threat report: A record-setting 31.4 Tbps attack caps a year of massive DDoS assaults

Welcome to the 24th edition of Cloudflare’s Quarterly DDoS Threat Report. In this report, Cloudforce One offers a comprehensive analysis of the evolving threat landscape of Distributed Denial of Service (DDoS) attacks based on data from the Cloudflare network. In this edition, we focus on the fourth quarter of 2025, as well as share overall 2025 data.

The fourth quarter of 2025 was characterized by an unprecedented bombardment launched by the Aisuru-Kimwolf botnet, dubbed “The Night Before Christmas” DDoS attack campaign. The campaign targeted Cloudflare customers as well as Cloudflare’s dashboard and infrastructure with hyper-volumetric HTTP DDoS attacks exceeding rates of 200 million requests per second (rps), just weeks after a record-breaking 31.4 Terabits per second (Tbps) attack.

DDoS attacks surged by 121% in 2025, reaching an average of 5,376 attacks automatically mitigated every hour.
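To put the hourly figure in perspective, the arithmetic below converts it into an approximate yearly total. It is a back-of-the-envelope sketch that assumes the hourly average covers the whole of 2025 and that the 121% figure is year-over-year growth in attack count; the inputs are rounded, so the results are approximations and not figures taken from the report.

```python
HOURS_PER_YEAR = 24 * 365  # 2025 is not a leap year


def yearly_total(avg_per_hour):
    """Scale an hourly average up to a full (non-leap) year."""
    return avg_per_hour * HOURS_PER_YEAR


def implied_previous_total(current_total, growth_pct):
    """Back out the prior-year total from a percentage increase."""
    return current_total / (1 + growth_pct / 100)


total_2025 = yearly_total(5376)
implied_2024 = implied_previous_total(total_2025, 121)

print(f"{total_2025:,}")       # approximate attacks mitigated in 2025
print(f"{implied_2024:,.0f}")  # implied 2024 total under the stated assumptions
```

Under these assumptions the hourly average works out to roughly 47 million attacks for the year, and a 121% increase implies a prior-year total of roughly 21 million.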

In the final quarter of 2025, Hong Kong jumped 12 places, making it the second most DDoS’d place on earth. The United Kingdom also leapt by an astonishing 36 places, making it the sixth most-attacked place.

Infected Android TVs — part of the Aisuru-Kimwolf botnet — bombarded Cloudflare’s network with hyper-volumetric HTTP DDoS attacks, while Telcos emerged as the most-attacked industry.

Ultra Ethernet: Receiver Credit-based Congestion Control (RCCC)

Introduction

Receiver Credit-Based Congestion Control (RCCC) is a cornerstone of the Ultra Ethernet transport architecture, specifically designed to eliminate incast congestion. Incast occurs at the last-hop switch when the aggregate data rate from multiple senders exceeds the egress interface capacity of the target’s link. This mismatch leads to rapid buffer exhaustion on the outgoing interface, resulting in packet drops and severe performance degradation.

The RCCC Mechanism

Figure 8-1 illustrates the operational flow of the RCCC algorithm. In a standard scenario without credit limits, source Rank 0 and Rank 1 might attempt to transmit at their full 100G line rates simultaneously. If the backbone fabric consists of 400G inter-switch links, the core utilization remains a comfortable 50% (200G total traffic). However, because the target host link is only 100G, the last-hop switch (Leaf 1B-1) becomes an immediate bottleneck. The switch is forced to queue packets that cannot be forwarded at the 100G egress rate, eventually triggering incast congestion and buffer overflows.
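The numbers in this scenario can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name and the 16 MB egress buffer are assumptions made for the example (the text gives no buffer size), and the model ignores everything except raw rates.

```python
def incast_summary(sender_gbps, fabric_link_gbps, egress_gbps, buffer_mb):
    """Summarize a simple incast scenario from raw link rates.

    buffer_mb is a hypothetical egress buffer size in megabytes (10**6 bytes).
    """
    offered = sum(sender_gbps)
    excess = max(0, offered - egress_gbps)
    # buffer bits / excess bits-per-second, expressed in microseconds:
    # (buffer_mb * 8e6) / (excess * 1e9) * 1e6 == buffer_mb * 8000 / excess
    fill_us = None if excess == 0 else buffer_mb * 8000 / excess
    return {
        "offered_gbps": offered,
        "fabric_utilization": offered / fabric_link_gbps,
        "egress_oversubscription": offered / egress_gbps,
        "excess_gbps": excess,
        "buffer_fill_us": fill_us,
    }


# Two 100G senders, a 400G inter-switch link, a 100G target link,
# and an assumed 16 MB buffer on the last-hop egress port.
summary = incast_summary([100, 100], 400, 100, buffer_mb=16)
for key, value in summary.items():
    print(f"{key}: {value}")
```

The fabric sits at 50% utilization while the target link is oversubscribed 2:1, and with the assumed buffer size the 100G of excess traffic would fill the queue in about 1.3 milliseconds.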

While "incast" occurs at the egress interface and can resemble head-of-line blocking, it is fundamentally a "fan-in" problem where multiple sources converge on a single receiver. Under RCCC, standard Explicit Congestion Notification (ECN) on the last-hop switch's egress interface is Continue reading

On MPLS Forwarding Performance Myths

Whenever I claim that the initial use case for MPLS was improved forwarding performance (using the RFC that matches the IETF MPLS BoF slides as supporting evidence), someone inevitably comes up with a source claiming something along these lines:

The idea of speeding up the lookup operation on an IP datagram turned out to have little practical impact.

That might be true, although I do remember how hard it was for Cisco to build the first IP forwarding hardware in the AGS+ CBUS controller. Switching labels would be much faster (or at least cheaper), but the time it takes to do a forwarding table lookup was never the main consideration. It was all about the aggregate forwarding performance of core devices.

Anyhow, Duty Calls. It’s time for another archeology dig. Unfortunately, most of the primary sources irrecoverably went to /dev/null, and personal memories are never reliable; comments are most welcome.

D2DO293: Haskell in the Modern Day

Ned and Kyler sit down with Tikhon Jelvis to discuss Haskell and other niche programming languages. They explore how this decades-old language isn’t just surviving, but thriving. They also break down how Haskell can provide distinct advantages over traditional programming, especially for complex domain modeling and concurrent applications. Episode Links: Copilot Language Haskell Project Haskell... Read more »

OMG, After a Decade, VXLAN Is Still Insecure

In 2017 (over eight years ago), I was making fun of the fact that “VXLAN is insecure” was news to some people. Obviously, the message needed to be repeated, as the same author gave a very similar presentation two years later at a security conference.

Unfortunately, it seems that everything old is new again (see also RFC 1925 rules 4 and 11), as proved by a “Using GRE and VXLAN for Fun and Profit” (my summary) presentation at DEFCON 33. Even if you have known for decades that unencrypted tunnels are insecure (duh!), you might still want to read the summary of the talk (published on APNIC blog) and view the slides.

Calico Ingress Gateway: Key FAQs Before Migrating from NGINX Ingress Controller

What Platform Teams Need to Know Before Moving to Gateway API

We recently sat down with representatives from 42 companies to discuss a pivotal moment in Kubernetes networking: the NGINX Ingress retirement.

With the March 2026 retirement of the NGINX Ingress Controller fast approaching, platform teams are now facing a hard deadline to modernize their ingress strategy. This urgency was reflected in our recent workshop, “Switching from NGINX Ingress Controller to Calico Ingress Gateway” which saw an overwhelming turnout, with engineers representing a cross-section of the industry, from financial services to high-growth tech startups.

During the session, the Tigera team highlighted a hard truth for platform teams: the original Ingress API was designed for a simpler era. Today, teams are struggling to manage production traffic through “annotation sprawl”—a web of brittle, implementation-specific hacks that make multi-tenancy and consistent security an operational nightmare.

The move to the Kubernetes Gateway API isn’t just a mandatory update; it’s a graduation to a role-oriented, expressive networking model. We’ve previously explored this shift in our blogs on Understanding the NGINX Retirement and Why the Ingress NGINX Controller is Dead.

PP095: OT and ICS – Where Digital and Physical Risks Meet

Operational Technology (OT) and Industrial Control Systems (ICS) are where the digital world meets the physical world. These systems, which are critical to the operation of nuclear power plants, manufacturing sites, municipal power and water plants, and more, are under increasing attack. On today’s Packet Protector we return to the OT/ICS realm to talk about... Read more »

HW070: Better Understand Your Network Performance with NetViews

Every Wi-Fi or network professional occasionally struggles with understanding what their endpoints are experiencing. Keith sits down with Bill Bushong, creator of NetViews, a macOS application originally called PingStalker. In this conversation they discuss why he built NetViews, the technical details on how it works, its network monitoring capabilities, and how Wi-Fi professionals can use... Read more »

Improve global upload performance with R2 Local Uploads

Today, we are launching Local Uploads for R2 in open beta. With Local Uploads enabled, object data is automatically written to a storage location close to the client first, then asynchronously copied to where the bucket lives. The data is immediately accessible and stays strongly consistent. Uploads get faster, and data feels global.

For many applications, performance needs to be global. Users uploading media content from different regions, for example, or devices sending logs and telemetry from all around the world. But your data has to live somewhere, and that means uploads from far away have to travel the full distance to reach your bucket.

R2 is object storage built on Cloudflare's global network. Out of the box, it automatically caches object data globally for fast reads anywhere — all while retaining strong consistency and zero egress fees. This happens behind the scenes whether you're using the S3 API, Workers Bindings, or plain HTTP. And now with Local Uploads, both reads and writes can be fast from anywhere in the world.

Try it yourself in this demo to see the benefits of Local Uploads.

Ready to try it? Enable Local Uploads in the Cloudflare Dashboard under your bucket's settings, or Continue reading

Interface MAC Address in IOS Layer-2 Images

Here’s another “You can’t make this up, but it sounds too crazy to be true” story: Cisco IOS layer-2 images change the interface MAC address when you change the interface switchport status.

Let me start with a bit of background:

- IOL Layer 2 image starts with interfaces enabled and in bridged (switchport) mode (details)

- netlab has to run a normalize script (applicable to IOLL2, IOSv L2, and Arista EOS) before configuring anything else to ensure all interfaces are shut down.

- The IOLL2 normalize Jinja template had a bug – when setting the interface MAC address, it checked l.mac_address instead of intf.mac_address. Nevertheless, everything worked because the MAC addresses were also set during the initial device configuration.