Exporting events to Loki

Grafana Loki is an open source log aggregation system inspired by Prometheus. While it is possible to use Loki with Grafana Alloy, a simpler approach is to send logs directly using the Loki HTTP API. The following example modifies the ddos-protect application to use sFlow-RT's httpAsync() function to send events to Loki's HTTP API.

```javascript
// Loki connection settings, overridable with system properties
var lokiPort = getSystemProperty("ddos_protect.loki.port") || '3100';
var lokiPush = getSystemProperty("ddos_protect.loki.push") || '/loki/api/v1/push';
var lokiHost = getSystemProperty("ddos_protect.loki.host");

function sendEvent(action,attack,target,group,protocol) {
  if(lokiHost) {
    var url = 'http://'+lokiHost+':'+lokiPort+lokiPush;
    // one log line per event: nanosecond timestamp, message, structured metadata
    var lokiEvent = {
      streams: [
        {
          stream: { service_name: 'ddos-protect' },
          values: [[
            Date.now()+'000000',
            action+" "+attack+" "+target+" "+group+" "+protocol,
            {
              detected_level: action == 'release' ? 'INFO' : 'WARN',
              action: action,
              attack: attack,
              ip: target,
              group: group,
              protocol: protocol
            }
          ]]
        }
      ]
    };
    // post the event asynchronously using sFlow-RT's httpAsync()
    httpAsync({
      url: url,
      headers: {'Content-Type':'application/json'},
      operation: 'POST',
      body: JSON.stringify(lokiEvent),
      success: (response) => {
        // Loki normally answers a successful push with 204 No Content
        if (response.status < 200 || response.status >= 300) {
          logWarning("DDoS Loki status " + response.status);
        }
      },
      error: (error) => {
        logWarning("DDoS Loki error " + error);
      }
    });
  }
  // existing syslog export
  if(syslogHosts.length === 0) return;
  var msg = {app:'ddos-protect',action:action,attack:attack,ip:target,group:group,protocol:protocol};
  syslogHosts.forEach(function(host) {
    try {
      syslog(host,syslogPort,syslogFacility,syslogSeverity,msg);
    } catch(e) {
      logWarning('DDoS cannot send syslog to ' + host);
    }
  });
}
```

Continue reading
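To check that a Loki server accepts these events before deploying the modified application, it may help to post one by hand. The following is a minimal Python sketch, not part of ddos-protect, that builds a JSON body shaped like the one sendEvent() posts (a stream labeled service_name=ddos-protect, a nanosecond timestamp string, the message line, and structured metadata) and posts it using only the standard library. The function names loki_payload() and push() are hypothetical; the metadata values are converted to strings, and the default port and path mirror the defaults in the script above.

```python
import json
import time
import urllib.request


def loki_payload(action, attack, target, group, protocol, ts_ns=None):
    """Build a Loki push request body shaped like the one sendEvent() posts."""
    if ts_ns is None:
        ts_ns = time.time_ns()  # Loki expects the timestamp as a nanosecond string
    line = " ".join(str(f) for f in (action, attack, target, group, protocol))
    metadata = {
        "detected_level": "INFO" if action == "release" else "WARN",
        "action": str(action),
        "attack": str(attack),
        "ip": str(target),
        "group": str(group),
        "protocol": str(protocol),
    }
    return {
        "streams": [
            {
                "stream": {"service_name": "ddos-protect"},
                "values": [[str(ts_ns), line, metadata]],
            }
        ]
    }


def push(payload, host, port=3100, path="/loki/api/v1/push"):
    """POST the payload to Loki; urlopen raises HTTPError on 4xx/5xx responses."""
    req = urllib.request.Request(
        f"http://{host}:{port}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status  # a successful push normally returns 204
```

For example, calling push() with a payload built from placeholder attack, group, and protocol values and the address of a test Loki server should return a 2xx status, and the event should then appear under service_name="ddos-protect" in Grafana.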

SC25: SDSC Expanse cluster live AI/ML metrics

The SDSC Expanse cluster live AI/ML metrics dashboard is a joint InMon / San Diego Supercomputer Center (SDSC) demonstration at The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC25), being held this week in St. Louis, November 16-21. Click on the dashboard link during the show to see live traffic. By default, the dashboard shows the Last 24 Hours of traffic. Explore the data: select Last 30 Days to get a long-term view, select Last 5 Minutes to get an up-to-the-second view, click on items in a chart legend to show the selected metric, or drag to select an interval and zoom in.

The Expanse cluster at the San Diego Supercomputer Center is a batch-oriented science computing gateway serving thousands of users and a wide range of research projects; see Google News for examples. The SDSC Expanse cluster live AI/ML metrics dashboard displays real-time metrics for workloads running on the cluster:

- Total Traffic: Total traffic entering fabric

- Cluster Services: Traffic associated with Lustre, Ceph and NFS storage, and Slurm workload management

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

Continue reading

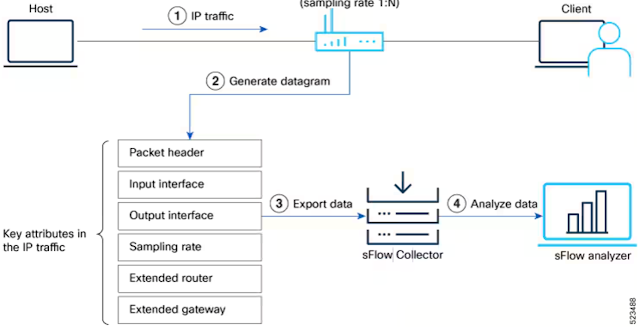

Ultra Ethernet Transport

The Ultra Ethernet Consortium has a mission to “deliver an Ethernet based open, interoperable, high performance, full-communications stack architecture to meet the growing network demands of AI & HPC at scale.” The recently released UE-Specification-1.0.1 includes an Ultra Ethernet Transport (UET) protocol with functionality similar to RDMA over Converged Ethernet (RoCEv2). The sFlow instrumentation embedded as a standard feature of data center switch hardware from all leading vendors (Arista, Cisco, Dell, Juniper, NVIDIA, etc.) provides a cost-effective solution for gaining visibility into UET traffic in large production AI / ML fabrics.

```shell
docker run -p 8008:8008 -p 6343:6343/udp sflow/prometheus
```

The easiest way to get started is to use the pre-built sflow/prometheus Docker image to analyze the sFlow telemetry. The chart at the top of this page shows an up-to-the-second view of UET operations using the included Flow Browser application; see Defining Flows for a list of available UET attributes. Getting Started describes how to set up the sFlow monitoring system.

Flow metrics with Prometheus and Grafana describes how to collect custom network traffic flow metrics using the Prometheus time series database and include the metrics in Grafana dashboards. Use the Flow Browser to explore Continue reading

Vector Packet Processor (VPP) dropped packet notifications

Vector Packet Processor (VPP) release 25.10 extends the sFlow implementation to include support for dropped packet notifications, providing detailed, low-overhead visibility into traffic flowing through a VPP router; see Vector Packet Processor (VPP) for performance information.

```
sflow sampling-rate 10000
sflow polling-interval 20
sflow header-bytes 128
sflow direction both
sflow drop-monitoring enable
sflow enable GigabitEthernet0/8/0
sflow enable GigabitEthernet0/9/0
sflow enable GigabitEthernet0/a/0
```

The above VPP configuration commands enable sFlow monitoring of the VPP dataplane: randomly sampling packets, periodically polling counters, and capturing dropped packets and their reason codes. The measurements are sent via Linux netlink messages to an instance of the open source Host sFlow agent (hsflowd), which combines the measurements and streams standard sFlow telemetry to a remote collector.
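When the same settings are rolled out to several routers, generating the command list may help avoid typos. A small Python sketch under that assumption (the helper name vpp_sflow_commands() is hypothetical) reproduces the commands above from a list of interface names; the defaults simply mirror the values shown above and are not recommended settings.

```python
def vpp_sflow_commands(interfaces, sampling_rate=10000, polling_interval=20,
                       header_bytes=128, direction="both", drop_monitoring=True):
    """Return VPP CLI commands that enable sFlow on the given interfaces."""
    commands = [
        f"sflow sampling-rate {sampling_rate}",
        f"sflow polling-interval {polling_interval}",
        f"sflow header-bytes {header_bytes}",
        f"sflow direction {direction}",
    ]
    if drop_monitoring:
        # report dropped packets together with their reason codes
        commands.append("sflow drop-monitoring enable")
    # sFlow is enabled per interface
    commands += [f"sflow enable {name}" for name in interfaces]
    return commands


print("\n".join(vpp_sflow_commands(
    ["GigabitEthernet0/8/0", "GigabitEthernet0/9/0", "GigabitEthernet0/a/0"])))
```

The printed lines can then be entered with vppctl in the usual way.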

```
sflow {
  collector { ip=192.0.2.1 udpport=6343 }
  psample { group=1 egress=on }
  dropmon { start=on limit=50 }
  vpp { }
}
```

The /etc/hsflowd.conf file above enables the modules needed to receive netlink messages from VPP and send the resulting sFlow telemetry to a collector at 192.0.2.1. See vpp-sflow for detailed instructions.

```shell
docker run -p 6343:6343/udp sflow/sflowtool
```

Run sflowtool on the sFlow collector host to verify that the data is being received and Continue reading

AI / ML network performance metrics at scale

The charts above show information from a GPU cluster running an AI / ML training workload. The 244 nodes in the cluster are connected by 100G links to a single large switch. Industry standard sFlow telemetry from the switch is shown in the two trend charts generated by the sFlow-RT real-time analytics engine. The charts are updated every 100ms.

- Per Link Telemetry shows RoCEv2 traffic on 5 randomly selected links from the cluster. Each trend is computed from sFlow random packet samples collected on the link. The packet header in each sample is decoded, and the metric is computed for packets identified as RoCEv2.

- Combined Fabric-Wide Telemetry combines the signals from all the links to create a fabric-wide metric. The signals are highly correlated since the AI training compute / exchange cycle is synchronized across all compute nodes in the cluster. Constructive interference from combining data from all the links removes the noise in each individual signal and clearly shows the traffic pattern for the cluster.
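The noise-reduction effect of combining links can be illustrated with a toy simulation. This Python sketch assumes that every link carries the same on / off compute / exchange pattern plus independent Gaussian measurement noise (a simplification; real sFlow sampling noise is not Gaussian), then compares the error of a single link's trend with the error of the sum across 244 links. Under these assumptions the relative error shrinks by roughly the square root of the number of links.

```python
import math
import random

LINKS = 244       # links in the cluster described above
INTERVALS = 500   # number of measurement intervals to simulate
NOISE = 40.0      # std. deviation of per-link measurement noise (assumed)


def pattern(t):
    """Shared compute / exchange cycle: traffic on for 5 intervals, then off for 5."""
    return 100.0 if (t // 5) % 2 == 0 else 0.0


def noisy_link(rng):
    """One link's measured trend: the shared pattern plus independent noise."""
    return [pattern(t) + rng.gauss(0.0, NOISE) for t in range(INTERVALS)]


def relative_error(measured, scale):
    """RMS deviation from the scaled true pattern, relative to its mean level."""
    truth = [scale * pattern(t) for t in range(INTERVALS)]
    rms = math.sqrt(sum((m - x) ** 2 for m, x in zip(measured, truth)) / INTERVALS)
    return rms / (sum(truth) / INTERVALS)


rng = random.Random(42)
links = [noisy_link(rng) for _ in range(LINKS)]
fabric = [sum(values) for values in zip(*links)]  # combine all links per interval

single = relative_error(links[0], 1)
combined = relative_error(fabric, LINKS)
print(f"single link error {single:.3f}, fabric-wide error {combined:.3f}")
```

With 244 links the expected improvement is about a factor of sqrt(244), or roughly 15.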

Packet trimming

The latest version of the AI Metrics dashboard uses industry standard sFlow telemetry from network switches to monitor the number of trimmed packets to use as a congestion metric.

Ultra Ethernet Specification Update describes how the Ultra Ethernet Transport (UET) Protocol has the ability to leverage optional “packet trimming” in network switches, which allows packets to be truncated rather than dropped in the fabric during congestion events. As packet spraying causes reordering, it becomes more complicated to detect loss. Packet trimming gives the receiver and the sender an early explicit indication of congestion, allowing immediate loss recovery in spite of reordering, and is a critical feature in the low-RTT environments where UET is designed to operate.

```shell
cumulus@switch:~$ nv set system forwarding packet-trim profile packet-trim-default
cumulus@switch:~$ nv config apply
```

NVIDIA Cumulus Linux release 5.14 for NVIDIA Spectrum Ethernet switches includes support for packet trimming. The above commands enable packet trimming with the packet-trim-default profile, which sets the DSCP remark value to 11, sets the truncation size to 256 bytes, sets the switch priority to 4, and makes traffic classes 1, 2, and 3 on all switch ports eligible for trimming. NVIDIA BlueField host adapters respond to trimmed packets to ensure fast congestion recovery.
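Conceptually, trimming replaces a congestion drop with a truncated, remarked packet. The Python sketch below models that decision using the default values described above (256-byte truncation, DSCP 11, switch priority 4, traffic classes 1 to 3). It illustrates the idea only and is not how Spectrum switches implement trimming in hardware; the Packet type and on_congestion() function are hypothetical.

```python
from dataclasses import dataclass, replace

TRIM_SIZE = 256           # truncation size in bytes
TRIM_DSCP = 11            # DSCP value written into trimmed packets
TRIM_PRIORITY = 4         # switch priority used to forward trimmed packets
ELIGIBLE_TCS = {1, 2, 3}  # traffic classes eligible for trimming


@dataclass(frozen=True)
class Packet:
    data: bytes
    dscp: int
    traffic_class: int
    priority: int = 0


def on_congestion(pkt):
    """Decide what happens to a packet that would be dropped due to congestion.

    Returns a trimmed copy to forward, or None if the packet is dropped.
    """
    if pkt.traffic_class not in ELIGIBLE_TCS:
        return None
    return replace(pkt, data=pkt.data[:TRIM_SIZE], dscp=TRIM_DSCP, priority=TRIM_PRIORITY)
```

The trimmed copy still carries the transport headers, which is what lets the receiver recognize the loss immediately even when spraying has reordered packets.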

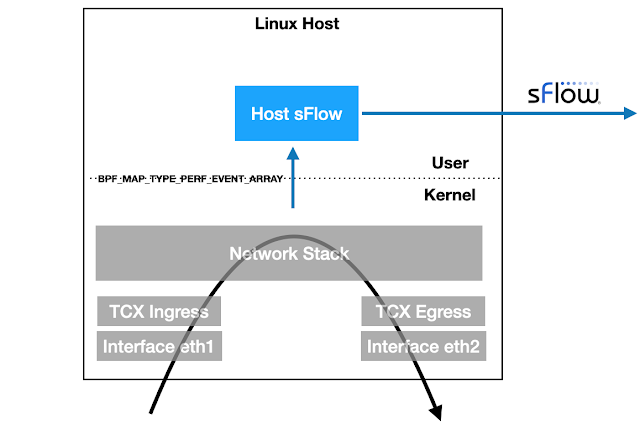

Linux packet sampling using eBPF

Linux 6.11+ kernels provide TCX attachment points for eBPF programs to efficiently examine packets as they ingress and egress the host. The latest version of the open source Host sFlow agent includes support for TCX packet sampling to stream industry standard sFlow telemetry to a central collector for network-wide visibility. For example, Deploy real-time network dashboards using Docker compose describes how to quickly set up a Prometheus database and use Grafana to build network dashboards.

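```c
/* Randomly sample packets on an interface and send each sample to user space.
 * The sampling and events maps, struct packet_event_t, MAX_PKT_HDR_LEN and
 * get_route() are defined elsewhere in the program. */
static __always_inline void sample_packet(struct __sk_buff *skb, __u8 direction) {
    /* look up the sampling rate configured for this interface */
    __u32 key = skb->ifindex;
    __u32 *rate = bpf_map_lookup_elem(&sampling, &key);
    /* skip interfaces with no configured rate, and packets not picked by the 1-in-N test */
    if (!rate || (*rate > 0 && bpf_get_prandom_u32() % *rate != 0))
        return;

    struct packet_event_t pkt = {};
    pkt.timestamp = bpf_ktime_get_ns();
    pkt.ifindex = skb->ifindex;
    pkt.sampling_rate = *rate;
    pkt.ingress_ifindex = skb->ingress_ifindex;
    /* for ingress packets, record the output interface returned by get_route() */
    pkt.routed_ifindex = direction ? 0 : get_route(skb);
    pkt.pkt_len = skb->len;
    pkt.direction = direction;

    /* copy up to MAX_PKT_HDR_LEN bytes of packet header into the sample */
    __u32 hdr_len = skb->len < MAX_PKT_HDR_LEN ? skb->len : MAX_PKT_HDR_LEN;
    if (hdr_len > 0 && bpf_skb_load_bytes(skb, 0, pkt.hdr, hdr_len) < 0)
        return;

    /* send the sample to user space through the perf event buffer */
    bpf_perf_event_output(skb, &events, BPF_F_CURRENT_CPU, &pkt, sizeof(pkt));
}

SEC("tcx/ingress")
int tcx_ingress(struct __sk_buff *skb) {
    sample_packet(skb, 0);   /* direction 0 = ingress */
    return TCX_NEXT;         /* let the packet continue unmodified */
}

SEC("tcx/egress")
int tcx_egress(struct __sk_buff *skb) {
    sample_packet(skb, 1);   /* direction 1 = egress */
    return TCX_NEXT;
}
```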

The sample.bpf.c file Continue reading
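The `bpf_get_prandom_u32() % *rate` test in sample_packet() selects, on average, one packet in every `rate` packets, and each sample records the sampling rate so that a collector can scale the samples back up. A Python sketch of that arithmetic, using simulated traffic and hypothetical helper names, shows the scaled estimates landing close to the true packet and byte counts. It assumes rate >= 1 (the eBPF code skips the random test when the rate is 0), and like the C code it accepts the tiny modulo bias of `% rate`.

```python
import random


def sample(packet_lengths, rate, rng):
    """Select each packet with probability 1/rate, like the prandom % rate test."""
    return [n for n in packet_lengths if rng.getrandbits(32) % rate == 0]


def estimate(samples, rate):
    """Scale sampled counts up: each sample stands for `rate` packets."""
    return len(samples) * rate, sum(samples) * rate


rng = random.Random(7)
traffic = [rng.choice([64, 576, 1500]) for _ in range(200_000)]
samples = sample(traffic, 100, rng)
packets, octets = estimate(samples, 100)
print(f"actual {len(traffic)} packets / {sum(traffic)} bytes, "
      f"estimated {packets} packets / {octets} bytes from {len(samples)} samples")
```

Accuracy depends on the number of samples rather than the number of packets, which is why a fixed 1-in-N rate stays useful on very fast links.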

Tracing network packets with eBPF and pwru

pwru (packet, where are you?) is an open source tool from Cilium that uses eBPF instrumentation in recent Linux kernels to trace network packets through the kernel. In this article we will use Multipass to create a virtual machine to experiment with pwru. Multipass is a command line tool for running Ubuntu virtual machines on Mac or Windows. Multipass uses the native virtualization capabilities of the host operating system to simplify the creation of virtual machines.

```shell
multipass launch --name=ebpf noble
multipass exec ebpf -- sudo apt update
multipass exec ebpf -- sudo apt -y install git clang llvm make libbpf-dev flex bison golang
multipass exec ebpf -- git clone https://github.com/cilium/pwru.git
multipass exec ebpf --working-directory pwru -- make
multipass exec ebpf -- sudo ./pwru/pwru -h
```

Run the commands above to create the virtual machine and build pwru from source.

```shell
multipass exec ebpf -- sudo ./pwru/pwru port https
```

Run pwru to trace https traffic on the virtual machine.

```shell
multipass exec ebpf -- curl https://sflow-rt.com
```

In a second window, run the above command to generate an https request from the virtual machine.

```
SKB CPU PROCESS NETNS MARK/x IFACE PROTO MTU LEN TUPLE FUNC
0xffff9fc40335a0e8 0 ~r/bin/curl:8966 4026531840 0 0
```

Continue reading

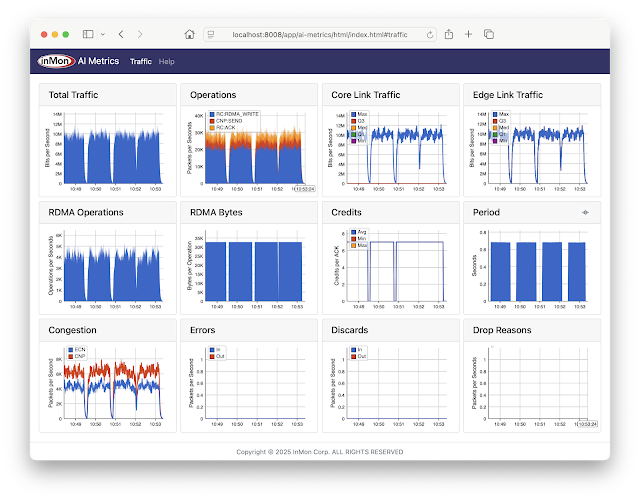

AI Metrics with InfluxDB Cloud

The InfluxDB AI Metrics dashboard shown above tracks performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The metrics include:

- Total Traffic: Total traffic entering fabric

- Operations: Total RoCEv2 operations broken out by type

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

- RDMA Bytes: Average RDMA operation size

- Credits: Average number of credits in RoCEv2 acknowledgements

- Period: Detected period of compute / exchange activity on fabric (in this case just over 0.5 seconds)

- Congestion: Total ECN / CNP congestion messages

- Errors: Total ingress / egress errors

- Discards: Total ingress / egress discards

- Drop Reasons: Packet drop reasons

Note: InfluxDB Cloud has a free service tier that can be used to test this example.

Save the following compose.yml file on a system running Docker.

```yaml
configs:
  config.telegraf:
    content: |
      [agent]
        interval = '15s'
        round_interval = true
        omit_hostname = true
      [[outputs.influxdb_v2]]
        urls = ['https://<INFLUXDB_CLOUD_INSTANCE>.cloud2.influxdata.com']
```

Continue reading

AI network performance monitoring using containerlab

AI Metrics is available on GitHub. The application provides performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The screen capture is from a containerlab topology that emulates an AI compute cluster connected by a leaf and spine network. The metrics include:

- Total Traffic: Total traffic entering fabric

- Operations: Total RoCEv2 operations broken out by type

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

- RDMA Bytes: Average RDMA operation size

- Credits: Average number of credits in RoCEv2 acknowledgements

- Period: Detected period of compute / exchange activity on fabric (in this case just over 0.5 seconds)

- Congestion: Total ECN / CNP congestion messages

- Errors: Total ingress / egress errors

- Discards: Total ingress / egress discards

- Drop Reasons: Packet drop reasons

Note: Clicking on peaks in the charts shows values at that time.

This article gives step-by-step instructions to run the demonstration.

```shell
git clone https://github.com/sflow-rt/containerlab.git
```

Download the sflow-rt/containerlab project from GitHub.

```shell
git clone https://github.com/sflow-rt/containerlab.git
cd containerlab
./run-clab
```

Run the above commands Continue reading

AI Metrics with Grafana Cloud

The Grafana AI Metrics dashboard shown above tracks performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The metrics include:

- Total Traffic: Total traffic entering fabric

- Operations: Total RoCEv2 operations broken out by type

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

- RDMA Bytes: Average RDMA operation size

- Credits: Average number of credits in RoCEv2 acknowledgements

- Period: Detected period of compute / exchange activity on fabric (in this case just over 0.5 seconds)

- Congestion: Total ECN / CNP congestion messages

- Errors: Total ingress / egress errors

- Discards: Total ingress / egress discards

- Drop Reasons: Packet drop reasons

Note: Grafana Cloud has a free service tier that can be used to test this example.

Multi-vendor support for dropped packet notifications

The sFlow Dropped Packet Notification Structures extension was published in October 2020. Extending sFlow to provide visibility into dropped packets offers significant benefits for network troubleshooting, providing real-time, network-wide visibility into the specific packets that were dropped as well as the reason each packet was dropped. This visibility instantly reveals the root cause of drops and the impacted connections. Packet discard records complement sFlow's existing counter polling and packet sampling mechanisms and share a common data model so that all three sources of data can be correlated. For example, packet sampling reveals the top consumers of bandwidth on a link, helping to get to the root cause of congestion-related packet drops reported for the link.
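As an illustration of that correlation, the following Python sketch takes decoded packet samples and drop notifications, keeps only the links that reported drops, and ranks the sources on each of those links by estimated bytes (sampled bytes multiplied by the sampling rate). The record layout (link, src, bytes, sampling_rate, and reason fields) is a hypothetical simplification, not the sFlow wire format or any collector's API.

```python
from collections import Counter, defaultdict


def top_talkers_on_dropping_links(flow_samples, drop_events, top_n=3):
    """Rank sources by estimated bytes on each link that reported drops."""
    dropping = {d["link"] for d in drop_events}
    bytes_by_link = defaultdict(Counter)
    for s in flow_samples:
        if s["link"] in dropping:
            # each sampled packet represents sampling_rate packets on the wire
            bytes_by_link[s["link"]][s["src"]] += s["bytes"] * s["sampling_rate"]
    return {link: srcs.most_common(top_n) for link, srcs in bytes_by_link.items()}
```

Because counters, samples, and drop records share the same link identity in this model, the join is a simple set lookup; a real collector would key on agent address and interface index.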

Today the following network operating systems include support for the drop notification extension in their sFlow agent implementations:

- Arista: Dropped packet notifications with Arista Networks

- Cisco: Dropped packet notifications with Cisco 8000 Series Routers

- Linux: Linux as a network operating system; Using sFlow to monitor dropped packets

- NVIDIA: NVIDIA Cumulus Linux 5.11 for AI / ML

- VyOS: VyOS 1.4 LTS released

Two additional sFlow dropped packet notification implementations are in the pipeline and should be available later this year:

- SONiC The Switch Continue reading

AI Metrics with Prometheus and Grafana

The Grafana AI Metrics dashboard shown above tracks performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The metrics include:

- Total Traffic: Total traffic entering fabric

- Operations: Total RoCEv2 operations broken out by type

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

- RDMA Bytes: Average RDMA operation size

- Credits: Average number of credits in RoCEv2 acknowledgements

- Period: Detected period of compute / exchange activity on fabric (in this case just over 0.5 seconds)

- Congestion: Total ECN / CNP congestion messages

- Errors: Total ingress / egress errors

- Discards: Total ingress / egress discards

- Drop Reasons: Packet drop reasons

This article gives step-by-step instructions to set up the dashboard in a production environment.

```shell
git clone https://github.com/sflow-rt/prometheus-grafana.git
sed -i -e 's/prometheus/ai-metrics/g' prometheus-grafana/env_vars
./prometheus-grafana/start.sh
```

The easiest way to get started is to use Docker (see Deploy real-time network dashboards using Docker compose) and deploy the sflow/ai-metrics image, which bundles the AI Metrics application, to generate metrics.

```yaml
scrape_configs:
  - job_name: 'sflow-rt-ai-metrics'
    metrics_path: /app/ai-metrics/scripts/metrics.js/prometheus/txt
    scheme:
```

Continue reading

Dropped packet notifications with Cisco 8000 Series Routers

The availability of the Cisco IOS XR Release 25.1.1 brings sFlow dropped packet notification support to Cisco 8000 series routers, making it easy to capture and analyze packets dropped at router ingress, aiding in understanding blocked traffic types, identifying potential security threats, and optimizing network performance.

sFlow Configuration for Traffic Monitoring and Analysis describes the steps to enable sFlow and configure packet sampling and interface counter export from a Cisco 8000 Series router to a remote sFlow analyzer.

Note: Devices using NetFlow or IPFIX must transition to sFlow for regular sampling before utilizing the dropped packet feature, ensuring compatibility and consistency in data analysis.

```
Router(config)#monitor-session monitor1
Router(config)#destination sflow EXP-MAP
Router(config)#forward-drops rx
```

Configure a monitor-session with the new destination sflow option to export dropped packet notifications (which include ingress interface, drop reason, and header of dropped packet) to the configured sFlow analyzer.

Cisco lists the following benefits of streaming dropped packets in the configuration guide:

- Enhanced Network Visibility: Captures and forwards dropped packets to an sFlow collector, providing detailed insights into packet loss and improving diagnostic capabilities.

- Comprehensive Analysis: Allows for simultaneous analysis of regular and dropped packet flows, offering a holistic view of network performance.

- Troubleshooting: Empowers Continue reading

Comparing AI / ML activity from two production networks

AI Metrics describes how to deploy the open source ai-metrics application. The application provides performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The screen capture from the article (above) shows results from a simulated 48,000 GPU cluster. This article goes beyond simulation to demonstrate the AI Metrics dashboard by comparing live traffic seen in two production AI clusters.

Cluster 1

This cluster consists of 250 GPUs connected via 100G ports to a single large switch. The results are consistent with the simulation in the original article. In this case there is no Core Link Traffic because the cluster consists of a single switch. The Discards chart shows a burst of Out (egress) discards, and the Drop Reasons chart gives the reason as ingress_vlan_filter. The Total Traffic, Operations, Edge Link Traffic, and RDMA Operations charts all show a transient drop in throughput coincident with the discard spike. Further details of the dropped packets, such as source/destination address, operation, ingress / egress port, queue pair (QP), etc. can be extracted from the sFlow Dropped Packet Notifications that are populating Continue reading

Capture to pcap file using sflowtool

Replay pcap files using sflowtool describes how to capture sFlow datagrams using tcpdump and replay them in real time using sflowtool. However, using tcpdump for the capture has the downside of requiring root privileges. A recent update to sflowtool now makes it possible to use sflowtool to capture sFlow datagrams in tcpdump pcap format without the need for root access.

```shell
docker run --rm -p 6343:6343/udp sflow/sflowtool -M > sflow.pcap
```

Either compile the latest version of sflowtool or, as shown above, use Docker to run the pre-built sflow/sflowtool image. The -M option writes each received UDP datagram, in full, to standard output. In either case, type Ctrl+C to end the capture.
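Because the capture is written in tcpdump pcap format, it can be processed with ordinary pcap tooling. As a sketch of what that involves, the Python function below walks the record headers of a classic libpcap file (a 24-byte global header followed by a 16-byte header per record) using only the struct module. It assumes the classic pcap layout rather than pcapng, and returns each record's raw bytes without interpreting the link-layer framing that sflowtool writes; the function name read_pcap() is hypothetical.

```python
import struct

# magic number bytes -> struct byte order (microsecond and nanosecond variants)
PCAP_MAGIC = {
    b"\xd4\xc3\xb2\xa1": "<",
    b"\xa1\xb2\xc3\xd4": ">",
    b"\x4d\x3c\xb2\xa1": "<",
    b"\xa1\xb2\x3c\x4d": ">",
}


def read_pcap(data):
    """Yield (seconds, fraction, payload) for each record in a classic pcap file."""
    try:
        order = PCAP_MAGIC[data[:4]]
    except KeyError:
        raise ValueError("not a classic pcap file") from None
    offset = 24  # skip the global header
    while offset + 16 <= len(data):
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack_from(order + "IIII", data, offset)
        offset += 16
        yield ts_sec, ts_frac, data[offset:offset + incl_len]
        offset += incl_len
```

For example, `sum(1 for _ in read_pcap(open("sflow.pcap", "rb").read()))` counts the captured records.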

AI Metrics

AI Metrics is available on GitHub. The application provides performance metrics for AI/ML RoCEv2 network traffic, for example, large scale CUDA compute tasks using NVIDIA Collective Communication Library (NCCL) operations for inter-GPU communications: AllReduce, Broadcast, Reduce, AllGather, and ReduceScatter. The dashboard shown above is from a simulated network of 1,000 switches, each with 48 access ports connected to hosts. Activity occurs in a 256ms on / off cycle to emulate an AI learning run. The metrics include:

- Total Traffic: Total traffic entering fabric

- Operations: Total RoCEv2 operations broken out by type

- Core Link Traffic: Histogram of load on fabric links

- Edge Link Traffic: Histogram of load on access ports

- RDMA Operations: Total RDMA operations

- RDMA Bytes: Average RDMA operation size

- Credits: Average number of credits in RoCEv2 acknowledgements

- Period: Detected period of compute / exchange activity on fabric (in this case just over 0.5 seconds)

- Congestion: Total ECN / CNP congestion messages

- Errors: Total ingress / egress errors

- Discards: Total ingress / egress discards

- Drop Reasons: Packet drop reasons
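The Period metric implies detecting a repeating cycle in the traffic. One common way to do that, shown here as a Python sketch and not necessarily the method AI Metrics uses, is to compute the autocorrelation of the traffic signal and pick the lag with the strongest peak after the initial peak at lag zero. With a 256ms on / off cycle sampled every millisecond, the detected period is 512ms, i.e. just over 0.5 seconds. The function name detect_period() is hypothetical.

```python
def detect_period(samples, interval):
    """Estimate the dominant period (in seconds) of an evenly sampled signal.

    Returns None if no repeating pattern is found within half the window.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]
    max_lag = n // 2
    acf = [sum(x[i] * x[i + lag] for i in range(n - lag)) for lag in range(max_lag + 1)]
    # skip the peak around lag 0: start searching once the autocorrelation goes negative
    start = next((lag for lag in range(1, max_lag + 1) if acf[lag] < 0), None)
    if start is None:
        return None
    best = max(range(start, max_lag + 1), key=lambda lag: acf[lag])
    return best * interval


# 256ms on / 256ms off activity, sampled every millisecond for about 2 seconds
signal = [1.0 if (t // 256) % 2 == 0 else 0.0 for t in range(2048)]
print(detect_period(signal, 0.001))
```

Searching only after the first negative autocorrelation value avoids mistaking the broad peak around lag zero for a period.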

Note: Clicking on peaks in the charts shows values at that time.

This article gives step-by-step instructions to run the AI Metrics application in a production environment and integrate Continue reading

Replay pcap files using sflowtool

It can be very useful to capture sFlow telemetry from production networks so that it can be replayed later to perform off-line analysis, or to develop or evaluate sFlow collection tools.

```shell
sudo tcpdump -i any -s 0 -w sflow.pcap udp port 6343
```

Run the command above on the system you are using to collect sFlow data (if you aren't yet collecting sFlow, see Agents for suggested configuration settings). Type Control-C to end the capture after 5 to 10 minutes. Copy the resulting sflow.pcap file to your laptop.

```shell
docker run --rm -it -v $PWD/sflow.pcap:/sflow.pcap sflow/sflowtool \
  -r /sflow.pcap -P 1
```

Either compile the latest version of sflowtool or, as shown above, use Docker to run the pre-built sflow/sflowtool image. The -P (Playback) option replays the trace in real-time and displays the contents of each sFlow message. Running sflowtool using Docker provides additional examples, including converting the sFlow messages into JSON format for processing by a Python script.
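The JSON route can be sketched in Python. The helpers below split sflowtool's JSON output into individual documents (whether they arrive one per line or pretty-printed) and count them by one field. The helper names are hypothetical, and the field name agent is an assumption about the JSON structure; check the actual output of your sflowtool version, and its usage text for the JSON output option, before relying on it.

```python
import json
from collections import Counter


def iter_json(text):
    """Yield each JSON document from text holding one or more concatenated documents."""
    decoder = json.JSONDecoder()
    pos = 0
    while True:
        # raw_decode() does not skip leading whitespace, so skip it here
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            return
        obj, pos = decoder.raw_decode(text, pos)
        yield obj


def count_by(records, key="agent"):
    """Count records by the value of one field (missing values count as 'unknown')."""
    return Counter(r.get(key, "unknown") for r in records)
```

A script that reads sflowtool's JSON output from standard input could then print `count_by(iter_json(sys.stdin.read())).most_common()` to list the busiest agents in the capture.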

```shell
docker run --rm -it -v $PWD/sflow.pcap:/sflow.pcap sflow/sflowtool \
  -r /sflow.pcap -f 192.168.4.198/6343 -P 1
```

The -f (forwarding) option takes an IP address and UDP port number as arguments, in this Continue reading

Topology aware flow analytics with NVIDIA NetQ

NVIDIA Cumulus Linux 5.11 for AI / ML describes how NVIDIA 400/800G Spectrum-X switches combined with the latest Cumulus Linux release deliver enhanced real-time telemetry that is particularly relevant to the AI / machine learning workloads that Spectrum-X switches are designed to handle.

This article shows how to extract topology from an NVIDIA fabric in order to perform advanced fabric-aware analytics, for example: detecting flow collisions, tracing flow paths, and de-duplicating traffic.

In this example, we will use NVIDIA NetQ, a highly scalable, modern network operations toolset that provides visibility, troubleshooting, and validation of your Cumulus and SONiC fabrics in real time.

```shell
netq show lldp json
```

For example, the NetQ Link Layer Discovery Protocol (LLDP) service simplifies the task of gathering neighbor data from switches in the network, and the json option makes the output easy to process with a Python script such as lldp-rt.py.
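A script like lldp-rt.py has to turn per-switch neighbor records into a list of links. The Python sketch below shows that core step under stated assumptions: the input field names (hostname, ifname, peer_hostname, peer_ifname) are hypothetical stand-ins for whatever netq show lldp json actually returns, and the output assumes the node1/port1/node2/port2 link layout of sFlow-RT's topology JSON. Because LLDP reports each link from both ends, the two views are collapsed into a single entry.

```python
def lldp_to_topology(neighbors):
    """Convert LLDP neighbor records into an sFlow-RT style topology dict.

    Each physical link is reported by the switches at both ends, so the
    two views are collapsed into a single link entry.
    """
    links = {}
    seen = set()
    for n in neighbors:
        end_a = (n["hostname"], n["ifname"])
        end_b = (n["peer_hostname"], n["peer_ifname"])
        key = tuple(sorted((end_a, end_b)))  # same key for both directions
        if key in seen:
            continue
        seen.add(key)
        (node1, port1), (node2, port2) = key
        links[f"L{len(links) + 1}"] = {
            "node1": node1, "port1": port1, "node2": node2, "port2": port2,
        }
    return {"links": links}
```

Serializing the result with json.dumps() gives a document that could be loaded into sFlow-RT; refer to lldp-rt.py for how the published script handles that step.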

The simplest way to try sFlow-RT is to use the pre-built sflow/topology Docker image that packages sFlow-RT with additional applications that are useful for monitoring network topologies.

```shell
docker run -p 6343:6343/udp -p 8008:8008 sflow/topology
```

Configure Cumulus Linux to stream sFlow telemetry to sFlow-RT on UDP port 6343 (the default for Continue reading

SC24 Over 10 Terabits per Second of WAN Traffic

The SC24 WAN Stress Test chart shows 10.3 terabits per second of WAN traffic to the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24), held this week in Atlanta. The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

SC24 Real-time RoCEv2 traffic visibility describes a demonstration of wide area network bulk data transmission using RDMA over Converged Ethernet (RoCEv2) flows of the kind typically seen in AI/ML data centers. In the example, sustained transmissions of 3.2Tbits/second from sources geographically distributed around the United States were demonstrated.

SC24 Dropped packet visibility demonstration shows how the sFlow data model integrates three telemetry streams: counters, packet samples, and packet drop notifications. Each type of data is useful on its own, but together they provide the comprehensive network-wide observability needed to drive automation. Real-time network visibility is particularly relevant to AI / ML data center networks, where congestion and dropped packets can result in serious performance degradation. In this screen capture you can see multiple 400Gbits/s RoCEv2 flows.

SC24 SCinet traffic describes the architecture of the real-time monitoring system used to Continue reading