Ulukhaktok: Community Networking in the (Far) North

In June of this year, I had the great privilege of traveling to Ulukhaktok, NWT, Canada to talk to community members about the possibility of building a new, local Internet service network. As a result of these meetings, and of the incredibly driven individuals I met in Ulu, by this time next year Ulukhaktok will be the proud owner of the northernmost community network in the world.

I left Washington, D.C. in the throes of summer – upper 80-degree weather and so humid you’d feel wet the second you stepped outside. Two days and five planes later I was in Ulukhaktok, a community of about 400 people on the 70th parallel. Summer there is a little different, and I explored the community amidst summer snow and 24-hour days.

I spent four days getting to know the community, and its deep sense of pride was obvious right away. Ulu is a beautiful, U-shaped town on the edge of the Arctic Ocean. It’s filled with people who will stop when they see a stranger, smile, and ask who you are and what you’re doing. And every time someone stopped, I told them about the Internet Society, Continue reading

Using Automation vs Making Automation

First published in Packet Pushers Human Infrastructure Magazine 58 – you can subscribe here.

Another side of the debate around programming, automation and orchestration. Are you a user or a maker?

Platforms are 80/20

For the last 20 or 30 years, network management software has followed an 80/20 approach to customisation. On the first […]

The post Using Automation vs Making Automation appeared first on EtherealMind.

Innovations in CBD And Hemp

Cannabidiol (CBD) and hemp have been known to be used in health supplements for humans and animals, as well as in various beauty products. Since the legalization of marijuana in many U.S. states, innovations in CBD and hemp products have become an acceptable and highly effective alternative to synthetic drugs for the treatment of many conditions including:

- Chronic pain

- Anxiety and depression

- PTSD

- Arthritis

- Sleep issues

While more studies need to be done, it is believed that because CBD and hemp oil may help reduce inflammation in the body, they may also help treat and prevent health issues such as heart disease and diabetes.

Innovations in CBD and hemp products for the treatment of dogs, specifically, may help to:

- Reduce aggression

- Relieve pain

- Improve skin and coat appearance

- Help reduce seizures

While CBD and hemp products do not offer a miracle cure, they have shown great promise in helping people who do not respond well to traditional drugs, or who simply dislike the negative side effects of prescription drugs used to treat certain medical conditions.

Keep in mind that most of the innovations in CBD and hemp are not designed to make you high, Continue reading

Introducing Certificate Transparency Monitoring

Today we’re launching Certificate Transparency Monitoring (my summer project as an intern!) to help customers spot malicious certificates. If you opt into CT Monitoring, we’ll send you an email whenever a certificate is issued for one of your domains. We crawl all public logs to find these certificates quickly. CT Monitoring is available now in public beta and can be enabled in the Crypto Tab of the Cloudflare dashboard.
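To make the idea concrete, here is a rough sketch of the matching step described above: given certificates seen in public logs, work out which ones cover a monitored domain and would therefore trigger an email. This is not Cloudflare's implementation. The entry layout (`dns_names`, `issuer`) and the function names are hypothetical, and real log crawling and certificate parsing are left out entirely.

```python
def covers(cert_name, domain):
    """Return True if a certificate DNS name is the domain or one of its subdomains."""
    cert_name = cert_name.lower().rstrip(".")
    domain = domain.lower().rstrip(".")
    # Treat a wildcard name such as *.example.com as covering example.com's zone.
    if cert_name.startswith("*."):
        cert_name = cert_name[2:]
    return cert_name == domain or cert_name.endswith("." + domain)


def find_alerts(log_entries, monitored_domains):
    """Pair each monitored domain with the logged certificates that cover it.

    log_entries is a hypothetical, already-parsed list of dicts with
    'dns_names' (list of str) and 'issuer' (str) keys.
    """
    alerts = []
    for entry in log_entries:
        for domain in monitored_domains:
            if any(covers(name, domain) for name in entry["dns_names"]):
                alerts.append((domain, entry["issuer"]))
    return alerts


# Hypothetical sample data standing in for crawled log entries.
sample_entries = [
    {"dns_names": ["blog.example.com"], "issuer": "Example CA 1"},
    {"dns_names": ["notexample.com"], "issuer": "Example CA 2"},
    {"dns_names": ["*.example.com"], "issuer": "Example CA 3"},
]
alerts = find_alerts(sample_entries, ["example.com"])
```

Matching on a leading dot (`"." + domain`) rather than a plain suffix keeps look-alike names such as `notexample.com` from being reported as belonging to `example.com`.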

Background

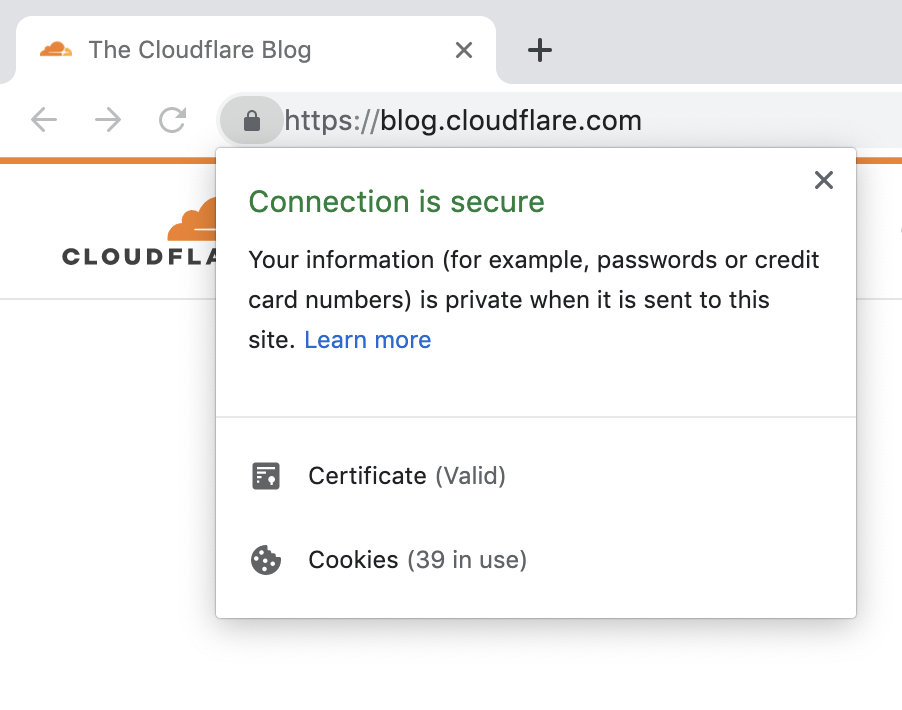

Most web browsers include a lock icon in the address bar. This icon is actually a button — if you’re a security advocate or a compulsive clicker (I’m both), you’ve probably clicked it before! Here’s what happens when you do just that in Google Chrome:

This seems like good news. The Cloudflare blog has presented a valid certificate, your data is private, and everything is secure. But what does this actually mean?

Certificates

Your browser is performing some behind-the-scenes work to keep you safe. When you request a website (say, cloudflare.com), the website should present a certificate that proves its identity. This certificate is like a stamp of approval: it says that your connection is secure. In other words, the certificate proves that content was not intercepted or Continue reading

Kernel of Truth season 2 episode 12: Innovation in the data center

Subscribe to Kernel of Truth on iTunes, Google Play, Spotify, Cast Box and Stitcher!

Click here for our previous episode.

In this podcast we have an in-depth conversation about the different types and levels of innovation in the data center and where we see it going. Spiderman aka Rama Darbha and host Brian O’Sullivan are joined by a new guest to the podcast, VP of Marketing Ami Badani. They share that while innovation in the data center doesn’t appear sexy to anyone but network engineers, in reality there has been a huge paradigm shift in the way data centers have been built and operated over the last 3 years. So what does that mean? How is automation involved in this conversation? Listen here to find out.

Guest Bios

Brian O’Sullivan: Brian currently heads Product Management for Cumulus Linux. For 15 or so years he’s held software Product Management positions at Juniper Networks as well as other smaller companies. Once he saw the change that was happening in the networking space, he decided to join Cumulus Networks to be a part of the open networking innovation. When not working, Brian is a voracious reader and has held a variety of jobs, including Continue reading

IPv6 Buzz 032: LinkedIn Recruits IPv6 – Challenges And Lessons Learned

LinkedIn has been working with IPv6 for years. On today's IPv6 Buzz episode, LinkedIn engineer Franck Martin discusses the technical and cultural challenges of adoption, explores why the company engaged with IPv6, and shares lessons to help others with their own deployments.

The post IPv6 Buzz 032: LinkedIn Recruits IPv6 – Challenges And Lessons Learned appeared first on Packet Pushers.

Save the Date: 4th Summit on Community Networks in Africa

The 4th Summit on Community Networks (CNs) in Africa will take place in Dodoma, Tanzania from 28 October to 2 November 2019. The Summit hopes to promote the creation and growth of CNs, increase collaboration between CN operators in the region, and provide an opportunity for them to engage with other stakeholders.

The main activities planned include:

- Training Workshop: 28-29 October

- CN Summit Plenary: 30-31 October

- Site Visit to Kondoa Community Network: 1-2 November

The event is targeted at CN operators, policy makers, researchers, evangelists, sponsors, and related networks such as community radio.

Last year over 100 participants from 20 countries, 13 of them African, gathered in Wild Lubanzi, in one of the deepest rural areas of the Eastern Cape. The 3rd Summit on CNs in Africa was organized by the Internet Society, Zenzeleni Networks NPC, and APC from 2-7 September 2018. For the approximately 40 participants from surrounding communities, the Summit was their first international conference – or conference of any kind. It was exciting to see them absorb everything and feel proud of their neighbors in Mankosi, home of the Zenzeleni project, one of many community networks in Africa.

CNs offer local solutions to the connectivity Continue reading

REST API 2. Basics cheat sheet (Ansible, Bash, Postman, and Python) for POST/DELETE using NetBox and Docker as examples

Hello my friend,

In the previous blogpost, we started an exciting journey into the world of REST APIs, where you learned how to collect information using the GET method. Today you will learn how to create objects using the POST method and remove them using DELETE.

Disclaimer

This article is a continuation of the previous one. You should start with that to get the full picture.

What are we going to test?

You will learn how to use two requests:

- POST for adding new information

- DELETE for removing the entries

As you might remember, the interaction with the REST API is described by the CRUD model, which stands for Create, Read, Update, and Delete. In this model, the HTTP POST method represents the Create operation and DELETE represents the Delete operation.

To set the context, Digital Ocean NetBox and Docker are the applications that we will manage over the REST API.
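As a quick illustration of the CRUD mapping above, here is a minimal Python sketch that builds (but does not send) POST and DELETE requests using only the standard library. Treat the details as assumptions rather than the setup used in this article: the URL and port, the payload fields, the `Token` authorization scheme and the choice of PUT for Update are all placeholders for illustration.

```python
import json
from urllib.request import Request

# CRUD operation -> HTTP method. PUT for "update" is an assumption;
# some APIs use PATCH for partial updates instead.
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",
    "delete": "DELETE",
}


def build_request(operation, url, payload=None, token=None):
    """Build a urllib Request for a CRUD operation without sending it."""
    headers = {"Accept": "application/json"}
    data = None
    if payload is not None:
        # The request body is sent as JSON-encoded bytes.
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    if token:
        # Assumed token-style authorization header.
        headers["Authorization"] = f"Token {token}"
    return Request(url, data=data, headers=headers, method=CRUD_TO_HTTP[operation])


# Hypothetical endpoint and object, for illustration only.
BASE_URL = "http://localhost:8000/api/dcim/sites/"

create_req = build_request("create", BASE_URL, {"name": "lab", "slug": "lab"}, token="PLACEHOLDER")
delete_req = build_request("delete", BASE_URL + "1/", token="PLACEHOLDER")

print(create_req.get_method(), create_req.full_url)
print(delete_req.get_method(), delete_req.full_url)
```

To actually send one of these, you would pass it to `urllib.request.urlopen` against a reachable instance. Note that the DELETE request carries no body: the object to remove is identified by the URL alone.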

Software version

The following Continue reading

AirPods Review

TL;DR - five out of ten. Maybe.

The post AirPods Review appeared first on EtherealMind.

Real Robots That Look Or Act Like Humans

Technology has been advancing by leaps and bounds. Currently, scientists and tech experts are designing artificial intelligence (AI)-programmed robots that look and act like humans. While they’re not a standard in our society quite yet, this social robot technology is real and has advanced drastically over the last few decades, to the point where these human look-alike robots, called androids, are springing up all over the place – even in Whitney Cummings’ latest Netflix standup special!

These robots are being developed to someday take over jobs in retail, hospitality, health care, child and elderly care, and even policing. But are these android robots as human-looking and human-acting as scientists would have you believe? Let’s take a closer look and see if they really are as human as their real-life counterparts.

Superficial Robots that Resemble … Us

Scientists have built AI-driven robots that have two arms, two legs, and a complete and realistic face with hair and facial expressions. Some of them can smile or frown, sit or stand, and do a number of human jobs.

From photographs and YouTube videos, many of these robots look like humans wearing a wig. However, a closer view leaves them resembling life-sized Continue reading