Intel, Google, Other US Tech Companies Ban Huawei Following Trump Blacklist

Both U.S. companies and Huawei will take revenue hits as a result of the blacklisting.

Out and about this week

A reminder to my readers that I will be speaking at Interop19 this week in Las Vegas on Wednesday. Denise Donohue and I are speaking about finding smart people:

I will also be speaking at CHINOG in Chicago, Illinois, on Thursday:

And interacting with some other folks on a panel about getting started in the IETF.

Mellanox Launches Ethernet Cloud Fabric Based on 400G Switches

“We get asked a lot: ‘Do your switches compare to [Broadcom] Trident or Tomahawk?’ The short...

The Week in Internet News: San Francisco Bans Use of Facial Recognition by Police

No cameras, please: The San Francisco Board of Supervisors has voted to ban the use of facial recognition technologies by the police and other agencies over privacy and civil liberties concerns, the New York Times reports. Even though police across the country have used the technology to identify criminals, facial recognition has raised fears of abuse and of turning the country into a police state.

Broadband in space: SpaceX had planned to launch a rocket containing 60 satellites designed to deliver broadband service, but the company delayed the launch a couple of times, first because of wind and then because the satellites need a software update, ExtremeTech reports. The launch was supposed to be a first step toward Elon Musk’s plan to create a space-based broadband network.

Broadband in drones: As an alternative to satellite broadband and other efforts, SoftBank is looking at ways to provide Internet service by drone, the L.A. Times says. The Japanese telecom carrier recently announced it is working with drone maker AeroVironment to build a drone capable of “flying to the stratosphere, hovering around an area for months and serving as a floating cell tower to beam internet to users on Earth.”

Just Continue reading

VMware to Showcase NSX Service Mesh with Enterprise PKS at KubeCon EMEA

Go Beyond Microservices with NSX Service Mesh

Based on Istio and Envoy, VMware NSX Service Mesh provides discovery, visibility, control, and security of end-to-end transactions for cloud native applications. Announced at KubeCon NA 2018, NSX Service Mesh is currently in private Beta and interested users may sign up here.

The design for NSX Service Mesh extends beyond microservices to include end-users accessing applications, data stores, and sensitive data elements. NSX Service Mesh also introduces federation for containerized applications running on multiple VMware Kubernetes environments, across on-premises and public clouds. This enables improved operations, security, and visibility for containerized applications running on clusters across multiple on-premises and public clouds – with centrally defined and managed configuration, visuals, and policies.

Enterprises can leverage a number of different capabilities including:

- Traffic management

- mTLS encryption

- Application SLO policies and resiliency controls

- Progressive rollouts

- Automated remediation workflows

Achieve Operational Consistency with Federated Service Mesh

At Google Cloud Next, VMware and Google demonstrated how a hybrid cloud solution can use a federated service mesh across Kubernetes clusters on VMware Enterprise PKS and GKE. This highlighted one example deployment for how enterprise teams can achieve consistent operations and security for cloud native applications and data.

To learn Continue reading

One more thing… new Speed Page

Congratulations on making it through Speed Week. In the last week, Cloudflare has: described how our global network speeds up the Internet; launched an HTTP/2 prioritisation model that will improve web experiences on all browsers; launched an image resizing service which will deliver the optimal image to every device; optimized live video delivery; detailed how to stream progressive images so that they render twice as fast, using the flexibility of our new HTTP/2 prioritisation model; and, finally, prototyped a new over-the-wire format for JavaScript that could improve application start-up performance, especially on mobile devices. As a bonus, we’re also rolling out one more new feature: “TCP Turbo” automatically chooses the TCP settings to further accelerate your website.

As a company, we want to help every one of our customers improve web experiences. The growth of Cloudflare, along with the increase in features, has often made simple questions difficult to answer:

- How fast is my website?

- How should I be thinking about performance features?

- How much faster would the site be if I were to enable a particular feature?

This post will describe the exciting changes we have made to the Speed Page on the Cloudflare dashboard to give Continue reading

Kubernetes and VMware Enterprise PKS Networking & Security Operations with NSX-T Data Center

The focus of this blog is VMware Enterprise PKS and Kubernetes Operations with NSX-T Data Center. For the sake of completeness, I will start with the high-level NSX-T deployment steps without going too much into the details.

This blog does not focus on NSX-T Architecture and Deployment in Kubernetes or Enterprise PKS environments, but it highlights some of those points as needed.

Deploying NSX-T Data Center

Several steps must be completed in NSX-T before deploying Enterprise PKS. At a high level, here are the initial steps of installing NSX-T:

- Download NSX-T Unified Appliance OVA.

- Deploy NSX-T Manager (Starting from NSX-T 2.4, three managers could be deployed with a Virtual IP).

- Add vCenter as a Compute Manager in NSX-T

- Deploy NSX-T Controllers. (Starting from NSX-T 2.4 the controllers are merged with NSX-T manager in a single appliance)

- Deploy one or more pairs of NSX-T Edges with a minimum of Large Size. (Large Size is required by Enterprise PKS; Bare-Metal Edges could be used too.)

- Install NSX Packages on ESXi Hosts

- Create an Overlay and a VLAN Transport Zone.

- Create a TEP IP Pool.

- Add ESXi Hosts as Transport Nodes to the Continue reading

EU election season and securing online democracy

It’s election season in Europe, as European Parliament seats are contested across the European Union by national political parties. With approximately 400 million people eligible to vote, this is one of the biggest democratic exercises in the world - second only to India - and it takes place once every five years.

Over the course of four days, 23-26 May 2019, each of the 28 EU countries will elect a different number of Members of the European Parliament (“MEPs”) roughly mapped to population size and based on a proportional system. The 751 newly elected MEPs (a number which includes the UK’s allocation for the time being) will take their seats in July. These elections are not only important because the European Parliament plays a large role in the EU democratic system, being a co-legislator alongside the European Council, but as the French President Emmanuel Macron has described, these European elections will be decisive for the future of the continent.

Election security: an EU political priority

Political focus on the potential cybersecurity threat to the EU elections has been extremely high, and various EU institutions and agencies have been engaged in a long campaign to drive awareness among EU Member Continue reading

Tech Bytes: UK Retailer Revitalizes In-Store Experience With Silver Peak SD-WAN Platform (Sponsored)

On today’s Tech Bytes, sponsored by Silver Peak, we talk with homeware retailer Dunelm about how they rearchitected their WAN to improve the in-store experience for customers, lower IT costs and boost the bottom line.

The post Tech Bytes: UK Retailer Revitalizes In-Store Experience With Silver Peak SD-WAN Platform (Sponsored) appeared first on Packet Pushers.

Microsoft Azure Networking Slide Deck Is Ready

After a few weeks of venting my frustrations on Twitter, I finally completed the Microsoft Azure Networking slide deck last week and published the related demos on GitHub.

I will use the slide deck in a day-long workshop in Zurich (Switzerland) on June 12th and run a series of live webinar sessions in autumn. If you’re a (paid) subscriber you can already download the slides and it would be great if you’d have time to attend the Zurich workshop – it’s infinitely better to discuss interesting challenges face-to-face than to type questions in a virtual classroom.

RPCValet: NI-driven tail-aware balancing of µs-scale RPCs

RPCValet: NI-driven tail-aware balancing of µs-scale RPCs Daglis et al., ASPLOS’19

Last week we learned about the increased tail-latency sensitivity of microservices-based applications with high RPC fan-outs. Seer uses estimates of queue depths to mitigate latency spikes on the order of 10-100ms, in conjunction with a cluster manager. Today’s paper choice, RPCValet, operates at latencies 3 orders of magnitude lower, targeting reduction in tail latency for services that themselves have service times on the order of a small number of µs (e.g., the average service time for memcached is approximately 2µs).
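As a rough illustration of why high fan-out makes tail latency matter (a back-of-the-envelope sketch, not taken from the paper): if each RPC independently has some small chance of landing in the slow tail, the chance that a request waiting on many parallel RPCs sees at least one slow response grows quickly with the fan-out. The function name, the 1% tail fraction, and the independence assumption below are all illustrative choices.

```python
def prob_request_hits_tail(fanout: int, tail_fraction: float = 0.01) -> float:
    """Probability that at least one of `fanout` parallel RPCs is a tail RPC.

    Assumes (hypothetically) that each RPC independently falls in the slow
    tail with probability `tail_fraction`; real systems are often correlated.
    """
    # P(no RPC is slow) = (1 - p)^n, so P(at least one slow) = 1 - (1 - p)^n
    return 1.0 - (1.0 - tail_fraction) ** fanout


if __name__ == "__main__":
    for fanout in (1, 10, 100):
        share = prob_request_hits_tail(fanout)
        print(f"fan-out {fanout:>3}: {share:.1%} of requests wait on a tail RPC")
```

Under these assumptions, a request with a fan-out of 100 is far more likely than not to wait on at least one 99th-percentile response, which is why per-RPC tail behaviour dominates end-to-end latency.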

The net result of rapid advancements in the networking world is that inter-tier communications latency will approach the fundamental lower bound of speed-of-light propagation in the foreseeable future. The focus of optimization hence will completely shift to efficiently handling RPCs at the endpoints as soon as they are delivered from the network.

Furthermore, the evaluation shows that “RPCValet leaves no significant room for improvement” when compared against the theoretical ideal (it comes within 3-15%). So what we have here is a glimpse of the limits for low-latency RPCs under load. When it’s no longer physically possible to go meaningfully faster, further application-level performance Continue reading

Weekly Top Posts: 2019-05-19

- SDxCentral Weekly Wrap: Thrangrycat Attacks Cisco Switches, Routers, Firewalls

- OpenStack Keeps One Eye on the Prize, One Over Its Shoulder

- HPE Buys Supercomputer Company Cray for $1.3B, Boosts Its HPC Business

- Trump Issues Long-Rumored Executive Order Targeting Chinese Telco Vendors

- Thrangrycat Attacks Cisco Switches, Routers, Firewalls

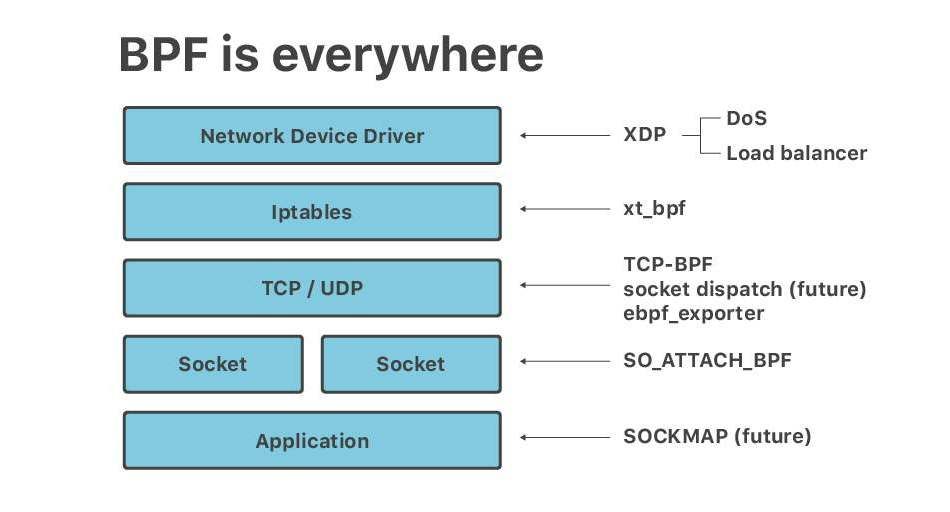

Cloudflare architecture and how BPF eats the world

Recently at Netdev 0x13, the Conference on Linux Networking in Prague, I gave a short talk titled "Linux at Cloudflare". The talk ended up being mostly about BPF. It seems, no matter the question - BPF is the answer.

Here is a transcript of a slightly adjusted version of that talk.

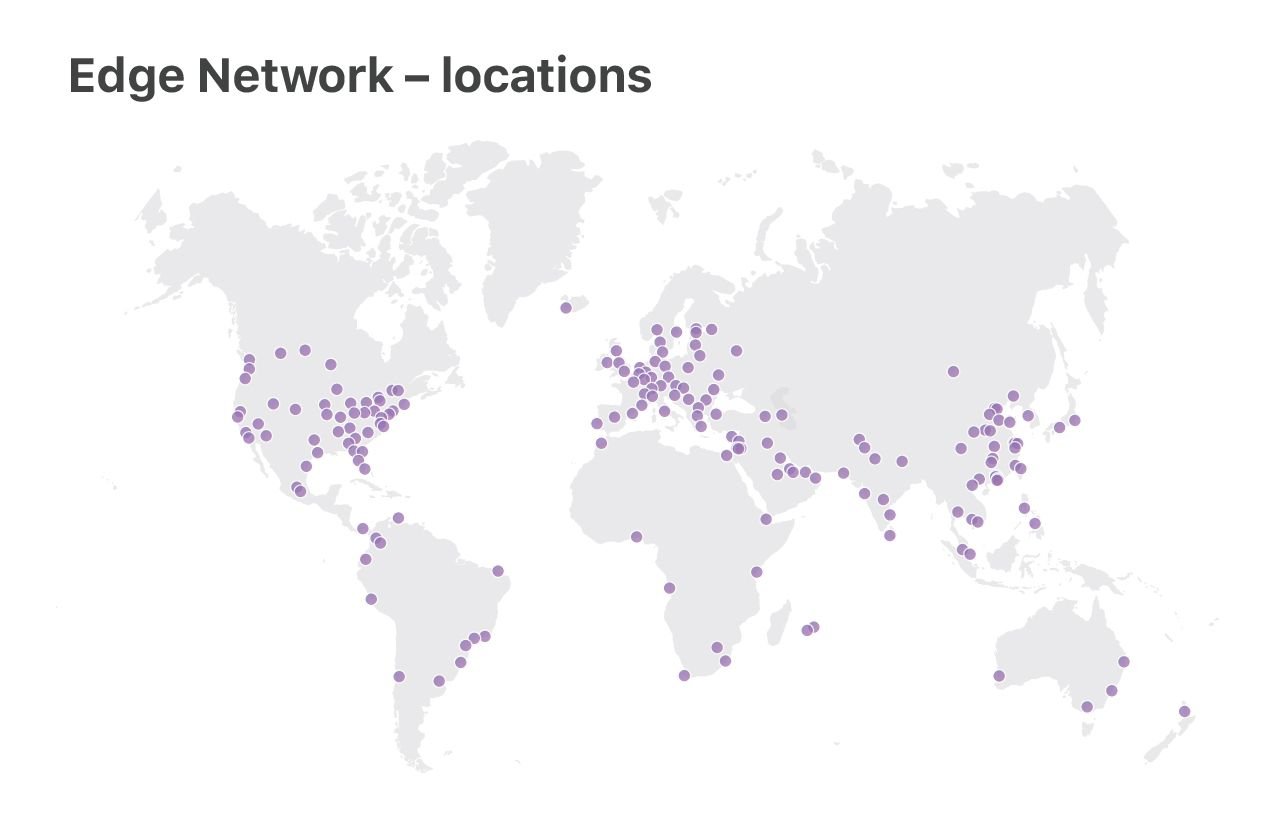

At Cloudflare we run Linux on our servers. We operate two categories of data centers: large "Core" data centers, processing logs, analyzing attacks, computing analytics, and the "Edge" server fleet, delivering customer content from 180 locations across the world.

In this talk, we will focus on the "Edge" servers. It's here where we use the newest Linux features, optimize for performance and care deeply about DoS resilience.

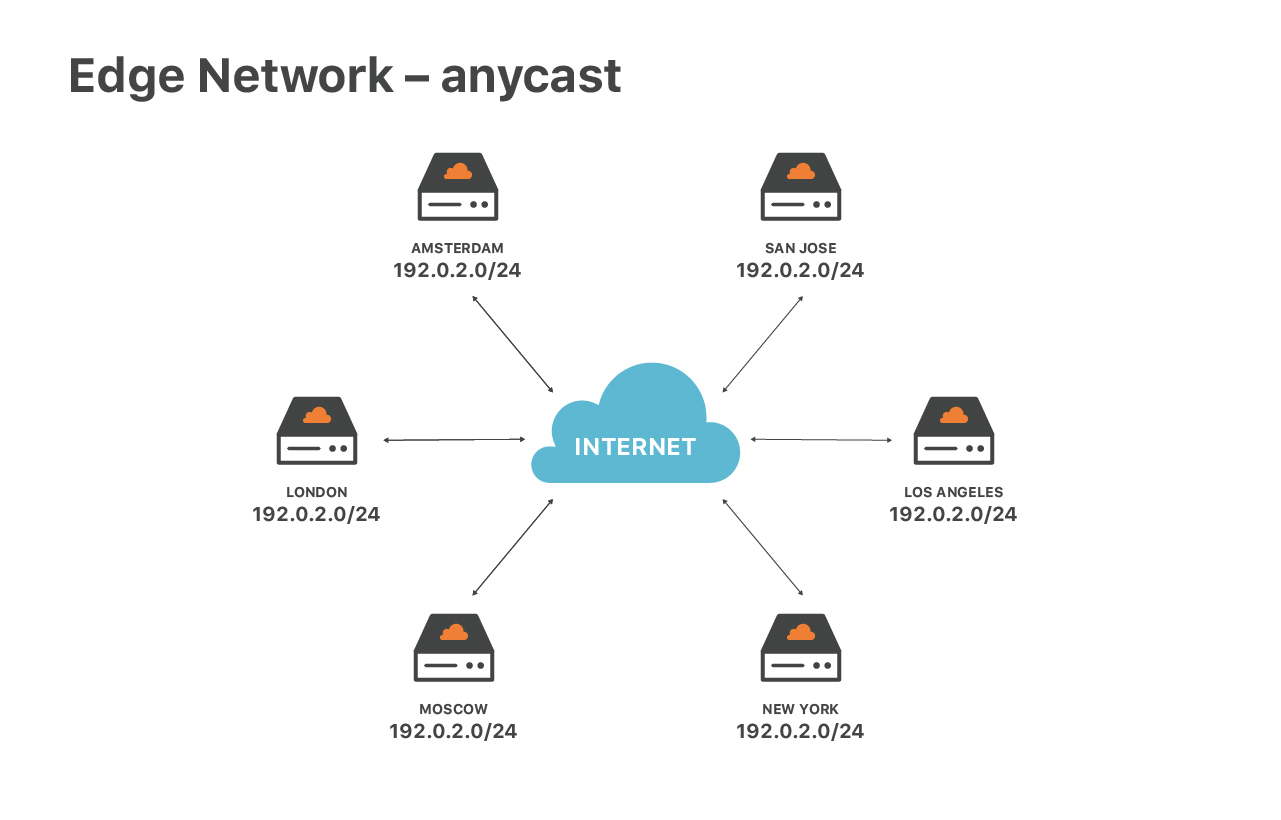

Our edge service is special due to our network configuration - we are extensively using anycast routing. Anycast means that the same set of IP addresses is announced by all our data centers.

This design has great advantages. First, it guarantees the optimal speed for end users. No matter where you are located, you will always reach the closest data center. Then, anycast helps us to spread out DoS traffic. During attacks each of the locations receives a small fraction of Continue reading

KubeCon to Focus on Stability, Extensibility, Stateful Support

“We expect more people to build frameworks on top of Kubernetes,” said Janet Kuo, a software...

Join Cloudflare & Yandex at our Moscow meetup! Присоединяйтесь к митапу в Москве!

Are you based in Moscow? Cloudflare is partnering with Yandex to produce a meetup this month in Yandex's Moscow headquarters. We would love to invite you to join us to learn about the newest in the Internet industry. You'll join Cloudflare's users, stakeholders from the tech community, and Engineers and Product Managers from both Cloudflare and Yandex.

Cloudflare Moscow Meetup

Tuesday, May 30, 2019: 18:00 - 22:00

Location: Yandex - Ulitsa L'va Tolstogo, 16, Moskva, Russia, 119021

Talks will include "Performance and scalability at Cloudflare", "Security at Yandex Cloud", and "Edge computing".

Speakers will include Evgeny Sidorov, Information Security Engineer at Yandex, Ivan Babrou, Performance Engineer at Cloudflare, Alex Cruz Farmer, Product Manager for Firewall at Cloudflare, and Olga Skobeleva, Solutions Engineer at Cloudflare.

Agenda:

18:00 - 19:00 - Registration and welcome cocktail

19:00 - 19:10 - Cloudflare overview

19:10 - 19:40 - Performance and scalability at Cloudflare

19:40 - 20:10 - Security at Yandex Cloud

20:10 - 20:40 - Cloudflare security solutions and industry security trends

20:40 - 21:10 - Edge computing

Q&A

The talks will be followed by food, drinks, and networking.

View Event Details & Register Here »

We'll Continue reading