Cumulus content roundup: February

It’s time to officially unveil our Cumulus content roundup, February edition! In case you missed any of last month’s content, we naturally have you covered with links to it all below. Dig into the latest and greatest resources and news, including two great podcasts that we recommend you queue up and listen to during your commute.

From Cumulus Networks:

How to make CI/CD with containers viable in production: Software-defined infrastructure is no longer a nice-to-have. It’s an absolute must when using modern development approaches such as CI/CD, containers, etc.

Kernel of Truth season 2 episode 1 - EVPN on the host: Guess who’s back? Back again? The real Kernel of Truth is back with season 2, and we’re starting off this season with all things EVPN! This topic is near and dear to Attilla de Groot’s heart, as he talked about it in his recent blog here. He now joins Atul Patel and our host Brian O’Sullivan to talk more about EVPN on the host for multi-tenancy.

BGP: What is it, how can it break, and can Linux BGP fix it?: Border Gateway Protocol is one of the most important protocols on the internet. Linux BGP allows for in-depth monitoring and Continue reading

CenturyLink Threat Research Reemerges as Black Lotus Labs

The threat researchers say there’s no deeper, symbolic meaning behind the cool new name. But it...

Headcount: The Latest Hirings, Firings, and Retirings — March 7, 2019

Verizon makes changes to its board of directors following the retirement of two prominent board...

AT&T Launches New Cybersecurity Division, Joins Global Telco Security Alliance

The new security division will integrate and automate Alien Lab’s threat intelligence into a...

Syniverse, Tata Global IPX Interconnect Deal Preps for 5G, IoT

The partnership includes “operational interlocks” for single accountability for troubleshooting...

Vodafone UK 5G Plans Barge Past Rivals

The U.K. operator goes three better than rival EE with plans to launch 5G in 19 markets this year.

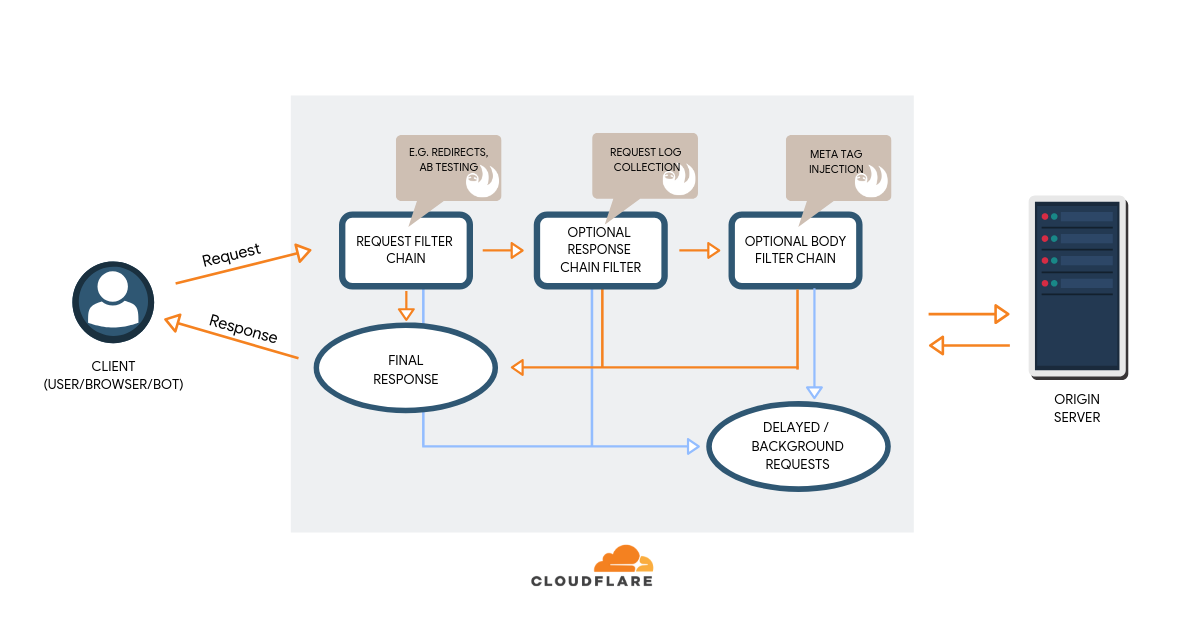

Diving into Technical SEO using Cloudflare Workers

This is a guest post by Igor Krestov and Dan Taylor. Igor is a lead software developer at SALT.agency, and Dan is a lead technical SEO consultant who has also been credited with coining the term “edge SEO”. SALT.agency is a technical SEO agency with offices in London, Leeds, and Boston, offering bespoke consultancy to brands around the world. You can reach them both via Twitter.

In this post we illustrate the potential applications of Cloudflare Workers for search engine optimization (more commonly referred to as ‘SEO’), drawing on our research and testing over the past year while making Sloth.

This post is aimed both at readers who are proficient in writing performant JavaScript and at complete newcomers and less technical stakeholders who haven’t really written many lines of code before.

Endless practical applications to overcome obstacles

Working with various clients and projects over the years, we’ve continuously encountered the same problems and obstacles in getting their websites to a point of “technical SEO excellence”. A lot of these problems come from platform restrictions at an enterprise level, legacy tech stacks, incorrect builds, and years of patching together various services and infrastructures.

As a team of Continue reading

Silo 2: On-Premise with DevOps

I had a great time stirring up the hornet’s nest with the last post about DevOps, so I figured that I’d write another one with some updated ideas and clarifications. And maybe kick the nest a little harder this time.

Grounding the Rules

First, we need to start out with a couple of clarifications. I stated that the mantra of DevOps was “Move Fast, Break Things.” As has been rightly pointed out, this was a quote from Mark Zuckerberg about Facebook. However, as has been pointed out by quite a few people, “The use of basic principles to enable business requirements to get to production deployments with appropriate coordination among all business players, including line of business, developers, classic operations, security, networking, storage and other functional groups involved in service delivery” is a bit more of a definition than a motto.

What exactly is DevOps then? Well, as I have been educated, it’s a principle. It’s an idea. A premise, if you will. An ideal to strive for. So, to say that someone is on a DevOps team is wrong. There is no such thing as a classic DevOps team. DevOps is instead something that many other teams do in Continue reading

IPv6 Buzz 021: NAT Isn’t Necessary For Security: Answering Listener Questions

Today's episode of IPv6 Buzz answers listener questions including why NAT isn't necessary for security, and the feasibility of scanning a v6 network to discover devices. Thanks for the questions and keep them coming!

The post IPv6 Buzz 021: NAT Isn’t Necessary For Security: Answering Listener Questions appeared first on Packet Pushers.

Interview with Juniper Networks Ambassador Rob Jeffery

Had a chance to sit down with fellow Ambassador Rob Jeffery at the Juniper NXTWORK 2018 conference in Las Vegas. Rob is the Technical Director and CTO at Next Gen Security based out of the UK, with a heavy focus on bringing emerging network and security products into the marketplace. We discussed his involvement in … Continue reading

Building Network Automation Source-of-Truth (Part 2)

In the first blog post of this series I described how you could start building the prerequisite for any network automation solution: the device inventory.

Having done that, you should know what is in your network, but you still don’t know how your network is supposed to work and what services it is supposed to provide. Welcome to the morass known as building your source-of-truth.

Read more ...

Huawei Lawsuit Against the U.S. Government Challenges Equipment Ban

The lawsuit, which was filed in a federal court in Texas, seeks a permanent injunction against the...

Don’t Throw the Multicloud Out with the Bathwater

https://twitter.com/CTOAdvisor/status/1103034794781429761 https://www.youtube.com/watch?v=wNOA-sPqf80& https://twitter.com/randybias/status/1103713819195408384 https://twitter.com/C_Z_Raisch/status/1103723011037900800

Keith was totally right to push back on the idea that multicloud was about cost optimization. Not only is this a fantasy, it’s also off-brand for most enterprises. In my experience, the enterprise is totally fine paying a premium on what they consider best-of-breed technology solutions. To me, multicloud isn’t about trying to boil the ocean and build some master plan for world dominance that includes dynamic blah blah blah.

Deutsche Welle Profiles Community Networks Around the World

How can the Internet change lives in rural and remote regions? Deutsche Welle, Germany’s public broadcaster, asks this question in three stories that explore community networks in Zimbabwe, the Republic of Georgia, and South Africa.

Read about the community networks and listen to their stories!

Murambinda Works started as an Internet café in 2002 in the Buhera District in eastern Zimbabwe. Since then it’s grown to provide training in computer literacy for teachers at nearly 218 primary and secondary schools. Murambinda Works, in partnership with the Internet Society and others, is also working to connect eight schools, one nurse training school, and offices of the Ministry of Education.

Tusheti, a mountainous, isolated region in the Republic of Georgia, had been left unconnected by commercial operators. The Internet Society partnered with its Georgian Chapter and other local organizations to help build access to the Internet, which was completed in 2017. (The Tusheti community network was also profiled in The New York Times.)

The Zenzeleni Network is in Mankosi, one of South Africa’s most economically disadvantaged communities. Zenzeleni – which means “do it yourself” in the local language, isiXhosa – was launched in 2012 to provide affordable voice service Continue reading

Network Security Firm Untangle Launches SD-WAN Router and Micro-Firewall

The two products are part of its Network Security Framework, which provides network security...
