Upcoming Training: How the Internet Really Works

On the 29th of May (in 7 days), I’m teaching a four-hour webinar/class on Safari Books Online:

This class isn’t just for network engineers; it’s for anyone interested in how the Internet works. You don’t need prior network engineering experience or knowledge to understand the content, so feel free to forward this along to anyone you think might be…

Hedge 227: Provider Consolidation and Competition

Europe and the United States have completely different Internet service provider landscapes. Which provides better service for customers, and which direction should these markets go? Luke Kehoe joins Tom Ammon, Eyvonne Sharp, and Russ White to discuss the European market specifically, and why it needs consolidation.

HN735: Managing OT Networks

The variety and number of OT devices continue to grow at such a pace that network engineers really need to think through how to manage them as part of their broader network. Dan Massameno joins the show to talk about how he’s collaborating with his facilities department and using SD-Access to manage the OT virtual...

BGP EVPN Fabric – Remote Leaf MAC Learning Process

Remote VTEP Leaf-102: Low-Level Control Plane Analysis

In this section, we will first examine how the BGP tables on the VTEP switch Leaf-102 are updated when it receives a BGP Update message from Spine-11. After that, we will go through the update processes for the MAC-VRF and the MAC Address Table. Finally, we will examine how the VXLAN manager on Leaf-102 learns the IP address of Leaf-101's NVE interface and creates a unidirectional NVE peer record in the NVE Peer Database based on this information.

Remote Learning: BGP Processes

We have configured switches Leaf-101 and Leaf-102 as Route Reflector Clients on the Spine-11 switch. Spine-11 has stored the content of the BGP Update message sent by Leaf-101 in the neighbor-specific Adj-RIB-In for Leaf-101. Spine-11 does not import this information into its local BGP Loc-RIB because we have not defined a BGP import policy. Since Leaf-102 is an RR Client, the BGP process on Spine-11 copies this information into the neighbor-specific Adj-RIB-Out for Leaf-102 and sends it to Leaf-102 in a BGP Update message. The BGP process on Leaf-102 imports the received information from its Adj-RIB-In into the BGP Loc-RIB according to the import policy of EVPN Instance 10010 (import RT 65000:10010). During the import process, the Route Distinguisher is also rewritten to match the configuration of Leaf-102: the RD changes from 192.168.10.101:32777 (received RD) to 192.168.10.102:32777 (local RD).
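
The control-plane flow described above can be modeled as a toy Python sketch. It is not NX-OS internals: the `EvpnRoute` type, the function names, the MAC addresses, the NVE address 192.168.100.101, and the second route carrying RT 65000:10020 are hypothetical. Only the RDs and the import RT 65000:10010 come from the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class EvpnRoute:
    """Simplified EVPN MAC/IP route: only the fields this walk-through needs."""
    rd: str                    # Route Distinguisher
    mac: str                   # advertised MAC address
    next_hop: str              # NVE (VTEP) address of the originating leaf
    route_targets: frozenset   # RT extended communities attached to the route


def reflect(adj_rib_in, rr_clients, source):
    """Route reflector as described in the text: routes received from one client
    are copied into the Adj-RIB-Out of every other client, with no Loc-RIB
    import on the reflector itself."""
    return {peer: list(adj_rib_in[source]) for peer in rr_clients if peer != source}


def import_to_loc_rib(adj_rib_in, import_rt, local_rd):
    """Import only routes carrying the EVI import RT, rewriting the RD to the local RD."""
    return [replace(r, rd=local_rd) for r in adj_rib_in if import_rt in r.route_targets]


def nve_peers(loc_rib):
    """Remote VTEP addresses learned from route next hops (unidirectional:
    the receiving leaf learns about the sender, not the other way round)."""
    return {r.next_hop for r in loc_rib}


# Hypothetical MACs and NVE address; RDs and the 65000:10010 RT are from the text.
route = EvpnRoute(
    rd="192.168.10.101:32777",
    mac="00:00:00:00:01:01",
    next_hop="192.168.100.101",
    route_targets=frozenset({"65000:10010"}),
)
# Hypothetical route for another EVI; Leaf-102 should not import it.
other = EvpnRoute("192.168.10.101:32778", "00:00:00:00:02:02",
                  "192.168.100.101", frozenset({"65000:10020"}))

spine_adj_rib_in = {"Leaf-101": [route, other]}
adj_rib_out = reflect(spine_adj_rib_in, ["Leaf-101", "Leaf-102"], source="Leaf-101")
leaf102_loc_rib = import_to_loc_rib(
    adj_rib_out["Leaf-102"], "65000:10010", "192.168.10.102:32777"
)
```

The frozen dataclass plus `dataclasses.replace` keeps the received route untouched in the Adj-RIB structures while the Loc-RIB copy carries the local RD, mirroring the separation between the tables.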

Worth Reading: Using AWS Services via IPv6

AWS started charging for public IPv4 addresses a few months ago, supposedly to encourage users to move to IPv6. As it turns out, you need public IPv4 addresses (or a private link) to access many AWS services, clearly demonstrating that it’s just another way of fleecing the sheep (sorry, another Hotel California tax). I’m so glad I moved my videos to Cloudflare ;)

For more details, read AWS: Egress Traffic and Using AWS Services via IPv6 (rendered in beautiful, easy-to-read teletype font).

KU056: Kubernetes Turns 10: A Look at the Past and Future

Kubernetes turns ten years old this summer. We take the opportunity to look at where it’s been and where it’s going. While many other open source projects folded over time, Kubernetes took the world by storm with the support of diverse entities including CNCF, Microsoft, AWS, Google, Red Hat, and individual contributors. Moving forward, we predict...

No Rest for SuzieQ

To be clear, it is true that SuzieQ never rests, but it is also true that SuzieQ always RESTS; that is, the SuzieQ REST API is available to answer your network questions and help automate your network workflows. In my previous post about SuzieQ, we focused on getting started and asking questions about our network...
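
As a hedged illustration of asking the REST API a question from Python: this sketch assumes the SuzieQ REST server's `/api/v2/<table>/<verb>` URL layout, an `access_token` query parameter, and the default port 8000, so check these against your SuzieQ version. The helper names, the token, and the `namespace` value are hypothetical.

```python
import json
import ssl
import urllib.parse
import urllib.request


def suzieq_url(host, table, verb, access_token, port=8000, **filters):
    """Build a query URL, assuming the layout /api/v2/<table>/<verb>."""
    query = urllib.parse.urlencode({"access_token": access_token, **filters})
    return f"https://{host}:{port}/api/v2/{table}/{verb}?{query}"


def suzieq_get(url, verify_tls=True):
    """Fetch a URL and decode the JSON response body."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Lab servers often run with a self-signed certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(url, context=ctx) as resp:
        return json.load(resp)


# Hypothetical token and namespace; nothing is sent until suzieq_get() is called.
url = suzieq_url("localhost", "device", "show", "MY_TOKEN", namespace="lab")
```

Against a running server, `suzieq_get(url, verify_tls=False)` should return the `device show` result as decoded JSON, ready to feed into an automation workflow.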

The post No Rest for SuzieQ appeared first on The Gratuitous Arp.

EVPN Designs: IBGP Full Mesh Between Leaf Switches

In the previous blog post in the EVPN Designs series, we explored the simplest possible VXLAN-based fabric design: static ingress replication without any L2VPN control plane. This time, we’ll add the simplest possible EVPN control plane: a full mesh of IBGP sessions between the leaf switches.
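
A full mesh of IBGP sessions grows quadratically with the number of leaf switches. A short Python sketch makes the arithmetic concrete; the leaf names are hypothetical.

```python
from itertools import combinations


def ibgp_full_mesh(leaves):
    """Every unordered pair of leaves gets exactly one IBGP session."""
    return list(combinations(leaves, 2))


def neighbors_of(leaf, sessions):
    """IBGP neighbors that a given leaf has to configure."""
    return sorted(b if a == leaf else a for a, b in sessions if leaf in (a, b))


leaves = ["L1", "L2", "L3", "L4"]   # hypothetical leaf names
sessions = ibgp_full_mesh(leaves)
# n leaves need n * (n - 1) / 2 sessions, and each leaf has n - 1 neighbors.
assert len(sessions) == len(leaves) * (len(leaves) - 1) // 2
```

With 4 leaves that is 6 sessions; with 10 leaves it is already 45, and every new leaf means touching the configuration of all existing ones.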

D2C243: Your Kubernetes Clusters are Showing

There are about 1.4 million Kubernetes clusters just sitting out there on the public internet as we speak. That is 1.4 million lateral-movement-rich, highly privileged environments. The bearer of this anxiety-provoking news is today’s guest, Lee Briggs. Lee explains why major cloud providers make this the default option: ease of use. The good news...

Expanding Regional Services configuration flexibility for customers

When we launched Regional Services in June 2020, the concepts of data locality and data sovereignty were very much rooted in European regulations. Fast-forward to today, and the pressure to localize data persists: Several countries have laws requiring data localization in some form, public-sector contracting requirements in many countries require their vendors to restrict the location of data processing, and some customers are reacting to geopolitical developments by seeking to exclude data processing from certain jurisdictions.

That’s why today we're happy to announce expanded capabilities that will allow you to configure Regional Services for an increased set of defined regions to help you meet your specific requirements for being able to control where your traffic is handled. These new regions are available for early access starting in late May 2024, and we plan to have them generally available in June 2024.

It has always been our goal to provide you with the toolbox of solutions you need to not only address your security and performance concerns, but also to help you meet your legal obligations. And when it comes to data localization, we know that some of you need…

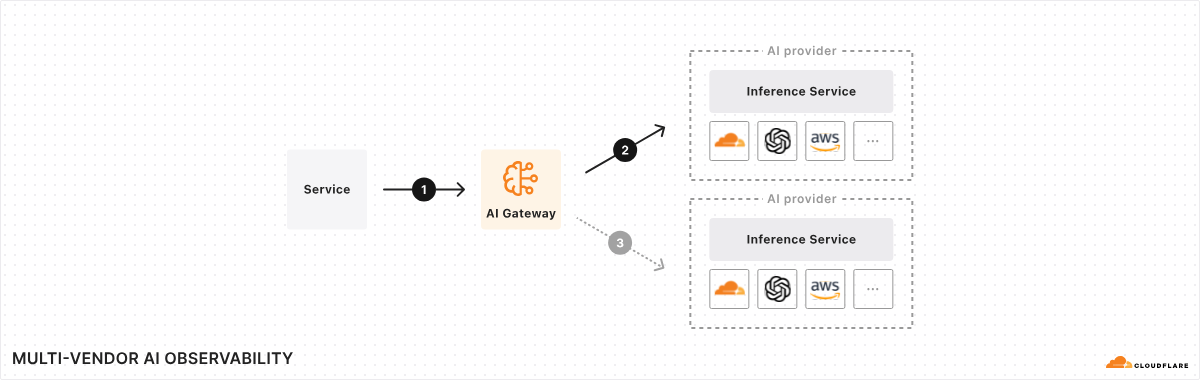

AI Gateway is generally available: a unified interface for managing and scaling your generative AI workloads

During Developer Week in April 2024, we announced General Availability of Workers AI, and today, we are excited to announce that AI Gateway is Generally Available as well. Since its launch to beta in September 2023 during Birthday Week, we’ve proxied over 500 million requests and are now prepared for you to use it in production.

AI Gateway is an AI ops platform that offers a unified interface for managing and scaling your generative AI workloads. At its core, it acts as a proxy between your service and your inference provider(s), regardless of where your model runs. With a single line of code, you can unlock a set of powerful features focused on performance, security, reliability, and observability – think of it as your control plane for your AI ops. And this is just the beginning – we have a roadmap full of exciting features planned for the near future, making AI Gateway the tool for any organization looking to get more out of their AI workloads.
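
In a proxy design like this, the "single line of code" is usually the base URL the client sends requests to. A hedged Python sketch, assuming the gateway URL layout `https://gateway.ai.cloudflare.com/v1/<account_id>/<gateway_id>/<provider>` and an OpenAI-style `/chat/completions` request; the account ID, gateway ID, API key, and model name are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed gateway endpoint layout; verify against the AI Gateway documentation.
GATEWAY_ROOT = "https://gateway.ai.cloudflare.com/v1"


def gateway_base_url(account_id, gateway_id, provider):
    """Base URL that replaces the provider's own API base URL."""
    return f"{GATEWAY_ROOT}/{account_id}/{gateway_id}/{provider}"


def chat_via_gateway(account_id, gateway_id, api_key, model, prompt):
    """Send an OpenAI-style chat completion request through the gateway."""
    url = gateway_base_url(account_id, gateway_id, "openai") + "/chat/completions"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The provider's API key (assumed to be forwarded by the gateway).
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

The request body and headers stay exactly what the provider expects; only the URL changes, which is what lets the proxy sit in the path without other code changes.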

Why add a proxy and why Cloudflare?

The AI space moves fast, and it seems like every day there is a new model, provider, or framework. Given this high rate of…

EVPN Rerouting After LAG Member Failures

In the previous two blog posts (Dealing with LAG Member Failures, LAG Member Failures in VXLAN Fabrics) we discovered that it’s almost trivial to deal with a LAG member failure in an MLAG cluster if we have a peer link between MLAG members. What about the holy grail of EVPN pundits: ESI-based MLAG with no peer link between MLAG members?

Cisco vPC in VXLAN/EVPN Network – Part 6 – vPC Enhancements

There are a lot of options when it comes to vPC. What enhancements should you consider? I’ll go through some of the options worth considering.

Peer Switch – The Peer Switch feature changes how vPC behaves with regard to STP. Without it, you would configure different STP priorities on the primary and secondary switches. The secondary switch forwards BPDUs coming from vPC-connected switches towards the primary switch; it doesn’t process these received BPDUs. Only the primary switch sends BPDUs to the vPC-connected switches. Note that the secondary switch can process and send BPDUs to switches that are only connected to the secondary switch. Without Peer Switch, it looks like this:

- The BPDU sent by SW04 is not processed by SW02. It is forwarded towards SW01.

- SW04’s BPDU is only sent initially. The port then becomes SW04’s root port and stops sending BPDUs.

- SW02 sends BPDUs towards SW05 because SW05 is not connected via vPC. The BPDU carries information about the cost to the root (SW01).

- SW02 doesn’t send BPDUs towards SW03 because SW03 is connected via vPC.

- SW01 and SW02 have different STP priorities and send distinct BPDUs. From an STP perspective, they are not a single switch.

- If SW01 goes down, STP…
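
The BPDU rules in the list above can be restated as a toy Python model. It only encodes the bullets (no Peer Switch configured) and is not how NX-OS implements STP; the function names and string labels are hypothetical, and STP port roles are simplified to designated versus root.

```python
def secondary_handles_rx_bpdu(port_is_vpc: bool) -> str:
    """What the vPC secondary does with a received BPDU (no Peer Switch)."""
    # BPDUs from vPC-connected switches are relayed to the primary unprocessed;
    # BPDUs from switches attached only to the secondary are processed locally.
    return "forward-to-primary" if port_is_vpc else "process"


def sends_bpdus(vpc_role: str, port_is_vpc: bool, stp_port_role: str = "designated") -> bool:
    """Whether a vPC member sends BPDUs out of a port (no Peer Switch)."""
    if port_is_vpc and vpc_role != "primary":
        return False                        # only the primary speaks STP on vPC ports
    return stp_port_role == "designated"    # root ports stop sending BPDUs


# Topology from the bullets: SW01 primary (root), SW02 secondary,
# SW03/SW04 attached via vPC, SW05 attached only to SW02.
assert secondary_handles_rx_bpdu(port_is_vpc=True) == "forward-to-primary"  # SW04's BPDU
assert sends_bpdus("secondary", port_is_vpc=False)                          # SW02 -> SW05
assert not sends_bpdus("secondary", port_is_vpc=True)                       # SW02 -> SW03
```

Because the two switches send distinct BPDUs, a downstream switch sees two different bridges, which is the behavior Peer Switch changes.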

PP015: Zero Trust Architecture: Because You Can’t Trust Anybody Any More

Zero trust is a buzzword, but what does it actually mean and how will it impact network engineers? Jennifer is here to get us up to speed. First, she gives a general description: It’s a security architectural strategy that’s progressing toward increased observability and trust inferences. Then she breaks it down for the three main...

What’s new in Calico – Spring 2024

Calico, the leading solution for container networking and security, unveils a host of new features this spring. With new security capabilities that simplify operations, enhanced visualization for faster troubleshooting, and major enhancements to its popular workload-centric distributed WAF, Calico is set to redefine how you manage and secure your containerized workloads.

This blog post describes the new capabilities in Calico.

Simplified security operations for Runtime Threat Detection

Runtime threat detection generates a large number of security events. However, managing and analyzing these events can be challenging, and users need a way to summarize and navigate through them to gain deeper insights and take appropriate actions. Let’s see how Calico simplifies runtime security operations.

New Security Events Dashboard

We are excited to announce the introduction of the Security Event Dashboard in Calico. This dashboard provides a summary of the security events generated by the runtime threat detection engine. With the Security Event Dashboard, users can easily analyze and pivot around the data, enabling them to:

- Efficiently find and analyze specific segments of security events.

- Collaborate with stakeholders involved in the analysis, response, and remediation of security events.
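
To make "summarize and pivot around the data" concrete, here is a generic Python sketch of grouping and drilling into security events. The event fields (`type`, `namespace`, `severity`), their values, and the helper names are hypothetical and do not reflect Calico’s actual event schema or API.

```python
from collections import Counter


def pivot(events, *fields):
    """Count events grouped by the given fields."""
    return Counter(tuple(e.get(f) for f in fields) for e in events)


def drill_down(events, **criteria):
    """Keep only events matching every field=value criterion."""
    return [e for e in events if all(e.get(k) == v for k, v in criteria.items())]


events = [  # hypothetical events
    {"type": "malware", "namespace": "web", "severity": "high"},
    {"type": "malware", "namespace": "web", "severity": "high"},
    {"type": "port-scan", "namespace": "db", "severity": "medium"},
]
by_type_ns = pivot(events, "type", "namespace")
high = drill_down(events, severity="high")
```

Pivoting first shows where events cluster; drilling down then narrows the list to the segment worth handing to the people responsible for response and remediation.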

The Security Event Dashboard offers a visually appealing and user-friendly interface, presenting key summarizations of…

Butchering AI

I once heard a quote that said, “The hardest part of being a butcher is knowing where to cut.” If you’ve ever eaten a cut of meat, you know that a tender steak and a piece of meat that needs hours of tenderizing can be just inches apart. Butchers train for years to be able to make the right cuts in the right pieces of meat with speed and precision. There’s even an excellent Medium article about the dying art of butchering.

One thing that struck me in that article is how the art of butchering relates to AI. Yes, I know it’s a bit corny and not an easy segue into a technical topic, but that transition is about as subtle as the way AI has come crashing through the door to take over every facet of our lives. It used to be that AI was some sci-fi term we used to describe intelligence emerging in computer systems. Now, AI is optimizing my PC searches and helping with image editing and creation. It’s easy, right?

Except some of those things that AI promises to excel at are things that professionals have spent years honing their…