Syslog relay with Scapy

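I needed to point some syslog data at a new toy being evaluated by security folks.

    #!/usr/bin/env python2.7

    from scapy.all import *

    def pkt_callback(pkt):
        # Clear the MAC addresses so Scapy fills them in for the new path
        del pkt[Ether].src
        del pkt[Ether].dst
        # Clear the checksums so Scapy recalculates them on send
        del pkt[IP].chksum
        del pkt[UDP].chksum
        # Redirect the packet to the new collector and put it back on the wire
        pkt[IP].dst = '192.168.100.100'
        sendp(pkt)

    # Hand every frame matching the libpcap filter to pkt_callback
    sniff(iface='eth0', filter='udp port 514', prn=pkt_callback, store=0)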

This script has scapy collecting frames matching udp port 514 (libpcap filter) from interface eth0. Each matching packet is handed off to the pkt_callback function. It clears fields which need to be recalculated, changes the destination IP (to the address of the new Security Thing) and puts the packets back onto the wire.
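To see why the checksum has to be cleared before the packet goes back out, here is a small standard-library sketch (not part of the original script) that computes the IPv4 header checksum by hand. The addresses `10.0.0.5` and `10.0.0.9` and the helper names are made up for illustration; only `192.168.100.100` comes from the script above.

```python
import socket
import struct

HEADER_FORMAT = "!BBHHHBBH4s4s"  # 20-byte IPv4 header without options


def ipv4_checksum(header):
    """One's-complement sum of the header's 16-bit words (RFC 1071 style)."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack("!%dH" % (len(header) // 2), header))
    while total >> 16:
        # Fold any carry bits back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_header(src, dst):
    """Build a minimal IPv4 header (protocol UDP) with a valid checksum."""
    fields = [0x45, 0, 20, 0, 0, 64, socket.IPPROTO_UDP, 0,
              socket.inet_aton(src), socket.inet_aton(dst)]
    # Compute the checksum with the checksum field set to zero, then fill it in
    fields[7] = ipv4_checksum(struct.pack(HEADER_FORMAT, *fields))
    return struct.pack(HEADER_FORMAT, *fields)


# Hypothetical original packet from a log source to the old collector
original = build_header("10.0.0.5", "10.0.0.9")

# Rewriting only the destination leaves the old checksum in place
stale = original[:16] + socket.inet_aton("192.168.100.100")

# A header with a correct checksum sums to zero; the stale one does not
print(ipv4_checksum(original) == 0)
print(ipv4_checksum(stale) == 0)
```

Deleting `chksum` in Scapy avoids exactly this stale value: the field is rebuilt when the packet is serialized for sending.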

The source IP on these forged packets is unchanged, so the Security Thing thinks it's getting the original logs from real servers/routers/switches/PDUs/weather stations/printers/etc... around the Continue reading

Configuring vPC on Cisco Nexus Devices

A Short Story on vPC: Virtual Port Channel in the Cisco Datacenter Environment

There are a lot of questions about how and why we use vPC in the datacenter environment, and some readers have asked me about the difference between vPC and VSS. Please have a look at the link below for a comparison of vPC and VSS.

Features comparison : Cisco vPC and Cisco VSS

Apart from the above-mentioned article, I have also written about the technologies other vendors use in the same role as Cisco's vPC and VSS. The link below covers that comparison.

Feature Comparison: Juniper VCF vs HP IRF vs Cisco VSS vs Cisco vPC

With the articles above, you should now have the basics of vPC and VSS. In this article I will cover vPC configuration in detail, with a diagram. The topology used in the article is a sample topology and has no relevance with any of Continue reading

Things that cannot go wrong

Found this Douglas Adams quote in The Signal and the Noise (a must-read book):

The major difference between a thing that might go wrong and a thing that cannot possibly go wrong is that when a thing that cannot possibly go wrong goes wrong it usually turns out to be impossible to get at or repair

I’ll leave to your imagination how this relates to stretched VLANs, ACI, NSX, VSAN, SD-WAN and a few other technologies.

Docker’s Kubernetes Support Leaves Questions Around Swarm

The company says it plans to evolve Swarm but hasn't provided any details.


SDxCentral’s Weekly News Roundup — November 10, 2017

Telstra and Ericsson complete 5G data call; SoftBank increases stake in Sprint; Edgecore Networks contributes hardware for white box packet transponder to TIP.


Lumina’s SDN Controller Manages Third Party Switches, Containers

This is targeted to the data center use case.


Verizon Makes SD-WAN Platform Available to its Wholesale Customers

The company's SD-WAN service is powered by Cisco Meraki.


Show 365: You Can’t Do That In Enterprise Networks

On today's show, Peyton Maynard-Koran joins the Packet Pushers to talk about why some enterprise networks avoid change, and why vendors want to keep it that way. The post Show 365: You Can’t Do That In Enterprise Networks appeared first on Packet Pushers.

Cloudflare Wants to Buy Your Meetup Group Pizza

If you’re a web dev / devops / etc. meetup group that also works toward building a faster, safer Internet, I want to support your awesome group by buying you pizza. If your group’s focus falls within one of the subject categories below and you’re willing to give us a 30 second shout out and tweet a photo of your group and @Cloudflare, your meetup’s pizza expense will be reimbursed.

Developer Relations at Cloudflare & why we’re doing this

I’m Andrew Fitch and I work on the Developer Relations team at Cloudflare. One of the things I like most about working in DevRel is empowering community members who are already doing great things out in the world. Whether they’re starting conferences, hosting local meetups, or writing educational content, I think it’s important to support them in their efforts and reward them for doing what they do. Community organizers are the glue that holds developers together socially. Let’s support them and make their lives easier by taking care of the pizza part of the equation.

What’s in it for Cloudflare?

- We want web developers to target the apps platform

- We want more people to Continue reading

An Opinion On Offense Against NAT

It’s been a long time since I’ve gotten to rant against Network Address Translation (NAT). At first, I had hoped that was because IPv6 transitions were happening and people were adopting it rapidly enough that NAT would eventually slide into the past of SAN and DOS. Alas, it appears that IPv6 adoption is getting better but still not great.

Geoff Huston, on the other hand, seems to think that NAT is a good thing. In a recent article, he took up the shield to defend NAT against those that believe it is an abomination. He rightfully pointed out that NAT has extended the life of the modern Internet and also correctly pointed out that the slow pace of IPv6 deployment was due in part to the lack of urgency of address depletion. Even with companies like Microsoft buying large sections of IP address space to fuel Azure, we’re still not quite at the point of the game when IP addresses are hard to come by.

So, with Mr. Huston taking up the shield, let me find my +5 Sword of NAT Slaying and try to point out a couple of issues in his defense.

Relationship Status: NAT’s…Complicated

The first Continue reading

Introduction to Network Time Protocol (NTP) and Basic Configurations

What is Network Time Protocol (NTP)?

NTP is the Network Time Protocol, which is generally used to synchronise devices to a specific time reference. NTP uses UDP to communicate with the devices in the network, and all NTP communication uses Coordinated Universal Time (UTC).

How do devices synchronise with the time source?

An NTP server usually receives its time from a trustworthy time source, such as a radio clock attached to a time server, and then distributes this time across the network. NTP is extremely efficient: no more than one packet per minute is necessary to synchronize two machines to within a millisecond of each other.
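As a rough illustration of how small that exchange is, the sketch below builds a 48-byte NTP client request and decodes two fields from a reply using only the standard library. The function names are made up for this example, and no packet is actually sent; the stratum field it reads is the server's distance from the authoritative time source.

```python
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01)
NTP_UNIX_DELTA = 2208988800


def build_client_request():
    """Return a 48-byte request: LI=0, version=3, mode=3 (client)."""
    first_byte = (0 << 6) | (3 << 3) | 3  # 0x1B
    return struct.pack("!B47x", first_byte)


def parse_reply(reply):
    """Return (stratum, transmit time as Unix seconds) from a 48-byte reply."""
    stratum = reply[1]
    # The transmit timestamp is the last 8 bytes: 32-bit seconds, 32-bit fraction
    seconds, fraction = struct.unpack("!II", reply[40:48])
    return stratum, seconds - NTP_UNIX_DELTA + fraction / 2**32


request = build_client_request()
print(len(request), hex(request[0]))
```

In practice the request would be sent with a UDP socket to port 123 of a time server, and the 48-byte answer passed to `parse_reply`. A real client also uses the other timestamps in the reply to correct for network delay, which this sketch skips.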

NTP uses a layer, called a stratum, to describe the distance between a network device and an authoritative time source

- A layer 1 time server Continue reading

On the dangers of Intel’s frequency scaling

While I was writing the post comparing the new Qualcomm server chip, Centriq, to our current stock of Intel Skylake-based Xeons, I noticed a disturbing phenomenon.

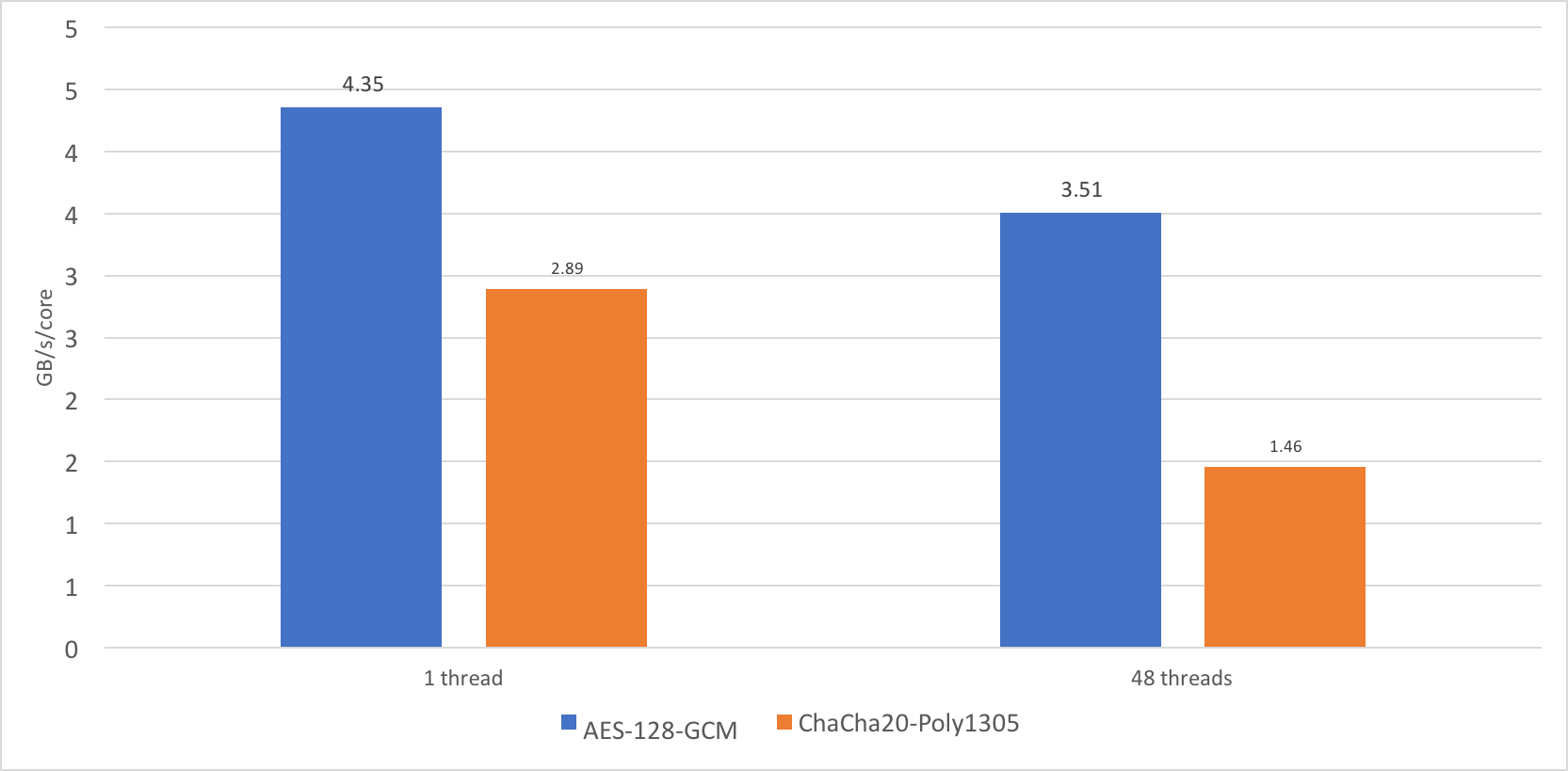

When benchmarking OpenSSL 1.1.1dev, I discovered that the performance of the cipher ChaCha20-Poly1305 does not scale very well. On a single thread, it performed at approximately 2.89 GB/s, whereas on 24 cores and 48 threads it performed at just over 35 GB/s.

CC BY-SA 2.0 image by blumblaum


Now this is a very high number, but I would like to see something closer to 69 GB/s. 35 GB/s is just 1.46 GB/s per core, or roughly 50% of the single-core performance. AES-GCM scales much better, to 80% of single-core performance, which is understandable, because the CPU can sustain a higher turbo frequency on a single core than on all cores.
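The arithmetic behind those figures can be reproduced in a few lines. This is only a worked check of the numbers quoted above; the function name is made up for the example.

```python
def scaling_summary(single_thread_gbps, all_cores_gbps, cores):
    """Return (ideal throughput, per-core throughput, scaling efficiency)."""
    ideal = single_thread_gbps * cores      # perfect linear scaling
    per_core = all_cores_gbps / cores       # what each core actually delivers
    efficiency = per_core / single_thread_gbps
    return ideal, per_core, efficiency


# Figures from the ChaCha20-Poly1305 benchmark described above
ideal, per_core, efficiency = scaling_summary(2.89, 35.0, 24)
print("ideal: %.1f GB/s" % ideal)
print("per core: %.2f GB/s" % per_core)
print("efficiency: %.0f%%" % (efficiency * 100))
```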

Why is the scaling of ChaCha20-Poly1305 so poor? Meet AVX-512. AVX-512 is a new Intel instruction set that adds many new 512-bit wide SIMD instructions and promotes most of the existing ones to 512-bit. The problem with such wide instructions is that they consume power. A lot of power. Imagine a single instruction that does the work of 64 regular Continue reading

Separate Data from Code [Video]

After explaining the challenges of data center fabric deployments, Dinesh Dutt focused on a very important topic I cover in Week#3 of the Building Network Automation Solutions online course: how do you separate data (the data model describing the data center fabric) from code (Ansible playbooks and device configurations)?
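A minimal sketch of the idea, assuming a made-up two-leaf fabric: the dictionary is the data, and the template plus the render function are the code. It uses Python's `string.Template` in place of the Ansible playbooks and templates the course works with, and every name, ASN, and address here is hypothetical.

```python
from string import Template

# Data: a hypothetical data model describing the fabric
fabric = {
    "leaf1": {"asn": 65001, "loopback": "10.0.0.1"},
    "leaf2": {"asn": 65002, "loopback": "10.0.0.2"},
}

# Code: a generic template that knows nothing about individual devices
DEVICE_TEMPLATE = Template(
    "hostname $name\n"
    "interface loopback0\n"
    " ip address $loopback/32\n"
    "router bgp $asn\n"
    " router-id $loopback\n"
)


def render(data_model):
    """Render one configuration per device from the data model."""
    return {
        name: DEVICE_TEMPLATE.substitute(name=name, **attrs)
        for name, attrs in data_model.items()
    }


configs = render(fabric)
print(configs["leaf1"])
```

Adding a third leaf then means adding one entry to the data model, with no change to the template or the rendering code.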

Rough Guide to IETF 100: Identity, Privacy, and Encryption

Identity, privacy, and encryption continue to be active topics for the Internet Society and the IETF community impacting a broad range of applications. In this Rough Guide to IETF 100 post, I highlight a few of the many relevant activities happening next week in Singapore, but there is much more going on so be sure to check out the full agenda online.

Encryption

Encryption continues to be a priority of the IETF as well as the security community at large. Related to encryption, there is the TLS working group developing the core specifications, several working groups addressing how to apply the work of the TLS working group to various applications, and the Crypto-Forum Research Group focusing on the details of the underlying cryptographic algorithms.

The Transport Layer Security (TLS) working group is a key IETF effort developing core security protocols for the Internet. This week’s agenda includes both TLS 1.3 and Datagram Transport Layer Security. Additionally, the TLS working group will be discussing connection ID, exported authenticators, protecting against denial of service attacks, and application layer TLS. The TLS working group is very active and, as with all things that are really important, there are many Continue reading