Azure Networking Fundamentals: VNET Peering

Comment: Here is part of the introduction section of the eighth chapter of my Azure Networking Fundamentals book. I will also publish other chapters' introduction sections soon so you can see if the book is for you. The book is available at Leanpub and Amazon (links on the right pane).

This chapter introduces the Azure VNet Peering solution. VNet peering creates bidirectional IP connections between peered VNets. VNet peering links can be established within and across Azure regions, and between VNets under different Azure subscriptions or tenants. The data path over peer links is unencrypted but stays within Azure's private infrastructure. Consider a software-level solution (or use VGW) if your security policy requires data path encryption. Unlike VGW, where bandwidth depends on the SKU, VNet peering has no bandwidth limitation. From the VM perspective, VNet peering gives seamless network performance (bandwidth, latency, delay, and jitter) for Inter-VNet and Intra-VNet traffic. Unlike the VGW solution, VNet peering is non-transitive: the routing information learned from one VNet peer is not advertised to another VNet peer. However, we can permit peered VNets (Spokes) to use local VGW (Hub) and route Spoke-to-Spoke data by using a subnet-specific route table Continue reading
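The non-transitive behaviour described above can be illustrated with a toy model. This is only a sketch: the VNet names (`hub`, `spoke1`, `spoke2`) and the helper functions are hypothetical, and no Azure API is involved.

```python
# Toy model only: VNet names and peerings are hypothetical, no Azure API is used.
PEERINGS = {
    frozenset({"hub", "spoke1"}),
    frozenset({"hub", "spoke2"}),
}


def directly_peered(a, b, links=PEERINGS):
    """Return True if a peering link exists between VNets a and b."""
    return frozenset({a, b}) in links


def reachable_via_peering(a, b, links=PEERINGS):
    """Peering is non-transitive: only a direct peering link gives connectivity."""
    return a == b or directly_peered(a, b, links)


print(reachable_via_peering("spoke1", "hub"))     # direct peering exists
print(reachable_via_peering("spoke1", "spoke2"))  # no direct peering
```

In this model the two spokes share a hub but cannot reach each other through peering alone, which is why the excerpt mentions routing Spoke-to-Spoke traffic through the hub with a route table.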

Cloudflare’s handling of a bug in interpreting IPv4-mapped IPv6 addresses

In November 2022, our bug bounty program received a critical and very interesting report. The report stated that certain types of DNS records could be used to bypass some of our network policies and connect to ports on the loopback address (e.g. 127.0.0.1) of our servers. This post will explain how we dealt with the report, how we fixed the bug, and the outcome of our internal investigation to see if the vulnerability had been previously exploited.

RFC 4291 defines ways to embed an IPv4 address into IPv6 addresses. One of the methods defined in the RFC is to use IPv4-mapped IPv6 addresses, which have the following format:

    |                80 bits               | 16 |      32 bits        |
    +--------------------------------------+--------------------------+
    |0000..............................0000|FFFF|    IPv4 address     |
    +--------------------------------------+----+---------------------+

In IPv6 notation, the corresponding mapping for 127.0.0.1 is ::ffff:127.0.0.1 (RFC 4038).
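As a minimal sketch of the layout above, the following Python snippet builds an IPv4-mapped IPv6 address by hand (80 zero bits, 16 one bits, then the 32-bit IPv4 address) using the standard `ipaddress` module. The helper name `to_ipv4_mapped` is an assumption made for illustration.

```python
import ipaddress


def to_ipv4_mapped(ipv4_text):
    """Build the IPv4-mapped IPv6 address for a dotted-quad IPv4 string."""
    v4 = ipaddress.IPv4Address(ipv4_text)
    # 10 zero bytes (80 bits) + 0xFFFF (16 bits) + the 4 IPv4 bytes (32 bits)
    packed = b"\x00" * 10 + b"\xff\xff" + v4.packed
    return ipaddress.IPv6Address(packed)


mapped = to_ipv4_mapped("127.0.0.1")
# Same address as the textual form shown in the post.
print(mapped == ipaddress.IPv6Address("::ffff:127.0.0.1"))
# The embedded IPv4 address can be recovered from the mapped form.
print(mapped.ipv4_mapped)
```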

The researcher was able to use DNS entries based on mapped addresses to bypass some of our controls and access ports on the loopback address or non-routable IPs.
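To illustrate why mapped addresses can slip past a filter, here is a hedged sketch of a destination check that unwraps IPv4-mapped addresses before testing for loopback or non-routable ranges. It is not the fix described in the post; the function names `effective_address` and `is_internal` are hypothetical, and only the Python standard library is used.

```python
import ipaddress


def effective_address(text):
    """Parse an address and unwrap it if it is an IPv4-mapped IPv6 address."""
    addr = ipaddress.ip_address(text)
    if isinstance(addr, ipaddress.IPv6Address) and addr.ipv4_mapped is not None:
        return addr.ipv4_mapped
    return addr


def is_internal(text):
    """Return True for loopback, private, or link-local destinations."""
    addr = effective_address(text)
    return addr.is_loopback or addr.is_private or addr.is_link_local


for candidate in ("127.0.0.1", "::ffff:127.0.0.1", "::ffff:8.8.8.8"):
    print(candidate, is_internal(candidate))
```

A check that looked only at the IPv4 form, or only at the raw IPv6 form, could treat `::ffff:127.0.0.1` differently from `127.0.0.1`; normalizing first makes both forms go through the same test.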

This vulnerability was reported on November 27 to our bug bounty program. Our Security Incident Response Team (SIRT) was contacted, and incident response activities Continue reading

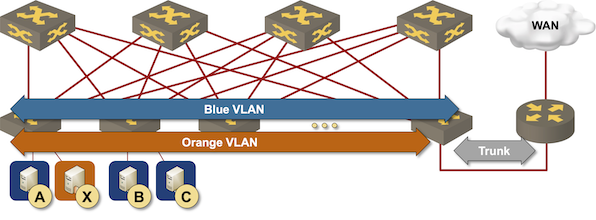

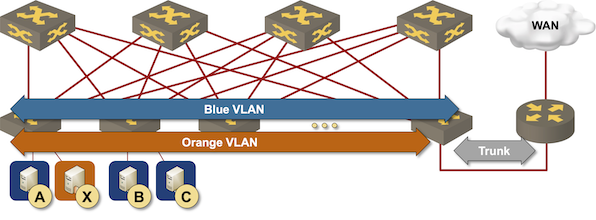

IRB Models: Edge Routing

The simplest way to implement layer-3 forwarding in a network fabric is to offload it to an external device, be it a WAN edge router, a firewall, a load balancer, or any other network appliance.

Routing at the (outer) edge of the fabric

Uptick in healthcare organizations experiencing targeted DDoS attacks

Healthcare in the crosshairs

Over the past few days, Cloudflare, as well as other sources, has observed healthcare organizations targeted by a pro-Russian hacktivist group claiming to be Killnet. There has been an increase in the number of healthcare organizations coming to us for help getting out from under these types of attacks. Multiple healthcare organizations behind Cloudflare have also been targeted by HTTP DDoS attacks, and Cloudflare has helped them successfully mitigate these attacks. The United States Department of Health and Human Services issued an Analyst Note detailing the threat of Killnet-related cyberattacks to the healthcare industry.

A rise in political tensions and the escalation of the conflict in Ukraine are both factors that play into the current cybersecurity threat landscape. Unlike traditional warfare, the Internet has enabled and empowered groups of individuals to carry out targeted attacks regardless of their location or involvement. Distributed Denial-of-Service (DDoS) attacks have the advantage of not requiring an intrusion or a foothold to be launched and have, unfortunately, become more accessible than ever before.

The attacks observed by the Cloudflare global network do not show a clear indication that they are originating from a single botnet and the attack methods and sources Continue reading

AWS Networking

https://codingpackets.com/blog/aws-networking

Cloud Notes: AWS VPC

https://codingpackets.com/blog/cloud-notes-aws-vpc
