Network Break 347: Cisco Acquires Container App Monitor; Intel Unwraps Mount Evans IPU

It's the Network Break! This week we analyze Cisco's $500 million acquisition of a container-based and serverless application monitor, Intel's announcement of Mount Evans, an Infrastructure Processing Unit (IPU) for network and storage offload, and more tech news. Guest analyst Johna Till Johnson, CEO and founder of Nemertes Research, joins Greg Ferro.

The post Network Break 347: Cisco Acquires Container App Monitor; Intel Unwraps Mount Evans IPU appeared first on Packet Pushers.

The Importance of Network Vulnerability Assessments

A vulnerability assessment identifies and quantifies vulnerabilities in a company’s assets across applications, systems, and network infrastructures.

Making Magic Transit health checks faster and more responsive

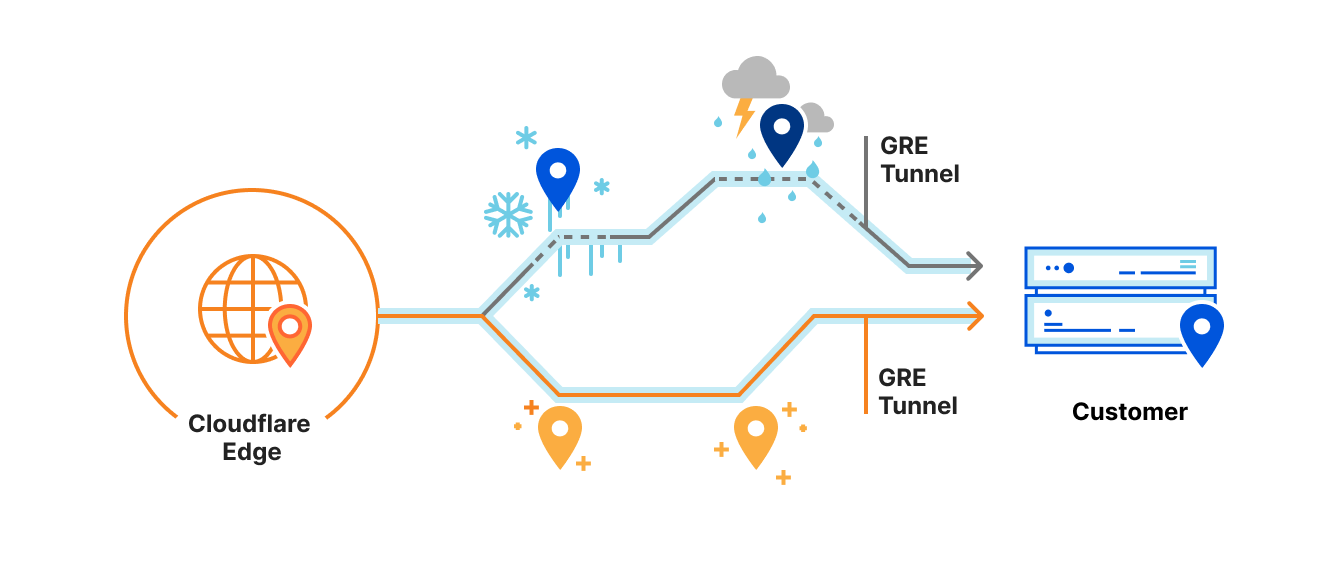

Magic Transit advertises customers’ IP prefixes directly from our edge network, applying DDoS mitigation and firewall policies to all traffic destined for each customer’s network. After the traffic is scrubbed, we deliver clean traffic to the customer over GRE tunnels (over the public Internet or Cloudflare Network Interconnect). But sometimes, we experience inclement weather on the Internet: network paths between Cloudflare and the customer can become unreliable or go down. Customers often configure multiple tunnels through different network paths and rely on Cloudflare to pick the best tunnel to use if, for example, some router on the Internet is having a stormy day and starts dropping traffic.
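For readers new to GRE, the encapsulation step can be pictured with a minimal sketch of the basic 4-byte header defined in RFC 2784: a flags/version word (all zero when no checksum is present) followed by the EtherType of the inner packet. This is an illustration only, not Cloudflare's code; the function names are hypothetical, the optional checksum/key/sequence fields are not handled, and the outer IP header (IP protocol 47) that carries the GRE packet across the Internet is omitted.

```python
import struct
from typing import Tuple

GRE_PROTO_IPV4 = 0x0800  # EtherType of an encapsulated IPv4 packet
GRE_PROTO_IPV6 = 0x86DD  # EtherType of an encapsulated IPv6 packet


def gre_encapsulate(inner_packet: bytes, proto: int = GRE_PROTO_IPV4) -> bytes:
    """Prepend a basic RFC 2784 GRE header: C bit clear, reserved bits zero, version 0."""
    flags_and_version = 0
    header = struct.pack("!HH", flags_and_version, proto)  # network byte order
    return header + inner_packet


def gre_decapsulate(gre_packet: bytes) -> Tuple[int, bytes]:
    """Strip a basic 4-byte GRE header and return (protocol type, inner packet)."""
    if len(gre_packet) < 4:
        raise ValueError("truncated GRE header")
    flags_and_version, proto = struct.unpack("!HH", gre_packet[:4])
    if flags_and_version != 0:
        # Checksum, key, sequence bits or a non-zero version are out of scope here.
        raise ValueError("only the basic 4-byte GRE header is handled in this sketch")
    return proto, gre_packet[4:]
```

The customer's router performs the decapsulation side: it strips the header and forwards the inner, already-scrubbed packet into its network.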

Because we use Anycast GRE, every server across Cloudflare’s 200+ locations globally can send GRE traffic to customers. Every server needs to know the status of every tunnel, and every location has completely different network routes to customers. Where to start?
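How an edge server might turn per-tunnel status into a forwarding decision can be sketched as follows. The excerpt does not describe the selection logic, so this assumes a hypothetical model: each tunnel carries a numeric priority (lower is preferred) and a healthy flag kept up to date by health checks. Among the healthy tunnels with the best priority, a CRC32 hash of a flow key keeps each flow pinned to one tunnel.

```python
import zlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tunnel:
    name: str       # hypothetical tunnel identifier
    priority: int   # assumption: lower value means more preferred
    healthy: bool   # latest verdict from the health-check system


def select_tunnel(tunnels: List[Tunnel], flow_key: bytes) -> Optional[Tunnel]:
    """Pick a healthy tunnel at the best available priority, or None if all are down."""
    healthy = [t for t in tunnels if t.healthy]
    if not healthy:
        return None
    best = min(t.priority for t in healthy)
    # Sort by name so every server builds the same candidate order.
    candidates = sorted((t for t in healthy if t.priority == best), key=lambda t: t.name)
    # Deterministic hash (unlike Python's salted hash()) so a flow maps to one tunnel.
    return candidates[zlib.crc32(flow_key) % len(candidates)]
```

If every tunnel at the best priority fails its checks, traffic falls back to the next priority; when nothing is healthy the function returns None and leaves that decision to the caller.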

In this post, I’ll break down my work to improve the Magic Transit GRE tunnel health check system, creating a more stable experience for customers and dramatically reducing CPU and memory usage at Cloudflare’s edge.

Everybody has their own weather station

To decide where to send traffic, Cloudflare edge servers …

The Week in Internet News: Groups Ask Apple to Stop Photo-Scanning Plans

Apple photo privacy; FTC takes Facebook back to court; porn site bans porn; humanoid and pet-like robots

The post The Week in Internet News: Groups Ask Apple to Stop Photo-Scanning Plans appeared first on Internet Society.

Worth Reading: Simplifying Networks

Justin Pietsch wrote another fantastic blog post, this time describing how they simplified Amazon’s internal network, got rid of large-scale VLANs and multi-NIC hosts, moved load balancing functionality into a proxy layer managed by application teams, and finally introduced merchant silicon routers.

Why Is Professional Development Important?

Learning is a lifelong process that never stops. No one is born brilliant at their job; we learn and work toward our development goals. After all, it takes time and effort to learn new skills and apply new knowledge.

With competition as fierce as it is, professionals focus on their development to stay ahead. Here is what you need to know about why professional development is important.

Professional Development Explained

Before we look at why it’s important, we need to understand what professional development is. The term refers to the career training and continuing education an individual pursues after starting work, to develop new skills and advance in their career. Many jobs require continuing education.

However, professional development is not limited to formal education. It covers all the training and learning opportunities you can take to improve your work and skills, and those skills help you advance in your career.

Why Is Professional Development Important?

Here are some reasons professional development opportunities are important for any organization:

1. Increases Employee Retention

As an organization, you can offer professional development opportunities to increase employee retention. It …

Lucky Webapp Add Bootstrap

In this post, I will show you how to add Bootstrap styling to a Lucky webapp.

Software Used

The following software versions are used in this post.

Lucky - 0.28.0
Bootstrap - 5.1.0

Installation

First up, use Yarn to install Bootstrap. Next, import Bootstrap near the top of the …

The Mystery of Known Issues

I’ve spent the better part of the last month fighting a transient issue with my home ISP. I thought I had it figured out after a hardware failure at the connection point, but it crept back up after I got back from my Philmont trip. I spent a lot of energy upgrading my home equipment firmware and charting the seemingly random timing of the issue. I also called the technical support line and carefully explained what I was seeing and what had already been done to work on the problem.

The responses usually ranged from confused reactions to attempts to reset my cable modem, which never worked. It took several phone calls and lots of repeated explanations before I finally got a different answer from a technician. It turns out there was a known issue with the modem hardware! It’s something they’ve been working on for a few weeks and they’re not entirely sure what the ultimate fix is going to be. So for now I’m going to have to endure the daily resets. But at least I know I’m not going crazy!

Issues for Days

Known issues are a way of life in technology. If you’ve worked with any …

Heavy Networking 594: TLS 1.3 Down Deep With Ed Harmoush

Like anything in the world of IT, TLS has gone through various versions. TLS 1.1 and 1.2 are still commonly used, but TLS 1.3 is really where it’s at. Our guest is Ed Harmoush. Ed’s a professional instructor who’s researched TLS 1.3 and more as he’s prepped for his latest course offering, Practical TLS, which you can find at http://pracnet.net/tls. Use coupon PacketPushers100 to get $100 off this deep dive course from Ed.
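As a small practical companion to the episode topic, here is a sketch of a Python client that refuses anything older than TLS 1.3, using the standard library `ssl` module. It assumes Python 3.7+ and an OpenSSL build with TLS 1.3 support; the function names are illustrative.

```python
import socket
import ssl
from typing import Optional


def make_tls13_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and hostnames and only accepts TLS 1.3."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname=True by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443) -> Optional[str]:
    """Connect to host and return the negotiated protocol string, e.g. 'TLSv1.3'."""
    ctx = make_tls13_client_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

A server that only offers TLS 1.2 or older fails the handshake with an `ssl.SSLError` instead of silently negotiating down to an older version.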

The post Heavy Networking 594: TLS 1.3 Down Deep With Ed Harmoush appeared first on Packet Pushers.

Data protection controls with Cloudflare Browser Isolation

Starting today, your team can use Cloudflare’s Browser Isolation service to protect sensitive data inside the web browser. Administrators can define Zero Trust policies to control who can copy, paste, and print data in any web-based application.
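The copy, paste, and print controls can be pictured as a small policy lookup. This is a hypothetical model for illustration only, not Cloudflare's policy engine or API: each rule pairs an identity group with a web application and a set of allowed actions, the first matching rule wins, and anything not explicitly allowed is blocked (a default-deny assumption).

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List


class Action(Enum):
    COPY = "copy"
    PASTE = "paste"
    PRINT = "print"


@dataclass(frozen=True)
class Rule:
    group: str                  # hypothetical identity group, e.g. "finance"
    app_domain: str             # web application the rule applies to
    allowed: FrozenSet[Action]  # actions this group may perform in that application


def is_allowed(rules: List[Rule], user_groups: FrozenSet[str], domain: str, action: Action) -> bool:
    """Return True if the first rule matching the user's groups and domain allows the action."""
    for rule in rules:
        if rule.group in user_groups and rule.app_domain == domain:
            return action in rule.allowed
    return False  # assumption: no matching rule means the action is blocked
```

Ordering rules from most to least specific keeps first-match semantics predictable; a real deployment would also weigh device posture and other identity signals, which this sketch leaves out.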

In March 2021, for Security Week, we announced the general availability of Cloudflare Browser Isolation as an add-on within the Cloudflare for Teams suite of Zero Trust application access and browsing services. Browser Isolation protects users from browser-borne malware and zero-day threats by shifting the risk of executing untrusted website code from their local browser to a secure browser hosted on our edge.

We are also democratizing browser isolation for any business by including it with our Teams Enterprise Plan at no additional charge.¹

A different approach to zero trust browsing

The web browser, the same tool that connects users to critical business applications, is one of the most common attack vectors and one of the hardest to control.

Browsers started as simple tools intended to share academic documents over the Internet and over time have become sophisticated platforms that replaced virtually every desktop application in the workplace. The dominance of web-based applications in the workplace has created a challenge for security teams who …