Leveraging OpenStack to Orchestrate Distributed Cloud Deployments

The industry is seeing an increasing distribution of data centers, driven by a desire to improve the end user experiences,...

Announcing VMware Container Networking with Antrea for Kubernetes

By Cody McCain, Senior Product Manager and Susan Wu, Senior Product Marketing Manager, Networking and Security Business Unit

Enterprises benefit from collaborative engineering and receive the latest innovations from open source projects. However, it’s a challenge for enterprises to rely solely on community support to run their operations, because community support is best-effort and cannot provide a predefined SLA.

While Kubernetes itself is open source and part of the Cloud Native Computing Foundation (CNCF), running it takes an ecosystem of surrounding technologies, as curated by the CNCF: from the container registry and storage engine to the container network plugin.

Announcing VMware Container Networking with Antrea

With the new release of VMware Container Networking with Antrea, enterprises get the best of both worlds – access to the latest innovation from Project Antrea and world-class support from VMware. Container Networking with Antrea is the commercial offering consisting of and 24/7 support for Project Antrea.

Container Networking with Antrea will package the latest release of Project Antrea, version 0.9.1. Antrea is a purpose-built Kubernetes networking solution for public and private clouds, built on Open vSwitch, the open source technology optimized for distributed multi-layer switching performance. Antrea is designed to run anywhere Kubernetes ...

How Argo Tunnel engineering uses Argo Tunnel

Whether you are managing a fleet of machines or sharing a private site from your localhost, Argo Tunnel is here to help. On the Argo Tunnel team we help make origins accessible from the Internet in a secure and seamless manner. We also care deeply about productivity and developer experience for the team, so naturally we want to make sure we have a development environment that is reliable, easy to set up and fast to iterate on.

A brief history of our development environment (dev-stack)

Docker Compose

When our development team was still small, we used a docker-compose file to orchestrate the services needed to develop Argo Tunnel. There was no native support for hot reload, so every time an engineer made a change, they had to restart their dev-stack.

We could hack around this to get hot reload working with docker-compose, but when that failed, we had to waste time debugging Docker internals. As the team grew, we realized we needed to invest in improving our dev-stack.

At the same time, Cloudflare was in the process of migrating from Marathon to Kubernetes (k8s). We set out to find a tool that could detect changes in source code and ...
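
The excerpt doesn't say which tool the team chose. This is a minimal sketch of the underlying idea only: detecting source changes by polling. It takes snapshots of file modification times and diffs successive snapshots. Polling is an assumption made for illustration, since production watchers usually rely on OS notification APIs such as Linux inotify. The function names and the `.go` suffix filter are hypothetical.

```python
import os
import tempfile
import time
from pathlib import Path
from typing import Callable, Dict, Optional, Set, Tuple


def snapshot(root: str, suffixes: Tuple[str, ...] = (".go",)) -> Dict[str, float]:
    """Map every file under `root` ending in one of `suffixes` to its mtime.

    The `.go` default is a hypothetical choice for illustration.
    """
    mtimes: Dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(suffixes):
                path = os.path.join(dirpath, name)
                mtimes[path] = os.stat(path).st_mtime
    return mtimes


def diff(old: Dict[str, float], new: Dict[str, float]) -> Set[str]:
    """Files added, modified, or deleted between two snapshots."""
    added_or_modified = {p for p, t in new.items() if old.get(p) != t}
    deleted = set(old) - set(new)
    return added_or_modified | deleted


def watch(root: str, on_change: Callable[[Set[str]], None],
          interval: float = 0.5, iterations: Optional[int] = None) -> None:
    """Poll `root` and call `on_change` (e.g. rebuild and restart a service).

    Runs forever unless `iterations` bounds the number of polls.
    """
    prev = snapshot(root)
    polls = 0
    while iterations is None or polls < iterations:
        time.sleep(interval)
        cur = snapshot(root)
        changed = diff(prev, cur)
        if changed:
            on_change(changed)
        prev = cur
        polls += 1


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        src = Path(root) / "main.go"
        src.write_text("package main\n")
        before = snapshot(root)
        # Simulate an edit by moving the file's mtime forward explicitly,
        # which avoids depending on the filesystem's timestamp resolution.
        bumped = before[str(src)] + 10
        os.utime(src, (bumped, bumped))
        print(diff(before, snapshot(root)))
```

Polling keeps the sketch portable and dependency-free, at the cost of up to `interval` seconds of latency and a full directory walk per poll.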

Review Questions: Switching, Bridging and Routing

One of the most annoying parts of every training content development project was the ubiquitous question somewhere near the end of the process: “and now we’d need a few review questions”. I’m positive anyone who has ever been involved in a similar project can feel the pain that question causes…

Writing good review questions requires a particularly devious state of mind, sometimes combined with “I would really like to get the answer to this one” (obviously you’d mark such questions as “needs further research”, and if you’re Donald Knuth the question would be “prove that P != NP”).

Security Policy Self-Service for Developers and DevOps Teams

In today’s economy, digital assets (applications, data, and processes) determine business success. Cloud-native applications are designed to iterate rapidly, shortening time-to-value for businesses. Organizations that can build and deploy their applications quickly have a significant competitive advantage. To this end, more and more developers are creating and leading DevOps teams that not only drive application development but also take on operational responsibilities formerly owned by platform and security teams.

What’s the Value of a Self-Service Approach?

Cloud-native applications are often designed and deployed as microservices. The development team that owns a microservice understands its behavior and is in the best position to define and manage its network security. A self-service model lets developers follow a simple workflow and generate network policies with minimal effort. When application connectivity problems occur, developers should be able to diagnose and resolve them quickly without depending on resources outside the team.
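
The post doesn't show what such a workflow generates. As an illustrative sketch only, this assumes standard Kubernetes `NetworkPolicy` objects (`networking.k8s.io/v1`), `app` pod labels, and a hypothetical helper named `allow_ingress_policy`. Under those assumptions, a developer-facing generator for a single "who may call my service" rule might look like this. Enforcement requires a CNI plugin that implements NetworkPolicy.

```python
import json


def allow_ingress_policy(name, namespace, app, from_app, port, protocol="TCP"):
    """Hypothetical helper: build a NetworkPolicy manifest (as a dict) that lets
    pods labelled app=<from_app> in the same namespace reach pods labelled
    app=<app> on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": app}},
            # Listing Ingress isolates the selected pods for ingress: only traffic
            # matched by an ingress rule (in this or another policy) is admitted.
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # A podSelector without a namespaceSelector matches pods in
                    # the policy's own namespace.
                    "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                    "ports": [{"protocol": protocol, "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_policy(
        "frontend-to-api", "shop", app="api", from_app="frontend", port=8080
    )
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(policy, indent=2))
```

You could save the output to a file and apply it with `kubectl apply -f <file>`. The names `frontend-to-api`, `shop`, `api`, and `frontend` are placeholders.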

Developers and DevOps teams can also take a leading role in managing security, which is an integral part of cloud-native applications. There are two aspects to security in the context of Kubernetes.

- Cluster security: a uniform set ...

Reflecting on Three Years of Board Service and a Commitment to Quality Improvement

Departing Trustee Glenn McKnight looks back at his three years of service as a member of the Internet Society Board of Trustees.

During the past three years we have seen a tremendous amount of productive work by a functional and focused Internet Society Board of Trustees. This included not only the normal board and committee work, but also the extra efforts associated with the selection of a new CEO, creation of the Internet Society Foundation, and meeting the challenges of the proposed PIR/Ethos transaction.

It’s important to learn from these experiences, but it’s also important to focus on achievements and to reassert the core values of the Internet Society as a force for good in the Internet ecosystem. We see the Internet Society focusing its efforts with purposeful strategic direction led by CEO Andrew Sullivan and his team. As a departing Trustee, I would like to see the Internet Society explore more opportunities for members to learn from one another, including “Meet the Board”, to foster improved communication and to help teach the community about the role of the Board of Trustees.

During these three years, my work beyond the normal board work has also involved committee ...

Day Two Cloud 063: The How And Why Of Migrating Databases To The Cloud

Planning a database migration to the cloud? If you're going to do it right, prepare to dig in, because you have many options and trade-offs to consider, including whether to go with IaaS or PaaS, security and monitoring issues, and much, much more. Day Two Cloud dives into the inner workings of database migration with expert Joey D’Antoni, Principal Consultant at Denny Cherry & Associates.

The post Day Two Cloud 063: The How And Why Of Migrating Databases To The Cloud appeared first on Packet Pushers.

Cloudflare and Human Rights: Joining the Global Network Initiative (GNI)

Consistent with our mission to help build a better Internet, Cloudflare has long recognized the importance of conducting our business in a way that respects the rights of Internet users around the world. We provide free services to important voices online, from human rights activists and independent journalists to the entities that help maintain our democracies, who would otherwise be vulnerable to cyberattack. We work hard to develop internal mechanisms and build products that enhance user privacy. And we believe that being transparent about the types of requests we receive from government entities, and how we respond to them, is critical to maintaining customer trust.

As Cloudflare continues to expand our global network, we think there is more we can do to formalize our commitment to respecting human rights online. To that end, we are excited to announce that we have joined the Global Network Initiative (GNI) as an observer. GNI is one of the world's leading human rights organizations in the information and communications technology (ICT) sector.

Business + Human Rights

Understanding Cloudflare’s new partnership with GNI requires some additional background on how human rights concepts apply to businesses.

In 1945, following the end of World War II, 850 delegates ...

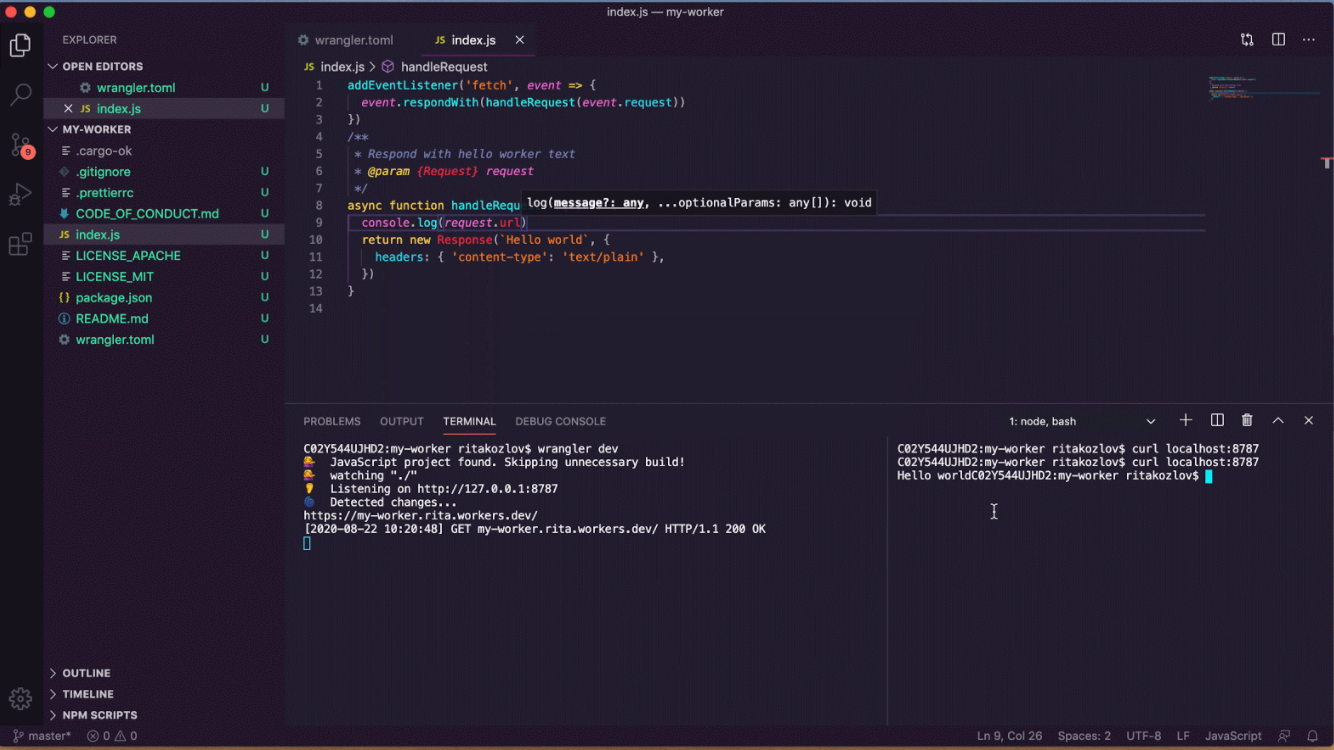

Announcing wrangler dev — the Edge on localhost

Cloudflare Workers, our serverless platform, allows developers around the world to run their applications from our network of 200 data centers, as close as possible to their users.

A few weeks ago, we announced a release candidate for wrangler dev. Today, we're excited to take wrangler dev, the world’s first edge-based development environment, to GA with the release of wrangler 1.11.

Think locally, develop globally

It was once assumed that to successfully run an application on the web, you had to acquire a server, set it up (in a data center you hopefully had access to), and then maintain it on an ongoing basis. Luckily for most of us, the emergence of the cloud challenged that assumption. The cloud was always assumed to be centralized: large data centers in a single region (“us-east-1”), reserved for compute. The edge? That was for caching static content.

Again, assumptions are being challenged.

Cloudflare Workers is about moving compute from a centralized location to the edge. And it makes sense: if users are distributed all over the globe, why should all of them be routed to us-east-1, on the opposite side of the world, ...
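
A back-of-the-envelope sketch makes the latency argument concrete. It computes the great-circle (haversine) distance between two points and converts it into a propagation-only round-trip floor. The assumptions: light in optical fibre covers roughly 200 km per millisecond, the fibre follows the great-circle path, and the coordinates are approximate and for illustration only. Real routes are longer, so actual round trips are higher. The Sydney user and the nearby "edge" point are hypothetical. For a user in Sydney, this puts the floor to Northern Virginia (where us-east-1 is located) well over 100 ms, versus a fraction of a millisecond to a nearby location.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Assumption: light in optical fibre travels at roughly 2/3 of c,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def great_circle_km(a, b):
    """Haversine distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(a, b):
    """Propagation-only round-trip time, assuming fibre along the great-circle path."""
    return 2 * great_circle_km(a, b) / FIBER_KM_PER_MS


# Approximate coordinates, for illustration only.
NORTHERN_VIRGINIA = (39.04, -77.49)  # region where us-east-1 is located
SYDNEY = (-33.87, 151.21)            # hypothetical user location
NEARBY_EDGE = (-33.80, 151.10)       # hypothetical nearby data center

if __name__ == "__main__":
    print(f"Sydney -> us-east-1:   >= {min_rtt_ms(SYDNEY, NORTHERN_VIRGINIA):.0f} ms RTT")
    print(f"Sydney -> nearby edge: >= {min_rtt_ms(SYDNEY, NEARBY_EDGE):.2f} ms RTT")
```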

Docker Swarm Services behind the Scenes

Remember the claim that networking is becoming obsolete and that everyone else will simply bypass the networking teams (source)?

Good news for you – there are many fast growing overlay solutions that are adopted by apps and security teams and bypass the networking teams altogether.

That sounds awesome in a VC pitch deck. Let’s see how well that concept works out in reality using Docker Swarm as an example (Kubernetes is probably even worse).