Heavy Networking 493: Taming Service Provider Complexity In 5G Networks (Sponsored)

5G presents a new set of challenges for service provider networks. As networks become increasingly dynamic and distributed to deliver an ever-evolving set of services, providers have to contend with increased complexity. Juniper Networks joins the Packet Pushers to discuss how its automation capabilities and tools can help tame the complexity beast. Our guest is Amit Bhardwaj, Director of Product Management at Juniper Networks.

The post Heavy Networking 493: Taming Service Provider Complexity In 5G Networks (Sponsored) appeared first on Packet Pushers.

The Two-Year Anniversary of The Athenian Project: Preparing for the 2020 Elections.

Two years ago, Cloudflare launched its Athenian Project, an effort to protect state and local government election websites from cyber attacks. With the two-year anniversary and many 2020 elections approaching, we are renewing our commitment to provide Cloudflare’s highest level of services for free to protect election websites and ensure the preservation of these critical infrastructure sites. We started the project at Cloudflare because it directly aligns with our mission: to help build a better Internet. We believe the Internet plays a helpful role in democracy and in ensuring constituents’ right to information. By helping state and local government election websites, we ensure the protection of voters’ voices, preserve citizens’ confidence in the democratic process, and enhance voter participation.

We are currently helping 156 local or state websites in 26 states to combat DDoS attacks, SQL injections, and many other hostile attempts to threaten their operations. That is 34 more domains, in states such as Ohio, Florida, Kansas, South Carolina and Wisconsin, than when we reported statistics after last year’s election.

The need for security protection of critical election infrastructure is not new, but it is in the spotlight again as the 2020 U.S. elections approach, with the President, 435 seats Continue reading

Sizing the Buffer

The topic of buffer sizing was the subject of a workshop at Stanford University in early December 2019. The workshop drew together academics, researchers, vendors and operators to look at this topic from their perspectives. The following are my notes from this workshop.

Introducing Load Balancing Analytics

Cloudflare aspires to make Internet properties everywhere faster, more secure, and more reliable. Load Balancing helps with speed and reliability and has been evolving over the past three years.

Let’s go through a scenario that highlights a bit more of what a Load Balancer is and the value it can provide. A standard load balancer comprises a set of pools, each of which contains origin servers identified by hostnames and/or IP addresses. Each load balancer is assigned a routing policy, which determines how an origin pool is selected.
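
To make the pool/origin/routing-policy model concrete, here is a minimal Python sketch of one possible routing policy: priority-ordered failover, where traffic goes to the first pool that still has enough healthy origins. The class names, the `healthy` flag and the `minimum_origins` threshold are hypothetical illustrations, not Cloudflare’s API or configuration schema; the addresses are from documentation ranges.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Origin:
    address: str          # hostname or IP address of the origin server
    healthy: bool = True  # hypothetical: outcome of the latest health check


@dataclass
class Pool:
    name: str
    origins: list[Origin] = field(default_factory=list)
    minimum_origins: int = 1  # hypothetical: healthy origins needed for the pool to count as up

    def is_healthy(self) -> bool:
        return sum(o.healthy for o in self.origins) >= self.minimum_origins


def select_pool_failover(pools: list[Pool]) -> Optional[Pool]:
    """Failover routing policy: return the first healthy pool in priority order."""
    for pool in pools:
        if pool.is_healthy():
            return pool
    return None  # every pool is down


# Usage: the primary pool on ACME is down, so traffic fails over to the secondary cloud.
acme = Pool("acme-us-east", [Origin("203.0.113.10", healthy=False)])
secondary = Pool("secondary-cloud", [Origin("198.51.100.20")])
chosen = select_pool_failover([acme, secondary])
print(chosen.name)  # secondary-cloud
```

Other routing policies (for example, geographic or latency-based steering) would replace `select_pool_failover` while keeping the same pool and origin structure.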

Let’s say you build an API that is using cloud provider ACME Web Services. Unfortunately, ACME had a rough week, and their service had a regional outage in their Eastern US region. Consequently, your website was unable to serve traffic during this period, which resulted in reduced brand trust from users and missed revenue. To prevent this from happening again, you decide to take two steps: use a secondary cloud provider (in order to avoid having ACME as a single point of failure) and use Cloudflare’s Load Balancing to take advantage of the multi-cloud architecture. Cloudflare’s Load Balancing can help you maximize your API’s availability for your new architecture. For example, you Continue reading

EVPN Route Targets, Route Distinguishers, and VXLAN Network IDs

Got this interesting question from one of my readers:

A BGP EVPN message carries both the VNI and the RT. When importing a route, is it enough to have either the VNI ID or the RT to import it into the respective VRF? When importing routes into a VRF, which is considered first, the RT or the VNI ID?

A bit of terminology first (which you’d be very familiar with if you ever had to study how MPLS/VPN works):
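
In the MPLS/VPN model this terminology comes from (RFC 4364), a VRF imports a route when one of the route’s Route Targets matches one of the VRF’s import RTs, while the Route Distinguisher only serves to keep prefixes unique in BGP. The Python sketch below illustrates that matching rule, assuming EVPN follows the same RT-based import model and treating the VNI as data carried with the route rather than as an import criterion. The class and function names are hypothetical, and the sketch is not offered as the article’s answer to the reader’s question.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EvpnRoute:
    rd: str                        # Route Distinguisher: keeps the prefix unique in BGP, not used for import
    route_targets: frozenset[str]  # RT extended communities attached to the route
    vni: int                       # VXLAN Network ID carried with the route (ignored by this sketch's import rule)


@dataclass(frozen=True)
class Vrf:
    name: str
    import_rts: frozenset[str]     # import RTs configured on this VRF


def importing_vrfs(route: EvpnRoute, vrfs: list[Vrf]) -> list[str]:
    """MPLS/VPN-style import: a VRF takes the route if any route RT is in its import set.

    Assumption: RT matching alone drives import; the VNI is never consulted here.
    """
    return [vrf.name for vrf in vrfs if route.route_targets & vrf.import_rts]


route = EvpnRoute(rd="192.0.2.1:100", route_targets=frozenset({"65000:100"}), vni=10100)
vrfs = [Vrf("red", frozenset({"65000:100"})), Vrf("blue", frozenset({"65000:200"}))]
print(importing_vrfs(route, vrfs))  # ['red']
```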

Read more ...

Broadcom Boasts ‘World’s First’ 25.6 Tb/s Switch Silicon

“Keeping with the Broadcom cadence, approximately every two years we’ve been doubling the...

The Week in Internet News: Australian Lawmakers Push for ‘Fix’ to Encryption Law

An encryption fix: The Australian Labor Party says it will push for changes to an encryption law, passed in late 2018, that requires tech companies to give law enforcement agencies access to encrypted communications, ZDNet reports. Labor Party lawmakers have raised concerns about the law’s effect on the country’s tech industry, but it appears they don’t have the votes to make changes.

Telemedicine needs access: The use of telemedicine is growing, but slow Internet speeds in rural areas are delaying its benefits in parts of Indiana, according to a story from the Kokomo Tribune, posted at Govtech.com. Some Internet-based diagnosis services need interactive videoconferencing, which requires broadband speeds that aren’t available in parts of the state.

The future of IoT security: IoT World Today has six predictions for Internet of Things security in 2020. Among them: Facilities managers will become more concerned about smart building security, with buildings becoming a new avenue of attack. The security of 5G networks will also become an issue with new attacks on the way.

Goodbye WhatsApp: WhatsApp has begun automatically removing Kashmiri residents from the service, due to a long-running Internet shutdown in the region controlled by India, The Verge reports. WhatsApp’s Continue reading

Ericsson Corruption Probe Takes $1B Bite

“Through slush funds, bribes, gifts, and graft, Ericsson conducted telecom business with the...

Adlink Tackles Industrial IoT as Latest 5G-Drive Member

The 5G-Drive consortium has its sights set on the development of 5G autonomous drone scouts and...

Network Break 264: Broadcom’s New Tomahawk 4 Hits 25.6Tbps; Juniper Announces SD-LAN For EX Switches

Take a Network Break! Broadcom ships its fastest ASIC yet, the 25.6Tbps Tomahawk 4; Juniper Networks gets a new CTO, enables cloud control of its EX switches, and rolls out new CPE; Palo Alto Networks reports its latest quarterly financials; and we cover lots of listener follow-up.

The post Network Break 264: Broadcom’s New Tomahawk 4 Hits 25.6Tbps; Juniper Announces SD-LAN For EX Switches appeared first on Packet Pushers.

Tech Bytes: Vitec Group Transforms Its WAN With Silver Peak’s SD-WAN (Sponsored)

Vitec Group has transformed its global WAN with an SD-WAN rollout from Silver Peak. The company has boosted the performance of critical business applications, reduced backup latency by 70%, and decommissioned expensive MPLS circuits in favor of DIA. Find out how in this sponsored Tech Bytes podcast. Our guest is Ben Skinner, head of corporate networks and infrastructure at Vitec Group.

The post Tech Bytes: Vitec Group Transforms Its WAN With Silver Peak’s SD-WAN (Sponsored) appeared first on Packet Pushers.

Money Moves: November 2019

Google buys CloudSimple, challenges VMware Cloud on AWS; Palo Alto Networks announced plans to...

Segment Routing (SR) – What You Need To Know

This blog will provide you insights to help you on your journey on Segment Routing (SR) by...

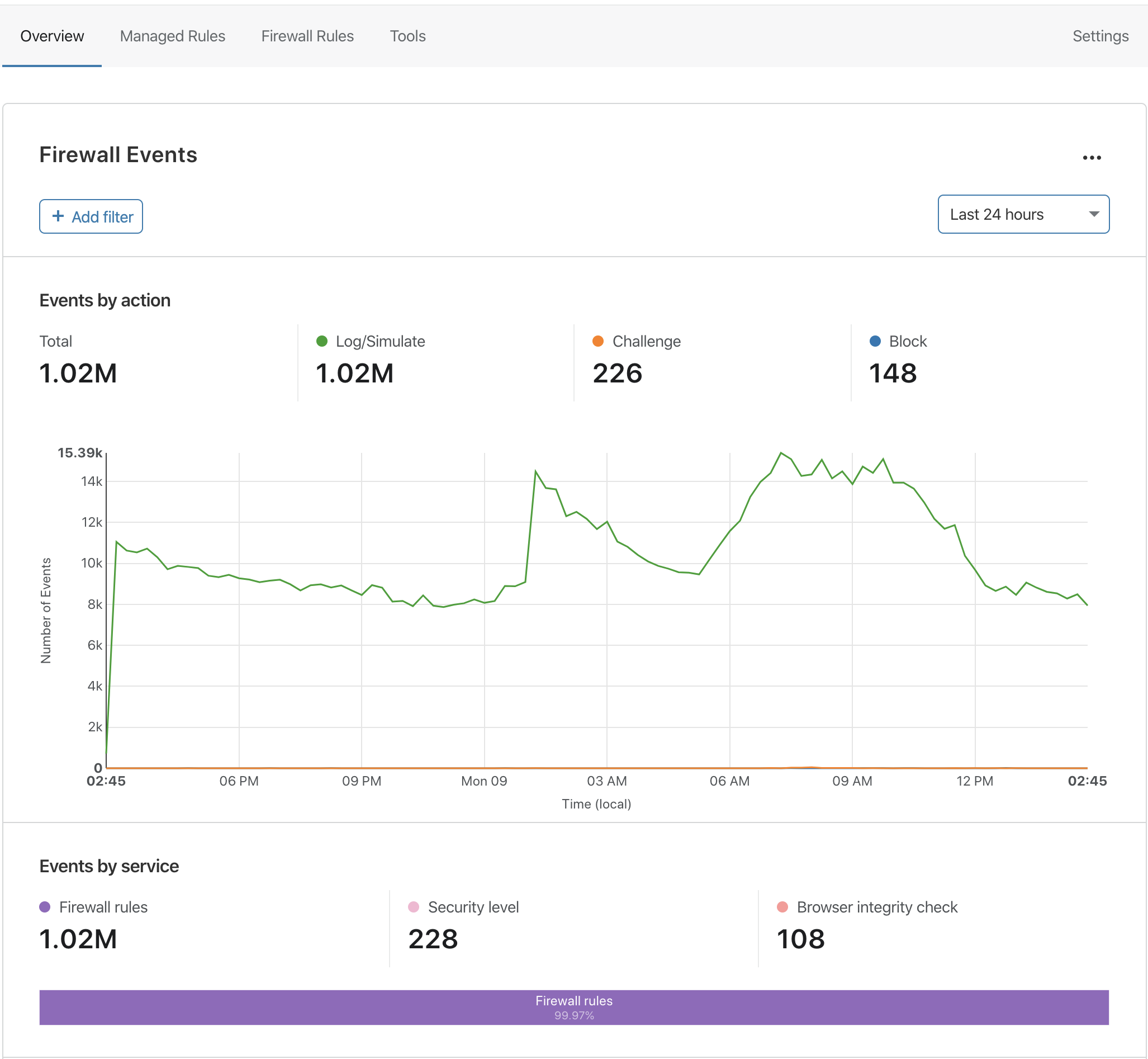

Firewall Analytics: Now available to all paid plans

Our Firewall Analytics tool enables customers to quickly identify and investigate security threats using an intuitive interface. Until now, this tool had only been available to our Enterprise customers, who have been using it to get detailed insights into their traffic and better tailor their security configurations. Today, we are excited to make Firewall Analytics available to all paid plans and share details on several recent improvements we have made.

All paid plans are now able to take advantage of these capabilities, along with several important enhancements we’ve made to improve our customers’ workflow and productivity.

Increased Data Retention and Adaptive Sampling

Previously, Enterprise customers could view 14 days of Firewall Analytics for their domains. Today we’re increasing that retention to 30 days, and again to 90 days in the coming months. Business and Professional plan zones will get 30 and 3 days of retention, respectively.

In addition to the extended retention, we are introducing adaptive sampling to guarantee that Firewall Analytics results are displayed in the Cloudflare Dashboard quickly and reliably, even when you are under a massive attack or otherwise receiving a large volume of requests.

Adaptive sampling works similarly to Netflix: when your internet connection runs low Continue reading
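
The excerpt does not describe how Cloudflare implements adaptive sampling, so the following is only a generic illustration of the idea, not Cloudflare’s mechanism. This Python sketch assumes the query layer picks a sample rate from a fixed row budget (reading everything when traffic is light, a shrinking fraction during an attack) and scales sampled counts back up by the inverse of that rate. The function names and the `row_budget` parameter are hypothetical.

```python
import random
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def pick_sample_rate(total_rows: int, row_budget: int) -> float:
    """Fraction of rows to read so a query touches roughly row_budget rows."""
    if total_rows <= row_budget:
        return 1.0  # light traffic: read everything, exact answer
    return row_budget / total_rows


def estimate_count(events: Sequence[T], predicate: Callable[[T], bool],
                   row_budget: int, seed: int = 0) -> float:
    """Estimate how many events match predicate while reading about row_budget of them."""
    rng = random.Random(seed)
    rate = pick_sample_rate(len(events), row_budget)
    # Bernoulli sampling: keep each event independently with probability `rate`.
    sampled = [e for e in events if rng.random() < rate]
    matches = sum(1 for e in sampled if predicate(e))
    # Scale the sampled count back up to an estimate for the full data set.
    return matches / rate


print(estimate_count(list(range(100)), lambda x: x % 2 == 0, row_budget=1000))  # 50.0
```

Like a video stream dropping to a lower resolution instead of stalling, the trade-off is accuracy for responsiveness: query cost stays bounded by the budget, while the estimate’s relative error grows as the sample rate falls.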

Announcing deeper insights and new monitoring capabilities from Cloudflare Analytics

This week we’re excited to announce a number of new products and features that provide deeper security and reliability insights, “proactive” analytics when there’s a problem, and more powerful ways to explore your data.

If you’ve been a user or follower of Cloudflare for a little while, you might have noticed that we take pride in turning technical challenges into easy solutions. Flip a switch or run a few API commands, and the attack you’re facing is now under control or your site is now 20% faster. However, this ease of use is even more helpful if it’s complemented by analytics. Before you make a change, you want to be sure that you understand your current situation. After the change, you want to confirm that it worked as intended, ideally as fast as possible.

Because of the front-line position of Cloudflare’s network, we can provide comprehensive metrics regarding both your traffic and the security and performance of your Internet property. And best of all, there’s nothing to set up or enable. Cloudflare Analytics is automatically available to all Cloudflare users and doesn’t rely on JavaScript trackers, meaning that our metrics include traffic from APIs and bots and are not skewed Continue reading