Log every request to corporate apps, no code changes required

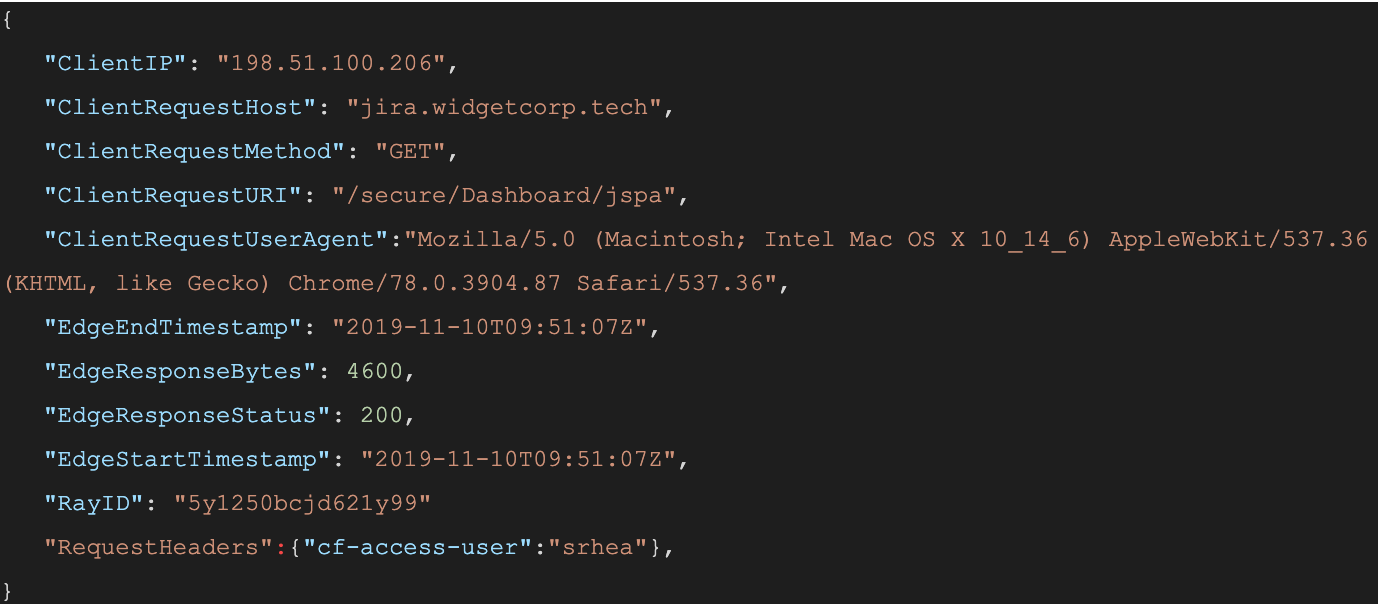

When a user connects to a corporate network through an enterprise VPN client, this is what the VPN appliance logs:

The administrator of that private network knows the user opened the door at 12:15:05 but, in most cases, has no visibility into what they did next. Once inside that private network, users can reach internal tools, sensitive data, and production environments. Restricting that reach requires complicated network segmentation and, often, server-side application changes. Logging the steps that an individual takes inside that network is even more difficult.

Cloudflare Access does not improve VPN logging; it replaces this model. Cloudflare Access secures internal sites by evaluating every request, not just the initial login, for identity and permission. Instead of a private network, administrators deploy corporate applications behind Cloudflare using our authoritative DNS. Administrators can then integrate their team’s SSO and build user- and group-specific rules to control who can reach applications behind the Access Gateway.
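To make the contrast with a login-only VPN log concrete, here is a minimal sketch of per-request evaluation. It is not Cloudflare's implementation or API: the `Rule`, `Identity`, and `Gateway` names, the rule fields, and the log record shape are all hypothetical, chosen only to illustrate checking identity against user- and group-specific rules on every request and recording each decision.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: who may reach a given hostname."""
    hostname: str
    allowed_emails: frozenset = frozenset()
    allowed_groups: frozenset = frozenset()


@dataclass(frozen=True)
class Identity:
    """Hypothetical identity, as an SSO provider might assert it."""
    email: str
    groups: frozenset = frozenset()


@dataclass
class Gateway:
    """Illustrative gateway that evaluates and logs every request."""
    rules: list
    audit_log: list = field(default_factory=list)

    def handle(self, identity: Identity, hostname: str, path: str) -> bool:
        # Evaluate this specific request, not just the initial login.
        allowed = any(
            rule.hostname == hostname
            and (
                identity.email in rule.allowed_emails
                or bool(identity.groups & rule.allowed_groups)
            )
            for rule in self.rules
        )
        # Record the decision for every request, allowed or denied.
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "email": identity.email,
                "hostname": hostname,
                "path": path,
                "allowed": allowed,
            }
        )
        return allowed
```

With a gateway like this, a single session produces one log entry per request, so an administrator could see which paths a user requested and which were denied, instead of a single "connected at 12:15:05" line.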

When a request is made to a site behind Access, Cloudflare prompts the visitor to log in with an identity provider. Access then checks that user’s identity against the configured rules and, if permitted, allows the request to proceed. Access performs these checks on each request a user Continue reading

Dell Technologies CTO: Why AI Needs Empathy

If we want humans to trust artificial intelligence, then we need to teach the machines empathy,...

Writing userspace USB drivers for abandoned devices

Palo Alto Networks Leaps Into SASE Market

Fulfilling Gartner's predictions, Palo Alto Networks announced its transition to a secure access...

Carrier supporting Carrier with BGP-LU

In our last post we talked about the less used method of deploying CsC where we ran OSPF and LDP inside the CSC-PE routing-instance.

Note: I can’t help myself apparently so be aware that Carrier of Carriers (CoC) is the same as Carrier supporting Carrier (CsC)

This required some changes to be made to our default LDP export policy as well as how we moved routes between the inet.3 and inet.0 tables. That being said, if you’re a single org it might make good sense to run things that way. I liked how you were able to see all of the remote LDP domain loopbacks in your local inet.3 table which in my mind made it easier to imagine the LSP paths.

That being said, it is clearly not the preferred deployment methodology. Most examples you’ll find leverage BGP (BGP-LU specifically) for the CSC-CE to CSC-PE connections as well as within the local label domains. So in this example, we’ll do just that. Large chunks of the base configuration will be the same as they were in the previous post, but for the sake of clarity I’ll post the starting configurations and diagrams here Continue reading

IETF 106 Begins Nov 16 in Singapore – Here is how you can participate remotely in building open Internet standards

Starting Saturday, November 16, 2019, the 106th meeting of the Internet Engineering Task Force (IETF) will begin in Singapore. Over 1,000 engineers from around the world will gather in the convention center to join together in the debates and discussions that will advance the open standards that make the Internet possible. They are gathered, in the words of the IETF mission, “to make the Internet work better”.

Pick your protocol – the future of DNS, DoH, TLS, HTTP(S), QUIC, SIP, TCP, IPv6, ACME, NTP… and many, many more will be debated in the rooms and hallways over the next week.

What if you cannot be IN Singapore?

If you are not able to be in Singapore in person this week, the good news is you can participate remotely! The IETF website explains the precise steps you need to take. To summarize quickly:

- Register as a remote participant. There is no cost.

- Review the agenda to figure out which sessions you want to join. (I will note that there are some very interesting (to me!) Birds-of-a-Feather (BOF) sessions at IETF 106.)

- Choose the channel(s) you will use to participate, including:

  - Meetecho (video, slides, audio, and chat)

  - Audio Continue reading

Weekly Wrap: Juniper Guns for Cisco, Aruba With Mist AI

SDxCentral Weekly Wrap for Nov. 15, 2019: Juniper enhances its Mist AI platform and launches a new...