DDoS detection with advanced real-time flow analytics

The diagram shows two high-bandwidth flows of traffic to the Customer Network. The first (shown in blue) is a bulk transfer of data to a big data application. The second (shown in red) is a distributed denial of service (DDoS) attack in which large numbers of compromised hosts attempt to flood the link connecting the Customer Network to the upstream Transit Provider. Industry standard sFlow telemetry from the customer router streams to an instance of the sFlow-RT real-time analytics engine, which is programmed to detect (and mitigate) the DDoS attack. This article builds on the Docker testbed to demonstrate how advanced flow analytics can be used to separate the two types of traffic and detect the DDoS attack.

docker run --rm -d -e "COLLECTOR=host.docker.internal" -e "SAMPLING=100" \

--net=host -v /var/run/docker.sock:/var/run/docker.sock:ro \

--name=host-sflow sflow/host-sflow

First, start a Host sFlow agent using the pre-built sflow/host-sflow image to generate the sFlow telemetry that would stream from the switches and routers in a production deployment.
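With SAMPLING=100, each packet sample stands in for roughly 100 frames, so an analyzer has to scale sample counts back up before comparing them with a threshold. The following is a minimal sketch of that arithmetic. The helper name and the sample numbers are hypothetical. It also assumes the threshold is expressed in frames per second.

```python
def estimate_frame_rate(sampled_frames: int, sampling_rate: int, interval_s: float) -> float:
    """Estimate frames per second from packet samples.

    With 1-in-N sampling each sample represents roughly N frames, so the
    estimate is sampled_frames * N / interval_s.
    """
    if sampling_rate <= 0 or interval_s <= 0:
        raise ValueError("sampling_rate and interval_s must be positive")
    return sampled_frames * sampling_rate / interval_s


# Illustrative numbers (assumed): 250 samples over 2 seconds at SAMPLING=100.
rate = estimate_frame_rate(250, 100, 2.0)
print(rate)  # 12500.0
```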

setFlow('ddos_amplification', {

keys:'ipdestination,udpsourceport',

value: 'frames',

values: ['count:ipsource']

});

setThreshold('ddos_amplification', {

metric:'ddos_amplification',

value: 10000,

byFlow:true,

timeout: 2

});

setEventHandler(function(event) {

var [ipdestination,udpsourceport] = event.flowKey.split(',');

var [sourcecount] = event.values;

Continue reading
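The setFlow() call above groups traffic by destination address and UDP source port, measures frames, and counts distinct sources for each group. The following Python sketch emulates that idea offline. It is not the sFlow-RT API; the Sample record and all traffic are synthetic. The excerpt stops before the event handler's logic, so the minimum distinct-source filter is an assumption about how the source count could separate a many-source attack from a single-source bulk transfer.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Set, Tuple


class Sample(NamedTuple):
    """One decoded packet sample (hypothetical, simplified record)."""
    ip_source: str
    ip_destination: str
    udp_source_port: int


def amplification_events(samples: Iterable[Sample], sampling_rate: int,
                         interval_s: float, threshold_fps: float,
                         min_sources: int) -> Dict[Tuple[str, int], Tuple[float, int]]:
    """Group samples by (ipdestination, udpsourceport), estimate frames/s per
    key, and count distinct sources (the analogue of count:ipsource)."""
    frames: Dict[Tuple[str, int], int] = defaultdict(int)
    sources: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for s in samples:
        key = (s.ip_destination, s.udp_source_port)
        frames[key] += 1
        sources[key].add(s.ip_source)
    events = {}
    for key, count in frames.items():
        fps = count * sampling_rate / interval_s
        # The frame-rate test mirrors setThreshold(); the distinct-source test
        # is an assumed use of the count because the handler is truncated.
        if fps > threshold_fps and len(sources[key]) >= min_sources:
            events[key] = (fps, len(sources[key]))
    return events


# Synthetic traffic: 50 reflectors sending from UDP port 53, plus one single-source stream.
attack = [Sample(f"203.0.113.{i % 50}", "192.0.2.129", 53) for i in range(300)]
bulk = [Sample("198.51.100.7", "192.0.2.10", 40000) for _ in range(300)]
print(amplification_events(attack + bulk, 100, 1.0, 10000, min_sources=10))
# {('192.0.2.129', 53): (30000.0, 50)}
```

Both synthetic flows exceed the frame threshold. Only the attack has many sources, which is why the distinct-source count is a useful second signal.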

SR Linux in Containerlab

This article uses Containerlab to emulate a simple network and experiment with Nokia SR Linux and sFlow telemetry. Containerlab provides a convenient method of emulating network topologies and configurations before deploying into production on physical switches.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/srlinux.yml

Download the Containerlab topology file.

containerlab deploy -t srlinux.yml

Deploy the topology.

docker exec -it clab-srlinux-h1 traceroute 172.16.2.2

Run traceroute on h1 to verify path to h2.

traceroute to 172.16.2.2 (172.16.2.2), 30 hops max, 46 byte packets

1 172.16.1.1 (172.16.1.1) 2.234 ms * 1.673 ms

2 172.16.2.2 (172.16.2.2) 0.944 ms 0.253 ms 0.152 ms

Results show path to h2 (172.16.2.2) via router interface (172.16.1.1).
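When the lab is rebuilt repeatedly, the path check can be automated. The following is a minimal sketch that extracts hop addresses from traceroute output in the format shown above. The regular expression is an assumption fitted to that output. Hops where every probe times out (for example "3 * * *") are skipped.

```python
import re
from typing import List

# Matches hop lines such as "1 172.16.1.1 (172.16.1.1) 2.234 ms * 1.673 ms"
HOP_RE = re.compile(r"^\s*(\d+)\s+\S+\s+\(([0-9.]+)\)")


def traceroute_hops(output: str) -> List[str]:
    """Return the responding IPv4 address of each hop, in order."""
    hops = []
    for line in output.splitlines():
        match = HOP_RE.match(line)
        if match:
            hops.append(match.group(2))
    return hops


sample_output = """traceroute to 172.16.2.2 (172.16.2.2), 30 hops max, 46 byte packets
1 172.16.1.1 (172.16.1.1) 2.234 ms * 1.673 ms
2 172.16.2.2 (172.16.2.2) 0.944 ms 0.253 ms 0.152 ms"""

print(traceroute_hops(sample_output))
```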

docker exec -it clab-srlinux-switch sr_cli

Access SR Linux command line on switch.

Using configuration file(s): []

Welcome to the srlinux CLI.

Type 'help' (and press <ENTER>) if you need any help using this.

--{ + running }--[ ]--

A:switch#

SR Linux CLI describes how to use the interface.

A:switch# show system sflow status

Get status of sFlow telemetry.

-------------------------------------------------------------------------

Admin State Continue reading

Using Ixia-c to test RTBH DDoS mitigation

Remote Triggered Black Hole Scenario describes how to use the Ixia-c traffic generator to simulate a DDoS flood attack. Ixia-c supports the Open Traffic Generator API that is used in the article to program two traffic flows: the first representing normal user traffic (shown in blue) and the second representing attack traffic (shown in red).

The article goes on to demonstrate the use of remotely triggered black hole (RTBH) routing to automatically mitigate the simulated attack. The chart above shows traffic levels during two simulated attacks. The DDoS mitigation controller is disabled during the first attack. Enabling the controller for the second attack causes the attack traffic to be dropped the instant it crosses the threshold.

The diagram shows the Containerlab topology used in the Remote Triggered Black Hole Scenario lab (which can run on a laptop). The Ixia traffic generator's eth1 interface represents the Internet and its eth2 interface represents the Customer Network being attacked. Industry standard sFlow telemetry from the Customer router, ce-router, streams to the DDoS mitigation controller (running an instance of DDoS Protect). When the controller detects a denial of service attack it pushes a control via BGP to the ce-router, Continue reading
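An RTBH control is a host route for the victim address that the router is configured to discard. The following is a minimal sketch of the decision a controller makes. The RtbhControl record is hypothetical. Tagging the route with the RFC 7999 well-known BLACKHOLE community (65535:666) is an assumption; the excerpt does not say which community DDoS Protect uses.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional

# RFC 7999 well-known BLACKHOLE community; whether a given controller and
# router pair use it (rather than a locally defined community) is assumed.
BLACKHOLE = "65535:666"


@dataclass
class RtbhControl:
    """Hypothetical control: a host route the router should discard."""
    prefix: str
    communities: List[str] = field(default_factory=lambda: [BLACKHOLE])


def rtbh_control(victim: str, attack_bps: float, threshold_bps: float) -> Optional[RtbhControl]:
    """Return a host-route blackhole for the victim once the threshold is crossed."""
    addr = ipaddress.ip_address(victim)
    if attack_bps < threshold_bps:
        return None
    host_len = 32 if addr.version == 4 else 128
    return RtbhControl(prefix=f"{addr}/{host_len}")


print(rtbh_control("192.0.2.129", attack_bps=2e9, threshold_bps=1e9))
print(rtbh_control("192.0.2.129", attack_bps=1e8, threshold_bps=1e9))
```

Because the whole /32 is discarded, legitimate traffic to the victim is dropped too. The trade-off is protecting the shared link at the cost of that one address.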

BGP Remotely Triggered Blackhole (RTBH)

DDoS attacks and BGP Flowspec responses describes how to simulate and mitigate common DDoS attacks. This article builds on the previous examples to show how BGP Remotely Triggered Blackhole (RTBH) controls can be applied in situations where BGP Flowspec is not available, or is unsuitable as a mitigation response.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Start Containerlab.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/ddos.yml

Download the Containerlab topology file.

sed -i "s/\\.ip_flood\\.action=filter/\\.ip_flood\\.action=drop/g" ddos.yml

Change the mitigation policy for IP Flood attacks from Flowspec filter to RTBH.

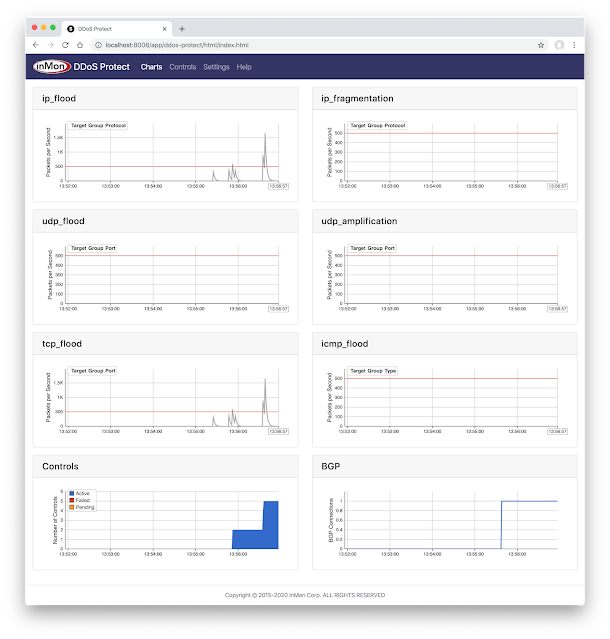

containerlab deploy -t ddos.yml

Deploy the topology. Access the DDoS Protect screen at http://localhost:8008/app/ddos-protect/html/

docker exec -it clab-ddos-attacker hping3 \

--flood --rawip -H 47 192.0.2.129

Launch an IP Flood attack. The DDoS Protect dashboard shows that as soon as the ip_flood attack traffic reaches the threshold a control is implemented and the attack traffic is immediately dropped. The entire process between the attack being launched, detected, and mitigated happens within a second, ensuring minimal impact on network capacity and services.

docker exec -it clab-ddos-sp-router vtysh -c "show running-config"

See Continue reading

Real-time flow telemetry for routers

The last few years have seen leading router vendors add support for sFlow monitoring technology that has long been the industry standard for switch monitoring. Router implementations of sFlow include:

- Arista 7020R Series Routers, 7280R Series Routers, 7500R Series Routers, 7800R3 Series Routers

- Cisco 8000 Series Routers, ASR 9000 Series Routers, NCS 5500 Series Routers

- Juniper ACX Series Routers, MX Series Routers, PTX Series Routers

- Huawei NetEngine 8000 Series Routers

Note: Most routers also support Cisco NetFlow/IPFIX. Rapidly detecting large flows, sFlow vs. NetFlow/IPFIX describes why you should choose sFlow if you are interested in real-time monitoring and control applications.

DDoS mitigation is a popular use case for sFlow telemetry in routers. The combination of sFlow for real-time DDoS detection with BGP RTBH / Flowspec mitigation on routing platforms makes for a compelling solution.

DDoS protection quickstart guide describes how to deploy sFlow along with BGP RTBH/Flowspec to automatically detect and mitigate DDoS flood attacks. The use of sFlow provides sub-second visibility into network traffic during the periods of high packet loss Continue reading

DDoS attacks and BGP Flowspec responses

This article describes how to use the Containerlab DDoS testbed to simulate a variety of flood attacks and observe the automated mitigation action designed to eliminate the attack traffic.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Start Containerlab.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/ddos.yml

Download the Containerlab topology file.

containerlab deploy -t ddos.yml

Deploy the topology and access the DDoS Protect screen at http://localhost:8008/app/ddos-protect/html/.

docker exec -it clab-ddos-sp-router vtysh -c "show bgp ipv4 flowspec detail"

At any time, run the command above to see the BGP Flowspec rules installed on the sp-router. Simulate the volumetric attacks using hping3.

Note: The hping3 --rand-source option generates packets with random source addresses, which would create a more authentic DDoS attack simulation. However, the option is not used in these examples because the victim's responses to the attack packets (ICMP Port Unreachable) would be sent back to the random addresses and could leak out of the Containerlab test network. Instead, varying source / destination ports are used to create entropy in the attacks.
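"Entropy" here can be made concrete as the Shannon entropy of a header field across packets. The following is a minimal sketch, with hypothetical port lists. It shows that a fixed port carries 0 bits while 256 equally used ports carry 8 bits. This is an illustration of the concept, not a metric that DDoS Protect is claimed to compute.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(values: Iterable[Hashable]) -> float:
    """Shannon entropy, in bits, of the empirical distribution of values."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    h = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return h if h > 0 else 0.0  # normalise -0.0 when only one value occurs


fixed_port = [53] * 1000                 # e.g. hping3 keeping one source port
varying_ports = list(range(1024, 1280))  # 256 distinct ports, one packet each
print(shannon_entropy(fixed_port), shannon_entropy(varying_ports))
```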

When you are finished trying the examples below, run the following command Continue reading

Containerlab DDoS testbed

Real-time telemetry from a 5 stage Clos fabric describes lightweight emulation of realistic data center switch topologies using Containerlab. This article extends the testbed to experiment with distributed denial of service (DDoS) detection and mitigation techniques described in Real-time DDoS mitigation using BGP RTBH and FlowSpec.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Start Containerlab.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/ddos.yml

Download the Containerlab topology file.

containerlab deploy -t ddos.yml

Finally, deploy the topology. Connect to the web interface, http://localhost:8008. The sFlow-RT dashboard verifies that telemetry is being received from 1 agent (the Customer Network, ce-router, in the diagram above). See the sFlow-RT Quickstart guide for more information. Now access the DDoS Protect application at http://localhost:8008/app/ddos-protect/html/. The BGP chart at the bottom right verifies that the BGP connection has been established so that controls can be sent to the Customer Router, ce-router.

docker exec -it clab-ddos-attacker hping3 --flood --udp -k -s 53 192.0.2.129

Start a simulated DNS amplification attack using hping3. The udp_amplification chart shows that traffic matching the attack signature has crossed the threshold. The Controls chart shows Continue reading
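The hping3 options -s 53 and -k fix every packet's UDP source port at 53, so the flood looks like DNS responses reflected at the victim. The following is a minimal sketch of mapping a UDP source port to the reflected service it implies. The port table is an illustrative, non-exhaustive list of commonly abused services. It is not DDoS Protect's udp_amplification signature.

```python
from typing import Optional

# Illustrative map of UDP services often abused for reflection/amplification;
# an assumption for this sketch, not the DDoS Protect signature list.
AMPLIFICATION_PORTS = {
    19: "chargen",
    53: "dns",
    123: "ntp",
    161: "snmp",
    1900: "ssdp",
    11211: "memcached",
}


def amplification_service(udp_source_port: int) -> Optional[str]:
    """Name the reflected service implied by a UDP source port, if any."""
    return AMPLIFICATION_PORTS.get(udp_source_port)


print(amplification_service(53), amplification_service(40000))
```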

Real-time EVPN fabric visibility

Real-time telemetry from a 5 stage Clos fabric describes lightweight emulation of realistic data center switch topologies using Containerlab. This article builds on the example to demonstrate visibility into Ethernet Virtual Private Network (EVPN) traffic as it crosses a routed leaf and spine fabric.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Start Containerlab.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/evpn3.yml

Download the Containerlab topology file.

containerlab deploy -t evpn3.yml

Finally, deploy the topology.

docker exec -it clab-evpn3-leaf1 vtysh -c "show running-config"

See the configuration of the leaf1 switch.

Building configuration...

Current configuration:

!

frr version 8.1_git

frr defaults datacenter

hostname leaf1

no ipv6 forwarding

log stdout

!

router bgp 65001

bgp bestpath as-path multipath-relax

bgp bestpath compare-routerid

neighbor fabric peer-group

neighbor fabric remote-as external

neighbor fabric description Internal Fabric Network

neighbor fabric capability extended-nexthop

neighbor eth1 interface peer-group fabric

neighbor eth2 interface peer-group fabric

!

address-family ipv4 unicast

network 192.168.1.1/32

exit-address-family

!

address-family l2vpn evpn

neighbor fabric activate

advertise-all-vni

exit-address-family

exit

!

ip nht resolve-via-default

!

end

The loopback address on the switch, 192.168.1.1/32, is advertised to neighbors so that the VxLAN tunnel endpoint Continue reading
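EVPN traffic crosses the routed fabric encapsulated in VXLAN over UDP between tunnel endpoints such as leaf1's loopback. That is why sFlow packet samples from the spines show nested Ethernet/IP/UDP/VXLAN/Ethernet headers. The following is a minimal sketch of the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit set, reserved bits, and a 24-bit VNI. The VNI value used is arbitrary and not taken from the evpn3 lab.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port for VXLAN (RFC 7348)
I_FLAG = 0x08          # "VNI valid" flag in the first header byte


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", I_FLAG << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & I_FLAG:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8


# VNI 10 is an arbitrary example value.
print(vxlan_header(10).hex())  # 0800000000000a00
```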

Who monitors the monitoring systems?

Adrian Cockroft poses an interesting question in Who monitors the monitoring systems? He states, "The first thing that would be useful is to have a monitoring system that has failure modes which are uncorrelated with the infrastructure it is monitoring. ... I don’t know of a specialized monitor-of-monitors product, which is one reason I wrote this blog post."

This article offers a response, describing how to introduce an uncorrelated monitor-of-monitors into the data center to provide real-time visibility that survives when the primary monitoring systems fail.

Summary of the AWS Service Event in the Northern Virginia (US-EAST-1) Region, "This congestion immediately impacted the availability of real-time monitoring data for our internal operations teams, which impaired their ability to find the source of congestion and resolve it." December 10th, 2021

Standardizing on a small set of communication primitives (gRPC, Thrift, Kafka, etc.) simplifies the creation of large scale distributed services. The communication primitives abstract the physical network to provide reliable communication to support distributed services running on compute nodes. Monitoring is typically regarded as a distributed service that is part of the compute infrastructure, relying on agents on compute nodes to transmit measurements to scale out analysis, storage, automation, and Continue reading

DDoS Mitigation with Cisco, sFlow, and BGP Flowspec

DDoS protection quickstart guide shows how sFlow streaming telemetry and BGP RTBH/Flowspec are combined by the DDoS Protect application running on the sFlow-RT real-time analytics engine to automatically detect and block DDoS attacks.

This article discusses how to deploy the solution in a Cisco environment. Cisco has a long history of supporting BGP Flowspec on their routing platforms and has recently added support for sFlow, see Cisco 8000 Series routers, Cisco ASR 9000 Series Routers, and Cisco NCS 5500 Series Routers.

First, IOS-XR doesn't provide a way to connect to the non-standard BGP port (1179) that sFlow-RT uses by default. Allowing sFlow-RT to open the standard BGP port (179) requires that the service be given additional Linux capabilities.

docker run --rm --net=host --sysctl net.ipv4.ip_unprivileged_port_start=0 \

sflow/ddos-protect -Dbgp.port=179

The above command launches the prebuilt sflow/ddos-protect Docker image. Alternatively, if sFlow-RT has been installed as a deb / rpm package, then the required permissions can be added to the service.

sudo systemctl edit sflow-rt.service

Type the above command to edit the service configuration and add the following lines:

[Service]

AmbientCapabilities=CAP_NET_BIND_SERVICE

Next, edit the sFlow-RT configuration file for the DDoS Protect application:

sudo vi /usr/local/sflow-rt/conf.d/ddos-protect.conf

Topology aware fabric analytics

Real-time telemetry from a 5 stage Clos fabric describes how to emulate and monitor the topology shown above using Containerlab and sFlow-RT. This article extends the example to demonstrate how topology awareness enhances analytics.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Start Containerlab.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/clos5.yml

Download the Containerlab topology file.

sed -i "s/prometheus/topology/g" clos5.yml

Change the sFlow-RT image from sflow/prometheus to sflow/topology in the Containerlab topology. The sflow/topology image packages sFlow-RT with useful applications that combine topology awareness with analytics.

containerlab deploy -t clos5.yml

Deploy the topology.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/clos5.json

Download the sFlow-RT topology file.

curl -X PUT -H "Content-Type: application/json" -d @clos5.json \

http://localhost:8008/topology/json

Post the topology to sFlow-RT. Connect to the sFlow-RT Topology application, http://localhost:8008/app/topology/html/. The dashboard confirms that all the links and nodes in the topology are streaming telemetry. There is currently no traffic on the network, so none of the nodes in the topology are sending flow data.
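For a different fabric, a topology document can be generated instead of written by hand. The following sketch assumes that sFlow-RT's topology JSON describes each link as an object with node1/port1/node2/port2 fields under "links". Check that against the downloaded clos5.json before relying on it. The port naming is hypothetical, and the sketch covers only a single leaf/spine tier, not the full 5 stage fabric.

```python
import json
from typing import Dict, List


def leaf_spine_topology(leaves: List[str], spines: List[str]) -> Dict[str, dict]:
    """Full-mesh leaf/spine links in an assumed sFlow-RT topology layout.

    Port naming (leaf port eth<j+1> to spine j, spine port eth<i+1> to leaf i)
    is hypothetical; replace it with the real interface names.
    """
    links = {}
    for i, leaf in enumerate(leaves):
        for j, spine in enumerate(spines):
            links[f"{leaf}-{spine}"] = {
                "node1": leaf, "port1": f"eth{j + 1}",
                "node2": spine, "port2": f"eth{i + 1}",
            }
    return {"links": links}


topology = leaf_spine_topology(["leaf1", "leaf2"], ["spine1", "spine2"])
print(json.dumps(topology, indent=2))
```

Saving the output to a file and posting it with the same curl -X PUT command shown above would replace the topology held by sFlow-RT.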

docker exec -it clab-clos5-h1 iperf3 -c 172.16.4.2

Generate traffic. You should see the Nodes No Flows number drop Continue reading

Real-time telemetry from a 5 stage Clos fabric

CONTAINERlab described how to use FRRouting and Host sFlow in a Docker container to emulate switches in a Clos (leaf/spine) fabric. The recently released open source project, https://github.com/sflow-rt/containerlab, simplifies and automates the steps needed to build and monitor topologies.

docker run --rm -it --privileged --network host --pid="host" \

-v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \

-v ~/clab:/home/clab -w /home/clab \

ghcr.io/srl-labs/clab bash

Run the above command to start Containerlab if you already have Docker installed; the ~/clab directory will be created to persist settings. Otherwise, Installation provides detailed instructions for a variety of platforms.

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/clos5.yml

Next, download the topology file for the 5 stage Clos fabric shown at the top of this article.

containerlab deploy -t clos5.yml

Finally, deploy the topology.

Note: The 3 stage Clos topology, clos3.yml, described in the previous article is also available.

The initial launch may take a couple of minutes as the container images are downloaded for the first time. Once the images are downloaded, the topology deploys in around 10 seconds.

An instance of the sFlow-RT real-time analytics engine receives industry standard sFlow telemetry from all the switches in the network. All of Continue reading

UDP vs TCP for real-time streaming telemetry

This article compares UDP and TCP and their suitability for transporting real-time network telemetry. The results demonstrate that poor throughput and high message latency in the face of packet loss make TCP unsuitable for providing visibility during congestion events. We demonstrate that the use of UDP transport by the sFlow telemetry standard overcomes the limitations of TCP to deliver robust real-time visibility during extreme traffic events when visibility is most needed.

Summary of the AWS Service Event in the Northern Virginia (US-EAST-1) Region, "This congestion immediately impacted the availability of real-time monitoring data for our internal operations teams, which impaired their ability to find the source of congestion and resolve it." December 10th, 2021

The data in these charts was created using Mininet to simulate packet loss in a simple network. If you are interested in replicating these results, Multipass describes how to run Mininet on your laptop.

sudo mn --link tc,loss=5

For example, the above command simulates a simple network consisting of two hosts connected by a switch. A packet loss rate of 5% is configured for each link.

Simple Python scripts running on the simulated hosts were used to simulate transfer of network telemetry.

#! Continue reading
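The following toy model captures the effect the charts describe. It is not the author's script, which is truncated above. The model assumes each packet crosses two links (h1 to switch, switch to h2), each with independent 5% loss, so roughly 90% of packets arrive. It also assumes a fixed one-way delay, an initial retransmission timeout that doubles on each retry, and in-order delivery for TCP. Congestion-window backoff and ACK loss are deliberately left out.

```python
import random
from statistics import mean
from typing import Tuple


def end_to_end_delivery(loss_per_link: float, links: int = 2) -> float:
    """Probability a packet crosses `links` independently lossy links."""
    return (1.0 - loss_per_link) ** links


def simulate(n_messages: int = 1000, loss_per_link: float = 0.05,
             one_way_s: float = 0.01, rto_s: float = 0.2,
             interval_s: float = 0.1, seed: int = 1) -> Tuple[float, float, float]:
    """Return (UDP delivered fraction, TCP mean latency, TCP max latency).

    UDP: one attempt per message; delivered messages arrive after one_way_s.
    TCP: a lost segment is resent after an RTO that doubles on every retry,
    and in-order delivery means one loss also delays messages queued behind it.
    """
    rng = random.Random(seed)
    q = end_to_end_delivery(loss_per_link)
    udp_delivered = 0
    tcp_latency = []
    last_delivery = 0.0
    for i in range(n_messages):
        sent = i * interval_s
        if rng.random() < q:  # UDP: delivered or lost, never delayed
            udp_delivered += 1
        t, rto = sent, rto_s
        while rng.random() >= q:  # TCP: wait out the timeout, then resend
            t += rto
            rto *= 2
        delivered = max(t + one_way_s, last_delivery)  # head-of-line blocking
        last_delivery = delivered
        tcp_latency.append(delivered - sent)
    return udp_delivered / n_messages, mean(tcp_latency), max(tcp_latency)


print(round(end_to_end_delivery(0.05), 4))  # 0.9025
udp_frac, tcp_mean, tcp_max = simulate()
print(f"UDP delivered {udp_frac:.1%}; TCP mean {tcp_mean * 1000:.0f} ms, max {tcp_max * 1000:.0f} ms")
```

UDP loses a few percent of measurements, but every surviving one is current. TCP eventually delivers everything, but a single loss stalls all later measurements for at least one timeout.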

Cisco NCS 5500 Series Routers

Cisco already supports industry standard sFlow telemetry across a range of products and the recent IOS-XR Release 7.5.1 extends support to Cisco NCS 5500 series routers.

Note: The NCS 5500 series routers also support Cisco NetFlow. Rapidly detecting large flows, sFlow vs. NetFlow/IPFIX describes why you should choose sFlow if you are interested in real-time monitoring and control applications.

flow exporter-map SF-EXP-MAP-1

version sflow v5

!

packet-length 1468

transport udp 6343

source GigabitEthernet0/0/0/1

destination 192.127.0.1

dfbit set

!

Configure the sFlow analyzer address in an exporter-map.

flow monitor-map SF-MON-MAP

record sflow

sflow options

extended-router

extended-gateway

if-counters polling-interval 300

input ifindex physical

output ifindex physical

!

exporter SF-EXP-MAP-1

!

Configure sFlow options in a monitor-map.

sampler-map SF-SAMP-MAP

random 1 out-of 20000

!

Define the sampling rate in a sampler-map.
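The 1-in-20,000 rate in the sampler-map trades data volume against accuracy. The following sketch estimates the sample rate and the resulting accuracy. It uses the rule of thumb published by sFlow.org, under which the estimation error at 95% confidence is at most 196 * sqrt(1/c) percent, where c is the number of samples. The traffic load (10 Gbit/s of 1,000-byte packets) is an assumed example.

```python
import math


def samples_per_second(link_bps: float, avg_packet_bytes: float, sampling_n: int) -> float:
    """Expected packet samples per second on a link at 1-in-N sampling."""
    packets_per_second = link_bps / (8 * avg_packet_bytes)
    return packets_per_second / sampling_n


def max_error_percent(num_samples: int) -> float:
    """sFlow.org rule of thumb: error <= 196 * sqrt(1 / samples) percent (95%)."""
    return 196.0 * math.sqrt(1.0 / num_samples)


# Assumed load: 10 Gbit/s of 1,000-byte packets sampled at 1-in-20,000.
rate = samples_per_second(10e9, 1000, 20000)
print(rate)  # 62.5
print(round(max_error_percent(int(rate * 60)), 2))  # error bound after one minute
```

Busy links yield many samples quickly, so accuracy improves with traffic volume. This is why a fixed 1-in-N rate still gives good estimates for the large flows that matter during an attack.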

interface GigabitEthernet0/0/0/3

flow datalinkframesection monitor-map SF-MON-MAP sampler SF-SAMP-MAP ingress

Enable sFlow on each interface for complete visibility into network traffic.

The diagram shows the general architecture of an sFlow monitoring deployment. All the switches stream sFlow telemetry to a central sFlow analyzer Continue reading

Cisco ASR 9000 Series Routers

Cisco already supports industry standard sFlow telemetry across a range of products and the recent IOS-XR Release 7.5.1 extends support to Cisco ASR 9000 Series Routers.

Note: The ASR 9000 series routers also support Cisco NetFlow. Rapidly detecting large flows, sFlow vs. NetFlow/IPFIX describes why you should choose sFlow if you are interested in real-time monitoring and control applications.

The following commands configure an ASR 9000 series router to sample packets at 1-in-20,000 and stream telemetry to an sFlow analyzer (192.127.0.1) on UDP port 6343.

flow exporter-map SF-EXP-MAP-1

version sflow v5

!

packet-length 1468

transport udp 6343

source GigabitEthernet0/0/0/1

destination 192.127.0.1

dfbit set

!

Configure the sFlow analyzer address in an exporter-map.

flow monitor-map SF-MON-MAP

record sflow

sflow options

extended-router

extended-gateway

if-counters polling-interval 300

input ifindex physical

output ifindex physical

!

exporter SF-EXP-MAP-1

!

Configure sFlow options in a monitor-map.

sampler-map SF-SAMP-MAP

random 1 out-of 20000

!

Define the sampling rate in a sampler-map.

interface GigabitEthernet0/0/0/3

flow datalinkframesection monitor-map SF-MON-MAP sampler SF-SAMP-MAP ingress

Enable sFlow on each interface for complete visibility into network traffic.

The diagram shows the general architecture of an sFlow monitoring deployment. All the switches stream sFlow telemetry to a central sFlow analyzer for network Continue reading

Real-time Kubernetes cluster monitoring example

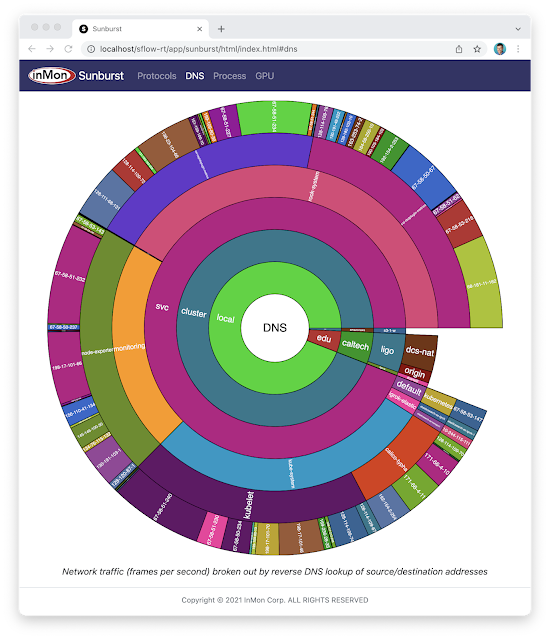

The Sunburst GPU chart updates every second to show a real-time view of the share of GPU resources being consumed by namespaces operating on the Nautilus hyperconverged Kubernetes cluster. The Nautilus cluster tightly couples distributed storage, GPU, and CPU resources to share among the participating research organizations.

Sunburst

The recently released open source Sunburst application provides a real-time visualization of the protocols running on a network. The Sunburst application runs on the sFlow-RT real-time analytics platform, which receives standard streaming sFlow telemetry from switches and routers throughout the network to provide comprehensive visibility.

docker run -p 8008:8008 -p 6343:6343/udp sflow/prometheus

The pre-built sflow/prometheus Docker image packages sFlow-RT with the applications for exploring real-time sFlow analytics. Run the command above, configure network devices to send sFlow to the application on UDP port 6343 (the default sFlow port), and connect with a web browser to port 8008 to access the user interface.
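Before involving sFlow-RT, it can help to confirm that devices are actually sending telemetry to UDP port 6343. The following is a minimal sketch that decodes only the fixed sFlow version 5 datagram header: version, agent address type and address, sub-agent ID, sequence number, uptime, and sample count. The samples themselves are not decoded. Run the listener on a host where the sFlow-RT container is not already bound to 6343.

```python
import ipaddress
import socket
import struct


def parse_sflow_header(datagram: bytes) -> dict:
    """Decode the fixed sFlow v5 datagram header (samples are not decoded)."""
    version, addr_type = struct.unpack_from("!II", datagram, 0)
    if version != 5:
        raise ValueError(f"unsupported sFlow version {version}")
    offset = 8
    if addr_type == 1:  # IPv4 agent address
        agent = ipaddress.IPv4Address(datagram[offset:offset + 4])
        offset += 4
    elif addr_type == 2:  # IPv6 agent address
        agent = ipaddress.IPv6Address(datagram[offset:offset + 16])
        offset += 16
    else:
        raise ValueError(f"unknown agent address type {addr_type}")
    sub_agent_id, sequence, uptime_ms, num_samples = struct.unpack_from("!IIII", datagram, offset)
    return {
        "version": version,
        "agent": str(agent),
        "sub_agent_id": sub_agent_id,
        "sequence": sequence,
        "uptime_ms": uptime_ms,
        "num_samples": num_samples,
    }


def listen(port: int = 6343) -> None:
    """Print the header of every datagram received (runs until interrupted)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            data, (sender, _) = sock.recvfrom(65535)
            print(sender, parse_sflow_header(data))
```

Gaps in the per-agent sequence numbers indicate datagrams lost in transit.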

The chart at the top of this article demonstrates the visibility that sFlow can provide into nested protocol stacks that result from network virtualization. For example, the most deeply nested set of protocols shown in the chart is:

- eth: Ethernet

- q: IEEE 802.1Q VLAN

- trill: Transparent Interconnection of Lots of Links (TRILL)

- eth: Ethernet

- q: IEEE 802.1Q VLAN

- ip: Internet Protocol (IP) version 4

- udp: User Datagram Protocol (UDP)

- vxlan: Virtual eXtensible Local Area Network (VXLAN)

- eth: Ethernet

- ip: Internet Protocol (IP) version 4

- esp: IPsec Encapsulating Continue reading
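A sunburst chart is a tree drawn as rings: each layer of the protocol stack becomes one ring, and each segment's size is the traffic below it. The following is a minimal sketch of building that tree. It assumes stacks are given as dot-separated protocol names, as in the list above. The traffic counts are hypothetical.

```python
from typing import Dict


def stack_depth(stack: str) -> int:
    """Number of protocol layers in a dotted stack such as 'eth.ip.udp'."""
    return len(stack.split("."))


def build_sunburst(stack_counts: Dict[str, int]) -> dict:
    """Nest dotted stacks into a tree; each node's value sums the stacks below it."""
    root = {"name": "total", "value": 0, "children": {}}
    for stack, count in stack_counts.items():
        node = root
        node["value"] += count
        for proto in stack.split("."):
            node = node["children"].setdefault(
                proto, {"name": proto, "value": 0, "children": {}})
            node["value"] += count
    return root


nested = "eth.q.trill.eth.q.ip.udp.vxlan.eth.ip.esp"
print(stack_depth(nested))  # 11
tree = build_sunburst({"eth.ip.tcp": 5, "eth.ip.udp": 3, nested: 1})
```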

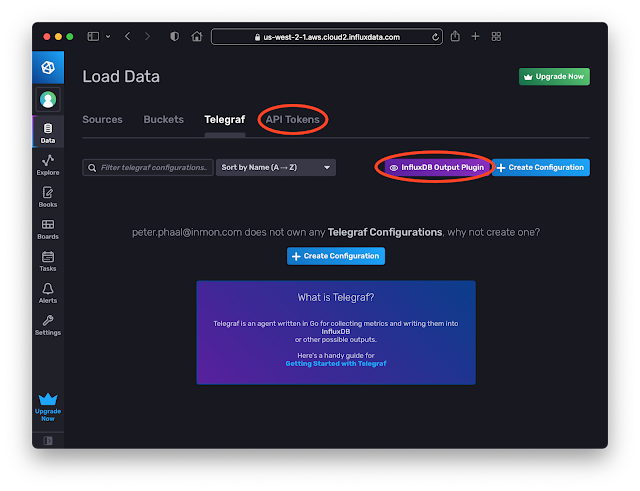

InfluxDB Cloud

InfluxDB Cloud is a cloud hosted version of InfluxDB. The free tier makes it easy to try out the service and has enough capability to satisfy simple use cases. In this article we will explore how metrics based on sFlow streaming telemetry can be pushed into InfluxDB Cloud.

docker run -p 8008:8008 -p 6343:6343/udp --name sflow-rt -d sflow/prometheus

Use Docker to run the pre-built sflow/prometheus image which packages sFlow-RT with the sflow-rt/prometheus application. Configure sFlow agents to stream data to this instance.

Create an InfluxDB Cloud account. Click the Data tab. Click on the Telegraf option and the InfluxDB Output Plugin button to get the URL to post data. Click the API Tokens option and generate a token.

[agent]

interval = "15s"

round_interval = true

metric_batch_size = 5000

metric_buffer_limit = 10000

collection_jitter = "0s"

flush_interval = "10s"

flush_jitter = "0s"

precision = "1s"

hostname = ""

omit_hostname = true

[[outputs.influxdb_v2]]

urls = ["INFLUXDB_CLOUD_URL"]

token = Continue reading

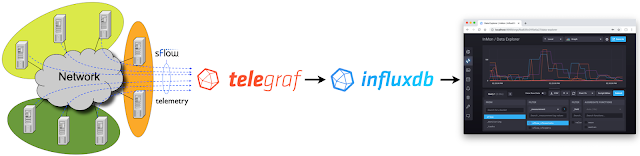

Telegraf sFlow input plugin

The Telegraf agent is bundled with an SFlow Input Plugin for importing sFlow telemetry into the InfluxDB time series database. However, the plugin has major caveats that severely limit the value that can be derived from sFlow telemetry.

Currently only Flow Samples of Ethernet / IPv4 & IPv4 TCP & UDP headers are turned into metrics. Counters and other header samples are ignored.

Series Cardinality Warning

This plugin may produce a high number of series which, when not controlled for, will cause high load on your database.
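Cardinality grows with the product of the distinct values of each tag, so per-flow tags such as addresses multiply quickly. The following is a minimal sketch that contrasts the worst-case bound with the number of series actually created. The tag names and counts are hypothetical.

```python
import math
from typing import Dict, Hashable, Iterable, Tuple


def worst_case_cardinality(distinct_values_per_tag: Dict[str, int]) -> int:
    """Upper bound on series for one measurement: product of distinct tag values."""
    return math.prod(distinct_values_per_tag.values())


def observed_cardinality(tag_sets: Iterable[Tuple[Hashable, ...]]) -> int:
    """Series actually created: the number of distinct tag combinations seen."""
    return len(set(tag_sets))


# Assumed example: per-flow tags on a modest network.
bound = worst_case_cardinality({"agent": 50, "ifindex": 48, "src_ip": 1000, "dst_ip": 1000})
print(f"{bound:,}")  # 2,400,000,000
```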

InfluxDB 2.0 released describes how to use sFlow-RT to convert sFlow telemetry into useful InfluxDB metrics.

Using sFlow-RT overcomes the limitations of the Telegraf sFlow Input Plugin, making it possible to fully realize the value of sFlow monitoring:

- Counters are a major component of sFlow, efficiently streaming detailed network counters that would otherwise need to be polled via SNMP. Counter telemetry is ingested by sFlow-RT and used to compute an extensive set of Metrics that can be imported into InfluxDB.

- Flow Samples are fully decoded by sFlow-RT, yielding visibility that extends beyond the basic Ethernet / IPv4 / TCP / UDP header metrics supported by the Telegraf plugin to include ARP, ICMP, Continue reading
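Counter samples carry cumulative interface counters, such as input octets, and metrics like utilization are derived from the difference between two readings. The following sketch shows that derivation. It is an illustration of the idea, not sFlow-RT's code. The readings are hypothetical, and the modulo assumes at most one counter wrap between readings.

```python
def utilization_percent(octets_t0: int, octets_t1: int, t0_s: float, t1_s: float,
                        if_speed_bps: float, counter_bits: int = 64) -> float:
    """Interface utilization (%) from two cumulative octet-counter readings."""
    if t1_s <= t0_s:
        raise ValueError("readings must be time ordered")
    delta = (octets_t1 - octets_t0) % (2 ** counter_bits)  # tolerate one wrap
    bits_per_second = delta * 8 / (t1_s - t0_s)
    return 100.0 * bits_per_second / if_speed_bps


# Assumed readings: 3.75 GB received in 30 s on a 10 Gbit/s port.
print(utilization_percent(0, 3_750_000_000, 0.0, 30.0, 10e9))  # 10.0
```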

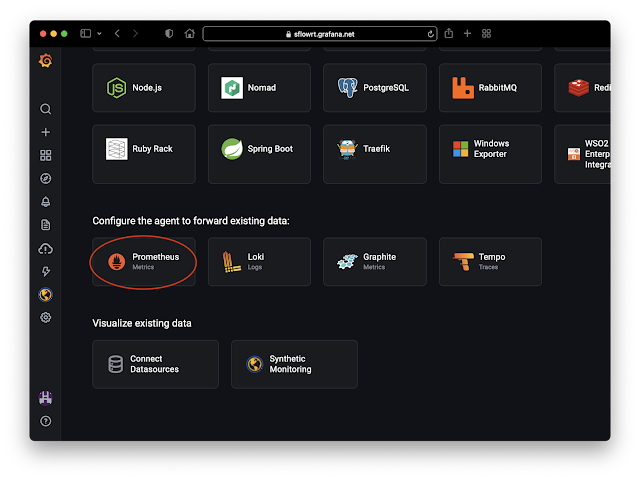

Grafana Cloud

docker run -p 8008:8008 -p 6343:6343/udp --name sflow-rt -d sflow/prometheus

Use Docker to run the pre-built sflow/prometheus image, which packages sFlow-RT with the sflow-rt/prometheus application. Configure sFlow agents to stream data to this instance.

server:

Continue reading