When It Comes to IoT, We Must Work Together to #SecureIt

My first ever rendezvous with the word “IoT” was during my final year at a college conference, when a prominent regional start-up figure dispensed an oblique reference to it. I learned that IoT was the next big thing veering towards the mass market, which would eventually change the course of everyday human existence by making our way of life more convenient. What caught my attention was the term “things” in IoT – an unbounded category which could be anything from the bed you sleep on, the clothes you drape, or even the personal toiletries you use.

The Internet of Things (IoT) is a class of devices that “can monitor their environment, report their status, receive instructions, and even take action based on the information they receive.” IoT connotes not just the device but also the complex network connected to the device. Multiple studies have revealed that there are more connected devices than people on the planet. Although the combination of computers and networks with devices has existed for a long time, it was previously not integrated into consumer devices and durable goods used in ordinary day-to-day life. Furthermore, IoT being an evolving concept, exhibiting a range of ever-changing features, Continue reading

Weekly Show 412: What Managers Want From Their Teams

What does your manager want? On today's Weekly Show we talk to two managers to find out how they work with their teams, what they see as their roles, how they hire, and much more. Our guests are Michael Bushong and Omar Sultan.

The post Weekly Show 412: What Managers Want From Their Teams appeared first on Packet Pushers.

Cumulus, Facebook Push Open Data Center Interconnect

Cumulus Networks launched a transponder abstraction interface, which is a vendor-agnostic way to manage transponders. This makes data center interconnect technology more open.

Stuff The Internet Says On Scalability For October 19th, 2018

Hey, wake up! It's HighScalability time:

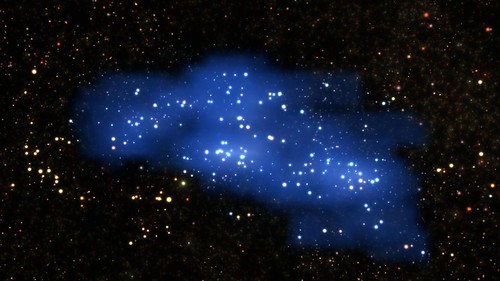

Now that's a cloud! The largest structure ever found in the early universe. The proto-supercluster Hyperion may contain thousands of galaxies or more. (Science)

Do you like this sort of Stuff? Please support me on Patreon. I'd really appreciate it. Know anyone looking for a simple book explaining the cloud? Then please recommend my well reviewed book: Explain the Cloud Like I'm 10. They'll love it and you'll be their hero forever.

- four petabytes: added to Internet Archive per year; 60,000: patents donated by Microsoft to the Open Invention Network; 30 million: DuckDuckGo daily searches; 5 seconds: Google+ session length; 1 trillion: ARM device goal; $40B: Softbank investment in 5G; 30: Happy Birthday IRC!; 97,600: Backblaze hard drives; 15: new Lambda function minute limit; $120 Billion: Uber IPO; 12%: slowdown in global growth of internet access; 25%: video ad spending in US; 1 billion: metrics per minute processed at Netflix; 900%: inflated Facebook ad-watch times; 31 million: GitHub users; 19: new AWS Public datasets; 25%: IPv6 adoption; 300: requests for Nest data; 913: security Continue reading

Twilio Created a NB-IoT Developer Platform for T-Mobile

The platform includes NB-IoT modules, programmable SIM cards, and a software development kit.

Huawei Shakes Up the AI Status Quo

Google, Nvidia, and IBM face a formidable new AI challenger in Huawei.

SDxCentral’s Weekly Roundup — October 19, 2018

Samsung buys an AI firm; AWS publishes an SLA for serverless computing; Oracle boosts Health Sciences Cloud with acquisition.

10 Security Startups that Investors Are Funding

These 10 security companies raised more than $499 million in just the last month as security remains a top priority for many enterprises.

Diving into the DNS

DNS OARC organizes two meetings a year. They are two-day meetings with a concentrated dose of DNS esoterica. Here’s what I took away from the recent 29th meeting of OARC, held in Amsterdam in mid-October 2018.

Short Take – Problems And Solutions

In this Network Collective Short Take, Russ shares his thoughts on the value of really understanding the problem at hand before settling on a specific solution.

The post Short Take – Problems And Solutions appeared first on Network Collective.

IXPs: Why Is the Middle East Lagging Behind?

On October 2nd, the Internet Society was happy to support the ITU in organizing the IXP Workshop on Peering and Interconnection in the Arab World, “Towards unlocking regional interconnection opportunities.” It was held in Manama, Bahrain, and kindly hosted by the Telecommunications Regulatory Authority (TRA) of Bahrain. This workshop was held on the eve of the Annual Meeting of the Arab ICT Regulators Network (AREGNET), and 30 regulators and 10 operators attended from all over the Arab region.

The workshop started with an overview of the Arab peering landscape given by Christine Arida, Director for Telecom Services and Planning at the National Telecom Regulatory Authority of Egypt. Christine showed that the region is well served by undersea cables, with the oldest IXP established 20 years ago. However, all countries have either an underperforming IXP or do not have one at all. Regionally, cross-border interconnection is almost non-existent, with very few exceptions, and most of the traffic is exchanged in London and Marseilles.

The debate started with an acknowledgement that strong and vibrant IXPs are needed in the Arab region. IXPs are a means and not the end… They are the enablers of digital transformation and a means to attract investment. Cheaper Continue reading

Automating UCS Manager Configuration with Ansible

Cisco UCS Manager is the management plane service for Cisco UCS solutions. Using a policy-based management approach, server configurations are decoupled from the physical hardware.

New: Expert ipSpace.net Subscription

Earlier this month I got this email from someone who had attended one of my online courses before and wanted to watch another one of them:

Is it possible for you to bundle a 1 year subscription at no extra cost if I purchase the Building Next-Generation Data Center course?

We were planning to do something along these lines for a long time, and his email was just what I needed to start a weekend-long hackathon.

End result: Expert ipSpace.net Subscription. It includes:

Read more ...

Orca: differential bug localization in large-scale services

Orca: differential bug localization in large-scale services Bhagwan et al., OSDI’18

Earlier this week we looked at REPT, the reverse debugging tool deployed live in the Windows Error Reporting service. Today it’s the turn of Orca, a bug localisation service that Microsoft have in production usage for six of their large online services. The focus of this paper is on the use of Orca with ‘Orion,’ where Orion is a codename given to a ‘large enterprise email and collaboration service that supports several millions of users, run across hundreds of thousands of machines, and serves millions of requests per second.’ We could call it ‘Office 365’ perhaps? Like REPT, Orca won a best paper award (meaning MR scooped two out of the three awards at OSDI this year!).

Orca is designed to support on-call engineers (OCEs) in quickly figuring out the change (commit) that introduced a bug to a service so that it can be backed out. (Fixes can come later!). That’s a much harder task than it sounds in highly dynamic and fast moving environments. In ‘Orion’ for example there are many developers concurrently committing code. Post review the changes are eligible for inclusion in a Continue reading

Spanning The Clouds, Public And Private, With The Kubernetes Stack

Enterprises are looking at a future of multiple clouds and hybrid clouds where they can run not only their new cloud-native applications but also migrate many of their legacy workloads and take advantage of cost savings and the agility that comes with cloud computing. …

Spanning The Clouds, Public And Private, With The Kubernetes Stack was written by Jeffrey Burt at .