AWS ABCs – Can I Firewall My Compute Instances?

In a previous post, I reviewed what a public subnet and Internet Gateway (IGW) are and how they allow outbound and inbound connectivity to instances (ie, virtual machines) running in the AWS cloud.

If you’re the least bit security conscious, your reaction might be, “No way! I can’t have my instances sitting right on the Internet without any protection”.

Fear not, reader. This post will explain the mechanisms that the Amazon Virtual Private Cloud (VPC) affords you to protect your instances.

Security Groups

In a nutshell: security groups (SGs) define what traffic is allowed to reach an instance.
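To make the idea concrete, here is a minimal sketch of a security group modeled as an allow-list: inbound traffic is dropped unless some rule matches it. This is an illustration of the concept only, not the AWS API; the `Rule` class, the `is_allowed` function, and the example web-tier rules and addresses are all hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """One hypothetical inbound allow rule: protocol, port range, source CIDR."""
    protocol: str
    from_port: int
    to_port: int
    cidr: str


def is_allowed(rules, protocol, port, source_ip):
    """Return True if any rule permits the traffic; otherwise it is denied."""
    src = ip_address(source_ip)
    return any(
        r.protocol == protocol
        and r.from_port <= port <= r.to_port
        and src in ip_network(r.cidr)
        for r in rules
    )


# Hypothetical rules for a group of web servers:
# HTTPS from anywhere, SSH only from one management range.
WEB_TIER = [
    Rule("tcp", 443, 443, "0.0.0.0/0"),
    Rule("tcp", 22, 22, "203.0.113.0/24"),
]

print(is_allowed(WEB_TIER, "tcp", 443, "198.51.100.7"))  # HTTPS from anywhere
print(is_allowed(WEB_TIER, "tcp", 22, "198.51.100.7"))   # SSH from outside the range
```

Because the list contains only allow rules, anything not explicitly listed never reaches the instance.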

“Security group” is a bit of a weird name for what is essentially a firewall that sits in front of an instance. However, it makes a bit more sense if you think about it in terms of all servers at a particular tier in an N-tier application (eg, all the web servers) or all the servers that have a common function (eg, all PostgreSQL servers), and how each group would have its own security requirements when it comes to allowed ports, protocols, and IP addresses: the security rules appropriate for a group of servers are all put together within Continue reading

A minimalist approach to network architecture

Minimalism, as a current concept, is not just about owning fewer things, or eliminating distractions, or consuming only specific coffees sold in unlabeled packaging at chairless coffee shops. Minimalism is a philosophical force and practical approach to life that, when applied correctly, can bring peace, happiness, and enrichment to your way of living. How do these core virtues of minimalism apply to network design? Read on. (And don’t worry, you can keep all of your stuff, your color TV and cell phones, and your roomy house, too – we’re just talking about networks here.)

Joshua Fields Millburn and Ryan Nicodemus, who founded theminimalists.com, sacrificed their former careers to share the concept of minimalism all over the globe, helping more than 20 million people live more meaningful lives. They’ve grounded the concepts of minimalism into a practical and elegant foundation that fits nicely in a modern society. They defined what many believe to be the core virtues of minimalism, ideas to internalize on your journey through life. When it comes to network design, here are five core virtues that prove to be incredibly valuable:

• Reclaim your time

• Create more, consume less

• Contribute beyond yourself

• Experience Continue reading

Getting Multi-cloud Right with Per-app Architectures

How by-the-app solutions can tackle common cloud migration roadblocks including ramp up, risk management, and operational overhead.

Network Troubleshooting Guidelines

It all started with an interesting discussion of weird MLAG bugs during our last Building Next-Generation Data Center online course. The discussion almost devolved into “when in doubt reload” yammering when Mark Horsfield stepped in saying “while that may be true, make sure to check and collect these things before reloading”.

I loved what he wrote so much that I asked him to turn it into a blog post… and he made it even better by expanding it into generic network troubleshooting guidelines. Enjoy!

Read more ...

Virtual Cloud Network Deep Dive: Join us in New York and Toronto!

Attention New York and Toronto, the NSX team is heading your way to deliver Deep Dive Sessions to help you get a jump start on taking your company’s networking and security to the next level!

With fall in the air, many of us are in the planning stages for big improvements for the year ahead. If your IT team is feeling pressure to increase agility, stay productive and help your company innovate, then you won’t want to miss these sessions to get a head start on the latest approach to networking and security.

The Problem with the Old Approach to Networking and Security

Traditional, hardware-based approaches to networking and security are pedantic, inflexible, and notoriously slow-moving. At the same time, the complexity around applications, services and data is increasing, while new, more sophisticated and ever-evolving threats are also in the mix – making IT teams responsible for more environments than ever before (data, cloud, branches, and the edge, oh my!). That’s all to say, there’s a lot to solve for. Luckily the NSX team has your back.

Build Your Foundation for a Virtual Cloud Network

VMware NSX® is an innovative networking and security approach that changes the Continue reading

Inspur Donates New Server Configurations to the Open Compute Project

The Chinese server vendor Inspur participates in all of the open hardware platforms, including OCP, the Open Data Center Community, and Open19.

VMworld EMEA 2018 and Spousetivities

Registration is now open for Spousetivities at VMworld EMEA 2018 in Barcelona! Crystal just opened registration in the last day or so, and I wanted to help get the message out about these activities.

Here’s a quick peek at what Crystal has lined up for Spousetivities participants:

- A visit to the coastal village of Calella de Palafrugell, the village of Llafranc, and Pals (one of the most well-preserved medieval villages in all of Catalunya), along with wine in the Empordá region

- Tour of the Dali Museum

- Lunch and tour of Girona

- A lunch-time food tour

- A visit to the Collserola Natural Park and Mount Tibidabo, along with lunch at a 16th century stone farmhouse

For even more details, visit the Spousetivities site.

These activities look amazing. Even if you’ve been to Barcelona before, these unique activities and tours are not available to the public; they’re crafted specifically for Spousetivities participants.

Prices for all these activities are reduced thanks to Veeam’s sponsorship, and to help make things even more affordable there is a Full Week Pass that gives you access to all the activities at an additional discount.

These activities will almost certainly sell out, so register today!

Side note: Continue reading

Systemd traffic marking

Monitoring Linux services describes how the open source Host sFlow agent exports metrics from services launched using systemd, the default service manager on most recent Linux distributions. In addition, the Host sFlow agent efficiently samples network traffic using Linux kernel capabilities: PCAP/BPF, nflog, and ulog.

This article describes a recent extension to the Host sFlow systemd module, mapping sampled traffic to the individual services that generate or consume it. The ability to color traffic by application greatly simplifies service discovery and service dependency mapping, making it easy to see how services communicate in a multi-tier application architecture.
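As a rough illustration of why per-service marking helps with dependency mapping, the sketch below tallies sampled bytes per (service, peer) pair. The record layout, field names, service names, and addresses are hypothetical and are not the Host sFlow export format; the only assumption is that each sample already carries the name of the service it belongs to.

```python
from collections import Counter

# Hypothetical packet samples, each already tagged with a systemd service name.
samples = [
    {"service": "apache2.service", "peer": "10.0.0.21", "bytes": 1500},
    {"service": "apache2.service", "peer": "10.0.0.21", "bytes": 600},
    {"service": "apache2.service", "peer": "10.0.0.35", "bytes": 400},
    {"service": "hsflowd.service", "peer": "10.0.0.70", "bytes": 200},
]


def bytes_by_edge(records):
    """Sum sampled bytes for every (service, peer) pair seen in the records."""
    totals = Counter()
    for rec in records:
        totals[(rec["service"], rec["peer"])] += rec["bytes"]
    return totals


edges = bytes_by_edge(samples)
for (service, peer), total in edges.most_common():
    print(f"{service} <-> {peer}: {total} bytes")
```

Each distinct pair is one edge in a service dependency graph, weighted by the traffic sampled on it.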

The following /etc/hsflowd.conf file configures the Host sFlow agent, hsflowd, to sample packets on interface eth0, monitor systemd services and mark the packet samples, and track TCP performance:

sflow {
  collector { ip = 10.0.0.70 }
  pcap { dev = eth0 }
  systemd { markTraffic = on }
  tcp { }
}

The diagram above illustrates how the Host sFlow agent is able to efficiently monitor and classify traffic. In this case both the Host sFlow agent and an Apache web server are running as services managed by systemd. A network connection, shown in Continue reading

Open Compute A Foot In the Datacenter Door For Inspur

It is certainly true that no technology company can grow if they are not able to do business in China. …

Open Compute A Foot In the Datacenter Door For Inspur was written by Timothy Prickett Morgan at .

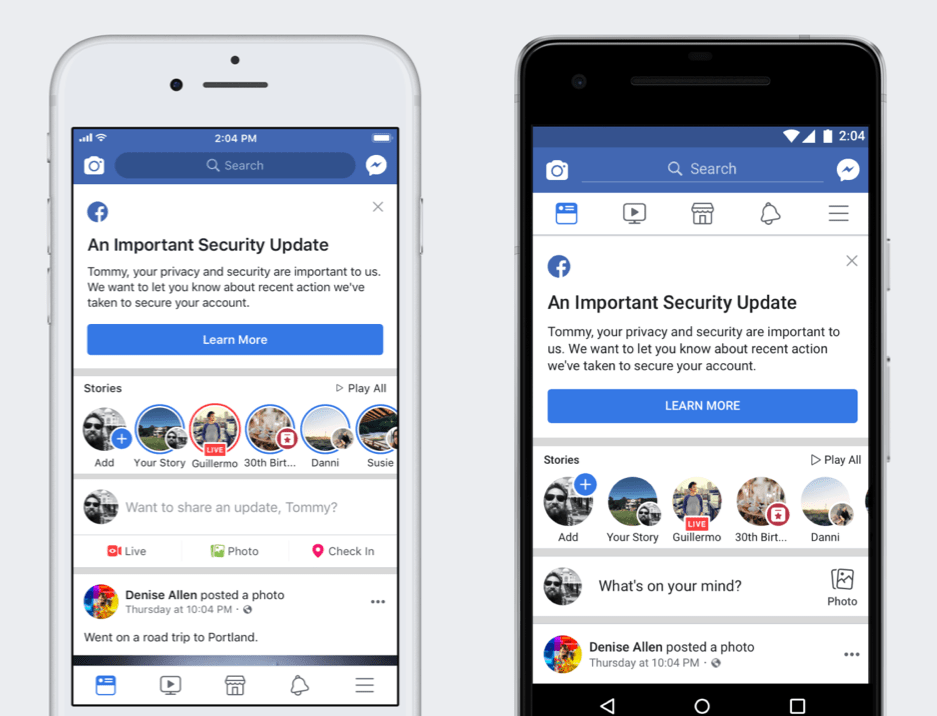

The Facebook Breach: Some Lessons for the Internet

Last week Facebook found itself at the heart of a security breach that put at risk the personal information of millions of users of the social network.

On September 28, news broke that an attacker exploited a technical vulnerability in Facebook’s code that would allow them to log into about 50 million people’s accounts.

While Facebook was quick to address the exploit and fix it, they say they don’t know if anyone’s accounts actually were breached.

This breach follows the Cambridge Analytica scandal earlier this year that resulted in the serious mishandling of the data of millions of people who use Facebook.

Both of these events illustrate that we cannot be complacent about data security. Companies that hold personal and sensitive data need to be extra vigilant about protecting their users’ data.

Yet even the most vigilant are also vulnerable. Even a single security bug can affect millions of users, as we can see.

There are a few things we can learn from this that apply to other security conversations: Doing security well is notoriously hard, and persistent attackers will find bugs to exploit, in this case a combination of three apparently unrelated ones on the Facebook platform.

This Continue reading

Microsoft Ignite Lacks Azure Pizzazz

Some in attendance at the computing giant's Ignite event thought that Azure innovation was lacking and that Google was a stronger long-term rival to AWS.

IPv6 Security Considerations

When rolling out a new protocol such as IPv6, it is useful to consider the changes to security posture, particularly the network’s attack surface. While protocol security discussions are widely available, there is often not “one place” where you can go to get information about potential attacks, references to research about those attacks, potential counters, and operational challenges. In the case of IPv6, however, there is “one place” you can find all this information: draft-ietf-opsec-v6. This document is designed to provide information to operators about IPv6 security based on solid operational experience—and it is a must read if you have either deployed IPv6 or are thinking about deploying IPv6.

The draft is broken up into four broad sections; the first is the longest, addressing generic security considerations. The first consideration is whether operators should use Provider Independent (PI) or Provider Assigned (PA) address space. One of the dangers with a large address space is the sheer size of the potential routing table in the Default Free Zone (DFZ). If every network operator opted for an IPv6 /32, the potential size of the DFZ routing table is about 4.3 billion (2^32) routing entries. If you thought converging on about 800,000 routes is Continue reading
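As a rough sanity check on the scale involved, the number of distinct /32 prefixes can be counted directly: each extra prefix bit doubles the count. The helper name `prefix_count` is hypothetical. The sketch gives an upper bound for the whole IPv6 space and, for comparison, for 2000::/3, the block from which global unicast addresses are currently allocated.

```python
def prefix_count(parent_len: int, child_len: int) -> int:
    """Number of distinct child_len-bit prefixes inside one parent_len-bit prefix."""
    if not 0 <= parent_len <= child_len <= 128:
        raise ValueError("need 0 <= parent_len <= child_len <= 128")
    return 2 ** (child_len - parent_len)


whole_space = prefix_count(0, 32)     # every possible /32
global_unicast = prefix_count(3, 32)  # /32s inside 2000::/3

print(f"{whole_space:,}")     # 4,294,967,296
print(f"{global_unicast:,}")  # 536,870,912
```

Either figure is several hundred times larger than a table of about 800,000 routes.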

AT&T Contributes Its Cell Site White Box Router Spec to the Open Compute Project

This white box reference design is available to any hardware maker to use as a guide to build cell site gateway routers. And they can be paired with disaggregated software.

Verizon Fires Up First Residential 5G Fixed Wireless Markets

Verizon is touting the fact that it is the first operator globally to deploy 5G, even though the service is based upon the company’s own 5G TF standard.

Boosting the Clock for High Performance FPGA Inference

A few years ago the market was rife with deep learning chip startups aiming at AI training. …

Boosting the Clock for High Performance FPGA Inference was written by Nicole Hemsoth at .