KU053: Migrate Legacy Applications to Kubernetes with Konveyor

Whether you want to migrate legacy applications to Kubernetes in order to save the whales or for any other reason, Konveyor is here to help. Savitha Raghunathan joins us today to walk us through the open source tool. The basics: You input the application’s source code (any language that has a language server) and Konveyor...

Collaborate to Trust Telco SaaS

Unlocking the full potential of telecom in the SaaS era relies on comprehensive strategies and a constant commitment to evolving security practices.

Looking To Adopt Generative AI Within Your Organization?

SPONSORED POST: Generative AI is the subject of significant interest from enterprises busy looking to use it to help them improve business processes and build innovative new applications and services which can attract new customers and grow revenue. …

Looking To Adopt Generative AI Within Your Organization? was written by Timothy Prickett Morgan at The Next Platform.

LISP vs EVPN: Mobility in Campus Networks

I decided not to get involved in the EVPN-versus-LISP debates anymore; I’d written everything I had to say about LISP. However, I still get annoyed when experienced networking engineers fall for marketing gimmicks disguised as technical arguments. Here’s a recent one:

With MTIA v2 Chip, Meta Can Do AI Inference, But Not Training

If you control your code base and you have only a handful of applications that run at massive scale – what some have called hyperscale – then you, too, can win the Chip Jackpot like Meta Platforms and a few dozen companies and governments in the world have. …

With MTIA v2 Chip, Meta Can Do AI Inference, But Not Training was written by Timothy Prickett Morgan at The Next Platform.

Gelsinger: With Gaudi 3 and Xeon 6, AI Workloads Will Come Our Way

The steady rise of AI over the past several years – and the accelerated growth with the introduction of generative AI since OpenAI’s launch of ChatGPT in November 2022 – has shifted Intel’s status as a challenger in a chip market that it long had dominated. …

Gelsinger: With Gaudi 3 and Xeon 6, AI Workloads Will Come Our Way was written by Jeffrey Burt at The Next Platform.

Narrowboat: Lessons learnt

At this stage of the build internally there is only really the bedroom, snags and trims (windows, ceiling edges and doors) left to do. I am currently in Australia having a bit of RnR so this is a reflective post to show the build at its present state and go through the things I have learnt along the way. I could plan all I like but as I haven’t lived on a boat before there were always going to be a few wrong design decisions.

D2C239: “(Almost) Every Infrastructure Decision I Endorse or Regret”

You can’t just drop a knife on fish and expect there to be sushi. Jack Lindamood joins us today to share his metaphors and thoughts on picking the right IT tools and processes as outlined in his popular article, “(Almost) Every Infrastructure Decision I Endorse or Regret after 4 Years Running Infrastructure at a Startup.”...

Modernizing IT Networks for Higher Education

In today’s data-driven world, higher educational institution networks and their management must be modernized to address the needs of everyone across campus. Enterprises face similar challenges and also need to modernize their networks.

Stateful Firewall Cluster High Availability Theater

Dmitry Perets wrote an excellent description of how typical firewall cluster solutions implement control-plane high availability, in particular, the routing protocol Graceful Restart feature (slightly edited):

Most of the HA clustering solutions for stateful firewalls that I know implement a single-brain model, where the entire cluster is seen by the outside network as a single node. The node that is currently primary runs the control plane (hence, I call it single-brain). Sessions and the forwarding plane are synchronized between the nodes.

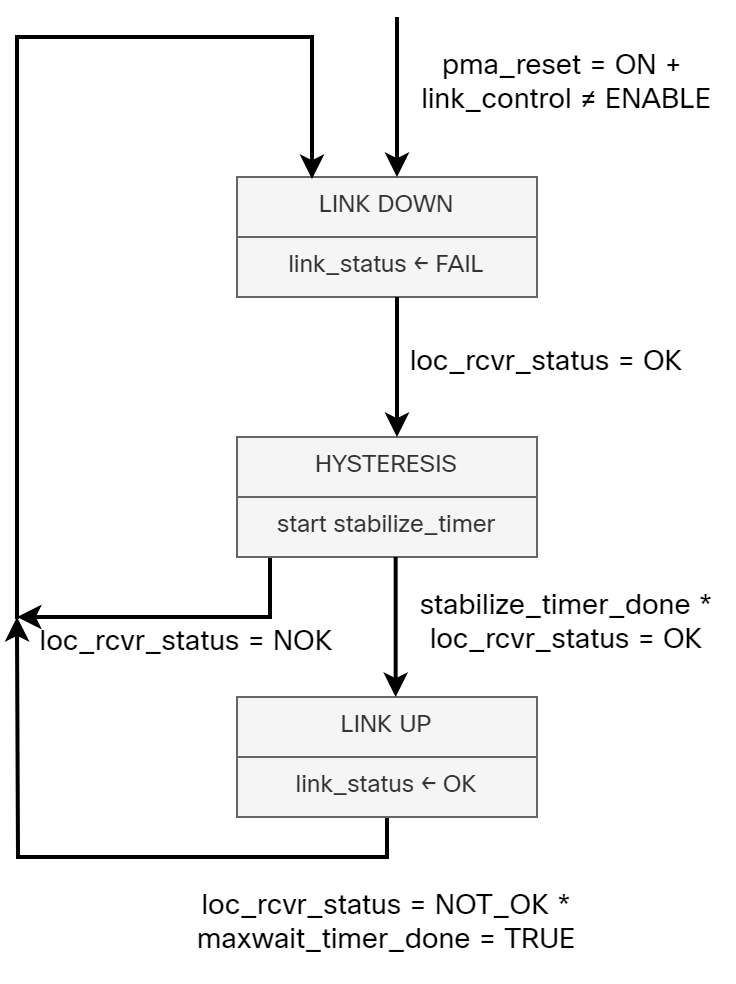

1000BASE-T Part 4 – Link Down Detection

In the previous three parts, we learned about all the interesting things that go on in the PHY with scrambling, descrambling, synchronization, auto negotiation, FEC encoding, and so on. This is all essential knowledge that we need to have to understand how the PHY can detect that a link has gone down, or is performing so badly that it doesn’t make sense to keep the link up.

What Does IEEE 802.3 1000BASE-T Say?

The function in 1000BASE-T that is responsible for monitoring the status of the link is called link monitor and is defined in 40.4.2.5. The standard does not define much on what goes on in link monitor, though. Below is an excerpt from the standard:

Link Monitor determines the status of the underlying receive channel and communicates it via the variable link_status. Failure of the underlying receive channel typically causes the PMA’s clients to suspend normal operation.

The Link Monitor function shall comply with the state diagram of Figure 40–17.

The state diagram (redrawn by me) is shown below:

While 1000BASE-T leaves what the PHY monitors in link monitor to the implementer, there are still some interesting variables and timers that you should be ...
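To make the idea of a link monitor driving `link_status` more concrete, here is a minimal Python sketch of a three-state monitor with hysteresis. It is not a reproduction of Figure 40–17: the state names, the `loc_rcvr_status_ok` input, and the `stabilize_timer_done` and `maxwait_timer_done` flags are assumptions chosen for illustration, and the transitions should be checked against the standard before relying on them.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    LINK_DOWN = "LINK_DOWN"
    HYSTERESIS = "HYSTERESIS"
    LINK_UP = "LINK_UP"


@dataclass
class LinkMonitor:
    """Illustrative link monitor; names and transitions are assumptions."""

    state: State = State.LINK_DOWN
    link_status: str = "FAIL"

    def step(self, loc_rcvr_status_ok, stabilize_timer_done=False,
             maxwait_timer_done=False, reset=False):
        """Evaluate one transition and return the resulting link_status."""
        if reset:
            # A reset always forces the monitor back to LINK_DOWN.
            self.state = State.LINK_DOWN
        elif self.state is State.LINK_DOWN:
            # A healthy receiver starts the hysteresis (stabilization) period.
            if loc_rcvr_status_ok:
                self.state = State.HYSTERESIS
        elif self.state is State.HYSTERESIS:
            if not loc_rcvr_status_ok:
                # Receiver failed before the link stabilized.
                self.state = State.LINK_DOWN
            elif stabilize_timer_done:
                self.state = State.LINK_UP
        elif self.state is State.LINK_UP:
            # Assumed rule: drop the link only when the receiver is bad
            # AND the maximum wait time has expired.
            if not loc_rcvr_status_ok and maxwait_timer_done:
                self.state = State.LINK_DOWN

        self.link_status = "OK" if self.state is State.LINK_UP else "FAIL"
        return self.link_status


monitor = LinkMonitor()
monitor.step(loc_rcvr_status_ok=True)                             # enters HYSTERESIS
monitor.step(loc_rcvr_status_ok=True, stabilize_timer_done=True)  # enters LINK_UP
```

The point of the intermediate state is that a briefly good receiver does not immediately report the link as up, and in this sketch a briefly bad receiver does not immediately report it as down.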

HS069: Regulating AI

In today’s episode Greg and Johna spar over how, when, and why to regulate AI. Does early regulation lead to bad regulation? Does late regulation lead to a situation beyond democratic control? Comparing nascent regulation efforts in the EU, UK, and US, they analyze socio-legal principles like privacy and distributed liability. Most importantly, Johna drives...

PP009: Don’t Forget the Firmware

If your approach to firmware is that you don’t bother it as long as it doesn’t bother you, you might want to listen to this episode. Concerns about supply chain vulnerabilities are on the rise and for good reason: Attackers are targeting firmware because compromising this software can allow attackers to persist on systems after...

Google Joins The Homegrown Arm Server CPU Club

If you are wondering why Intel chief executive officer Pat Gelsinger has been working so hard to get the company’s foundry business not only back on track but utterly transformed into a merchant foundry that, by 2030 or so, can take away some business from archrival Taiwan Semiconductor Manufacturing Co, the reason is simple. …

Google Joins The Homegrown Arm Server CPU Club was written by Timothy Prickett Morgan at The Next Platform.