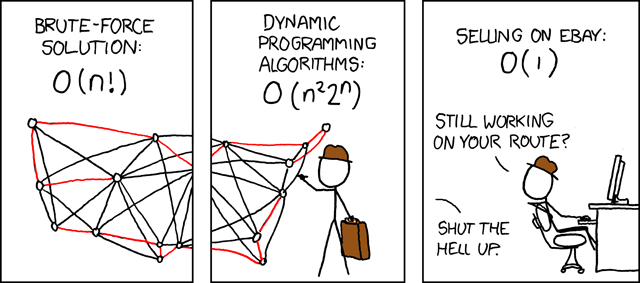

Balancing MMLU With The Shortest Path Cost Constraints

It is almost impossible for someone coming from a purely mathematical background with little exposure to applications to understand how to go about formulating a real-world problem in mathematical terms. - Dantzig.

In our previous post, we explored the Min Max Link Utilization (MMLU) problem using Linear Programming. The focus was on distributing network traffic so that the utilization of the most heavily loaded link is as low as possible. However, that approach did not consider the impact on path lengths, so flows could be placed on arbitrarily long paths. We mentioned this only in passing, so I thought it would be worth exploring an extended formulation that adds a constraint to balance minimizing link utilization against limiting how far paths deviate from their shortest counterparts.

The core objective remains the same—to minimize the maximum link utilization across the network. However, we now introduce a conflicting constraint that aims to limit the extent to which paths can deviate from their shortest routes. This constraint is crucial for keeping path lengths within an acceptable range, as excessively long paths can degrade the user experience.
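One way such a formulation could be written down is sketched below. The notation is an assumption made here for illustration, not taken from the post: a directed graph $G=(V,E)$ with link capacities $c_e$ and link costs $w_e$, demands $k \in K$ with source $s_k$, destination $t_k$, and volume $d_k$, shortest-path cost $SP_k$ for each demand, and a tolerated stretch $\epsilon \ge 0$. The variables are the per-demand link flows $f_e^k$ and the maximum utilization $U$.

$$
\begin{aligned}
\min \quad & U \\
\text{s.t.} \quad
& \sum_{e \in \delta^{+}(v)} f_e^k - \sum_{e \in \delta^{-}(v)} f_e^k =
\begin{cases}
d_k & \text{if } v = s_k \\
-d_k & \text{if } v = t_k \\
0 & \text{otherwise}
\end{cases}
&& \forall v \in V,\ \forall k \in K \\
& \sum_{k \in K} f_e^k \le U \, c_e && \forall e \in E \\
& \sum_{e \in E} w_e \, f_e^k \le (1 + \epsilon)\, d_k \, SP_k && \forall k \in K \\
& f_e^k \ge 0,\quad U \ge 0
\end{aligned}
$$

In this sketch the last family of constraints bounds the flow-weighted average path cost of each demand rather than the cost of every individual path. Setting $\epsilon = 0$ forces all traffic onto shortest paths, while a large $\epsilon$ recovers the unconstrained MMLU problem.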

Mathematically, we are faced with two opposing constraints. On the one hand, we strive to minimize link utilization, Continue reading

Booting Raspberry Pi 3 B from USB Drive

USB drives typically have a longer lifespan and better durability compared to SD cards, especially […]

The post Booting Raspberry Pi 3 B from USB Drive first appeared on Brezular's Blog.

The Complexity Cycle: Infrastructure Sprawl is a Killer

Eliminating complexity by standardizing with a platform approach is the way to put the brakes on the complexity cycle.

HN728: How Drivenets Leverages Ethernet Fabrics For AI Networking (Sponsored)

To run AI workloads, a network needs thousands of GPUs and those GPUs must operate in sync. If there is congestion or dropped frames, very expensive efforts could be delayed or disrupted. While there are advantages to using Ethernet for AI networking (including engineers well-trained in the protocol and a robust ecosystem), it wasn’t designed... Read more »

Cloudflare acquires Baselime to expand serverless application observability capabilities

Today, we’re thrilled to announce that Cloudflare has acquired Baselime.

The cloud is changing. Just a few years ago, serverless functions were revolutionary. Today, entire applications are built on serverless architectures, from compute to databases, storage, queues, etc. — with Cloudflare leading the way in making it easier than ever for developers to build, without having to think about their architecture. And while the adoption of serverless has made it simple for developers to run fast, it has also made one of the most difficult problems in software even harder: how the heck do you unravel the behavior of distributed systems?

When I started Baselime 2 years ago, our goal was simple: enable every developer to build, ship, and learn from their serverless applications such that they can resolve issues before they become problems.

Since then, we built an observability platform that enables developers to understand the behaviour of their cloud applications. It’s designed for high cardinality and dimensionality data, from logs to distributed tracing with OpenTelemetry. With this data, we automatically surface insights from your applications, and enable you to quickly detect, troubleshoot, and resolve issues in production.

In parallel, Cloudflare has been busy the past few years building Continue reading

Browser Rendering API GA, rolling out Cloudflare Snippets, SWR, and bringing Workers for Platforms to all users

Browser Rendering API is now available to all paid Workers customers with improved session management

In May 2023, we announced the open beta program for the Browser Rendering API. Browser Rendering allows developers to programmatically control and interact with a headless browser instance and create automation flows for their applications and products.

At the same time, we launched a version of the Puppeteer library that works with Browser Rendering. With that, developers can use a familiar API on top of Cloudflare Workers to create all sorts of workflows, such as taking screenshots of pages or automatic software testing.

Today, we take Browser Rendering one step further, taking it out of beta and making it available to all paid Workers' plans. Furthermore, we are enhancing our API and introducing a new feature that we've been discussing for a long time in the open beta community: session management.

Session Management

Session management allows developers to reuse previously opened browsers across Worker's scripts. Reusing browser sessions has the advantage that you don't need to instantiate a new browser for every request and every task, Continue reading
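The reuse pattern described above can be sketched roughly as follows. This is a minimal sketch, not the official example: it assumes the `puppeteer` argument behaves like the `@cloudflare/puppeteer` module, with `sessions()`, `connect()`, and `launch()` methods that take the Browser Rendering binding, and that a session object carries a `sessionId` plus a `connectionId` when a Worker is already attached. Check those names against the current documentation before relying on them. The helper name `acquireBrowser` is hypothetical.

```javascript
// Sketch only. `puppeteer` is assumed to look like the @cloudflare/puppeteer
// module and `endpoint` like a Browser Rendering binding from `env`.
async function acquireBrowser(puppeteer, endpoint) {
  // Ask for the browser sessions that are currently open.
  const sessions = await puppeteer.sessions(endpoint);

  // Assumption: a session with a connectionId already has a Worker attached,
  // so only sessions without one are candidates for reuse.
  const idle = sessions.filter((session) => !session.connectionId);

  for (const { sessionId } of idle) {
    try {
      const browser = await puppeteer.connect(endpoint, sessionId);
      return { browser, reused: true };
    } catch (err) {
      // The session may have closed or been claimed meanwhile; try the next.
    }
  }

  // Nothing reusable was found, so start a fresh browser.
  const browser = await puppeteer.launch(endpoint);
  return { browser, reused: false };
}
```

Passing the module in as a parameter keeps the helper easy to exercise with a stub. For the session to remain available to later requests, the caller would presumably detach from the browser when finished rather than close it.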

Cloudflare acquires PartyKit to allow developers to build real-time multi-user applications

We're thrilled to announce that PartyKit, an open source platform for deploying real-time, collaborative, multiplayer applications, is now a part of Cloudflare. This acquisition marks a significant milestone in our journey to redefine the boundaries of serverless computing, making it more dynamic, interactive, and, importantly, stateful.

Defining the future of serverless compute around state

Building real-time applications on the web has always been difficult. Not only is it a distributed systems problem, but you need to provision and manage infrastructure, databases, and other services to maintain state across multiple clients. This complexity has traditionally been a barrier to entry for many developers, especially those who are just starting out.

We announced Durable Objects in 2020 as a way of building synchronized real time experiences for the web. Unlike regular serverless functions that are ephemeral and stateless, Durable Objects are stateful, allowing developers to build applications that maintain state across requests. They also act as an ideal synchronization point for building real-time applications that need to maintain state across multiple clients. Combined with WebSockets, Durable Objects can be used to build a wide range of applications, from multiplayer games to collaborative drawing tools.

In 2022, PartyKit began as a project to Continue reading

Blazing fast development with full-stack frameworks and Cloudflare

Hello web developers! Last year we released a slew of improvements that made deploying web applications on Cloudflare much easier, and in response we’ve seen a large growth of Astro, Next.js, Nuxt, Qwik, Remix, SolidStart, SvelteKit, and other web apps hosted on Cloudflare. Today we are announcing major improvements to our integration with these web frameworks that make it easier to develop sophisticated applications that use our D1 SQL database, R2 object store, AI models, and other powerful features of Cloudflare’s developer platform.

In the past, if you wanted to develop a web framework-powered application with D1 and run it locally, you’d have to build a production build of your application, and then run it locally using `wrangler pages dev`. While this worked, each of your code iterations would take seconds, or tens of seconds for big applications. Iterating using production builds is simply too slow, pulls you out of the flow, and doesn’t allow you to take advantage of all the DX optimizations that framework authors have put a lot of hard work into. This is changing today!

Our goal is to integrate with web frameworks in the most natural way possible, without developers having to learn Continue reading

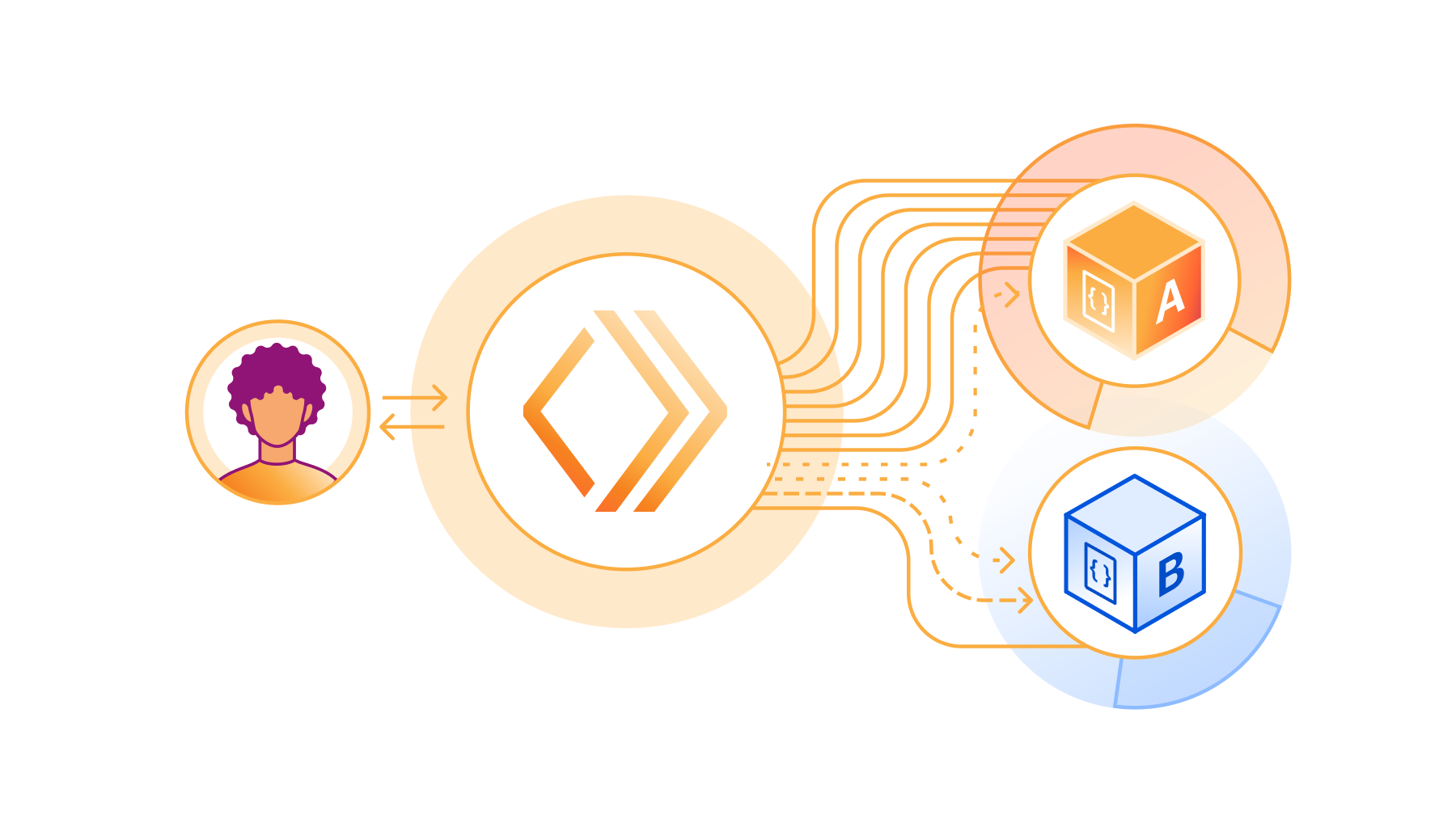

We’ve added JavaScript-native RPC to Cloudflare Workers

Cloudflare Workers now features a built-in RPC (Remote Procedure Call) system enabling seamless Worker-to-Worker and Worker-to-Durable Object communication, with almost no boilerplate. You just define a class:

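```javascript
// WorkerEntrypoint is provided by the Workers runtime.
import { WorkerEntrypoint } from "cloudflare:workers";

export class MyService extends WorkerEntrypoint {
  // Every public method becomes callable over RPC.
  sum(a, b) {
    return a + b;
  }
}
```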

And then you call it:

```javascript
let three = await env.MY_SERVICE.sum(1, 2);
```

No schemas. No routers. Just define methods of a class. Then call them. That's it.

But that's not it

This isn't just any old RPC. We've designed an RPC system so expressive that calling a remote service can feel like using a library – without any need to actually import a library! This is important not just for ease of use, but also security: fewer dependencies means fewer critical security updates and less exposure to supply-chain attacks.

To this end, here are some of the features of Workers RPC:

- For starters, you can pass Structured Clonable types as the params or return value of an RPC. (That means that, unlike JSON, Dates just work, and you can even have cycles.)

- You can additionally pass functions in the params or return value of other functions. When the other side calls the function you passed to Continue reading
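The first point in the list, that Dates and cycles survive where JSON would not, can be illustrated locally with the standard `structuredClone()` function. This is only a sketch of the structured clone behavior itself, run in a single process; it does not go through Workers RPC, and the object shape and variable names are made up for the example.

```javascript
// A value with a Date and a reference back to itself.
const original = { when: new Date(0), self: null };
original.self = original;

// Structured clone keeps the Date as a Date and preserves the cycle.
const copy = structuredClone(original);
const dateSurvives = copy.when instanceof Date;
const cyclePreserved = copy.self === copy;

// A JSON round trip turns the Date into a plain string...
const viaJson = JSON.parse(JSON.stringify({ when: new Date(0) }));
const jsonDateType = typeof viaJson.when;

// ...and refuses to serialize the cyclic object at all.
let jsonCycleError = null;
try {
  JSON.stringify(original);
} catch (err) {
  jsonCycleError = err;
}

console.log({ dateSurvives, cyclePreserved, jsonDateType });
console.log(jsonCycleError instanceof TypeError);
```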

Community Update: empowering startups building on Cloudflare and creating an inclusive community

With millions of developers around the world building on Cloudflare, we are constantly inspired by what you all are building with us. Every Developer Week, we’re excited to get your hands on new products and features that can help you be more productive, and creative, with what you’re building. But we know our job doesn’t end when we put new products and features in your hands during Developer Week. We also need to cultivate welcoming community spaces where you can come get help, share what you’re building, and meet other developers building with Cloudflare.

We’re excited to close out Developer Week by sharing updates on our Workers Launchpad program, our latest Developer Challenge, and the work we’re doing to ensure our community spaces – like our Discord and Community forums – are safe and inclusive for all developers.

Helping startups go further with Workers Launchpad

In late 2022, we initiated the $2 billion Workers Launchpad Funding Program aimed at aiding the more than one million developers who use Cloudflare's Developer Platform. This initiative particularly benefits startups that are investing in building on Cloudflare to propel their business growth.

The Workers Launchpad Program offers a variety of resources to help builders Continue reading

Why Are We Using EVPN Instead of SPB or TRILL?

Dan left an interesting comment on one of my previous blog posts:

It strikes me that the entire industry lost out when we didn’t do SPB or TRILL. Specifically, I like how Avaya did SPB.

Oh, we did TRILL. Three vendors did it in different proprietary ways, but I’m digressing.

Technical Debt and the Hidden Cost of Network Management: Why it’s Time to Revisit Your Network Foundations

Maintaining existing network infrastructures often incurs huge technical debt before newer technologies are adopted over time.

Intel’s Chips No Longer Pay More Than Their Fair Share Of Foundry Costs

The biggest benefit that is coming from the separation of the Intel chip design and marketing business from its foundry operations is that Intel’s chip product groups no longer have to shoulder the totality of the immense costs of its manufacturing operations. …

Intel’s Chips No Longer Pay More Than Their Fair Share Of Foundry Costs was written by Timothy Prickett Morgan at The Next Platform.

Hedge 220: The Cost of the Cloud

Cloud services are all the rage right now, but are they worth it? There are many aspects to the question, and the answer is almost always going to be “it depends.” Do you really need to spin up capacity more quickly than you can buy hardware and get it running? Do you really need to be able to spin capacity down without leaving any hardware behind? Is cloud really the best use of your team’s time and talent?

David Heinemeier Hansson joins Tom and Russ to talk about the economics and uses of cloud, and why his company has moved away from public cloud services.

IPB148: Microsoft to Expand CLAT Support in Windows 11

Today Tom, Scott, and Ed discuss the exciting announcement in the IPv6 world: Microsoft is expanding its CLAT support in Windows 11. This means enterprises can be even more comfortable transitioning to an IPv6-only network: Now not only do they have DNS64 and NAT64 to translate IPv4 to IPv6, but they have CLAT for any apps... Read more »

Celestial AI Wants To Break The Memory Wall, Fuse HBM With DDR5

In 2024, there is no shortage of interconnects if you need to stitch tens, hundreds, thousands, or even tens of thousands of accelerators together. …

Celestial AI Wants To Break The Memory Wall, Fuse HBM With DDR5 was written by Tobias Mann at The Next Platform.

New tools for production safety — Gradual deployments, Source maps, Rate Limiting, and new SDKs

2024’s Developer Week is all about production readiness. On Monday, April 1, we announced that D1, Queues, Hyperdrive, and Workers Analytics Engine are ready for production scale and generally available. On Tuesday, April 2, we announced the same about our inference platform, Workers AI. And we’re not nearly done yet.

However, production readiness isn’t just about the scale and reliability of the services you build with. You also need tools to make changes safely and reliably. You depend not just on what Cloudflare provides, but on being able to precisely control and tailor how Cloudflare behaves to the needs of your application.

Today we are announcing five updates that put more power in your hands – Gradual Deployments, source mapped stack traces in Tail Workers, a new Rate Limiting API, brand-new API SDKs, and updates to Durable Objects – each built with mission-critical production services in mind. We build our own products using Workers, including Access, R2, KV, Waiting Room, Vectorize, Queues, Stream, and more. We rely on each of these Continue reading

What’s new with Cloudflare Media: updates for Calls, Stream, and Images

Our customers use Cloudflare Calls, Stream, and Images to build live, interactive, and real-time experiences for their users. We want to reduce friction by making it easier to get data into our products. This also means providing transparent pricing, so customers can be confident that costs make economic sense for their business, especially as they scale.

Today, we’re introducing four new improvements to help you build media applications with Cloudflare:

- Cloudflare Calls is in open beta with transparent pricing

- Cloudflare Stream has a Live Clipping API to let your viewers instantly clip from ongoing streams

- Cloudflare Images has a pre-built upload widget that you can embed in your application to accept uploads from your users

- Cloudflare Images lets you crop and resize images of people at scale with automatic face cropping

Build real-time video and audio applications with Cloudflare Calls

Cloudflare Calls is now in open beta, and you can activate it from your dashboard. Your usage will be free until May 15, 2024. Starting May 15, 2024, customers with a Calls subscription will receive the first terabyte each month for free, with any usage beyond that charged at $0.05 per real-time gigabyte. Additionally, there are no charges for Continue reading
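As a rough illustration of the pricing stated above (first terabyte free each month, then $0.05 per real-time gigabyte), the monthly charge could be estimated as below. This sketch assumes 1 TB is counted as 1000 GB and ignores any other terms of the pricing, including whatever the truncated sentence goes on to exclude; the function and constant names are hypothetical.

```javascript
// Assumption: the free terabyte is counted as 1000 GB.
const FREE_GB_PER_MONTH = 1000;
// $0.05 per GB, kept in cents to avoid floating-point drift.
const CENTS_PER_GB = 5;

// Estimate the monthly Calls charge in US dollars for a given usage in GB.
function estimateCallsCostUsd(usageGb) {
  if (!Number.isFinite(usageGb) || usageGb < 0) {
    throw new RangeError("usageGb must be a non-negative number");
  }
  // Only usage beyond the free allowance is billable.
  const billableGb = Math.max(0, usageGb - FREE_GB_PER_MONTH);
  return (billableGb * CENTS_PER_GB) / 100;
}

console.log(estimateCallsCostUsd(800));  // within the free allowance
console.log(estimateCallsCostUsd(1500)); // 500 GB billable
```

Under these assumptions, 1500 GB in a month leaves 500 GB billable, which comes to $25.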