‘Slingshot’ Cyber Espionage Campaign Hacks Routers

Security researchers uncover advanced hacking group targeting MikroTik routers in Africa and the Middle East.

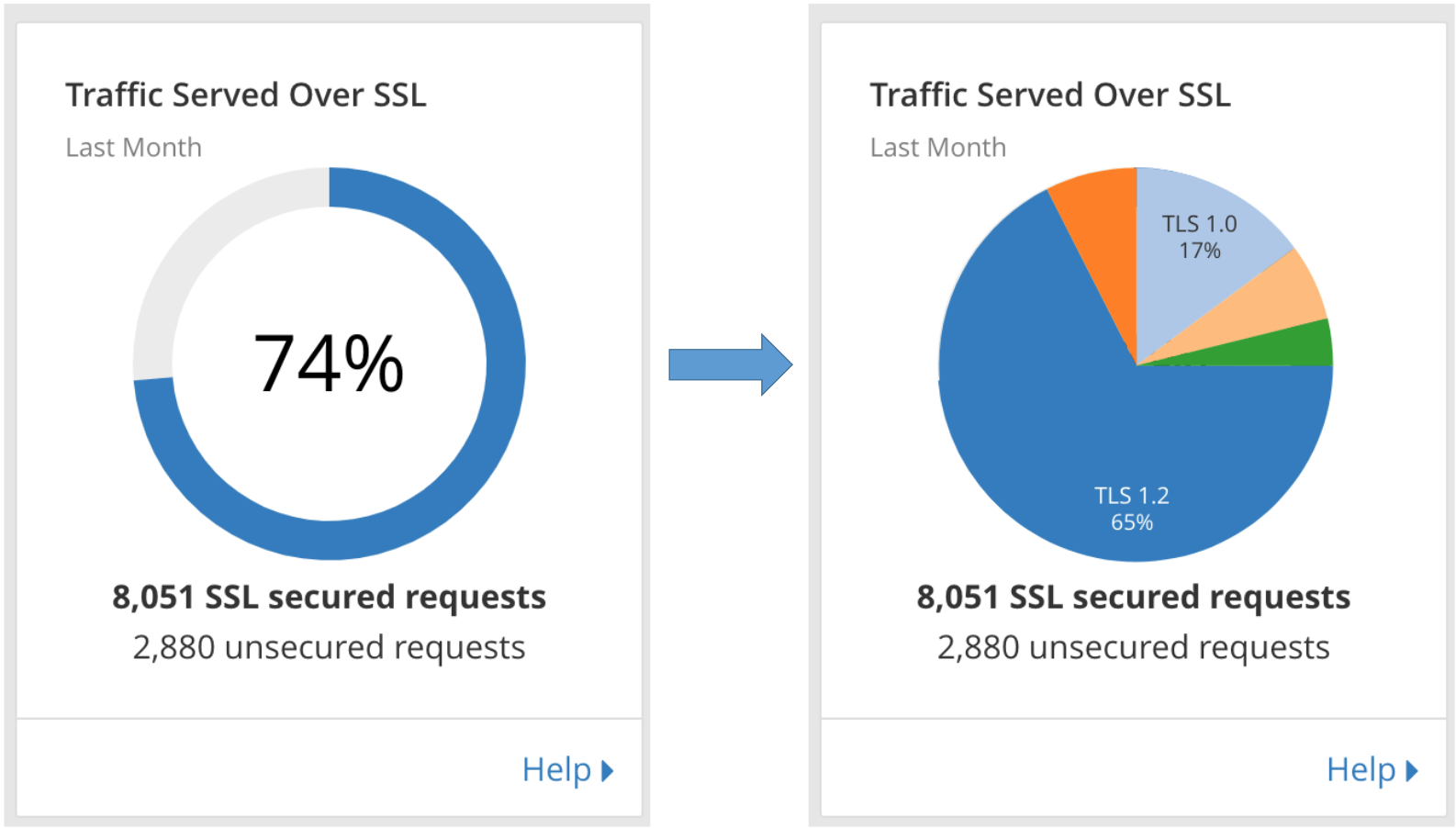

On June 4, Cloudflare will be dropping support for TLS 1.0 and 1.1 on api.cloudflare.com. Additionally, the dashboard will be moved from www.cloudflare.com/a to dash.cloudflare.com and will require a browser that supports TLS 1.2 or higher.

No changes will be made to customer traffic that is proxied through our network, though you may decide to enforce a minimum version for your own traffic. We will soon expose TLS analytics that indicate the percentage of connections to your sites using each version from TLS 1.0 to 1.3, along with controls to set a specific minimum version. Currently, you may enforce version 1.2 or higher using the Require Modern TLS setting.

Prior to June 4, API calls made with TLS 1.0 or 1.1 will have warning messages inserted into responses, and dashboard users will see a banner encouraging them to upgrade their browsers. Additional details on these changes and a complete schedule of planned events can be found in the timeline below.
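For scripts that call the API, one way to stay clear of the warnings and the June 4 cutoff is to refuse anything older than TLS 1.2 on the client side. Below is a minimal sketch using Python's standard `ssl` module (3.7 or later); the helper name `make_modern_tls_context` is hypothetical, and the snippet opens no connection.

```python
import ssl


def make_modern_tls_context() -> ssl.SSLContext:
    """Hypothetical helper: a client context that refuses TLS 1.0 and 1.1.

    Certificate verification and hostname checking keep the secure
    defaults provided by ssl.create_default_context().
    """
    ctx = ssl.create_default_context()
    # Any handshake that would negotiate TLS 1.0 or 1.1 now fails outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_modern_tls_context()
print(ctx.minimum_version.name)  # TLSv1_2
```

The resulting context can be passed as the `context` argument of `urllib.request.urlopen` or `http.client.HTTPSConnection`; a server that only offers TLS 1.0 or 1.1 then fails the handshake instead of being used silently.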

Transport Layer Security (TLS) is the protocol used on the web today to encrypt HTTPS connections. Version 1.0 was standardized almost 20 years ago as the successor to SSL …

Thanks for checking out the Getting Started series! This quick tutorial lists the basic steps needed to upgrade Red Hat Ansible Tower in a standalone configuration. Specifically, we'll upgrade Ansible Tower 3.1.0 to the latest (as of this writing) version, 3.2.2, in a few simple steps. There are a few things you'll need to keep in mind while upgrading (e.g., editing the inventory file appropriately), and each example comes with a description.

The steps to upgrade are similar to those for installing Ansible Tower. The original inventory file from the install should already contain the hostnames and variables you'll be using, so we suggest working from your current install's inventory file to populate the upgrade file.

Your older inventory file may have some different lines than the newer upgrade version, due to updated configuration options or added features. In this example, the difference between the 3.1.0 and the 3.2.2 inventory files is the added ability to enable isolated key generation for clustered installs. See below for a side-by-side comparison:

| Ansible Tower 3.1.0 | Ansible Tower 3.2.2 |
| --- | --- |
| [tower] | [tower] |
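Carrying values from the old inventory into the new one by hand is easy to get wrong. The sketch below is a hypothetical helper, not part of the Tower installer: it assumes both files use the INI inventory format with variables under `[all:vars]`, keeps the values already set in your existing file, and lists variables that only the newer file defines so you can review them. The variable values and the name `example_new_option` are made-up placeholders, not actual Tower settings.

```python
import re

# A variable line such as: admin_password='secret'
VAR_LINE = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*)\s*=\s*(.*)$")


def inventory_vars(text: str) -> dict:
    """Collect key=value lines from the [all:vars] section of an INI inventory."""
    values = {}
    in_vars = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            in_vars = line == "[all:vars]"  # track which section we are in
            continue
        match = VAR_LINE.match(line) if in_vars else None
        if match:
            values[match.group(1)] = match.group(2)  # raw value, quotes kept
    return values


def carry_over(old_text: str, new_text: str):
    """Return (merged, added) for the upgrade inventory.

    merged: every variable the new file defines, using the old file's
            value wherever the old file already set it.
    added:  variables only the new file defines; review these by hand.
    """
    old, new = inventory_vars(old_text), inventory_vars(new_text)
    merged = {key: old.get(key, value) for key, value in new.items()}
    added = sorted(set(new) - set(old))
    return merged, added


OLD = """[tower]
localhost ansible_connection=local

[all:vars]
admin_password='s3cret'
pg_password='dbpass'
"""

NEW = """[tower]
localhost ansible_connection=local

[all:vars]
admin_password=''
pg_password=''
example_new_option=false
"""

merged, added = carry_over(OLD, NEW)
print(added)  # ['example_new_option']
```

Reporting new variables separately, rather than silently accepting their defaults, mirrors the advice above: options introduced by the newer release are exactly the lines worth reading before running the upgrade.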

A challenge for people who make things is living in a world where everyone else makes things, too. On the Internet, everyone seems to be making something they want you to consider and approve of.

Sometimes, that Internet creation is as simple as a tweet or Facebook post. Like it! Share it! Retweet it! More complex creations, like this blog post, are still easy enough to make and share that there are likely hundreds of new articles you might be asked to read in a week.

If you were to carefully keep up with everything you subscribe to or follow, your mind would never have time to itself. You’d never be able to think your own thoughts. You’d be too busy chewing on the thoughts of other people.

For this reason, I believe constant consumption damages productivity. Designers, architects, artisans, writers, and other creators need time to think through what they are making. Writers need a subject and word flow to clearly communicate. Technology architects need to deeply consider the implications of their designs from multiple angles.

Deep consideration takes contiguous blocks of time. Achieving a flowing state of mind takes uninterrupted time. Thoughts build one on …

To keep their niche in computing, field programmable gate arrays not only need to stay on the cutting edge of chip manufacturing processes; they also have to include the most advanced networking to balance out that compute, rivalling what the makers of switch ASICs provide in their chips.

By comparison, CPUs have it easy. They don’t have the serializer/deserializer (SerDes) circuits that switch chips have as the foundation of their switch fabric. Rather, they might have a couple of integrated Ethernet network interface controllers embedded on the die, maybe running at 1 Gb/sec or 10 Gb/sec, and they offload …

FPGA Interconnect Boosted In Concert With Compute was written by Timothy Prickett Morgan at The Next Platform.

MikroTik routers as an attack vector

AI on the job: Many U.S. residents believe that artificial intelligence will replace some workers over the next decade or so, but it won’t take theirs, according to a story in the New York Times. But it’s not all doom and gloom, because advances in AI and robotics can actually create more jobs, Tim Johnson, CEO of IT staffing firm Mondo, writes in Forbes.

Fixing the IoT: The U.K. government issued a set of guidelines for Internet-of-things device makers to better secure their products. Among the recommendations: issue regular software updates, get rid of default passwords, and warn customers promptly about vulnerabilities. OK, so it's not rocket science, but it seems that some IoT device makers haven't done some of these things in the past. Some critics also believe the guidelines lack teeth, according to a story in ITpro.

The Blockchain election: The use of Blockchain technologies could help resolve some continuing problems with voting, according to a story by Bitcoin Magazine run on Nasdaq.com. The use of a Blockchain ledger could address the old "hanging chad" problem from the 2000 U.S. election, and it could bring new privacy and security to elections, according to the …

Google pines for a truly programmable data plane.

We’re excited to share news of the third edition of the Applied Networking Research Workshop (ANRW2018), which will take place in Montreal, Quebec, on Monday, July 16 at the venue of the Internet Engineering Task Force (IETF) 102 meeting. The workshop program already includes some great invited talks and the Call for Papers is open now, with a deadline of 20 April.

ANRW2018 will provide a forum for researchers, vendors, network operators and the Internet standards community to present and discuss emerging results in applied networking research. The workshop will also create a path for academics to transition research back into IETF standards and protocols, and for academics to find inspiration from topics and open problems addressed at the IETF. Accepted short papers will be published in the ACM Digital Library.

ANRW2018 particularly encourages the submission of results that could form the basis for future engineering work in the IETF, that could change operational Internet practices, that can help better specify Internet protocols, or that could influence further research and experimentation in the Internet Research Task Force (IRTF).

If you have some relevant work and would like to join us in Montreal for the workshop and maybe stick …

Interop ITX Infrastructure Chair Keith Townsend provides guidance on hyperconvergence, cloud migration, network disaggregation, and containers.

Flash memory has become absolutely normal in the datacenter, but that does not mean it is ubiquitous and it most certainly does not mean that all flash arrays, whether homegrown and embedded in servers or purchased as appliances, are created equal. They are not, and you can tell not only from the feeds and speeds, but from the dollars and sense.

It has been nine years since Pure Storage, one of the original flash array upstarts, was founded and seven years since the company dropped out of stealth with its first generation of FlashArray products. In that relatively short time, …

Why Cisco Should – And Should Not – Acquire Pure Storage was written by Timothy Prickett Morgan at The Next Platform.

In this post of the Internet Society Rough Guide to IETF 101, I’ll focus on important work the IETF is doing that helps improve security and resilience of the Internet infrastructure.

What happens if an IXP operator begins maintenance work on the switches without ensuring that BGP sessions between the peers have been shut down? A network disruption and outage. A draft now in the RFC editor queue, "Mitigating Negative Impact of Maintenance through BGP Session Culling", provides guidance to IXP operators on how to avoid such situations by forcefully tearing down the BGP sessions affected by the maintenance (session culling) before the maintenance activities commence. This approach allows BGP speakers to pre-emptively converge onto alternative paths while the lower-layer network's forwarding plane remains fully operational.
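The culling idea can be modelled as a simple packet filter. The draft (later published as RFC 8327) describes blocking BGP's TCP port 179 between addresses on the IXP peering LAN, so the sessions time out while data-plane traffic keeps flowing. The sketch below is a toy predicate written under that assumption, not router or switch configuration; the `Packet` type and the 192.0.2.0/24 peering LAN are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

BGP_PORT = 179  # BGP sessions run over TCP port 179


@dataclass(frozen=True)
class Packet:
    """Hypothetical, minimal view of an IP packet header."""
    src: str
    dst: str
    proto: str  # e.g. "tcp" or "udp"
    sport: int
    dport: int


def make_culling_filter(peering_lan: str):
    """Return a predicate that is True for packets the culling ACL should drop.

    Assumed ACL logic: drop TCP traffic to or from port 179 when both
    endpoints sit on the IXP peering LAN; everything else is forwarded.
    """
    lan = ipaddress.ip_network(peering_lan)

    def should_drop(pkt: Packet) -> bool:
        both_on_lan = (ipaddress.ip_address(pkt.src) in lan
                       and ipaddress.ip_address(pkt.dst) in lan)
        is_bgp = pkt.proto == "tcp" and BGP_PORT in (pkt.sport, pkt.dport)
        return both_on_lan and is_bgp

    return should_drop


drop = make_culling_filter("192.0.2.0/24")
bgp_session = Packet("192.0.2.10", "192.0.2.20", "tcp", 51514, 179)
customer_web = Packet("198.51.100.7", "203.0.113.9", "tcp", 40000, 443)
transit_179 = Packet("198.51.100.7", "203.0.113.9", "tcp", 40000, 179)

for name, pkt in [("bgp_session", bgp_session),
                  ("customer_web", customer_web),
                  ("transit_179", transit_179)]:
    print(name, "dropped" if drop(pkt) else "forwarded")
```

The address check is the important design choice: customer traffic that merely happens to use port 179 between hosts outside the peering LAN still crosses the fabric untouched.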

Another draft also in the RFC editor queue, "Graceful BGP session shutdown", addresses issues related to planned maintenance. The procedures described in this document can be applied to reduce or avoid packet loss for outbound and inbound traffic flows initially forwarded along the peering link to be shut down. These procedures trigger, in both Autonomous Systems (AS), rerouting to alternate paths if they exist within the …
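On the receiving side, the mechanism in this draft (published as RFC 8326) relies on the well-known GRACEFUL_SHUTDOWN community 65535:0: a neighbour that sees it on a path lowers that path's LOCAL_PREF to 0, so any alternative path wins best-path selection before the session actually goes down. This minimal sketch models only that import policy and the LOCAL_PREF step of best-path selection; the `Route` type, the default LOCAL_PREF of 100, and the documentation-range prefixes are assumptions for illustration.

```python
from dataclasses import dataclass, replace

GRACEFUL_SHUTDOWN = (65535, 0)  # well-known community, written ASN:value
DEFAULT_LOCAL_PREF = 100        # assumed default; configurable on real routers


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    communities: frozenset = frozenset()
    local_pref: int = DEFAULT_LOCAL_PREF


def apply_gshut_policy(route: Route) -> Route:
    """Import policy: routes tagged GRACEFUL_SHUTDOWN get LOCAL_PREF 0."""
    if GRACEFUL_SHUTDOWN in route.communities:
        return replace(route, local_pref=0)
    return route


def best_path(routes):
    """Pick the highest LOCAL_PREF (only this first tie-breaker is modelled)."""
    return max(routes, key=lambda r: r.local_pref)


# Two paths to the same prefix: one over the link about to be shut down.
via_link = Route("203.0.113.0/24", "192.0.2.1", frozenset({GRACEFUL_SHUTDOWN}))
via_alt = Route("203.0.113.0/24", "198.51.100.1")

routes = [apply_gshut_policy(r) for r in (via_link, via_alt)]
print(best_path(routes).next_hop)  # 198.51.100.1
```

If the tagged route is the only one left, it is still selected, which matches the "if they exist" caveat: traffic keeps using the link until the session is finally taken down rather than being dropped early.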

One of the most important aspects of the introductory part of my Building Network Automation Solutions online course is the question: should I buy a solution or build my own?

I already described the arguments against buying a reassuringly-expensive single-blob-of-complexity solution from a $vendor, but what about using point tools?

Starting next weekend, the Internet Engineering Task Force will be in London for IETF 101, where about 1,000 engineers will discuss open Internet standards and protocols. The week begins on Saturday, 17 March, with a Hackathon and Code Sprint. The IETF meeting itself begins on Sunday and goes through Friday.

As usual, we’ll write our ‘Rough Guide to the IETF’ blog posts on topics of mutual interest to both the IETF and the Internet Society:

More information about IETF 101:

Here are some of the activities that the Internet Society is involved in during the week.

Catch up on the world of the IETF and open Internet standards by reading the IETF Journal. The November issue marked the final printed version; now we plan to share longer-form articles online and via our Twitter and Facebook channels. Our two most recent articles are "Big Changes Ahead for Core Internet Protocols" by Mark Nottingham and "QUIC: Bringing flexibility to the Internet" …