BGP Labs: Remove Private AS from AS-Path

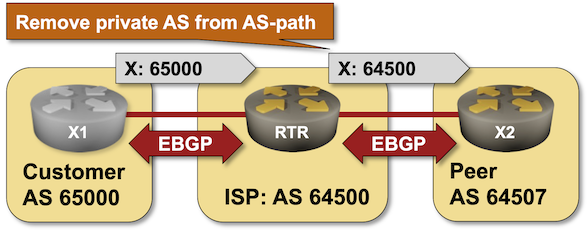

In a previous BGP lab exercise, I described how an Internet Service Provider could run BGP with a customer without the customer having a public BGP AS number. The only drawback of that approach: the private BGP AS number gets into the AS path, and everyone else on the Internet starts giving you dirty looks (or drops your prefixes).

Let’s fix that. Most BGP implementations have some form of remove-private-AS functionality that scrubs private AS numbers from AS paths during outgoing update processing. You can practice it in the Remove Private BGP AS Numbers from the AS Path lab exercise.
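To make the idea concrete, here is a minimal Python sketch of what such scrubbing could look like. It is not how any particular BGP implementation does it: the function names (`is_private_asn`, `remove_private_as`, `outbound_as_path`) are made up for illustration, and the sketch assumes the simplest possible behavior (drop every private AS number, then prepend the local AS). Real implementations differ in the details, for example in whether they touch paths that mix private and public AS numbers. The private ranges are the ones reserved in RFC 6996.

```python
# Private-use AS number ranges reserved by RFC 6996.
PRIVATE_ASN_RANGES = (
    (64512, 65534),            # 16-bit private range
    (4200000000, 4294967294),  # 32-bit private range
)


def is_private_asn(asn: int) -> bool:
    """Return True if the AS number falls into a private-use range."""
    return any(low <= asn <= high for low, high in PRIVATE_ASN_RANGES)


def remove_private_as(as_path: list[int]) -> list[int]:
    """Drop every private AS number from the path (simplified assumption:
    real devices may apply stricter rules before scrubbing anything)."""
    return [asn for asn in as_path if not is_private_asn(asn)]


def outbound_as_path(local_as: int, as_path: list[int]) -> list[int]:
    """Hypothetical outgoing-update step for an EBGP session:
    scrub private AS numbers, then prepend the local AS."""
    return [local_as] + remove_private_as(as_path)
```

With a customer using private AS 65000 and an ISP using AS 64500 (a documentation AS number, chosen only for this example), `outbound_as_path(64500, [65000])` returns `[64500]`: the upstream neighbors see the customer prefix as if it originated in the ISP's AS.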