Hedge 269: Web 3.0

Yes, we took an (unintentional) three-week break for medical reasons … but we’re back with a new episode.

What is Web 3.0, and how is it different from Web 2.0? What about XR, AI, and Quantum, and their relationship to Web 3.0? Jamie Schwartz joins Tom Ammon and Russ White to try to get to a solid definition of what Web 3.0 is and how it impacts the future of the Internet.

Let’s DO this: detecting Workers Builds errors across 1 million Durable Objects

Cloudflare Workers Builds is our CI/CD product that makes it easy to build and deploy Workers applications every time code is pushed to GitHub or GitLab. What makes Workers Builds special is that projects can be built and deployed with minimal configuration. Just hook up your project and let us take care of the rest!

But what happens when things go wrong, such as failing to install tools or dependencies? What usually happens is that we don’t fix the problem until a customer contacts us about it, at which point many other customers have likely faced the same issue. This can be a frustrating experience for both us and our customers because of the lag time between issues occurring and us fixing them.

We want Workers Builds to be reliable, fast, and easy to use so that developers can focus on building, not dealing with our bugs. That’s why we recently started building an error detection system that can detect, categorize, and surface all build issues occurring on Workers Builds, enabling us to proactively fix issues and add missing features.

It’s also no secret that we’re big fans of being “Customer Zero” at Cloudflare, and Workers Builds is itself a Continue reading

BGP and MTU Deep Dive

A long long time ago, BGP implementations would use a Maximum Segment Size (MSS) of 536 bytes for BGP peerings. Why 536? Because in RFC 791, the original IP RFC, the maximum length of a datagram is defined as follows:

Total Length: 16 bits

Total Length is the length of the datagram, measured in octets, including internet header and data. This field allows the length of a datagram to be up to 65,535 octets. Such long datagrams are impractical for most hosts and networks. All hosts must be prepared to accept datagrams of up to 576 octets (whether they arrive whole or in fragments). It is recommended that hosts only send datagrams larger than 576 octets if they have assurance that the destination is prepared to accept the larger datagrams.

The number 576 is selected to allow a reasonable sized data block to be transmitted in addition to the required header information. For example, this size allows a data block of 512 octets plus 64 header octets to fit in a datagram. The maximal internet header is 60 octets, and a typical internet header is 20 octets, allowing a margin for headers of higher level protocols.

The idea was to Continue reading
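The 536-byte figure follows from simple arithmetic on the 576-octet datagram size quoted above. A minimal sketch, assuming a 20-octet IPv4 header and a 20-octet TCP header with no options on either (the name `tcp_mss` is illustrative and does not come from the original post):

```python
IPV4_HEADER = 20  # octets, IPv4 header without options (assumption)
TCP_HEADER = 20   # octets, TCP header without options (assumption)


def tcp_mss(datagram_size: int) -> int:
    """Return the largest TCP payload that fits in one datagram of this size."""
    return datagram_size - IPV4_HEADER - TCP_HEADER


print(tcp_mss(576))   # 536
print(tcp_mss(1500))  # 1460
```

The second call shows the same calculation for a 1500-byte Ethernet MTU, which is where the familiar 1460-byte MSS comes from.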

N4N028: The Wide World of WANs

We wanted to do an episode on SD-WAN, but realized we needed to set the stage for how wide-area networking developed. That’s why today’s episode is a history lesson of the Wide Area Network (WAN). We talk about how WANs emerged, public and private WANs, how WANs connect to LANs and data centers, the care... Read more »

Dear ArubaCX, VXLAN VNI Has 24 Bits

I thought I’d seen it all, but the networking vendors (and their lack of testing) never cease to amaze me. Today’s special: ArubaCX software VXLAN implementation.

We decided it’s a good idea to rewrite the VXLAN integration tests to use one target device and one FRR container to test inter-vendor VXLAN interoperability. After all, what could possibly go wrong with a simple encapsulation format that could be described on a single page?

Everything worked fine (as expected), except for the ArubaCX VM (running release Virtual.10.15.1005, build ID AOS-CX:Virtual.10.15.1005:9d92f5caa6b6:202502181604), which failed every single test.
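For reference, the VXLAN header defined in RFC 7348 is eight bytes long: an 8-bit flags field (with the I bit set when the VNI is valid), 24 reserved bits, the 24-bit VNI, and a final reserved byte. The sketch below packs and parses that header. The function names are hypothetical, and the code illustrates the header layout only; this excerpt does not describe what actually caused the ArubaCX failures.

```python
import struct

VNI_VALID_FLAG = 0x08      # "I" flag: the VNI field carries a valid value
MAX_VNI = (1 << 24) - 1    # largest value that fits in a 24-bit VNI


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for the given VNI."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError(f"VNI {vni} does not fit in 24 bits")
    # Word 1: flags in the top byte, 24 reserved bits below it.
    # Word 2: VNI in the top 24 bits, one reserved byte at the bottom.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VNI_VALID_FLAG:
        raise ValueError("I flag not set, VNI is not valid")
    return vni_word >> 8


header = pack_vxlan_header(100)
print(header.hex())        # 0800000000006400
print(unpack_vni(header))  # 100
```

Any VNI from 0 through 16,777,215 round-trips through these two functions, which is the full range an implementation has to handle.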

Nvidia Does Not Need China, But It Craves It And That Is Risky

“No.”

That’s probably a word that Jensen Huang, co-founder and chief executive officer of Nvidia, doesn’t hear a lot. …

Nvidia Does Not Need China, But It Craves It And That Is Risky was written by Timothy Prickett Morgan at The Next Platform.

With DPU-Goosed Switches, HPE Tackles VMware, Security – And Maybe HPC And AI

Pendulums are always swinging back and forth in the datacenter, with functions being offloaded from one thing and onloaded to another cheaper thing that is often more flexible or faster. …

With DPU-Goosed Switches, HPE Tackles VMware, Security – And Maybe HPC And AI was written by Timothy Prickett Morgan at The Next Platform.

HW053: Ubiquiti in the Enterprise

Ubiquiti is known primarily for wireless equipment for residential and small business use, but it can be a player in the enterprise world. On today’s show, we talk with Darrell DeRosia, Sr. Director, Network & Infrastructure Services with the Memphis Grizzlies, about how he provides that connectivity for the FedExForum, home to the Memphis Grizzlies... Read more »

D2DO273: Azure VNets Don’t Exist

Cloud networks aren’t like traditional data center networks, so applying a traditional network design to the cloud probably isn’t the best idea. On today’s Day Two Cloud, guest Aidan Finn guides us through significant differences between Microsoft Azure networking and on-prem data center networks. For instance, subnets don’t segment hosts, network security groups do; every... Read more »

Worth Reading: Practical Advice for Engineers

Sean Goedecke published an interesting compilation of practical advice for engineers. Not surprisingly, it includes things like “focus on fundamentals” and “spend your working time doing things that are valuable to the company and your career” (OMG, does that really have to be said?).

Bonus point: a link to an article by Patrick McKenzie (of the Bits About Money fame) explaining why you SHOULD NOT call yourself a programmer (there goes the everyone should be a programmer gospel 😜).

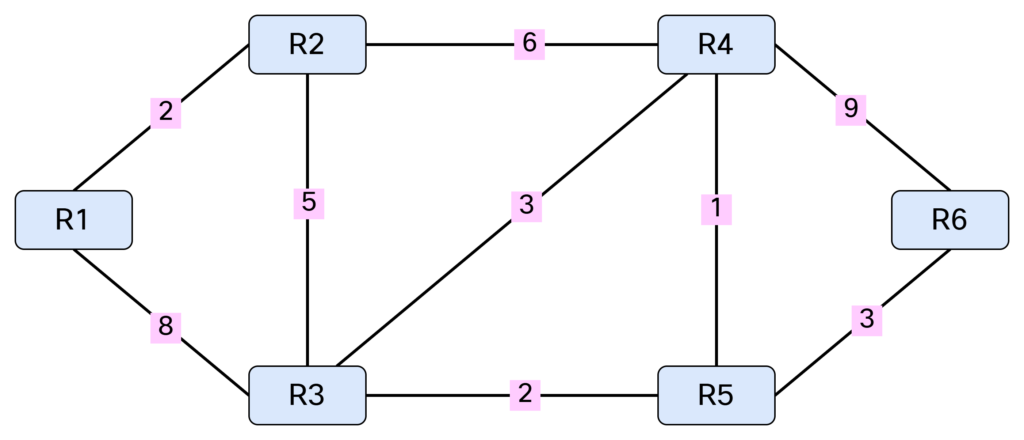

Understanding Shortest Path First

Link state protocols like OSPF and IS-IS use the Shortest Path First (SPF) algorithm developed by Edsger Dijkstra. Edsger was a Dutch computer scientist, programmer, software engineer, mathematician, and science essayist. He wanted to solve the problem of finding the shortest distance between two cities such as Rotterdam and Groningen. The solution came to him when sitting in a café and the rest is history. SPF is used in many applications, such as GPS, but also in routing protocols, which I’ll cover today.

To explain SPF, I’ll be working with the following topology:

Note that SPF only works with a weighted graph where we have positive weights. I’m using symmetrical costs, although you could have different costs in each direction. Before running SPF, we need to build our Link State Database (LSDB) and I’ll be using IS-IS in my lab for this purpose. Based on the topology above, we can build a table showing the cost between the nodes:

This triplet of information consists of originating node, neighbor node, and cost. It can also be represented as [R1, R2, 2], [R1, R3, 8], [R2, R1, 2], [R2, R3, 5], [R2, R4, 6], [R3, R1, 8], [R3, R2, 5], [R3, R4, Continue reading
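A minimal sketch of SPF run over such triplets, using Python's `heapq` as the priority queue. It includes only the triplets that appear in full above; the R3-R4 entry and anything after it are cut off in this excerpt, so they are left out rather than guessed, and the results are therefore not the full topology's shortest paths. The names `LSDB` and `spf` are illustrative.

```python
import heapq

# Only the complete triplets from the excerpt: (originating node, neighbor, cost).
LSDB = [
    ("R1", "R2", 2), ("R1", "R3", 8),
    ("R2", "R1", 2), ("R2", "R3", 5), ("R2", "R4", 6),
    ("R3", "R1", 8), ("R3", "R2", 5),
]


def spf(lsdb, root):
    """Return the lowest total cost from root to every reachable node."""
    graph = {}
    for origin, neighbor, cost in lsdb:
        graph.setdefault(origin, []).append((neighbor, cost))

    dist = {root: 0}
    visited = set()
    queue = [(0, root)]  # (cost so far, node), cheapest first
    while queue:
        cost_so_far, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale entry: node was already settled at lower cost
        visited.add(node)
        for neighbor, cost in graph.get(node, []):
            candidate = cost_so_far + cost
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return dist


print(spf(LSDB, "R1"))  # {'R1': 0, 'R2': 2, 'R3': 7, 'R4': 8}
```

With this partial database, R1 reaches R3 at cost 7 through R2 (2 + 5) rather than over the direct link at cost 8, which is the kind of choice SPF is designed to make.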

Why We Need A Data-Centric OS For AI

It is a pretty bold claim for anyone to call themselves the creator of the operating system for AI. …

Why We Need A Data-Centric OS For AI was written by Timothy Prickett Morgan at The Next Platform.

Reasons to attend the 2025 AI Infra Summit

Sponsored Post: The 2025 AI Infra Summit will bring some of the brightest minds in AI and its supporting infrastructure to Santa Clara on September 9-11 this year. …

Reasons to attend the 2025 AI Infra Summit was written by Chris Williams at The Next Platform.

BGP handling bug causes widespread internet routing instability


At 7AM (UTC) on Wednesday May 20th 2025 a BGP message was propagated that triggered surprising (to many) behaviours with two major B

PP064: How Aviatrix Tackles Multi-Cloud Security Challenges (Sponsored)

Aviatrix is a cloud network security company that helps you secure connectivity to and among public and private clouds. On today’s Packet Protector, sponsored by Aviatrix, we get details on how Aviatrix works, and dive into a new feature called the Secure Network Supervisor Agent. This tool uses AI to help you monitor and troubleshoot... Read more »

NB528: IP Fabric Adds Firewall Rule Simulation; Extreme Networks Debuts Agentic AI in Platform ONE

Take a Network Break! We begin with a Red Alert for critical vulnerabilities in Kubernetes Gardener. Up next, a threat actor has been squatting on unused CNAME records to distribute malware and spam, and IP Fabric rolls out a new firewall rule simulation capability to let administrators test the effect of firewall rules on traffic patterns.... Read more »

ChatGPT Strikes Again: IS-IS on Unnumbered Interfaces 🤦‍♂️

In the last few days, I decided to check out how much better ChatGPT has gotten in the last year or two. I tried to be positive and was rewarded with some surprisingly good results. I even figured out I can use it to summarize my blog posts using prompts like this one:

Using solely the information from blog.ipspace.net, what can you tell me about running ospf over unnumbered interfaces

And then I asked it about unnumbered interfaces and IS-IS, and it all went sideways:

Why we need a unified approach to Kubernetes environments

Today, organizations struggle to manage disparate technologies for their Kubernetes networking and network security needs. Using multiple technologies to network and secure in-cluster, ingress, egress, and cross-cluster traffic creates challenges, including operational complexity and increased costs. For example, to manage ingress traffic for Kubernetes clusters, users cobble together multiple solutions from different providers, such as ingress controllers or gateways and load balancers for routing traffic, as well as Web Application Firewalls (WAFs) for enhanced security.

Despite the challenges it brings, deploying disparate technologies has been a “necessary evil” for organizations to get all the capabilities needed for holistic Kubernetes networking. Here, we’ll explore the challenges this proliferation of tooling introduces and provide actionable tips for today’s platform and security teams to overcome them.

Challenges Managing Multiple Technologies

The fragmented approach to networking and network security in Kubernetes leads to challenges and inefficiencies, including:

- Operational overhead: Each technology comes with its own learning curve, setup, configuration, integration, and maintenance requirements. This leads to a challenging user experience.

- Increased costs: Licensing and operational costs accumulate as more tools are deployed.

- Scaling challenges: As clusters grow or spread across diverse environments, ensuring consistent and secure networking becomes harder.

- Security gaps: Disjointed solutions Continue reading

Repost: On the Advantages of XML

Continuing the discussion started by my Breaking APIs or Data Models Is a Cardinal Sin and Screen Scraping in 2025 blog posts, Dr. Tony Przygienda left another thoughtful comment worth reposting as a publicly visible blog post:

Having read your newest rant around my rant ;-} I can attest that you hit the nail on the very head in basically all you say:

- XML output big? yeah.

- JSON squishy syntax? yeah.

- SSH prioritization? You didn’t live it until you had a customer where a runaway python script generated 800+ XML netconf sessions pumping data ;-)