Securing Modern Applications

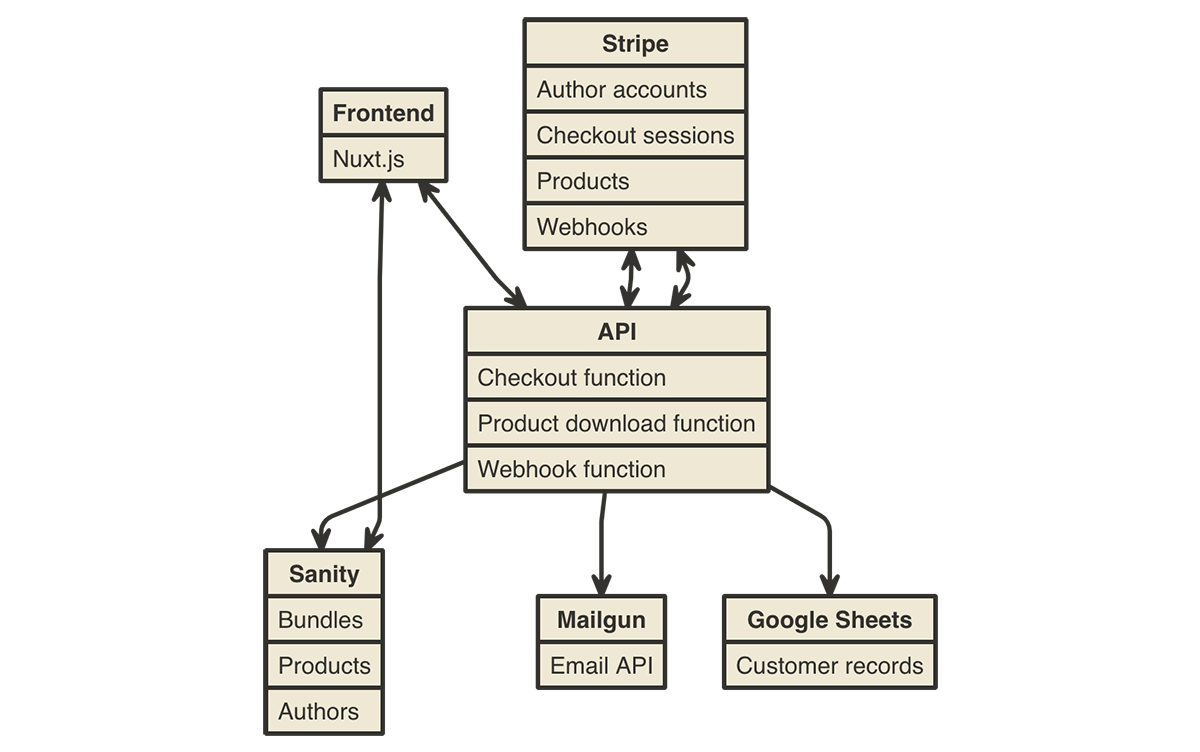

Modern applications are changing enterprise security. Apps today are composed of dozens, or even hundreds, of microservices. They can be spun up and down in real time and may span multiple clouds (on-premises, private cloud, and public cloud). Traditional security stacks just aren’t suited to protecting these applications consistently.

To secure modern apps effectively, we start by identifying unique application assets across clouds, such as users, services, and data. We then continuously evaluate their risk and automatically make authorization decisions, adjusting our application security and compliance posture based on each asset’s identity, regardless of where the asset runs or where it has moved.

Security professionals can learn how to use VMware network and security solutions to secure modern applications in the following VMworld sessions:

Security Policies for Modern Applications: An Evolution from Micro-segmentation (ISCS2240)

Enterprises are embracing cloud-native transformation and modernizing traditional applications, moving them from monolithic to microservices architectures. As applications transform and span multiple clouds (on-premises, private cloud, and public cloud), it’s essential to …