MLAG Deep Dive: Dealing with LAG Member Failures

Craig Weinhold pointed me to a complex topic I managed to ignore in my MLAG Deep Dive series: how does an MLAG cluster reroute around a failure of a LAG member link?

In this blog post, we’ll focus on traditional MLAG cluster implementations using a peer link; another blog post will explore the implications of using VXLAN and EVPN to implement MLAG clusters.

We’ll also ignore the interesting question of “how is the LAG member link failure detected?” and focus on “what happens next?” using the sample MLAG topology:

D2C242: Data Engineering and its Streams, Rivers, and Lakes

Keith Gregory teaches us about data engineering in a way DevOps folks (and hydrologists) can understand. He explains that the role of a data engineer is to create pipelines to transport data from metaphorical rivers and make it usable for data analysts. Keith walks us through the testing process; the difference between streaming pipelines and...

Deploying and Analyze EVPN Instances: Deployment Scenarios

In the previous section, we built a Single-AS EVPN Fabric with OSPF-enabled Underlay Unicast routing and PIM-SM for Multicast routing using Any Source Multicast service. In this section, we configure two L2-Only EVPN Instances (L2-EVI) and two L2/L3 EVPN Instances (L2/3-EVI) in the EVPN Fabric. We examine their operations in six scenarios depicted in Figure 3-1.

Scenario 1 (L2-Only EVI, Intra-VN):

In the Deployment section, we configure an L2-Only EVI with a Layer 2 VXLAN Network Identifier (L2VNI) of 10010. The Default Gateway for the VLAN associated with the EVI is a firewall. In the Analyze section, we observe the Control Plane and Data Plane operation when a) connecting Tenant Systems TS1 and TS2 to the segment, and b) TS1 communicates with TS2 (Intra-VN Communication).

Scenario 2 (L2-Only EVI, Inter-VN):

In the Deployment section, we configure another L2-Only EVI with L2VNI 10020, to which we attach TS3 and TS4. In the Analyze section, we examine EVPN Fabric's Control Plane and Data Plane operations when TS2 (L2VNI 10010) sends data to TS3 (L2VNI 10020), Inter-VN Communication.
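The difference between the first two scenarios comes down to whether both tenant systems sit behind the same L2VNI. Here is a minimal Python sketch of that check, using the assignments from the text (TS1 and TS2 in L2VNI 10010, TS3 and TS4 in L2VNI 10020). The dictionary and function names are illustrative only and are not part of any EVPN implementation:

```python
# Tenant-system-to-L2VNI assignments taken from Scenarios 1 and 2.
TS_TO_L2VNI = {"TS1": 10010, "TS2": 10010, "TS3": 10020, "TS4": 10020}


def classify_flow(src: str, dst: str) -> str:
    """Return 'Intra-VN' when both tenant systems share an L2VNI, else 'Inter-VN'."""
    if TS_TO_L2VNI[src] == TS_TO_L2VNI[dst]:
        return "Intra-VN"
    return "Inter-VN"


print(classify_flow("TS1", "TS2"))  # Scenario 1: same segment
print(classify_flow("TS2", "TS3"))  # Scenario 2: different segments
```

Because both EVIs in these scenarios are L2-only, an Inter-VN flow has to be routed by something outside the EVI; for L2VNI 10010, the text names a firewall as the default gateway.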

Scenario 3 (L2/L3 EVI, Intra-VN):

In the Deployment section, we configure a Virtual Routing and Forwarding (VRF) Instance named VRF-NWKT with L3VNI 10077. Next, ...

Worth Exploring: LibreQoS

Erik Auerswald pointed me to an interesting open-source project. LibreQoS implements decent QoS using software switching on many-core x86 platforms. It’s implemented as a bump-in-the-wire software solution, so you should be able to plug it into your network just before a major congestion point and let it handle the packet dropping and prioritization.

Obviously, the concept is nothing new. I wrote about a similar problem in xDSL networks in 2009.

PP013: Untangling Managed Security Services

What’s the difference between cybersecurity “as a service” vs. “managed” vs. “hosted”? And what’s the difference between an MSP and an MSSP? In this episode, JJ helps untangle the terms and concepts in cybersecurity offerings. She explains what questions you should ask vendors to make sure you’re picking the right one for your needs; negotiating...

HS071: The Real Numbers Behind the Return To Office Myths

Why are some executives still insisting on Return to Office policies? Does it improve culture and productivity like they swear? Or is it more about the devaluing of a massive asset on their books: commercial real estate? If the value of commercial real estate drops, companies have less to leverage for loans and, perhaps more...

Introducing Cloudflare for Unified Risk Posture

Managing risk posture — how your business assesses, prioritizes, and mitigates risks — has never been easy. But as attack surfaces continue to expand rapidly, doing that job has become increasingly complex and inefficient. (One global survey found that SOC team members spend, on average, one-third of their workday on incidents that pose no threat).

But what if you could mitigate risk with less effort and less noise?

This post explores how Cloudflare can help customers do that, thanks to a new suite that converges capabilities across our Secure Access Services Edge (SASE) and web application and API (WAAP) security portfolios. We’ll explain:

- Why this approach helps protect more of your attack surface, while also reducing SecOps effort

- Three key use cases — including enforcing Zero Trust with our expanded CrowdStrike partnership

- Other new projects we’re exploring based on these capabilities

Cloudflare for Unified Risk Posture

Today, we’re announcing Cloudflare for Unified Risk Posture, a new suite of cybersecurity risk management capabilities that can help enterprises with automated and dynamic risk posture enforcement across their expanding attack surface. Today, one unified platform enables organizations to:

- Evaluate risk across people and applications: Cloudflare evaluates risk posed by people via ...

Using Fortran on Cloudflare Workers

In April 2020, we blogged about how to get COBOL running on Cloudflare Workers by compiling to WebAssembly. The ecosystem around WebAssembly has grown significantly since then, and it has become a solid foundation for all types of projects, be they client-side or server-side.

As WebAssembly support has grown, more and more languages are able to compile to WebAssembly for execution on servers and in browsers. As Cloudflare Workers uses the V8 engine and supports WebAssembly natively, we’re able to support languages that compile to WebAssembly on the platform.

Recently, work on LLVM has enabled Fortran to compile to WebAssembly. So, today, we’re writing about running Fortran code on Cloudflare Workers.

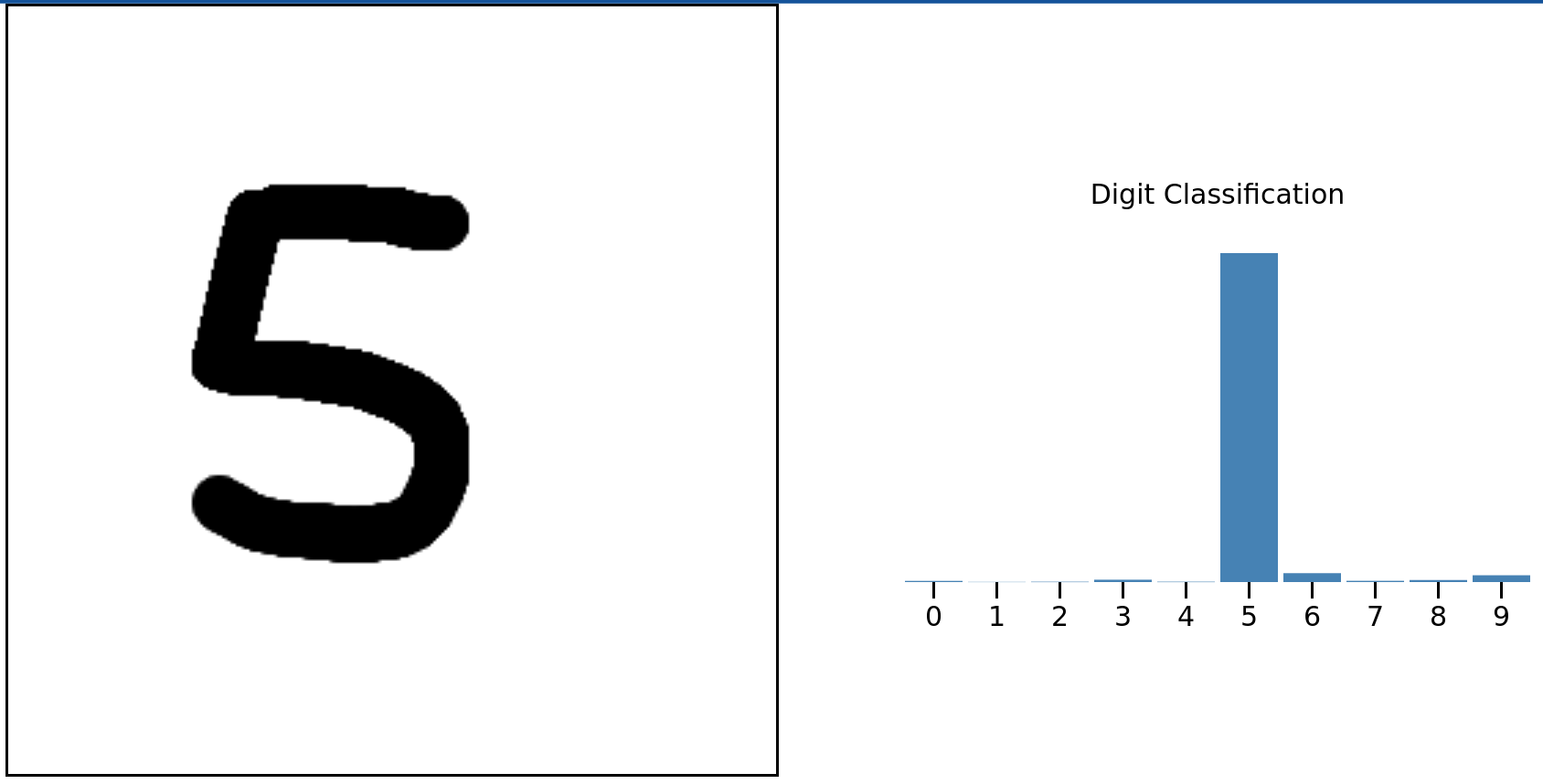

Before we dive into how to do this, here’s a little demonstration of number recognition in Fortran. Draw a number from 0 to 9 and Fortran code running somewhere on Cloudflare’s network will predict the number you drew.

Try yourself on handwritten-digit-classifier.fortran.demos.cloudflare.com.

This is taken from the wonderful Fortran on WebAssembly post but instead of running client-side, the Fortran code is running on Cloudflare Workers. Read on to find out how you can use Fortran on Cloudflare Workers and how that demonstration works.

Wait, Fortran?

Cisco vPC in VXLAN/EVPN Network – Part 3 – Verifying Connectivity

The following topology is used:

We want to verify connectivity and traffic flow towards:

- Gateway of Server3.

- Server1.

- Server2.

- Server4.

Let’s start with the gateway. The gateway is at 10.0.0.1 and has a MAC address of 0001.0001.0001:

server3:~$ ip neighbor | grep 10.0.0.1
10.0.0.1 dev bond0 lladdr 00:01:00:01:00:01 STALE
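The dotted notation used for the gateway MAC (0001.0001.0001) and the colon notation in the Linux neighbor table (00:01:00:01:00:01) describe the same address. A small helper, written here purely as an illustration (the function name is my own), makes that comparison easy to script:

```python
def dotted_to_colon(mac: str) -> str:
    """Convert a dotted MAC (0001.0001.0001) to colon notation (00:01:00:01:00:01)."""
    digits = mac.replace(".", "").lower()
    # A MAC address is exactly 12 hexadecimal digits once separators are removed.
    if len(digits) != 12 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


print(dotted_to_colon("0001.0001.0001"))  # 00:01:00:01:00:01
```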

This is an anycast gateway MAC. When initiating a ping towards 10.0.0.1, it can go to either Leaf1 or Leaf2. I will run Ethanalyzer on the switches to confirm which one is receiving the ICMP Echo Request:

Leaf1# ethanalyzer local interface inband display-filter "icmp" limit-captured-frames 0
Capturing on 'ps-inb'

Leaf2# ethanalyzer local interface inband display-filter "icmp" limit-captured-frames 0
Capturing on 'ps-inb'

Then initiate ping from Server3:

server3:~$ ping 10.0.0.1
PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.
64 bytes from 10.0.0.1: icmp_seq=1 ttl=255 time=1.19 ms
64 bytes from 10.0.0.1: icmp_seq=2 ttl=255 time=1.29 ms
^C
--- 10.0.0.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.192/1.242/1.292/0.050 ms
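If you want to repeat this check against several targets, the ping summary can be parsed rather than read by eye. The sketch below assumes the Linux ping summary format shown above; the function name and the returned keys are my own choices, not part of any tool:

```python
import re

# Summary lines copied from the ping output above.
PING_SUMMARY = """\
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.192/1.242/1.292/0.050 ms
"""


def parse_ping_summary(text: str) -> dict:
    """Extract packet counts, loss percentage, and average RTT from a ping summary."""
    counts = re.search(
        r"(\d+) packets transmitted, (\d+) received, (\d+(?:\.\d+)?)% packet loss", text
    )
    rtt = re.search(r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms", text)
    if counts is None or rtt is None:
        raise ValueError("unrecognized ping summary")
    return {
        "sent": int(counts.group(1)),
        "received": int(counts.group(2)),
        "loss_pct": float(counts.group(3)),
        "rtt_avg_ms": float(rtt.group(2)),
    }


summary = parse_ping_summary(PING_SUMMARY)
print(summary)
```

A connectivity test can then simply check that `loss_pct` is zero for each target.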

Repost: State of Lisp Implementations (2024)

You might remember Béla Várkonyi’s use of LISP to build resilient ground-to-airplane networks from last week’s repost. It seems he’s not exactly happy with the current level of LISP support, at least based on what he wrote as a response to Jeff McLaughlin’s claim that “I can tell you that our support for EVPN does not, in any way, indicate the retirement of LISP for SD-Access.”:

Nice to hear the Cisco intends to support LISP. However, it is removed from IOS XR already. So it is not that clear…

If Cisco will stop supporting LISP, then we will be forced to create our own LISP routers, since we need it for extreme mobility environments.

Running Palo Alto Firewall in Containerlab

Hi all, in this quick blog post, let's look at how to run Palo Alto firewalls in Containerlab. If you've been following me for a while, you might know that I've started using Containerlab more often in my projects. If you're new to Containerlab or need a quick recap, check out my other introductory post below. Now, let's dive in.

boxen or vrnetlab

Palo Alto doesn't provide a containerized version of the firewall VM (the CN-Series is a separate product); only a VM-based image is available. You can create a container from this VM image using two methods. The official documentation recommends using 'boxen' to generate a container image from the VM. However, I chose to use the vrnetlab project instead.

Creating a containerized image using vrnetlab

First things first, download the VM image (qcow2) from the Palo Alto support portal. You might need a valid support contract to access this image. For this ...

NB477: Arista Assembles Switch-Based Microperimeters; FCC Wants More Money for Telcos Dumping Huawei Gear

Take a Network Break! This week we cover a new microsegmentation offering from Arista, new GenAI assistants from Fortinet, and a GenAI firewall from Versa Networks to monitor and report on how organizations are using generative AI tools and applications. AWS will stop selling VMware Cloud on AWS (but you can still get it through...

Tech Bytes: Real-Time Network Performance Monitoring with NetBeez (Sponsored)

Network monitoring is growing increasingly complicated. Companies are facing more distributed applications and more remote employees. NetBeez, our sponsor today, is here to talk about how they monitor network performance in real time for the campus, WAN, and more. From proactively testing networks after configuration changes to identifying how well a worker’s laptop is connecting...

Famous Last Words: I’m Too Stupid for That

Some networking vendors realized that one way to gain mindshare is to make their network operating systems available as free-to-download containers or virtual machines. That’s the right way to go; I love their efforts and point out who went down that path whenever possible (as well as others like Cisco who try to make our lives miserable).

However, those virtual machines better work out of the box, or you’ll get frustrated engineers who will give up and never touch your warez again, or as someone said in a LinkedIn comment to my blog post describing how Junos vPTX consistently rejects its DHCP-assigned IP address: “If I had encountered an issue like this before seeing Ivan’s post, I would have definitely concluded that I am doing it wrong.”

Worth Reading: Cisco vPC in VXLAN/EVPN Networks

Daniel Dib started writing a series of blog posts describing Cisco vPC in VXLAN/EVPN Networks. The first one covers the anycast gateway, the second one the vPC configuration.

Let’s hope he will keep them coming and link them together so it will be easy to find the whole series after stumbling on one of the posts ;)