IPB139: Avoiding Typical IPv6 Pitfalls

Network engineers and architects considering IPv6 can benefit from the experiences of those who have gone before them by avoiding the problems that have bedeviled other deployments. On today's show, your hosts discuss three typical pitfalls and how to get over or around them without falling in. Those IPv6 pitfalls include: IPv4 thinking, Deploying ULA...

The post IPB139: Avoiding Typical IPv6 Pitfalls appeared first on Packet Pushers.

The First Ever Network Automation Conference – AutoCon0

First, let me just say that you have got to love a zero-indexed conference! If you are a network engineer and you don’t know what that means, we need to chat, and that situation was a key topic of the conference. In my mind the goal of the conference was to assess the state of ...

The post The First Ever Network Automation Conference – AutoCon0 appeared first on The Gratuitous Arp.

Worth Reading: Cloudflare Control Plane Outage

Cloudflare experienced a significant outage in early November 2023 and published a detailed post-mortem report. You should read the whole report; here are my CliffsNotes:

- Regardless of how much redundancy you have, sometimes all systems will fail at once. Having redundant systems decreases the probability of total failure but does not reduce it to zero.

- As your systems grow, they gather hidden and circular dependencies.

- You won’t uncover those dependencies unless you run a full-blown disaster recovery test (not a fake one).

- If you don’t test your disaster recovery plan, it probably won’t work when needed.

Also (unrelated to Cloudflare outage):

IPv6, the DNS and Happy Eyeballs

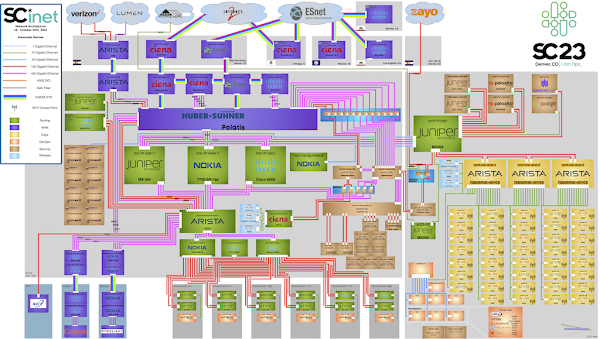

If we are going to update RFC 3901, "DNS IPv6 Transport Guidelines," and offer a revised set of guidelines that are more positive about the use of IPv6 in the DNS, then what should such updated guidelines say?

SC23 WiFi Traffic Heatmap

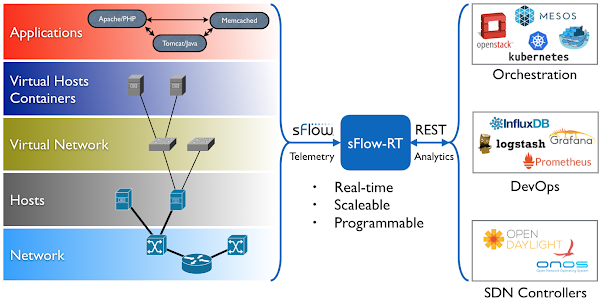

Additional use cases being demonstrated this week include SC23 Dropped packet visibility demonstration and SC23 SCinet traffic.

D2C219: KubeConversations Part 1 – Platform Engineering

Welcome to a special edition of Day Two Cloud. Host Ned Bellavance traveled to KubeCon NA 2023 and spoke to vendors and open source maintainers about what's going on in the cloud-native ecosystem. This episode features conversations on platform engineering.

The post D2C219: KubeConversations Part 1 – Platform Engineering appeared first on Packet Pushers.

D2C220: KubeConversations Part 1 – Platform Engineering

Welcome to a special edition of Day Two Cloud. Host Ned Bellavance traveled to KubeCon Chicago 2023 and spoke to vendors and open source maintainers about what’s going on in the cloud-native ecosystem. This episode features conversations on platform engineering. Part 2 will focus on security. Episode Guests: Cole Morrison, Developer Advocate at HashiCorp...

Introducing hostname and ASN lists to simplify WAF rules creation

If you’re responsible for creating a Web Application Firewall (WAF) rule, you’ll almost certainly need to reference a large list of potential values that each field can have. And having to manually manage and enter all those fields, for numerous WAF rules, would be a guaranteed headache.

That’s why we introduced IP lists. Having a separate list of values that can be referenced, reused, and managed independently of the actual rule makes for a better WAF user experience. You can create a new list, such as $organization_ips, and then use it in a rule like “allow requests where source IP is in $organization_ips”. If you need to add or remove IPs, you do that in the list, without touching each of the rules that reference the list. You can even add a descriptive name to help track its content. It’s easy, clean, and organized.
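The idea of keeping the list separate from the rule can be sketched in a few lines of Python. This is only an illustration of the concept, not Cloudflare's implementation or API: the `lists` dictionary, `ip_in_list`, and `allow_request` are hypothetical names, and the prefixes are documentation ranges.

```python
import ipaddress

# Hypothetical named lists, managed independently of the rules that use them.
lists = {
    "organization_ips": ["192.0.2.0/24", "2001:db8::/32"],
}


def ip_in_list(source_ip: str, list_name: str) -> bool:
    """Return True if source_ip falls inside any prefix in the named list."""
    addr = ipaddress.ip_address(source_ip)
    for entry in lists[list_name]:
        net = ipaddress.ip_network(entry)
        # Compare only addresses and prefixes of the same IP version.
        if addr.version == net.version and addr in net:
            return True
    return False


def allow_request(source_ip: str) -> bool:
    """Toy rule: allow requests where source IP is in $organization_ips."""
    return ip_in_list(source_ip, "organization_ips")
```

Adding a prefix with `lists["organization_ips"].append("198.51.100.0/24")` changes what `allow_request` permits without touching the rule itself, which is the point of referencing a list instead of hard-coding values.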

Which led us, and our customers, to ask the next natural question: why stop at IPs?

Cloudflare’s WAF is highly configurable and allows you to write rules evaluating a set of hostnames, Autonomous System Numbers (ASNs), countries, header values, or values of JSON fields. But to do so, you have to input a list of ...

BGP Labs: Using Multi-Exit Discriminator (MED)

In the previous labs, we used BGP weights and Local Preference to select the best link out of an autonomous system and thus change the outgoing traffic flow.

Most edge (end-customer) networks face a different problem – they want to influence the incoming traffic flow, and one of the tools they can use is BGP Multi-Exit Discriminator (MED).
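As a rough illustration of how MED influences incoming traffic, here is a minimal Python sketch of the comparison an upstream router might make. It assumes the routes are otherwise equal (same weight, local preference, AS-path length, and origin) and were received from the same neighboring AS; `Route` and `prefer_by_med` are hypothetical names, and a real BGP best-path algorithm has many more steps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    neighbor_as: int  # AS the route was received from
    link: str         # label for the link the route arrived on
    med: int          # Multi-Exit Discriminator set by the advertising network


def prefer_by_med(routes: list[Route]) -> Route:
    """Pick the route with the lowest MED.

    Simplification: MED is compared only among routes from a single
    neighboring AS, and all earlier tie-breakers are assumed equal.
    """
    if not routes:
        raise ValueError("no routes to compare")
    if len({r.neighbor_as for r in routes}) != 1:
        raise ValueError("this sketch compares MED only within one neighbor AS")
    return min(routes, key=lambda r: r.med)


# The customer (AS 65000, a private ASN used here as an example) advertises
# the same prefix over two links, with a lower MED on the preferred one.
candidates = [
    Route("192.0.2.0/24", 65000, "primary", med=50),
    Route("192.0.2.0/24", 65000, "backup", med=100),
]
best = prefer_by_med(candidates)
```

With these values the upstream network selects the route received over the `primary` link, so traffic toward the customer enters there.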

White House Unveils National Spectrum Strategy

The plan targets five bands for greater use and calls for a dynamic spectrum-sharing testbed and incentives for advanced management products.

HW015: What Every Wi-Fi Pro Needs To Know About Private LTE

Private LTE and Wi-Fi use a lot of overlapping skills, but there are also some key differences that Wi-Fi pros need to be aware of.

The post HW015: What Every Wi-Fi Pro Needs To Know About Private LTE appeared first on Packet Pushers.