Worth Reading: AI for Network Managers

Pat Allen wrote an interesting guide for managers of networking teams dealing with the onslaught of AI (HT: PacketPushers newsletter).

The leitmotif: use AI to generate a rough solution, then review and improve it. That makes perfect sense and works as long as we remember that we can’t trust AI, and assuming you actually save time doing it this way.

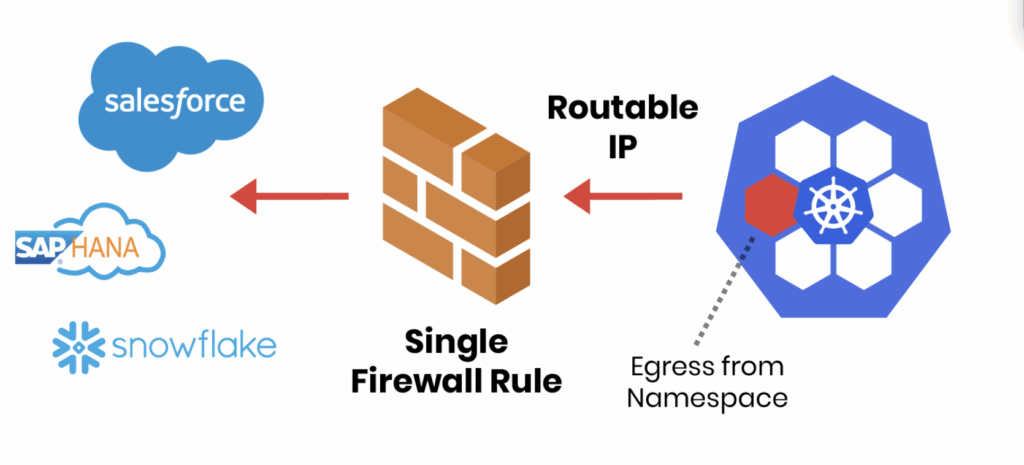

5 Essential Steps to Strengthen Kubernetes Egress Security

Securing what comes into your Kubernetes cluster often gets top billing. But what leaves your cluster, outbound or egress traffic, can be just as risky. A single compromised pod can exfiltrate data, connect to malicious servers, or propagate threats across your network. Without proper egress controls, workloads can reach untrusted destinations, creating serious security and compliance risks. This guide breaks down five practical steps to strengthen Kubernetes egress security, helping teams protect data, enforce policies, and maintain visibility across clusters.

Why Egress Controls Matter

Your Kubernetes Egress Security Checklist

To help teams tackle this challenge, we’ve put together a Kubernetes Egress Security Checklist, based on best practices from real-world Continue reading

HS115: Cyber-Risk Assessment and Cybersecurity Budgeting: You’re (Probably) Doing It Wrong

To understand how much to spend on cybersecurity, you have to accurately assess or quantify your risks. Too many people still peg their cybersecurity spend to their IT budget; that is, they’ll look at what they’re spending on IT, and then allocate a percentage of that to cybersecurity. That may have made some sense when... Read more »

Some Notes from RIPE-91

The 91st meeting of the RIPE community was held in Bucharest in October this year. It was a busy week and, as usual, there were presentations on a wide variety of topics, including routing, the DNS, network operations, security, measurement and address policies. The following are some notes on presentations that I found to be of interest to me.

OMG: Automatic OSPFv3 Router ID on Cisco IOS

Found this incredible gem hidden in the Usage Guidelines for the OSPFv3 router-id configuration command part of the Cisco IOS IPv6 reference guide.

The whole paragraph seems hallucinated, but that couldn’t be because the page was supposedly last updated in 2019, and LLMs weren’t good enough to write well-structured nonsense at that time:

OSPFv3 is backward-compatible with OSPF version 2.

No, it is not.

NB549: Startups Take on Switch, ASIC Incumbents; Google Claims Quantum Advantage

Take a Network Break! Companies spying on…I mean, monitoring…their employees via software called WorkExaminer should be aware of a login bypass that needs to be locked down. On the news front, we opine on whether it’s worth trying to design your way around AWS outages, and speculate on the prospects of a new Ethernet switch... Read more »

Tech Bytes: Why Equinix Should Be Part of Your AI Network Strategy (Sponsored)

AI infrastructure conversations tend to be dominated by GPUs, data center buildouts, and power and water consumption. But networks also play a crucial role, whether to support huge file transfers to a compute cluster, stream telemetry from edge locations to feed AI pipelines, or provide high-speed, low latency connectivity for AI agents. On today’s Tech... Read more »

Adding a Syslog Server to a netlab Lab Topology

netlab does not support a Syslog server (yet), but it’s really easy to add one to your lab topology, primarily thanks to the Rsyslog team publishing a ready-to-run container. Let’s do it ;)

Adding a Syslog Server

Rsyslog is an open-source implementation of a Syslog server (with many bells and whistles, most of which we won’t use) that can (among other things) log incoming messages to a file. Even better (for our use case), the Rsyslog team regularly publishes Rsyslog containers; we’ll use the rsyslog/rsyslog-collector container because it can “receive logs via UDP, TCP, and optionally RELP, and can send them to storage backends or files.”
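Once a collector like that is running, a quick way to check that it receives messages is to send one from any host that can reach it. The following is a minimal sketch using Python's standard `logging.handlers.SysLogHandler` over UDP. It assumes the collector listens on the standard syslog UDP port 514; the function name and the address in the usage comment are hypothetical, not taken from the netlab setup.

```python
import logging
import logging.handlers
import socket


def make_syslog_logger(host: str, port: int = 514) -> logging.Logger:
    """Return a logger that forwards records to a syslog collector over UDP.

    host/port are assumptions: point them at wherever the collector listens.
    """
    handler = logging.handlers.SysLogHandler(
        address=(host, port),
        socktype=socket.SOCK_DGRAM,  # UDP; use SOCK_STREAM for TCP
    )
    # A unique logger name per destination avoids stacking duplicate handlers.
    logger = logging.getLogger(f"lab-syslog-{host}-{port}")
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False
    return logger


# Usage (hypothetical collector address):
#   log = make_syslog_logger("192.0.2.10")
#   log.info("test message from the lab")
```

UDP delivery is fire-and-forget, so a missing message in the collector's log file can mean either a reachability problem or a listener that is not bound to the expected port.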

A Unified ARP Table (and how to get one when you don’t have one)

How to get one when you don't have one and what happens when it's gone! There is so much propaganda out there today (and I am not even referring to politics), it feels good to go back to fundamentals. Few things are more foundational to networking than Address Resolution Protocol (ARP). It is inconceivable to READ MORE

The post A Unified ARP Table (and how to get one when you don’t have one) appeared first on The Gratuitous Arp.

Network Labs on a Budget

"Can you suggest some specs for a server for my network labs?" is probably the question I get asked the most. People reach out all the time asking for recommendations. The thing is, I never really know their exact situation or what they’re trying to do in their lab. So, I usually just share what I have and what worked best for me, and let them decide what fits their setup.

In this post, I’ll go over the cheapest way to build your own network lab without spending too much.

What We Will Cover?

- Buying a used mini PC

- Proxmox as the hypervisor (optional)

- Linux as a VM

- Containerlab/Netlab, EVE-NG, Cisco CML

- Proxmox Backup Server (optional)

- Simplest Option for Absolute Beginners

TL;DR

You don’t need expensive hardware to build a solid network lab. A used mini PC with decent specs is more than enough to run tools like Proxmox, Continue reading

Contributing to NTC templates

This guide lists the steps I follow when adding or updating NTC templates. Contributing to a project on GitHub is still a learning curve for me. The days of learning CLI by repetition seem long gone, so when using or contributing to any of these NetOps-type tools I have to keep guides: there are so many new and alien things to know, and I use them so sporadically, that it is a bit of a struggle to remember.

The strange webserver hot potato — sending file descriptors

I’ve previously mentioned my io-uring webserver tarweb. I’ve now added another interesting aspect to it.

As you may or may not be aware, on Linux it’s possible to send a file descriptor from one process to another over a unix domain socket. That’s actually pretty magic if you think about it.

You can also send unix credentials and SELinux security contexts, but that’s a story for another day.
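To make the descriptor-passing idea concrete, here is a minimal sketch in Python (3.9 or later, which added `socket.send_fds` and `socket.recv_fds` as wrappers around `SCM_RIGHTS` ancillary messages). It is not how tarweb does it; the helper names are hypothetical, and both ends live in one process purely to keep the example short. In a real setup the two sockets would belong to different processes.

```python
import os
import socket


def send_fd(sock: socket.socket, fd: int) -> None:
    """Send one file descriptor over a unix domain socket (SCM_RIGHTS)."""
    # At least one byte of ordinary payload must accompany the ancillary data.
    socket.send_fds(sock, [b"x"], [fd])


def recv_fd(sock: socket.socket) -> int:
    """Receive one file descriptor sent with send_fd()."""
    _data, fds, _flags, _addr = socket.recv_fds(sock, 1, 1)
    return fds[0]


def demo() -> bytes:
    # A connected pair of unix domain sockets stands in for two processes.
    sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
    read_end, write_end = os.pipe()
    with sender, receiver:
        send_fd(sender, write_end)
        received = recv_fd(receiver)  # a new descriptor for the same pipe
    os.close(write_end)               # the sender's copy is no longer needed
    os.write(received, b"hello")      # write through the received descriptor
    os.close(received)
    data = os.read(read_end, 5)
    os.close(read_end)
    return data


if __name__ == "__main__":
    print(demo())
```

The received descriptor usually has a different number than the one that was sent, but it refers to the same open file, which is what lets a listener hand an accepted TCP connection to another process.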

My goal

I want to run some domains using my webserver “tarweb”. But not all. And I want to host them on a single IP address, on the normal HTTPS port 443.

Simple, right? Just use nginx’s proxy_pass?

Ah, but I don’t want nginx to stay in the path. After SNI (read: “browser saying which domain it wants”) has been identified I want the TCP connection to go directly from the browser to the correct backend.

I’m sure somewhere on the internet there’s already an SNI router that does this, but all the ones I found stay in line with the request path, adding a hop.

Why?

A few reasons:

- Having all bytes bounce on the SNI router triples the number of total file descriptors for the connection. (one on the backend, Continue reading

What’s New in Calico v3.31: eBPF, NFTables, and More

We’re excited to announce the release of Calico v3.31, which brings a wave of new features and improvements.

For a quick look, here are the key updates and improvements in this release:

- Calico NFTables Dataplane is now Generally Available

- Calico eBPF Dataplane Enhancements

  - Simplified installation: new template defaults to eBPF, automatically disables kube-proxy via the kubeProxyManagement field, and adds bpfNetworkBootstrap for auto API endpoint detection.

  - Configurable cgroupv2 path: support for immutable OSes (e.g., Talos).

  - >>Learn More: See how Calico v3.31 makes eBPF installation frictionless and simplifies setup in our Zero-Trust with Zero-Friction eBPF in Calico v3.31 blog

- Calico Whisker (Observability Stack)

  - Improved UI and performance in Calico v3.31.

  - New policy trace categories: Enforced vs Pending.

  - Lower memory use, IPv6 fixes, and more efficient flow streaming.

- Networking & QoS

  - New bandwidth and packet rate QoS controls across all dataplanes.

  - DiffServ (DSCP) support: prioritize traffic by marking packets (e.g., EF for VoIP).

  - Introduces new QoSPolicy API for declarative traffic control.

- Encapsulation & Routing

  - Tech Preview: Felix now handles encapsulation routes (IP-in-IP, no-encap) directly, no BIRD required!

- NAT Control

  - New natOutgoingExclusions config for granular NAT management.

  - Choose between

Continue reading
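For readers unfamiliar with DSCP marking, the following sketch shows what "marking packets as EF" means at the socket level. It is not Calico's mechanism or its QoSPolicy API; it only illustrates the bit layout. The DSCP value occupies the upper six bits of the IPv4 ToS byte, so EF (decimal 46) becomes a ToS value of 46 shifted left by two bits. The function names are hypothetical.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding (RFC 3246), commonly used for VoIP


def dscp_to_tos(dscp: int) -> int:
    """Convert a 6-bit DSCP value to the 8-bit IPv4 ToS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2  # the lower two bits are used for ECN


def open_marked_udp_socket(dscp: int) -> socket.socket:
    """Return a UDP socket whose outgoing IPv4 packets carry the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


# Usage: sock = open_marked_udp_socket(DSCP_EF)
```

Marking in the application only sets the field; whether the network honors it depends on the QoS policy of every device along the path.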

HN802: Unifying Networking and Security with Fortinet SASE: Architecture, Reality, and Lessons Learned (Sponsored)

The architecture and tech stack of a Secure Access Service Edge (SASE) solution will influence how the service performs, the robustness of its security controls, and the complexity of its operations. Sponsor Fortinet joins Heavy Networking to make the case that a unified offering, which integrates SD-WAN and SSE from a single vendor, provides a... Read more »

Hedge 285: Post Quantum Crypto

Is quantum really an immediate and dangerous threat to current cryptography systems, or are we pushing to hastily adopt new technologies we won’t necessarily need for a few more years? Should we allow the quantum pie to bake a few more years before slicing a piece and digging in? George Michaelson joins Russ and Tom to discuss.

TNO047: Advice From Both Sides of the Network Aisle

Senad Palislamovic has held many roles in his time, from engineer to network operator to sales engineer and back again. He’s been around long enough to see trends come and go. Senad visits Total Network Operations to share some of his observations on network automation, AI for NetOps, and the quality of network data. Senad... Read more »

DEEP Is Still a Must-Attend Boutique Conference

I love well-organized small conferences, so it wasn’t hard to persuade me to have another talk at the DEEP Conference in Zadar, Croatia. This time, I talked about the role of digital twins in disaster recovery/avoidance testing. You might know my take on networking digital twins; after that, I only had enough time to focus on the “bandwidth and latency matter, and this is how you emulate limited bandwidth and add latency” bit.