Beyond Scale to Flexible Cloud Networking

In the 2000s, a new generation of smartphones revolutionized the cell phone industry, eliminating the market for “flip phones,” introducing new tools, and completely redefining “phones” as universal internet devices. New companies rose, and old ones adapted or failed. In 2015, a new generation of electric cars (Tesla being the most well-known) was introduced...

Network Automation Online Course: a Vendor Perspective

A few days after I published the blog post describing why it might make sense to attend the Building Network Automation Solutions course even when you’re already using a $vendor network management system/platform, I got a surprising email from one of my friends working for a major networking vendor:

Automating Cisco Nexus Switches with Ansible

For the past several years, the open source [network] community has been rallying around Ansible as a platform for network automation. Just over a year ago, Ansible recognized the importance of embracing the network community, and since then it has made significant additions to offer network automation out of the box. In this post, we’ll look at two distinct models you can use when automating network devices with Ansible, focusing specifically on Cisco Nexus switches. I’ll refer to these models as CLI-Driven and Abstraction-Driven Automation.

Note: In later posts, we’ll see how these two models, plus a third, can be used to accomplish intent-driven automation as well.

We’ve chosen Nexus for this post because, as of Ansible 2.2, there are more Ansible modules for Nexus than for any other network operating system, which makes it easy to illustrate both models.

CLI-Driven Automation

The first way to manage network devices with Ansible is to use the Ansible modules that are supported on a diverse set of operating systems, including NX-OS, EOS, Junos, IOS, IOS-XR, and many more. These modules can be considered the lowest common denominator, as they work the same way across operating systems, requiring you to define the...
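The CLI-driven model can be illustrated with a small Python sketch. This is a hypothetical illustration, not Ansible's code: the operator supplies raw NX-OS command lines, and a made-up helper, `lines_to_push`, returns only the lines not already present in the running configuration. It assumes a simple flat line comparison, which is enough to show that in this model the operator must know the exact CLI syntax of each platform.

```python
# Hypothetical sketch of CLI-driven automation: desired state is expressed as
# raw CLI lines, and only lines missing from the running config are pushed.
# Flat comparison only: it ignores parent context (e.g. lines under "vlan 10").


def lines_to_push(desired: list[str], running_config: str) -> list[str]:
    """Return the desired CLI lines that are absent from the running config, in order."""
    present = {line.strip() for line in running_config.splitlines()}
    return [line for line in desired if line.strip() not in present]


# Illustrative running configuration (not from a real device).
running = """\
hostname nxos-spine1
feature lacp
vlan 10
  name web
"""

desired = ["feature lacp", "feature vpc", "vlan 20"]
print(lines_to_push(desired, running))  # ['feature vpc', 'vlan 20']
```

Because the desired state is just text, the same approach works on any platform that accepts CLI, which is exactly why it behaves as a lowest common denominator.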

Will autocrats ever learn? – The Internet Blackout in Gambia

On Wednesday afternoon, Cloudflare and other Internet companies noticed that the West African country of The Gambia had dropped off the Internet, the day before the presidential election scheduled for Thursday, December 1st. This is not unprecedented. The Ugandan government blocked access to Facebook and WhatsApp during its recent election. Internet blocking by governments has also been seen in Gabon. Even Ghana toyed with the idea earlier this year.

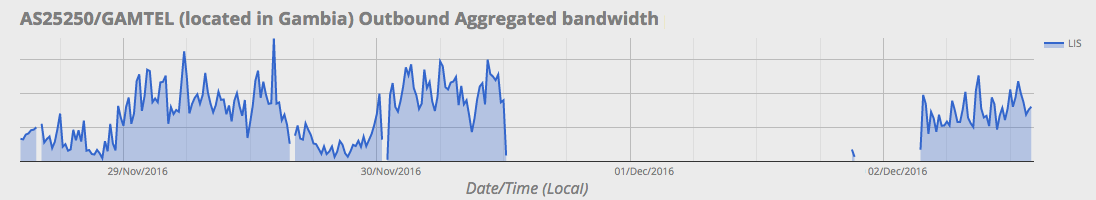

Gambia has a population of 1.8 million people, and according to Internet World Stats, Internet penetration is growing fast and is now almost 20%. The latest statistics indicate that at least ten percent of Gambians use Facebook. As shown in the graph, the Gambian government cut off access to the global Internet, and for 39 hours hundreds of thousands of Gambians were unable to use the online services they rely on every day.
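The figures above can be sanity-checked with quick arithmetic. This sketch uses only the rounded numbers quoted in the text (1.8 million people, almost 20% penetration, at least 10% on Facebook), so the results are approximations, not measurements.

```python
# Back-of-envelope check using only the rounded figures quoted in the text.
POPULATION = 1_800_000
INTERNET_PENETRATION_PCT = 20  # "almost 20%", so this slightly overestimates
FACEBOOK_SHARE_PCT = 10        # "at least ten percent", so this is a lower bound

# Integer arithmetic avoids floating-point rounding noise.
internet_users = POPULATION * INTERNET_PENETRATION_PCT // 100
facebook_users = POPULATION * FACEBOOK_SHARE_PCT // 100

print(f"Internet users: about {internet_users:,}")     # about 360,000
print(f"Facebook users: at least {facebook_users:,}")  # at least 180,000
```

Roughly 360,000 Internet users is consistent with the “hundreds of thousands” of people cut off during the 39-hour blackout.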

All the networks in Gambia disappeared from the global routing tables. This could have been caused either by a soft reconfiguration of Internet routers or by physically powering down telecommunications equipment. At this point, we do not know. What we do know is that we...
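A country “dropping off” the Internet shows up as its prefixes vanishing from routing-table snapshots. The Python sketch below illustrates that check with a hypothetical `visible_fraction` helper and RFC 5737 documentation prefixes standing in for a country's routes. It is not how Cloudflare measured the outage, and it considers exact prefix matches only.

```python
import ipaddress


def visible_fraction(country_prefixes, routing_table):
    """Return the fraction of country_prefixes present (exact match) in routing_table."""
    wanted = {ipaddress.ip_network(p) for p in country_prefixes}
    table = {ipaddress.ip_network(p) for p in routing_table}
    if not wanted:
        return 0.0
    return len(wanted & table) / len(wanted)


# Hypothetical data: RFC 5737 documentation prefixes, not real Gambian routes.
country = ["192.0.2.0/24", "198.51.100.0/24"]
unrelated = ["203.0.113.0/24"]

before = country + unrelated  # all of the country's prefixes announced
after = unrelated             # every country prefix withdrawn

print(visible_fraction(country, before))  # 1.0
print(visible_fraction(country, after))   # 0.0
```

A drop from 1.0 to 0.0 between snapshots corresponds to “all the networks disappeared”; it says nothing about whether the cause was a router reconfiguration or powered-down equipment.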

Folks, Let’s Not Forget About the Original Use of SDN, Says Nokia

Carrier SDN can drive 25% more revenue-generating services.

Containers & Serverless Functions Have Their Day on AWS

AWS goes open source with Blox and seriously revs up Lambda.

Get Trained on the AWS Cloud

Based on the AWS Essentials Course, AWSome Day is ideal for IT managers, business leaders, system engineers, system administrators, developers and architects who are eager to learn more about cloud computing and how to get started on the AWS Cloud.

- Gain a deeper understanding of AWS core and application services

- Learn how to deploy and automate your infrastructure on the AWS Cloud

- Get your questions answered by our AWS experts

- Receive a Certificate of Attendance when you complete all the modules

Register at the link below:

https://aws.amazon.com/events/awsome-day/awsome-day-online/

Date: 6 December 2016

Time: 10am – 1.30pm IST

Location: Online