Living with Small Forwarding Tables

A friend of mine working for a mid-sized networking vendor sent me an intriguing question:

We have a product using an old ASIC that has 12K forwarding entries, and would like to extend its lifetime. I know you were mentioning some useful tricks, would you happen to remember what they were?

This challenge has no perfect solution, but there are at least three tricks I’ve encountered so far (as always, comments are most welcome):

On the Edge with 5G? Automation’s Never Been More Necessary (or Attainable)

Automation, virtualization, and network slicing will allow enterprises to deploy 5G services in a cost-effective manner, changing legacy workflows and processes.

Welcome to Platform Week

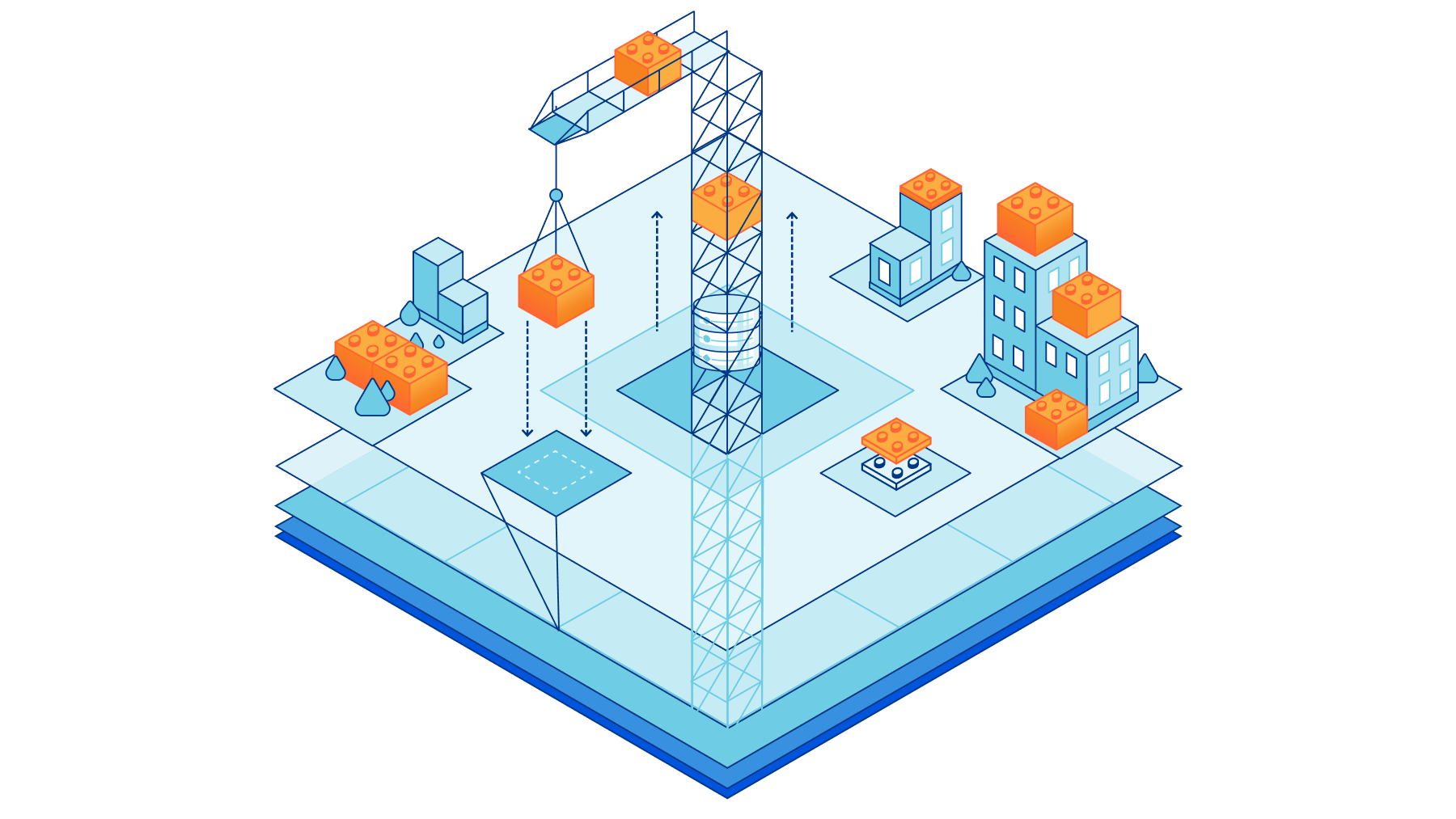

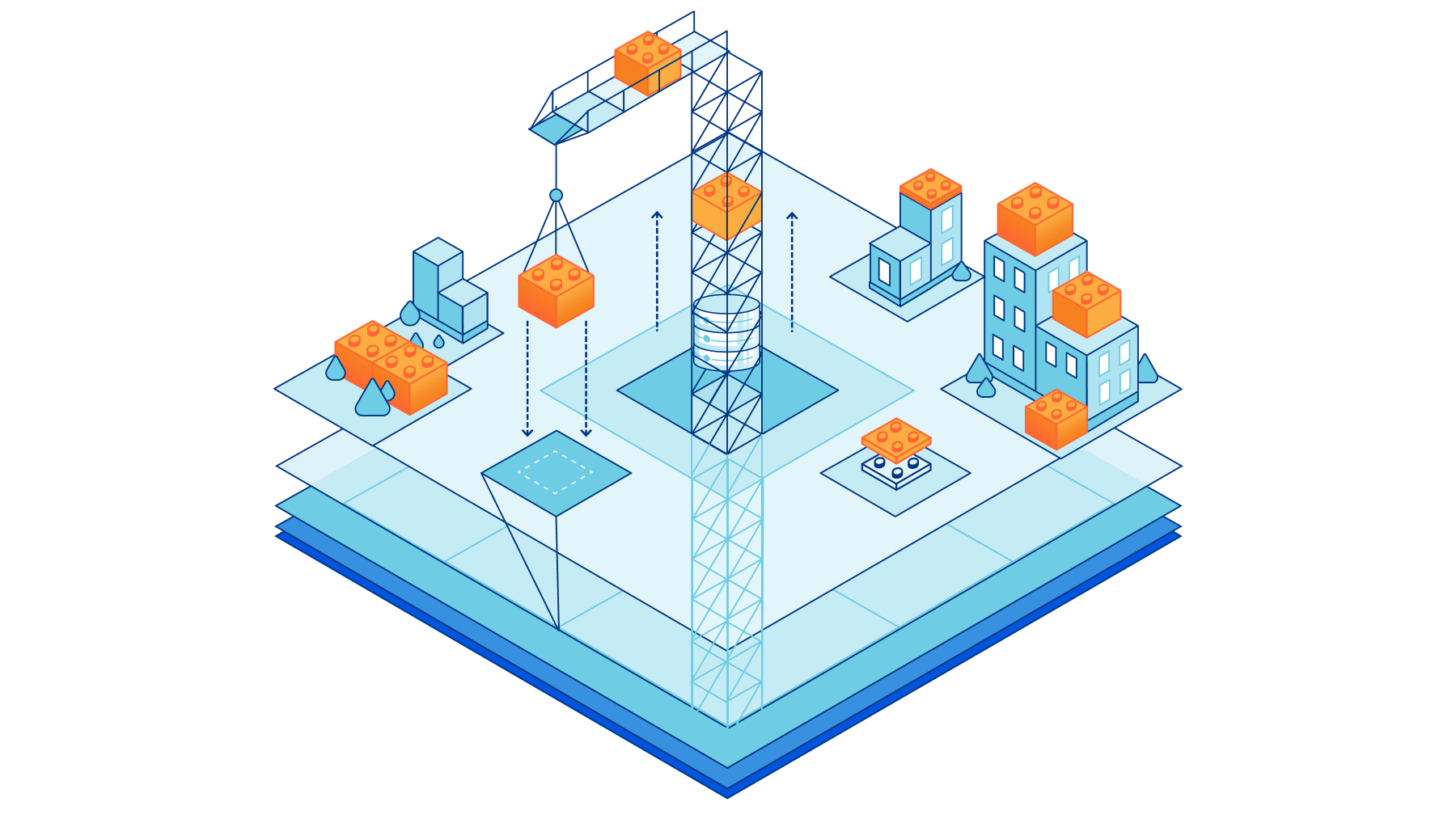

Principled. It’s one of Cloudflare’s three core values (alongside curiosity and transparency).

It’s a word that we came back to quite a bit in thinking through a question that has been foundational in driving us for this year’s Platform Week: what makes a truly great developer platform?

Of course, when it comes to evaluating developer platforms, the temptation is to focus on the “feeds and speeds” part of the equation. Who is the fastest? Who has the coolest tech? Who lets you do stuff that previously you could not?

Undoubtedly, these are all important questions. But we realized that the fun and shiny things which are often answers to these questions can easily become distractions from the true promise of developing on the Internet — and even traps that the less principled developer platforms can use to lure you into their arms.

The promise being, of course: that you can pull together solutions from a variety of different providers, to build something greater than what you’d be able to do with any one of them alone. That you can build something based on whatever is best when you sit down to create your application. And of course, if something better Continue reading

Announcing our Spring Developer Speaker Series

We love developers.

Late last year, we hosted Full Stack Week, with a focus on new products, features, and partnerships to continue growing Cloudflare’s developer platform. As part of Full Stack Week, we also hosted the Developer Speaker Series, bringing 12 speakers in the web dev community to our 24/7 online TV channel, Cloudflare TV. The talks covered topics across the web development ecosystem, which you can rewatch at any time.

We loved organizing the Developer Speaker Series last year. But as developers know far too well, our ecosystem changes rapidly: what may have been cutting edge back in November 2021 can be old news just a few months later in 2022. That’s what makes conferences and live speaking events so valuable: they serve as an up-to-date reference of best practices and future-facing developments in the industry. With that in mind, we're excited to announce a new edition of our Developer Speaker Series for 2022!

Check out the eleven expert web dev speakers, developers, and educators that we’ve invited to speak live on Cloudflare TV! Here are the talks you’ll be able to watch, starting tomorrow morning (May 9 at 09:00 PT):

The Bootcampers Companion – Caitlyn Greffly

In Continue reading

AWS: The Case of the iBGP Peer that Worked – Part 2: The Answer

The Answer: “#8 – Ping 192.168.14.2 from the AWS CSR” Summary: Why? What does BGP coming up have to do with the AWS CSR pinging the other side of the GRE tunnel? Well, if you know the answer… don’t read any more… Read More ›

The post AWS: The Case of the iBGP Peer that Worked – Part 2: The Answer appeared first on Networking with FISH.

Worth Reading: The State of fq_codel (and Bufferbloat)

Erik Auerswald sent me a pointer to a blog post by Dave Taht: The state of fq_codel and sch_cake worldwide. It’s so nice to see what a huge impact Dave made since he started the Bufferbloat project.

Hint: if you have no idea what Bufferbloat or fq_codel are, you REALLY SHOULD explore Dave’s web site.

Cisco Catalyst Stack Upgrade

Well… it will reboot your whole switch stack at once, in case you were wondering. But it has a neat feature: automatic rollback to the previous IOS XE version if something goes south with the newly upgraded switches. The same goes for non-stacked Cisco Catalyst C9200 and C9300 switches. The question was whether the stack would reload its members sequentially or all at once, and the answer is hard to find. The answer is, of course, the least good option, which makes the upgrade impossible without a network outage even if other devices are connected

The post Cisco Catalyst Stack Upgrade appeared first on How Does Internet Work.

Worth Watching: Source Routing on the Edge (iNOG::14v)

Most large content providers use some sort of egress traffic engineering on edge web proxy/caching servers to optimize the end-user experience (avoid congested transit autonomous systems) and link utilization on egress links.

For ages I’ve been planning to write a blog post about the tricks they use, and never found the time to do it… but if you don’t mind watching a video, the Source Routing on the Edge presentation Oliver Herms gave at iNOG::14v does a pretty good job of explaining the concepts and a particular implementation.

Community Spotlight series: Calico Open Source user insights from Ana Shmyglya and Josef Janda, Jamf

In this installment of the Calico Community Spotlight series, I interviewed Ana Shmyglya and Josef Janda, who both work for Jamf. Last year, Josef wrote Migrating CNI plugin from kube-router to Calico on Kops managed Kubernetes cluster, and I wanted to dive deeper into his and Ana’s experience based on that blog post. We mainly talked about their respective teams, their responsibilities, and the challenges they have faced whilst using Kubernetes.

Q: What are your current roles and primary responsibilities?

Ana: I work in the Platform team. This basically means I am responsible for a team that maintains the core infrastructure, which includes the Kubernetes clusters that we run. We also own the underlying CNI of the clusters.

Josef: I work as a DevOps engineer on the team that maintains the internal development tools and other systems connected to the software delivery life cycle process.

Q: What orchestrator(s) have you been using?

Josef: We use Kubernetes. That’s basically the only orchestrator in our company.

Ana: Same for us as well, it’s Kubernetes across the company.

Q: What cloud infrastructure(s) has been part of your projects?

Ana: We use a couple of different providers, including AWS, but we only run Continue reading

Heavy Networking 629: The State Of Data Center Fabrics In 2022

Today's Heavy Networking dives into data center fabrics with guest Russ White. We discuss just what makes a data center fabric, why the industry relies too much on BGP, fabric alternatives and options, the future of data center fabrics, and more. Russ is a network architect, author, and instructor.

The post Heavy Networking 629: The State Of Data Center Fabrics In 2022 appeared first on Packet Pushers.

Friday Thoughts on the Full Stack

It’s been a great week at Networking Field Day 28, with some great presentations and even better discussions outside of the room. We recorded a couple of great podcasts around some fun topics, including the Full Stack Engineer.

Some random thoughts about that here before we publish the episode of the On-Premise IT Roundtable in the coming weeks:

- Why do you need a full stack person in IT? Isn’t the point to have people that are specialized?

- Why does no one tell the developers they need to get IT skills? Why is it more important for the infrastructure team to learn how to code?

- We see full stack doctors, which are general practitioners. Why are there no full stack lawyers or full stack accountants?

- If the point of having a full stack understanding is about growing non-tech skills, why not just say that instead?

- There’s value in having someone that knows a little bit about everything but not too much. But that value is in having them in a supervisor role instead of an operations or engineering role. Do you want the full stack doctor doing brain surgery? Or do you want him to refer you to a Continue reading