Technology Short Take #84

Welcome to Technology Short Take #84! This episode is a bit late (sorry about that!), but I figured better late than never, right? OK, bring on the links!

Networking

- When I joined the NSX team in early 2013, a big topic at that time was overlay protocols (VXLAN, STT, etc.). Since then, that topic has mostly faded, though it still does come up from time to time. In particular, the move toward Geneve has prompted that discussion again, and Russell Bryant tackles the discussion in this post.

- Sjors Robroek describes his nested NSX-T lab that also includes some virtualized network equipment (virtualized Arista switches). Nice!

- Colin Lynch shares some details on his journey with VMware NSX (so far).

- I wouldn’t take this information as gospel, but here’s a breakdown of some of the IPv6 support available in VMware NSX.

Servers/Hardware

- Here’s an interesting article on the role that virtualization is playing in the network functions virtualization (NFV) space now that ARM hardware is growing increasingly powerful. This is a space that’s going to see some pretty major changes over the next few years, in my humble opinion.

Security

- Anthony Burke gives a little bit of a sneak peek Continue reading

Cisco ACI, VMware NSX and Programmability

One of my readers sent me a lengthy email describing his NSX-versus-ACI views. He started with [slightly reworded]:

What I want to do is to create customer templates to speed up deployment of application environments, as it takes too long at the moment to set up a new application environment.

That’s what we all want. How you get there is the interesting part.

Read more ...

Technology Short Take #83

Welcome to Technology Short Take #83! This is a slightly shorter TST than usual, which might be a nice break from the typical information overload. In any case, enjoy!

Networking

- I enjoyed Dave McCrory’s series on the future of the network (see part 1, part 2, part 3, and part 4—part 5 hadn’t gone live yet when I published this). In my humble opinion, he’s spot on in his viewpoint that network equipment is increasingly becoming more like servers, so why not embed services and functions in the network equipment? However, this isn’t enough; you also need a strong control plane to help manage and coordinate these services. Perhaps Istio will help provide that control plane, though I suspect something more will be needed.

- Michael Kashin has a handy little tool that functions like ssh-copy-id on servers, but for network devices (leveraging Netmiko). Check out the GitHub repository.

- Anthony Shaw has a good comparison of Ansible, StackStorm, and Salt (with a particular view at applicability in a networking context). This one is definitely worth a read, in my opinion.

- Miguel Gómez of Telefónica Engineering discusses maximizing performance in VXLAN overlay networks.

- Nicolas Michel has a good Continue reading

Network Slices

There has been a lot of chatter recently in the 5G wireless world about network slices. A draft was recently published in the IETF on network slices—draft-gdmb-netslices-intro-and-ps-02. But what, precisely, is a network slice?

Perhaps it is better to begin with a concept most network engineers already know (and love)—a virtual topology. A virtual topology is a set of links, with some subset of connected devices (either virtual or real), that act as a subset of the network. Isn’t such a subset of the network a “slice” if you look at it from a different angle? To ask the question in a different way: how are network slices different from virtual network overlays?

To begin, consider the control plane. In the world of virtual topologies, there is generally one control plane that provides reachability, as well as sorting reachability into each virtual topology. For instance, BGP carries a route target and a route discriminator to indicate which virtual topology any particular destination belongs to. A network slice, by contrast, actually has multiple control planes—one for each slice. There will still be one “supervisor control plane,” of course, much like there is a hypervisor that manages the resources of each Continue reading
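As a rough illustration of the single-control-plane model described above, the sketch below sorts routes into per-topology tables using a route-target tag. The `Route` type, the route-target strings, and the prefixes are invented for illustration; real BGP implementations carry this information in a far more involved way.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str         # destination, e.g. "10.1.0.0/24"
    route_target: str   # hypothetical tag naming the virtual topology


def sort_into_topologies(routes):
    """One control plane, many tables: group prefixes by route target."""
    tables = defaultdict(list)
    for route in routes:
        tables[route.route_target].append(route.prefix)
    return dict(tables)


# Made-up sample data: two virtual topologies learned over one control plane.
routes = [
    Route("10.1.0.0/24", "65000:100"),
    Route("10.2.0.0/24", "65000:200"),
    Route("10.1.1.0/24", "65000:100"),
]
tables = sort_into_topologies(routes)
```

In the slice model, by contrast, each slice would run its own instance of this logic rather than sharing one table-sorting process.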

Higher performance for oVirt Python SDK

Python SDK version 4.1.4 introduced support for sending asynchronous requests and HTTP pipelining.

This blog post explains those terms and shows an example of how to use the Python SDK in an asynchronous manner.

Asynchronous Requests

With asynchronous requests, the client sends a request and registers a method (usually called a callback) to be invoked once the response arrives, so the client does not block while waiting for the response. To let the SDK work asynchronously, we introduced two new features: multiple connections and HTTP pipelining.

These features are most valuable when the user wishes to fetch the inventory of the oVirt system, where the time to fetch the inventory may decrease significantly. A comparison of synchronous and asynchronous requests follows.
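The callback idea can be sketched with nothing but the Python standard library. This is not the oVirt SDK API: `fake_request` and `on_response` are hypothetical stand-ins, and a thread pool plays the role of the asynchronous transport.

```python
import time
from concurrent.futures import ThreadPoolExecutor

received = []


def fake_request(name):
    """Stand-in for one HTTP round trip (hypothetical, not an SDK call)."""
    time.sleep(0.05)
    return f"{name}: ok"


def on_response(future):
    """Callback: invoked once the response is available."""
    received.append(future.result())


with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(fake_request, "vms")
    future.add_done_callback(on_response)
    # The client is free to do other work here instead of blocking.
    did_other_work = True
# Leaving the "with" block waits for outstanding requests to finish.
```

The point of the sketch is only the control flow: the request is submitted, the caller moves on, and the callback collects the result later.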

Multiple Connections

Previously, the SDK used a single open connection: it sent requests sequentially, in the order issued by the user program, and always waited for the server's response to each request before sending the next.

In the new version of the SDK, the user can specify how many connections the SDK should open to the server, and the requests created by the user program use those connections in parallel.
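To make the difference concrete, here is a minimal sketch that contrasts the two models using only the standard library. It does not use the oVirt SDK; `fake_request`, the `connections` parameter, and the inventory names are assumptions chosen to mirror the description above.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(name, delay=0.05):
    """Stand-in for one HTTP round trip (hypothetical, not an SDK call)."""
    time.sleep(delay)
    return f"{name}: ok"


def fetch_sequential(names):
    """Single connection: send a request, wait, then send the next."""
    return [fake_request(n) for n in names]


def fetch_parallel(names, connections=4):
    """Several connections: requests are in flight at the same time."""
    with ThreadPoolExecutor(max_workers=connections) as pool:
        # map() returns results in the order the requests were issued.
        return list(pool.map(fake_request, names))


inventory = ["vms", "hosts", "clusters", "networks"]
```

Both functions return the same results in the same order; with four simulated connections the parallel version should take roughly one round trip instead of four, though the exact timing depends on the machine.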

HTTP Continue reading

High Availability for Red Hat Virtualization Manager 4.1

Hi folks, if you missed Red Hat Summit 2017 last week, it was a great time in Boston. As promised, I’m uploading my presentation on HA for RHV-M 4.1 – hosted engine. I’m doing it a little differently this time, though: I took the time this week to actually re-record it, including the demos! This way you get a flavor of how I actually presented it last week. It turned out a little shorter in the re-recording, as it clocked in at about 30 minutes and my session was about 10 minutes longer. But it’s all good. I walk through what hosted engine is, how it compares to standard deployment, why you would care if RHV-M goes down, and how to actually deploy hosted engine.

The embedded demos walk through the deployment of RHVH, the deployment of hosted engine via Cockpit, then a forced failover courtesy of a guest Velociraptor. Ok, not really, I just yanked the power on the underlying host, but watch the demo anyway.

(best viewed in full screen, give it a moment to get in focus..)

One of the things that I really tried to emphasize in both the original presentation and the re-recording Continue reading

Integrating Kibana/Elasticsearch on Top of OpenShift with oVirt Engine SSO

oVirt Engine provides a powerful way to manage users and domains using the oVirt Engine AAA extensions. oVirt Engine supports many different LDAP server types for authentication using the ovirt-engine-extension-aaa-ldap extension and supports managing internal users using the ovirt-engine-extension-aaa-jdbc extension. Clients can use the powerful oVirt Engine user management in their applications by using the OAuth2 or OpenID Connect endpoints provided by oVirt Engine SSO to authenticate users in their applications.

Below are step-by-step instructions on how to integrate Kibana/Elasticsearch on top of OpenShift with oVirt Engine SSO. The instructions should work for any client application that can be configured to use an OAuth2 or OpenID Connect server to authenticate its users.

The goal is to integrate Kibana/Elasticsearch on top of OpenShift with oVirt Engine SSO, so existing engine users can access Kibana/Elasticsearch without reauthentication (we don't need to maintain authentication configuration separately for oVirt Engine and Kibana/Elasticsearch).

The integration requires a fully working and configured oVirt Engine instance on the oVirt Engine host and a fully working and configured instance of Kibana/Elasticsearch on top of OpenShift on the OpenShift host.
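For readers unfamiliar with how a client application hands authentication off to an OAuth2 server, the following sketch builds a standard OAuth2 authorization-code request URL. The endpoint URL, client ID, and redirect URI are placeholders invented for illustration, not values taken from oVirt Engine SSO; only the query parameter names come from the OAuth2 specification.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorization_url(authorize_endpoint, client_id, redirect_uri,
                            scope="openid", state=None):
    """Return (url, state) for an OAuth2 authorization-code request."""
    # "state" lets the client match the redirect back to this request.
    state = state or secrets.token_urlsafe(16)
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
    })
    return f"{authorize_endpoint}?{query}", state


url, state = build_authorization_url(
    "https://engine.example.com/sso/authorize",          # hypothetical endpoint
    client_id="kibana-proxy",                            # hypothetical client ID
    redirect_uri="https://kibana.example.com/callback",  # hypothetical
    state="fixed-state-for-demo",
)
params = parse_qs(urlparse(url).query)
```

The user's browser is sent to this URL, signs in with existing engine credentials, and is redirected back to the client with a code that the client exchanges for a token.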

Installing Kibana/Elasticsearch and OpenShift Backend

Install Kibana/Elasticsearch/OpenShift on CentOS7 or RHEL 7.3 as described in https://github.com/ViaQ/Main/blob/master/README-mux.md

Continue reading

A Warm Welcome to the Summer Internship Students

The oVirt project is glad to announce that five talented students will be joining the oVirt community over the summer period, as part of the 2017 Google Summer of Code (GSoC) and Outreachy internship programs.

Both GSoC and Outreachy focus on getting more student developers interested in open source software development, as well as providing opportunities for talented people, underrepresented in the tech world, to gain valuable technology experience. The students will spend their summer break writing code, learning about open source development and documentation, and earning a stipend.

Joining us as part of GSoC are:

Tasdik Rahman will be working on adding Ansible roles for oVirt-utilities, for easier testing and automated redeployment. His mentor will be Lukas Svaty.

Shubham Dubey will be working on configuring backup storage for oVirt. The idea is to replace the need for a dedicated storage domain for backup and disaster recovery. Shubham's mentor will be Maor Lipchuk.

Joining us as part of Outreachy are:

Anastasia Antsiferova will be working on the oVirt log analyser. Her mentor will be Milan Zamazal.

Leni Kadali will be working on documentation. His mentor will be Jason Brooks.

Valentina Makarova will be working on implementing oVirt integration tests using Continue reading

Technology Short Take #82

Welcome to Technology Short Take #82! This issue is a bit behind schedule; I’ve been pretty heads-down on some projects. That work will come to fruition in a couple weeks, so I should be able to come up for some air soon. In the meantime, here’s a few links and articles for your reading pleasure.

Networking

- Kristian Larsson shows how to validate data using YANG. Practical examples like this have really helped me better understand YANG and its relationship to structured data you might exchange with a device or service.

- There’s lots of talk about applying test-driven development (TDD) principles in various automation contexts, but I like the fact that Ajay Chenampara provides some practical examples in his blog post on applying TDD in network automation using Ansible.

- Matt Oswalt talks about how the combination of NAPALM and StackStorm enables some interesting results, including the ability to verify configuration consistency. StackStorm isn’t something I’ve had the opportunity to learn/use at all, but it’s on my (ever-growing) list of things to check out.

- Aaron Conole provides an overview of using the ovs-dpctl command to “program” the Open vSwitch (OVS) kernel module. It’s a bit geeky, but does provide some insight into Continue reading

Netdev 2.1 conference report

I attended the Netdev 2.1 Conference in Montreal from April 6 to 8. Netdev is a community-driven conference mainly for Linux networking developers and developers whose applications rely on code in the Linux kernel networking subsystem. It focuses very tightly on Linux kernel networking and on how packets are handled through the Linux kernel as they pass between network interfaces and applications running in user space.

In this post, I write about the three-day conference and I offer some commentary on the talks and workshops I attended. I grouped my comments in categories based on my interpretation of each talk’s primary topic. The actual order in which these topics were presented is available in the Netdev 2.1 schedule. The slides from the talks, workshops, and keynotes are posted under each session on the Netdev web site. Videos of the talks are available on the netdevconf Youtube channel.

Keynotes

Each day at the Netdev conference featured a keynote by a prominent member of the Linux networking community. Two of the keynotes covered higher-level views of Linux in the network in the enterprise, cloud, and the Internet of things. The other keynote covered details of the new eXpress Data Path Continue reading

VMware NSX in Redundant L3-only Data Center Fabric

During the Networking in Private and Public Clouds webinar I got an interesting question: “Is it possible to run VMware NSX on redundantly-connected hosts in a pure L3 data center fabric?”

TL&DR: I thought the answer is still No, but after a very helpful discussion with Anthony Burke it seems that it changed to Yes (even though the NSX Design Guide never explicitly says Yes, it’s OK and here’s how you do it).

Read more ...

Up and Running with oVirt 4.1 and Gluster Storage

Last month, the oVirt Project shipped version 4.1 of its open source virtualization management system. With a new release comes an update to this howto for running oVirt together with Gluster storage using a trio of servers to provide for the system's virtualization and storage needs, in a configuration that allows you to take one of the three hosts down at a time without disrupting your running VMs.

If you're looking instead for a simpler, single-machine option for trying out oVirt, your best bet is the oVirt Live ISO. This is a LiveCD image that you can burn onto a blank CD or copy onto a USB stick to boot from and run oVirt. This is probably the fastest way to get up and running, but once you're up, this is definitely a low-performance option, and not suitable for extended use or expansion.

Read on to learn about my favorite way of running oVirt.

oVirt, Glusterized

Prerequisites

Hardware: You’ll need three machines with 16GB or more of RAM and processors with hardware virtualization extensions. Physical machines are best, but you can test oVirt using nested KVM as well. I've written this howto using VMs running on my "real" oVirt+Gluster Continue reading

Say Hello to oVirt 4.1.1

On March 22, the oVirt project released version 4.1.1, available for Red Hat Enterprise Linux 7.3, CentOS Linux 7.3, or similar.

oVirt is the open source virtualization solution that provides an awesome KVM management interface for multi-node virtualization. This maintenance version is super stable and there are some nice new features.

So what's new in oVirt 4.1.1?

Storage Team

- LUNs can be removed from a block data domain, provided that there is enough free space on the other domain devices to contain the data stored on the LUNs being removed.

- Support for NFS version 4.2 connections (when supported by storage).

Integration Team

- oVirt-hosted-engine-setup now works with NetworkManager enabled.

Network Team

- NetworkManager keeps running when a host is added to oVirt. This allows users to review networking configurations in cockpit whenever they want.

Infra Team

- A new tool, engine-vacuum, performs a vacuum on the PostgreSQL database in order to reclaim disk space on the operating system. It also updates and removes garbage from tables.

- Alerts for all data centers and clusters that are not upgraded to the highest compatibility version.

- Time zones are now shown in log records to make it easier to correlate Continue reading

Technology Short Take #81

Welcome to Technology Short Take #81! I have another collection of links, articles, and thoughts about key data center technologies, and hopefully I’ve managed to include something here that will prove useful or thought-provoking. Enjoy!

Networking

- Matt Oswalt has a great post on the need for networking professionals to learn basic scripting skills—something he (rightfully) distinguishes from programming as a full-time occupation. This is a post well worth spending a few minutes to read, and I wholeheartedly agree with Matt’s position here. Further, he follows that up with a post on how automation is more than just configuration management; it’s about network services. (This is a view shared by Ivan Pepelnjak.) Excellent stuff!

- Speaking of Ivan, he pointed out this post with 45 Wireshark challenges to help you improve your network analysis skills. Thanks to Ivan for the tip, and thanks to Johannes Weber for the challenges.

- Jason Edelman also jumps into this discussion on the need for automation/programming/scripting skills with a post that advocates the position of “automate when you can, program when you must”. Jason’s position aligns well with the viewpoints of both Matt and Ivan. I must say—if three well-known networking professionals who are also Continue reading

Q&A: What Is a Hyperconverged Infrastructure?

I’m running a hyperconverged infrastructure event with Mitja Robas on April 6th, and so my friend Christoph Jaggi sent me a list of interesting questions, starting with:

What are hyperconverged infrastructures?

The German version of the interview is published on inside-it.ch.

Read more ...

Technology Short Take #80

Welcome to Technology Short Take #80! This post is a week late (I try to publish these every other Friday), so my apologies for the delay. However, hopefully I’ve managed to gather together some articles with useful information for you. Enjoy!

Networking

- Biruk Mekonnen has an introductory article on using Netmiko for network automation. It’s short and light on details, but it does provide an example snippet of Python code to illustrate what can be done with Netmiko.

- Gabriele Gerbino has a nice write-up about Cisco’s efforts with APIs; his article includes a brief description of YANG data models and a comparison of working with network devices via SSH or via API.

- Giuliano Bertello shares why it’s important to RTFM; or, how he fixed an issue with a Cross-vCenter NSX 6.2 installation caused by duplicate NSX Manager UUIDs.

- Andrius Benokraitis provides a preview of some of the networking features coming soon in Ansible 2.3. From my perspective, Ansible has jumped out in front in the race among tools for network automation; I’m seeing more coverage and more interest in using Ansible for network automation.

- Need to locate duplicate MAC addresses in your environment, possibly caused by cloning Continue reading
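As a rough, hypothetical sketch (not the approach from the linked article, which isn't shown in this excerpt), finding duplicate MAC addresses boils down to grouping machines by normalized MAC and keeping the groups with more than one member. The machine names and addresses below are made up.

```python
from collections import defaultdict


def find_duplicate_macs(inventory):
    """Return {mac: [names]} for MACs used by more than one machine."""
    owners = defaultdict(list)
    for name, mac in inventory:
        # Normalize case and separator so equal addresses compare equal.
        owners[mac.lower().replace("-", ":")].append(name)
    return {mac: names for mac, names in owners.items() if len(names) > 1}


# Made-up sample inventory of (machine name, MAC address) pairs.
inventory = [
    ("web01", "00:50:56:AA:BB:01"),
    ("web02", "00:50:56:aa:bb:02"),
    ("web01-clone", "00-50-56-aa-bb-01"),
]
duplicates = find_duplicate_macs(inventory)
```

How the inventory is collected (from a hypervisor API, switch tables, or ARP caches) is left open here.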

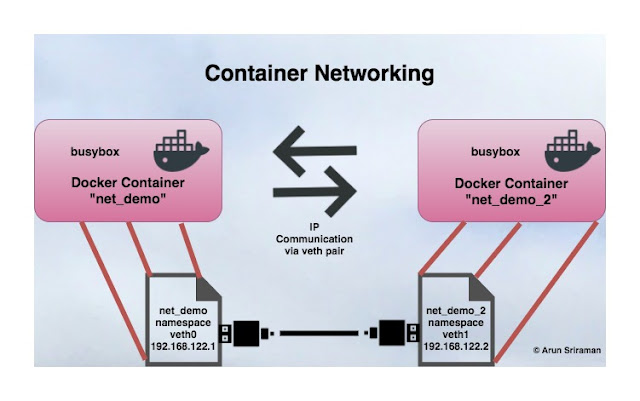

Container Namespaces – How to add networking to a docker container

Today, let's take a deep dive into adding interfaces to a container manually and, in turn, gain some insight into how all of this works. Since this discussion is going to revolve around network namespaces, I assume you have some background in that area. If you are new to the concept of namespaces and network namespaces, I recommend reading this.

Step 1: We will first bring up a docker container without networking. According to the docker docs, passing --network none when running a docker container leaves out container interface creation for that docker instance. Although docker skips network interface creation it brings up the container with Continue reading