Show 398: The Tradeoffs Of Information Hiding In The Control Plane

Today on the Priority Queue, we’re gonna hide some information. Oh, like route summarization? Sure, like route summarization. That’s an example of information hiding. But there’s much more to the story than that.

Our guest is Russ White. Russ is a serial networking book author, network architect, RFC writer, patent holder, technical instructor, and much of the motive force behind the early iterations of the CCDE program.

The latest tome to flow from his keyboard (and mine, actually) is Computer Networking Problems and Solutions, available on Amazon right now. While I wrote or contributed to several of the chapters in this book, Russ did the lion’s share, and we’re going to dive into one of his book chapters, devoted to the topic of information hiding.

We discuss the reasons for information hiding in the control plane, including resource conservation and reducing the failure domain; the pros and cons of dividing a network into multiple failure domains with information hiding; and the criticality of convergence.
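To make the resource-conservation and failure-domain argument concrete, here is a minimal Python sketch (not taken from the book) of a router at a failure-domain edge that advertises a configured summary instead of its component routes. The prefixes and the `advertise_at_edge` helper are hypothetical, and the rule it applies (advertise the summary while at least one covered route exists) is an assumption modeled on typical aggregate or area-range behavior.

```python
import ipaddress

# Hypothetical component routes inside one failure domain (illustrative only).
COMPONENT_ROUTES = [
    ipaddress.ip_network("10.1.0.0/24"),
    ipaddress.ip_network("10.1.1.0/24"),
    ipaddress.ip_network("10.1.2.0/24"),
    ipaddress.ip_network("10.1.3.0/24"),
]

# Hypothetical summary configured at the edge of the failure domain.
SUMMARY = ipaddress.ip_network("10.1.0.0/22")


def advertise_at_edge(internal_routes, summary=SUMMARY):
    """Return what the edge router advertises to the rest of the network.

    Assumed rule: routes covered by the summary are hidden behind it, and the
    summary is advertised as long as at least one covered route exists.
    Routes outside the summary are passed through unchanged.
    """
    covered = [r for r in internal_routes if r.subnet_of(summary)]
    uncovered = [r for r in internal_routes if not r.subnet_of(summary)]
    return ([summary] if covered else []) + uncovered


# The four /24s collapse to exactly the /22, so the summary covers no extra space.
assert list(ipaddress.collapse_addresses(COMPONENT_ROUTES)) == [SUMMARY]

before = advertise_at_edge(COMPONENT_ROUTES)

# Simulate a failure inside the domain: 10.1.2.0/24 disappears.
surviving = [r for r in COMPONENT_ROUTES if r != ipaddress.ip_network("10.1.2.0/24")]
after = advertise_at_edge(surviving)

if __name__ == "__main__":
    print("internal routes:", len(COMPONENT_ROUTES), "advertised:", [str(r) for r in before])
    print("advertisement unchanged after failure:", before == after)
```

Four internal routes become one advertisement, and losing 10.1.2.0/24 doesn’t change what the rest of the network sees, so nothing outside the domain has to react. The flip side is the tradeoff the episode digs into: routers outside the domain keep forwarding traffic for 10.1.2.0/24 toward the edge even though that prefix is gone.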

We also talk about techniques for information hiding, including filtering reachability information and using overlays.
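Filtering reachability information can be sketched the same way. In the hypothetical Python below, the `PrefixListEntry` class and `filter_routes` function are invented for illustration and only loosely modeled on the prefix-list semantics common to router CLIs: entries are checked in order, the first match decides, and anything unmatched is dropped. Here the filter hides every /23-or-longer route inside 10.1.0.0/16 while letting the /22 summary and unrelated routes through.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class PrefixListEntry:
    """One hypothetical prefix-list line: action, prefix, optional ge/le bounds."""
    action: str  # "permit" or "deny"
    prefix: ipaddress.IPv4Network
    ge: Optional[int] = None
    le: Optional[int] = None

    def matches(self, route: ipaddress.IPv4Network) -> bool:
        if not route.subnet_of(self.prefix):
            return False
        # No ge/le means an exact prefix-length match; ge alone extends up to /32.
        low = self.ge if self.ge is not None else self.prefix.prefixlen
        if self.le is not None:
            high = self.le
        elif self.ge is not None:
            high = 32
        else:
            high = self.prefix.prefixlen
        return low <= route.prefixlen <= high


def filter_routes(routes, prefix_list):
    """Keep routes whose first matching entry is 'permit'; unmatched routes are denied."""
    kept = []
    for route in routes:
        for entry in prefix_list:
            if entry.matches(route):
                if entry.action == "permit":
                    kept.append(route)
                break  # first match wins
    return kept


net = ipaddress.ip_network

# Hide anything in 10.1.0.0/16 that is /23 or longer; permit everything else.
HIDE_SPECIFICS = [
    PrefixListEntry("deny", net("10.1.0.0/16"), ge=23),
    PrefixListEntry("permit", net("0.0.0.0/0"), le=32),
]

ROUTES = [net("10.1.0.0/22"), net("10.1.2.0/24"), net("10.1.2.128/25"), net("192.0.2.0/24")]

advertised = filter_routes(ROUTES, HIDE_SPECIFICS)

if __name__ == "__main__":
    print([str(r) for r in advertised])
```

Ordering matters in this design: if the broad permit came first, every route would pass, because evaluation stops at the first matching entry.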

Sponsor: InterOptic

InterOptic offers high-performance, high-quality optics at a fraction of the cost. Find out more Continue reading

How to operationalize Cumulus Linux

Thanks to the limitations of traditional networks, network operators are accustomed to doing everything manually and slowly. But they want to perform configuration, troubleshooting and upgrades faster and with fewer mistakes. They’re ready and willing to learn a new approach, but they want to know what their options are. More importantly, they want to do it right. The good news is, regardless of your organization’s specific goals, you can operationalize Cumulus Linux to meet those objectives faster and more consistently. This post will help you understand your options for developing agile, speed-of-business workflows for:

- Configuration management

- Backup and recovery

- Troubleshooting

And if you’re looking for a deeper, more technical dive into how to implement these network operations, download this white paper.

Configuration management

Automation

The biggest disadvantage of manual configurations is that they simply don’t scale. Implementing BGP across dozens of switches is a copy-and-paste endeavor that’s time-consuming and prone to error. Not only that, checking that the configuration took effect and works as expected requires hop-by-hop verification in addition to testing route propagation and IP connectivity. That said, in a small network, there’s no shame in at least starting out doing everything by hand.
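As a minimal sketch of the alternative to copy-and-paste, the Python below renders one BGP stanza per switch from a single template and a small inventory. The hostnames, ASNs, loopbacks, and swp51/swp52 uplinks are hypothetical, and the FRR-style syntax (BGP unnumbered via `neighbor ... interface remote-as external`) is an assumption to check against the documentation for your Cumulus Linux release. The sketch only generates text; it does not push or verify anything on a switch.

```python
from string import Template

# Hypothetical inventory: hostnames, ASNs, loopbacks and uplink ports are illustrative.
INVENTORY = {
    "leaf01": {"asn": 65101, "loopback": "10.0.0.11", "uplinks": ["swp51", "swp52"]},
    "leaf02": {"asn": 65102, "loopback": "10.0.0.12", "uplinks": ["swp51", "swp52"]},
}

# Assumed FRR-style BGP stanza; one template shared by every switch.
BGP_TEMPLATE = Template(
    "router bgp $asn\n"
    " bgp router-id $loopback\n"
    "$neighbors"
    " address-family ipv4 unicast\n"
    "  network $loopback/32\n"
    " exit-address-family\n"
)


def render_bgp(host, inventory=INVENTORY):
    """Fill the shared template with one switch's values from the inventory."""
    params = inventory[host]
    neighbors = "".join(
        f" neighbor {port} interface remote-as external\n" for port in params["uplinks"]
    )
    return BGP_TEMPLATE.substitute(
        asn=params["asn"], loopback=params["loopback"], neighbors=neighbors
    )


def render_all(inventory=INVENTORY):
    """Render a config for every switch in the inventory."""
    return {host: render_bgp(host, inventory) for host in inventory}


if __name__ == "__main__":
    for host, config in render_all().items():
        print(f"! {host}")
        print(config)
```

Adding a switch then means adding one inventory entry rather than editing another pasted block; confirming that the sessions actually came up remains a separate step.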

Cumulus Linux lets you use a Continue reading

Rough Guide to IETF 102: DNSSEC, DNS Security and Privacy

DNS privacy will receive a large focus in the latter half of the IETF 102 week with attention in the DPRIVE, DNSSD, and OPSEC working groups. In an interesting bit of scheduling (which is always challenging), most of the DNS sessions are Wednesday through Friday. As part of our Rough Guide to IETF 102, here’s a quick view on what’s happening in the world of DNS.

Given that IETF 102 is in Montreal, Canada, all times below are Eastern Daylight Time (EDT), which is UTC-4.
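For remote participants, converting the EDT session times to UTC is a fixed four-hour shift. Here is a small Python sketch using a fixed UTC-4 offset, so it doesn’t depend on a time zone database; the `edt_to_utc` helper is hypothetical, and the Wednesday date in the example is an assumption for illustration.

```python
from datetime import datetime, timedelta, timezone

# EDT is UTC-4, as stated in the guide; a fixed offset avoids needing tz data.
EDT = timezone(timedelta(hours=-4), "EDT")


def edt_to_utc(year, month, day, hour, minute=0):
    """Interpret a wall-clock time as EDT and return the same instant in UTC."""
    local = datetime(year, month, day, hour, minute, tzinfo=EDT)
    return local.astimezone(timezone.utc)


# DNSOP runs 9:30am to 12noon EDT; 2018-07-18 is an assumed date for illustration.
start = edt_to_utc(2018, 7, 18, 9, 30)
end = edt_to_utc(2018, 7, 18, 12)

if __name__ == "__main__":
    print(f"DNSOP: {start:%H:%M} - {end:%H:%M} UTC")
```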

IETF 102 Hackathon

The “DNS team” has become a regular feature of the IETF Hackathons and the Montreal meeting is no different. The IETF 102 Hackathon wiki outlines the work that will start tomorrow (scroll down to see it). Major security/privacy projects include:

- Implementing a part of draft-bortzmeyer-dprive-resolver-to-auth

- Setting up and measuring leakage avoidance using root loopback at privacy server

- Oblivious DNS

- Proof of concept of the Multi-Provider DNSSEC draft.

Anyone is welcome to join the DNS team for part or all of that event.

DNS Operations (DNSOP)

The DNS sessions at IETF 102 start on Wednesday morning from 9:30am – 12noon with the DNS Operations (DNSOP) Working Group. Paul Wouters and Ondrej Sury Continue reading

Security Vendor Fortinet Sells SD-WAN Directly to Customers

According to Gartner research, there are more than 60 SD-WAN vendors, and 90 percent of them offer little or no security. Fortinet’s SD-WAN has a single controller to manage both the security and the other SD-WAN features.

Datadog Deepens Algorithmic and Machine Learning With Watchdog Capability

Watchdog uses algorithms and machine learning to automatically look at all the data sent by an enterprise’s infrastructure and applications.

SDxCentral’s Weekly Roundup — July 13, 2018

The U.S. Justice Department appeals the AT&T-Time Warner merger; Tintri files for bankruptcy in the U.S., shutters in Europe; Ericsson divests Swedish field services operation.

Bug Bounties Jump 33% With $11.7M Paid to Hackers Last Year

Some companies and governments are now offering as much as $250,000 to find and fix security flaws, according to the Hacker-Powered Security Report.

Intel Buys a Programmable Chip Company to Prepare for 5G

Programmable chips will play a key role in 5G networks because complex processing will be necessary in the baseband and the base station.

Microsoft’s Container Strategy Continues To Evolve

Containers have been getting a lot of attention in the enterprise over the past several years, thanks to their role as an enabling technology for greater operational agility, especially in developing customer-facing applications and getting the code into production faster. …

Microsoft’s Container Strategy Continues To Evolve was written by Daniel Robinson.

Stuff The Internet Says On Scalability For July 13th, 2018

Hey, it's HighScalability time:

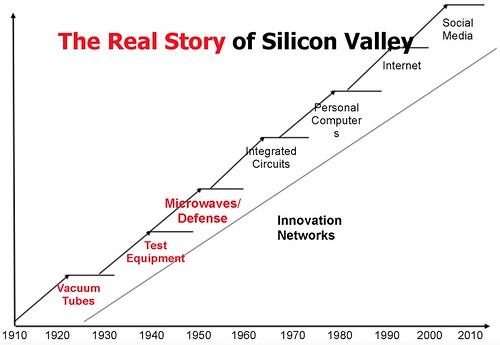

Steve Blank tells the Secret History of Silicon Valley. What a long, strange trip it is.

Do you like this sort of Stuff? Please lend me your support on Patreon. It would mean a great deal to me. And if you know anyone looking for a simple book that uses lots of pictures and lots of examples to explain the cloud, then please recommend my new book: Explain the Cloud Like I'm 10. They'll love you even more.

- $27 billion: CapEx invested by leading cloud vendors in the first quarter of 2018; $40 billion: App store revenue in 10 years; $57.5 billion: venture investment in the first half of 2018; 1 billion: hack attempts per day on Utah's voting system; 67%: did not deploy a serverless app last year; $1.8 billion: made by Pokémon GO; $13 billion: Netflix's new content budget;

- Quotable Quotes:

- @davidbrunelle: The best developers and engineering leaders I've personally worked with do *not* have a notable presence on GitHub or public bodies of speaking or writing work. I worry that a lot of folks confuse celebrity and visibility with talent and ability.

- Bernard Continue reading

We’ve Added a New Machine Learning Course to Our Video Library!

This is a two-and-a-half-hour introductory course in Machine Learning. It’s taught by Yogesh Kulkarni, a practitioner and instructor in the field of Machine Learning.

Why You Should Study This Topic:

Machine Learning is getting more popular each day. It is not just hype, but an essential technique made possible and powerful by the availability of data. Studying Machine Learning is imperative, and Python is a good programming environment to get started with the basics. This workshop will not only familiarize you with these powerful and popular techniques, but will also give you the confidence to venture into this on your own, thereby improving your chances of a lucrative career ahead.

Who Should Watch:

This course is for anyone who wants to become more familiar with Machine Learning. Some knowledge of college-level mathematics and of programming in Python is recommended. You can come from any domain, such as finance, engineering, agriculture, or biology; you will learn a new problem-solving technique that could be of great help in your own domain.

What You’ll Learn:

You will learn what Artificial Intelligence is, how it relates to Machine Learning and Deep Learning, what’s the core idea Continue reading

[Sponsored] Short Take – Cumulus Networks

In this sponsored Network Collective Short Take, Pete Lumbis joins us to talk about how Cumulus Networks is offering a new disaggregated approach to network operations. There are some common concerns when it comes to a disaggregated model and in this conversation we focus on how Cumulus is making support, operations, and procurement fit within the models and experiences you are used to. All of this while still empowering engineers to take advantage of the power and flexibility that Cumulus Linux has to offer. For more information about Cumulus Linux, visit https://cumulusnetworks.com

The post [Sponsored] Short Take – Cumulus Networks appeared first on Network Collective.

Independence, Impartiality, and Perspective

In case you haven’t noticed recently, there are a lot of people that have been going to work for vendors and manufacturers of computer equipment. Microsoft has scored more than a few of them, along with Cohesity, Rubrik, and many others. This is something that I see frequently from my position at Tech Field Day. We typically hear the rumblings of a person looking to move on to a different position early on because we talk to a great number of companies. We also hear about it because it represents a big shift for those who are potential delegates for our events. Going to a vendor means losing their independence. But what does that really mean?

Undeclaring Independence

When people go to work for a manufacturer of a computing product, they necessarily lose their independence. But that’s not the only case where that happens. You also can’t be truly independent if you work for a reseller. If your company specializes in Cisco and EMC, are you truly independent when discussing Juniper and NetApp? If you make your money by selling one group of products, you’re going to be unconsciously biased toward them. If you’ve been burned or had Continue reading