IPv6 Primer for Deployments

This is a liveblog of the OpenStack Summit Sydney session titled “IPv6 Primer for Deployments”, led by Trent Lloyd from Canonical. IPv6 is a topic with which I know I need to get more familiar, so attending this session seemed like a reasonable approach.

Lloyd starts with some history. IPv4 was released in 1980 and uses 32-bit addresses, for a total address space of around 4 billion. IPv4, as most people know, still carries the majority of Internet traffic. IPv6 was released in 1998 and uses 128-bit addresses, for a theoretical total address space of about 3.4 × 10^38. IPv5 was an experimental protocol, which is why the IETF used IPv6 as the version number for the next production version of the Internet Protocol.
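The address-space figures above follow directly from the address widths: 2^32 for IPv4 and 2^128 for IPv6. As a quick sanity check, here is a minimal sketch that assumes Python 3 and its standard-library `ipaddress` module, treating the whole address range of each protocol as a single /0 network and counting its addresses.

```python
import ipaddress

# The entire address range of each protocol is a single /0 network.
ipv4_space = ipaddress.ip_network("0.0.0.0/0").num_addresses
ipv6_space = ipaddress.ip_network("::/0").num_addresses

# These counts match the address widths: 32 bits for IPv4, 128 bits for IPv6.
assert ipv4_space == 2 ** 32
assert ipv6_space == 2 ** 128

print(f"IPv4: {ipv4_space:,} addresses")    # 4,294,967,296 (around 4 billion)
print(f"IPv6: {ipv6_space:.2e} addresses")  # 3.40e+38
```

The size of the IPv6 number is why address exhaustion isn't expected to be a practical concern for IPv6 in the way it has been for IPv4.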

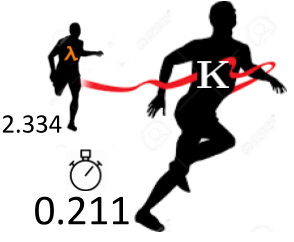

Lloyd shows a graph of IPv4 address space depletion to help attendees better understand the state of IPv4 address allocation. The next graph illustrates IPv6 adoption, which, according to Google, is now running at around 20%. (Lloyd shared that he had naively estimated IPv4 would be deprecated by 2010.) According to Lloyd, it's still fairly difficult to get IPv6 support in Australia.

Next, Lloyd reviews decimal and …
