Practical Python For Networking: 5.3 Code Refactoring – Second Example – Video

This lesson walks through the second example of code refactoring. Course files are in a GitHub repository (https://github.com/ericchou1/pp_practical_lessons_1_route_alerts). Eric Chou is a network engineer with 20 years of experience, including managing networks at Amazon AWS and Microsoft Azure. He’s the founder of Network Automation Nerds and has written the books Mastering Python Networking and Distributed […]

The post Practical Python For Networking: 5.3 Code Refactoring – Second Example – Video appeared first on Packet Pushers.

NCSA Delta Supercomputer Adopts Slingshot But Forgoes Cray “Shasta” Design

When it comes to supercomputing in academia, the cost of a cluster is almost always an issue and this, coupled with the desire to drive as much compute as possible, drives architectural choices. …

NCSA Delta Supercomputer Adopts Slingshot But Forgoes Cray “Shasta” Design was written by Dylan Martin at The Next Platform.

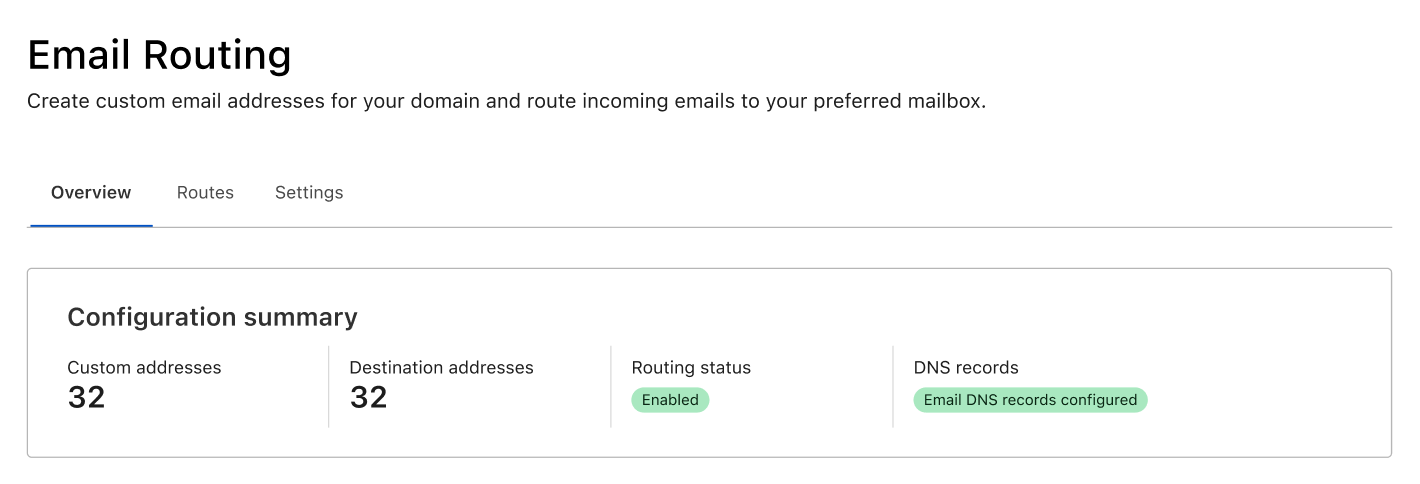

Email Routing Insights

Have you ever wanted to try a new email service but worried it might lead to you missing any emails? If you have, you’re definitely not alone. Some of us email ourselves to make sure it reaches the correct destination, others don’t rely on a new address for anything serious until they’ve seen it work for a few days. In any case, emails often contain important information, and we need to trust that our emails won’t get lost for any reason.

To help reduce these worries about whether emails are being received and forwarded - and for troubleshooting if needed - we are rolling out a new Overview page to Email Routing. On the Overview tab people now have full visibility into our service and can see exactly how we are routing emails on their behalf.

Routing Status and Metrics

The first thing you will see in the new tab is an at-a-glance view of the service. This includes the routing status (to know if the service is configured and running), whether the necessary DNS records are configured correctly, and the number of custom and destination addresses on the zone.

Below the configuration summary, you will see more Continue reading

Event-driven remediation with systemd and Red Hat Ansible Automation Platform

Over the many years of working as an engineer and architect with a particular interest in storage, I have learned that donuts and energy drinks can really bring you some joy in trying situations. When it seems that your infrastructure is on fire and you need an exorcist to help you find the ghost in the machine, a humble box of glazed donuts can give you and your team a much-needed break and allow you to refocus.

Now, the issue with this habit is that it might help you in the moment, but over time this can become a real health issue. Configuration drift, technical issues, and technical debt can all have similar effects on your health, increasing your heart rate and causing sleepless nights. Red Hat Ansible Automation Platform can assist you here with not only keeping your infrastructure in check, but also giving your teams the peace of mind that systems are running as they should.

Being able to schedule compliance checks on your systems with Ansible Automation Platform enables you to preserve configuration and system states, and keep them running the way you prefer. But sometimes this is not proactive enough. What if you have Continue reading

Viewing a Certificate Using OpenSSL

I have started taking Ed Harmoush’s Practical TLS course to learn more about TLS and certificates. When learning about TLS, you want to inspect different certificates to see the various fields and see how different organizations use certificates differently. As always, Linux comes with a great set of tools to work with certificates in the form of OpenSSL. In this post, I will show how to download a certificate and discuss some of the fields that are present in the certificate.

To get the certificate, we will use openssl with s_client and connect to a web site. I’m using twitter.com in this example:

    openssl s_client -connect twitter.com:443
    CONNECTED(00000003)
    depth=2 C = US, O = DigiCert Inc, OU = www.digicert.com, CN = DigiCert Global Root CA
    verify return:1
    depth=1 C = US, O = DigiCert Inc, CN = DigiCert TLS Hybrid ECC SHA384 2020 CA1
    verify return:1
    depth=0 C = US, ST = California, L = San Francisco, O = "Twitter, Inc.", CN = twitter.com
    verify return:1
    ---
    Certificate chain
     0 s:C = US, ST = California, L = San Francisco, O = "Twitter, Inc.", CN = twitter.com
       i:C = US, O = Continue reading
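For readers who would rather script this than read raw `s_client` output, here is a minimal Python sketch of the same idea using only the standard library. The helper names (`flatten_name`, `summarize_cert`, `fetch_cert`) are my own and not part of the original post; the sketch assumes the server presents a certificate that validates against the system trust store, and it only pulls out a few of the fields discussed above.

```python
import socket
import ssl


def flatten_name(name):
    """Turn the nested RDN tuples returned by getpeercert() into a flat dict."""
    return {key: value for rdn in name for key, value in rdn}


def summarize_cert(cert):
    """Pick a few commonly inspected fields out of a getpeercert() dict."""
    subject = flatten_name(cert.get("subject", ()))
    issuer = flatten_name(cert.get("issuer", ()))
    return {
        "subject_cn": subject.get("commonName"),
        "issuer_cn": issuer.get("commonName"),
        "not_after": cert.get("notAfter"),
    }


def fetch_cert(host, port=443, timeout=5.0):
    """Connect with TLS and return the validated peer certificate as a dict."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


if __name__ == "__main__":
    # Needs network access; the host mirrors the example in the text.
    print(summarize_cert(fetch_cert("twitter.com")))
```

Note that `getpeercert()` only returns the decoded fields when the certificate has been validated, so this is not a replacement for `openssl` when you want to look at a broken or self-signed certificate.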

netlab Multi-Platform Custom Configuration Templates

In "Building a BGP Anycast Lab" I described how you could use custom configuration templates to extend the netlab functionality.

That example used Cisco IOS… but what if you want to test the same functionality on multiple platforms? netlab provides a nice trick: the custom configuration template could point to a directory with platform-specific templates. Let me show you how that works…
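The lookup described above can be sketched roughly like this. This is not netlab's actual code: the function name `resolve_template` and the `<platform>.j2` naming inside the directory are assumptions made for illustration only.

```python
from pathlib import Path


def resolve_template(template, platform):
    """Return the template file to use for one device platform.

    Assumed (hypothetical) layout: `template` is either a single file used
    for every platform, or a directory with one `<platform>.j2` file each.
    """
    path = Path(template)
    if path.is_dir():
        candidate = path / f"{platform}.j2"
        if not candidate.is_file():
            raise FileNotFoundError(
                f"no template for platform {platform!r} in {path}"
            )
        return candidate
    # A plain file is used as-is, regardless of platform.
    return path
```

With a directory holding `ios.j2` and `eos.j2`, `resolve_template(directory, "eos")` would pick `eos.j2`, while a platform without its own file fails loudly instead of silently getting another platform's configuration.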

Multi-Platform Custom Configuration Templates in netsim-tools

In "Building a BGP Anycast Lab" I described how you could use custom configuration templates to extend the functionality of netsim-tools.

That example used Cisco IOS… but what if you want to test the same functionality on multiple platforms? netsim-tools provides a nice trick: the custom configuration template could point to a directory with platform-specific templates. Let me show you how that works…

Wanted: A Complete – And Heavily Customizable – HPC Software Stack

Sponsored Feature. There are a lot of things that the HPC centers and hyperscalers of the world have in common, and one of them is their attitudes about software. …

Wanted: A Complete – And Heavily Customizable – HPC Software Stack was written by Timothy Prickett Morgan at The Next Platform.

OMG: Hop-by-Hop Path MTU Discovery

Straight from the “Bad Ideas Never Die” (see also RFC 1925 Rule 11) department: Geoff Huston described a proposal to use hop-by-hop IPv6 extension headers to implement Path MTU Discovery. In his words:

It is a rare situation when you can create an outcome from two somewhat broken technologies where the outcome is not also broken.

IETF should put rules in place similar to the ones used by the patent office (Thou Shalt Not Patent Perpetual Motion Machine), but unfortunately we’re way past that point. Back to Geoff:

It appears that the IETF has decided that volume is far easier to achieve than quality. These days, what the IETF is generating as RFCs is pretty much what the IETF accused the OSI folk of producing back then: Nothing more than voluminous paperware about vapourware!
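To make the mechanism concrete, here is a toy Python model of the general idea, assuming the proposal works by carrying a "minimum MTU seen so far" field in a hop-by-hop option that every router along the path is expected to lower. The function name and the `(link_mtu, processes_option)` hop tuples are mine, not from the proposal, and the model also assumes that a router which ignores the option simply forwards the packet with the field untouched.

```python
def record_min_mtu(source_mtu, hops):
    """Simulate a min-MTU field updated hop by hop.

    `hops` is a list of (link_mtu, processes_option) tuples. A hop that
    does not process the option leaves the recorded value unchanged.
    """
    recorded = source_mtu
    for link_mtu, processes_option in hops:
        if processes_option:
            recorded = min(recorded, link_mtu)
    return recorded


# Third hop has the smallest MTU but ignores the hop-by-hop option.
path = [(9000, True), (1500, True), (1400, False), (1500, True)]
true_path_mtu = min(mtu for mtu, _ in path)   # 1400
reported = record_min_mtu(9000, path)         # 1500
```

In this model the answer is only as good as the least cooperative router on the path: one hop that skips the option is enough to make the reported value larger than the real path MTU.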

Heavy Networking 627: Network Automation As A Business Culture

The business benefits of network automation are sometimes lost in discussion about technology and tools. Guest Tim Fiola joins this episode of Heavy Networking to discuss how to engage the business at a cultural level so that network automation is properly embraced and supported by management.

The post Heavy Networking 627: Network Automation As A Business Culture appeared first on Packet Pushers.

BGP AS Override Feature Explained in 2022

BGP AS Override needs to be understood well in order to understand BGP loop prevention behavior. This post explains why BGP AS Override might create a dangerous situation and what the alternatives to BGP AS Override are.

What is BGP AS Override

The BGP AS Override feature is used to change the AS number or numbers in the AS Path attribute. Let’s see what would happen without BGP AS Override.

In this topology, the customer BGP AS is AS 100. The customer has two locations.

The Service Provider, in the middle, is, let’s say, providing an MPLS VPN service for the customer.

As you can see from the topology, the Service Provider is running EBGP with the customer, because they have different BGP Autonomous Systems.

The Service Provider in the above topology has BGP AS 200.

When the left customer router advertises a BGP update message to R2, R2 sends it to R3, and when R3 sends it to R4, R4 wouldn’t accept the BGP update.

When R4 receives that update, it checks the AS Path attribute and sees its own BGP AS number in the AS Path.

Thus the update is rejected by default, due to EBGP loop prevention.

If the router sees its Continue reading
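The behavior described above can be modeled in a few lines of Python. This is a simplified sketch, not router code: the function names are made up for illustration, the AS Path is just a list of AS numbers, and AS Override is modeled as the provider edge replacing the neighbor's AS with its own before prepending, which is how the feature is commonly described.

```python
def ebgp_accepts(local_as, as_path):
    """EBGP loop prevention: reject an update whose AS Path contains our own AS."""
    return local_as not in as_path


def advertise(as_path, sender_as, neighbor_as, as_override=False):
    """Return the AS Path an EBGP neighbor receives from `sender_as`.

    With as_override, every occurrence of the neighbor's AS in the path is
    replaced by the sender's AS before the sender prepends itself.
    """
    if as_override:
        as_path = [sender_as if asn == neighbor_as else asn for asn in as_path]
    return [sender_as] + as_path


CUSTOMER_AS, PROVIDER_AS = 100, 200

# Left customer router originates the route and sends it to R2.
from_customer = advertise([], CUSTOMER_AS, PROVIDER_AS)            # [100]

# R3 sends it to R4 (also AS 100) without and with AS Override.
plain = advertise(from_customer, PROVIDER_AS, CUSTOMER_AS)          # [200, 100]
overridden = advertise(from_customer, PROVIDER_AS, CUSTOMER_AS,
                       as_override=True)                            # [200, 200]

print(ebgp_accepts(CUSTOMER_AS, plain))        # False: R4 sees AS 100 in the path
print(ebgp_accepts(CUSTOMER_AS, overridden))   # True: AS 100 no longer appears
```

The second result also hints at why the feature can be dangerous: once AS 100 is rewritten, R4 can no longer use the AS Path to detect that a route originally came from its own AS.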

Ease of Use or Ease of Repair

Have you tried to repair a mobile device recently? Like an iPad or a MacBook? The odds are good you’ve never even tried to take one apart, let alone put in replacement parts. Devices like these are notoriously hard to repair because they aren’t designed to be fixed by a normal person.

I’ve recently wondered why it’s so hard to repair things like this. I can recall opening up my old Tandy Sensation computer to add a Sound Blaster card and some more RAM back in the 90s but there’s no way I could do that today, even if the devices were designed to allow that to happen. In my thinking, I realized why that might be.

Build to Rebuild

When you look at the way that car engine bays were designed in the 80s and 90s you might be surprised to see lots of wasted space. There’s room to practically crawl in beside the engine and take a nap. Why is that? Why waste all that space? Well, if you’re a mechanic that wants to get up close and personal with some part of the engine you want all the space you can find. You’d rather waste a Continue reading

On Securing BGP

The US Federal Communications Commission recently asked for comments on securing Internet routing. While I worked on the responses offered by various organizations, I also put in my own response as an individual, which I’ve included below.

I am not providing this answer as a representative of any organization, but rather as an individual with long experience in the global standards and operations communities surrounding the Internet, and with long experience in routing and routing security.

I completely agree with the Notice of Inquiry that “networks are essential to the daily functioning of critical infrastructure [yet they] can be vulnerable to attack” due to insecurities in the BGP protocol. While proposed solutions exist that would increase the security of the BGP routing system, only some of these mechanisms are being widely deployed. This response will consider some of the reasons existing proposals are not deployed and suggest some avenues the Commission might explore to aid the community in developing and deploying solutions.

9: Measuring BGP Security.

At this point, I only know of the systems mentioned in the query for measuring BGP routing security incidents. There have been attempts to build other systems, but none of these systems have been Continue reading