Ops Work vs Project Work

There’s a constant tension between delivering new services and running existing services well. How do you decide how to prioritise between Operations tasks and Project work? Skewing too far either way leads to problems. Maybe the answer is in how we structure Operations tasks?

Definitions

- Operations work: Dealing with outages, trouble tickets, support requests, etc. System monitoring – reviewing data for capacity planning, and identifying new areas to monitor. Automating repetitive tasks. Patches, upgrades, minor changes to existing services. Accountants would call this work OpEx.

- Project work: Design, testing and deployment of new services. Major upgrades or enhancements to existing services. This is usually classified as CapEx. For some businesses, this work is customer-billable.

What happens when you’re imbalanced?

- Too much Project work: If you’re flat out deploying new systems (and dealing with the fallout), it’s easy to let Operations work slip. Maybe you don’t get around to automating that log rotation script, or paying attention to the slope of that consumption graph. It’s OK for a while, too…things seem to be trucking along. But then you start having outages due to simple things like logs filling directories, or you hit a capacity limit, and there’s a 6-week […]
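Paying attention to “the slope of that consumption graph” boils down to a linear extrapolation: remaining headroom divided by the growth rate gives the time left before you hit the limit. A minimal POSIX sh sketch, assuming you already have current usage, total capacity and an average daily growth figure as whole gigabytes; the function name and its inputs are hypothetical, not taken from any particular monitoring tool:

```shell
#!/bin/sh
# Hypothetical helper: estimate whole days until a volume fills up,
# assuming usage keeps growing at a constant (linear) daily rate.
# Arguments: used_gb capacity_gb growth_gb_per_day (all integers).
days_until_full() (
  used=$1 capacity=$2 growth=$3   # subshell body keeps these variables local

  if [ "$used" -ge "$capacity" ]; then
    echo 0          # already at or over capacity: no headroom left
  elif [ "$growth" -le 0 ]; then
    echo never      # flat or shrinking usage never hits the limit on this model
  else
    # Integer division rounds down, so the estimate errs on the early side.
    echo $(( (capacity - used) / growth ))
  fi
)

days_until_full 400 500 5   # prints 20
```

Real consumption is rarely perfectly linear, so treat the result as an early warning to schedule the work, not a deadline.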

Network Break 21

IT Talent Shortage and Whiny CIOs, Podcasts Make Money, ACI vs NSX wobbles and Dell busts some moves at its conference.

The post Network Break 21 appeared first on Packet Pushers Podcast and was written by Greg Ferro.

Infuriating Inconsistent Interfaces; F5 on the stand.

OK, it’s another f5 post and if you’re not using f5 you might think this is irrelevant to you. However, I beg you to read on because the issue I’m describing today has a relationship to SDN and network automation, and why they are such a pain to do in so many cases.

f5 SSL Profiles

The day began simply enough: news had broken about the “POODLE” SSLv3 vulnerability, and like most network and server nerds, we needed to disable or block SSLv3 as quickly as possible to remove that particular attack vector. My job was to look at the f5 load balancers, and to do so I realized that I needed to understand which SSL configurations we had out there, and I’d also need to determine the exact change I would be making.

I wrote a couple of scripts to analyze our f5 configurations, and soon enough I had a spreadsheet showing all the SSL client profiles that were in use on each load balancer. It’s important, at this point, to understand how the f5 configures SSL profiles. Fundamentally, a custom profile inherits all of its settings from a “parent” profile, unless you specifically choose to […]
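The inheritance rule is what makes an audit like this fiddly: to know which value a profile really uses, you have to walk up its parent chain until you find a profile that sets that value explicitly. A minimal POSIX sh sketch of that lookup; the profile names, the parent tree and the cipher strings are hypothetical examples, not the author's configuration and not f5's tmsh syntax:

```shell
#!/bin/sh
# Hypothetical profile tree, for illustration only (not real f5/tmsh syntax):
#   clientssl -> custom_web -> custom_web_strict
#   clientssl -> custom_legacy
parent_of() {
  case $1 in
    custom_web|custom_legacy) echo clientssl ;;
    custom_web_strict)        echo custom_web ;;
    *)                        echo "" ;;   # clientssl is the root: no parent
  esac
}

# Values a profile sets explicitly; returns non-zero if it does not set the key.
explicit_setting() {
  case "$1/$2" in
    clientssl/ciphers)  echo 'DEFAULT' ;;
    custom_web/ciphers) echo 'DEFAULT:!SSLv3' ;;
    *)                  return 1 ;;
  esac
}

# Walk up the parent chain until some profile sets the key explicitly.
# The subshell body keeps profile/key/value local; exit leaves only that subshell.
effective_setting() (
  profile=$1 key=$2
  while [ -n "$profile" ]; do
    if value=$(explicit_setting "$profile" "$key"); then
      echo "$value"
      exit 0
    fi
    profile=$(parent_of "$profile")
  done
  exit 1    # nothing in the chain sets this key
)

for p in custom_web custom_web_strict custom_legacy; do
  printf '%s: %s\n' "$p" "$(effective_setting "$p" ciphers)"
done
# custom_web: DEFAULT:!SSLv3
# custom_web_strict: DEFAULT:!SSLv3
# custom_legacy: DEFAULT
```

The point of the sketch is that a child profile which never overrides a value silently follows whatever its parent uses, so where you make the change (parent or child) determines how many profiles it affects.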

Reinventing the wheel (or RFC 1925 sect 2.11)

Simon Wardley is another old-timer with low tolerance for people reinventing the broken wheels. I couldn’t resist sharing part of his blog post because it applies equally well to what we’re seeing in the SDN world:

No, I haven't read Gartner's recent research on this subject (I'm not a subscriber) and it seems weird to be reading "research" about stuff you've done in practice a decade ago (sounds familiar). Maybe they've found some magic juice? Experience however dictates that it'll be snake oil […]. I feel like the old car mechanic listening to the kid saying that his magic pill turns water into gas. I'm sure it doesn't ... maybe this time it will ... duh, suckered again.

Meanwhile the academics already talk about SDN 2.0.

Meeting Rules

Years ago a wise engineer gave me these rules for meetings:

- Never go into a meeting unless you know what the outcome will be.

- Plan to leave the meeting with less work than when you went in.

Stick to those rules, and you’ll do well.

OK, so maybe the second rule’s not so serious, but the first one has a grain of truth. You don’t need to know exactly what the decision should be, but you should be clear about what you want to get decided. If it’s particularly important, you should have already discussed it with the key attendees, and you should know what they’re thinking. You don’t want any surprises.

Too many meetings have no clear purpose, or they can only agree that ‘a decision needs to be made…pending further research.’ Avoid those sorts of meetings. Otherwise it ends up like…well…Every Meeting Ever:

Facebook Altoona Network Diagram in 2-D

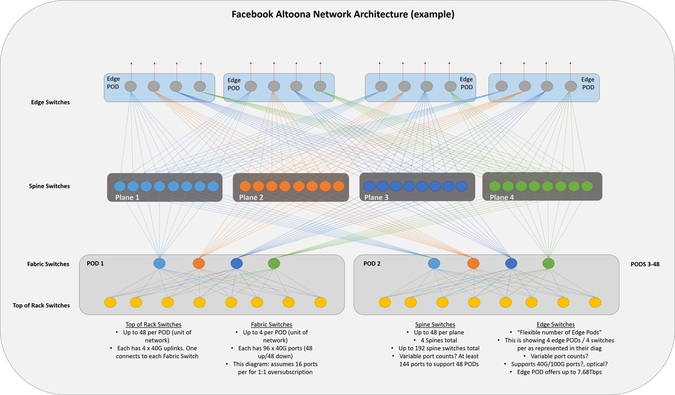

Facebook recently wrote about the network architecture they are using in their new Altoona data center facility. If you haven’t read through their article yet, it’s definitely worth the read.

They have a few diagrams that outline the architecture. One of them is in 3-D. 3-D diagrams are always more difficult for my brain to conceptualize (maybe it’s just me), so I re-drew it in a more typical 2-D fashion.

There aren’t details on the quantity and speed of ports for the spine and edge switches, so I represented them similarly to how they appear in their diagram. For every downward-facing port a spine switch has (across the plane), roughly 2,300 servers can be added. That’s the number of servers per pod: 48 servers per rack × 48 racks per pod = 2,304 servers. Based on the switch types being used, this number could be higher, but I’m using the characteristics of 48 x 10G ports for host-facing ports + 4 x 40G ports for uplinks.
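The pod arithmetic is easy to check, and the same port assumptions also give the rack switch's oversubscription ratio. A small POSIX sh sketch using only the numbers stated above (48 servers per rack, 48 racks per pod, 48 × 10G down and 4 × 40G up per rack switch); these are the author's working assumptions, not confirmed Facebook specifications:

```shell
#!/bin/sh
# Pod sizing from the figures assumed in the text.
racks_per_pod=48
servers_per_rack=48

# Rack switch port profile assumed in the text:
# 48 x 10G host-facing ports, 4 x 40G uplinks.
down_ports=48 down_gbps=10
up_ports=4    up_gbps=40

servers_per_pod=$(( racks_per_pod * servers_per_rack ))
down_capacity=$(( down_ports * down_gbps ))   # Gbps toward servers
up_capacity=$(( up_ports * up_gbps ))         # Gbps toward the fabric

echo "servers per pod: $servers_per_pod"
echo "rack switch: ${down_capacity}G down / ${up_capacity}G up"
# awk does the division so non-integer ratios print correctly too.
awk -v d="$down_capacity" -v u="$up_capacity" \
  'BEGIN { printf "oversubscription: %.1f:1\n", d / u }'
```

With these inputs it prints 2304 servers per pod, 480G down versus 160G up, and a 3.0:1 oversubscription at the rack switch. Change the port counts to match the actual hardware if you know it.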

My diagram depicts 8 racks in the POD and 8 switches per plane. These numbers would be 48 in a fully built out network/diagram.

Feel free to comment and correct anything I may […]

GNS3 – ASAv and XRv and IOU and XEv

I am able to run ASAv, XRv, IOU and XEv on my laptop, with OSPF neighbor relationships formed between them. I then pinged each loopback from the ASA, and also pinged each loopback from IOU. This test shows:

- One-way broadcast and one-way unicast are working - ARP

- Unicast is working - ICMP

- Multicast is working - OSPF
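The three checks line up with the three ways an Ethernet frame can be addressed: ARP requests go to the broadcast MAC address, ICMP echo to a neighbor is plain unicast, and OSPF hellos go to 224.0.0.5, which maps to the multicast MAC 01:00:5e:00:00:05. A minimal POSIX sh sketch of that classification, which you might use when reading a packet capture from the lab; the function name and the example unicast MAC are hypothetical:

```shell
#!/bin/sh
# Classify a destination MAC address (colon-separated hex) by delivery type.
classify_mac() (
  mac=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')   # normalise case
  if [ "$mac" = "ff:ff:ff:ff:ff:ff" ]; then
    echo broadcast
  else
    first=${mac%%:*}   # first octet, e.g. "01"
    # The I/G bit (least significant bit of the first octet) marks group addresses.
    if [ $(( 0x$first & 1 )) -eq 1 ]; then
      echo multicast
    else
      echo unicast
    fi
  fi
)

classify_mac ff:ff:ff:ff:ff:ff   # ARP request: prints broadcast
classify_mac 01:00:5e:00:00:05   # OSPF hello to 224.0.0.5: prints multicast
classify_mac 00:0c:29:12:34:56   # example unicast MAC (ICMP echo): prints unicast
```

Broadcast is checked first because the all-ones address also has the I/G bit set.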

All thanks to GNS3 v1.1. Isn't it great?

Here is the topology:

And here are some show commands from the ASA:

GNS3 integration with VirtualBox is very useful. Whatever you can run inside VirtualBox, you can connect to anything else, with endless possibilities.

My System76 laptop is running Ubuntu 14.04, with 16 GB of RAM, an i7 and SSDs.

I was using the following resources:

- Install these items from https://github.com/GNS3/

  - gns3-gui

  - gns3-server

  - iouyap

  - dynamips (this is needed even if not using dynamips for IOS)

  - vboxwrapper

  - vpcs (optional, but very handy to test connectivity)

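Then grant dynamips and iouyap the Linux capabilities they need, so they can run without root:

```shell
cd /usr/local/bin
sudo setcap cap_dac_override,cap_net_admin,cap_net_raw+eip dynamips
sudo setcap cap_net_raw,cap_net_admin+eip iouyap
```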

Note the NIC type: it should be MT Server (VirtualBox's “Intel PRO/1000 MT Server” adapter).

Notice […]

CCIE Data Center Written Bootcamp :: December 15th – 19th

We’re excited to announce an upcoming CCIE Data Center Written Bootcamp, beginning December 15th.

It’s going to be delivered via our custom Online-HD-ILT™ training solution (high definition and interactive). Jason, our resident Data Center expert and CCIE DC instructor, will be delivering this specialized bootcamp. Not only is this course a must for CCIE Data Center written candidates (Cisco exam ID 350-080), but it’s also perfect for engineers who are also preparing for their CCIE Data Center lab exam, as they will be able to transition straight into their lab studies. Also, if you’re looking to re-certify an existing CCIE but would like a potential road map into another one, this bootcamp gives you a way to do so.

This course lists at $999, but we’re providing a $500 coupon for individuals who purchase it now – through Monday, November 17th (please use coupon code DCW500BC at checkout).

Also, our annual (1-year and 2-year) iPeverything™ clients will be permitted to attend this course at absolutely no charge! Simply login, go to the schedule page within your Member’s Area, and register.

Note: This course will begin at 9 AM EST, and will last 5 to 8 hours per […]

PlexxiPulse—A Big Week for Plexxi and a New Era in IT

If you’ve been following Plexxi in the news and on social media, you will have seen that we announced our new CEO, Rich Napolitano, who comes to us from EMC to continue the company’s expansion and help lead Plexxi into a new era of IT. Rich has been a friend of Plexxi’s for a while; he sat on our Board of Directors before taking on his current role as CEO. You can read Rich’s first blog post on why he joined Plexxi here. We also announced this week that Tim Lieto has been named senior vice president of sales and customer service to lead the company’s worldwide sales and channel effort. It sure is an exciting time for Plexxi, and we’re thrilled to have both Rich and Tim on board!

In this week’s PlexxiTube of the week, Dan Backman explains how Plexxi’s Big Data fabric solution is managed.

Jim Duffy wrote an interesting piece in Network World this week questioning whether Cisco and Arista should develop versions of their operating systems for bare metal hardware (similar to Cumulus). The industry shift that is taking place here is actually very simple. Research and development spend reflects where the value and […]

Cisco ISR: Enable Features, No Performance Hit?

Last month I visited Interop NYC 2014 as a guest of Tech Field Day Extra! where our group was given a presentation about the new Cisco ISR routers by Matt Bolick, a Technical Marketing Engineer for Cisco.

The Integrated Service Routers (ISRs) themselves seem pretty feature packed, covering four key areas:

- Transport independence (DMVPN)

- Intelligent Path Control (PfR v3)

- Application Optimization (WAN optimization, ADC and WAAS)

- Secure Connectivity (Scalable, strong encryption, IPS, web filtering, etc.)

Rather than reinventing the wheel, Matt explained, the idea was to use existing protocols in a useful new way: in this case, to offer secure hybrid transport across MPLS and the Internet for private cloud and DC access, probably ultimately moving to Internet-only connectivity based on the shift Cisco has seen in how corporations view their branch offices (and specifically how much they want to reduce costs!).

So far so cool, but I figure you can look up all the specifications and features for yourselves so I won’t bore you with much more of that here. There was something else that tickled me though.

ISR Performance Figures

The new routers have some interesting performance claims:

- 4321 = 50–100 Mbps

- 4331 […]

4 Inevitable Questions When Joining a Monitoring Group, Pt. 1

Leon Adato, Technical Product Marketing Manager with SolarWinds, is our guest blogger today, with a sponsored post on the topic of alerting.

The Four Questions

For people who are interested in monitoring, there is a leap that you make when you go from watching systems that YOU care about, to monitoring systems that other people […]

The post 4 Inevitable Questions When Joining a Monitoring Group, Pt. 1 appeared first on Packet Pushers Podcast and was written by Sponsored Blog Posts.

Friday News Analysis: Arkin Net, Pica8, OpenDaylight, Plexxi, Juniper

Arkin Net Rises from Stealth with $7M in funding, to bring Google Search-like Simplicity to Software-Defined Datacenter Operations: “Arkin Net is coming out of stealth mode with $7 million in funding, led by Nexus Venture Partners, to revolutionize the Software-Defined Networking (SDN) market – projected to be $8B by 2018. Arkin Net is […]

The Roost Stand Discount Code

Original content from Roger's CCIE Blog Tracking the journey towards getting the ultimate Cisco Certification. The Routing & Switching Lab Exam

Since my first post on the Roost Stand, I have been overwhelmed with comments on my new portable laptop stand; the most common question is where I bought it. The great guys at Roost have given me a Roost Stand Discount Code that you can use at checkout to get a 10% discount. If […]

HP IMC TACACS Authentication Manager – AD/LDAP link

This post gives an overview of integrating the IMC TAM module with an Active Directory LDAP server. The goal of this configuration is to ensure that members of a specific Active Directory group (for example g_networkadmins) are granted a …

iPexpert’s Newest “CCIE Wall of Fame” Additions 11/14/2014

This was a great week, in our opinion! As Andy puts the final touches on his CCIE Collaboration product portfolio, with a few classes under his belt, we’re beginning to see students pass their Collaboration lab. And of course, Jeff is still cranking out Wireless success stories!

Please join us in congratulating the following iPexpert clients who have passed their CCIE lab!

- Rashmi Patel, CCIE #44921 (Collaboration)

- Jonathan Woloshyn, CCIE #45422 (Collaboration)

- Istvan Czobor, CCIE #45345 (Wireless)

- Nitin Chopra, CCIE #45371 (Wireless)

This Week’s CCIE Testimonials

Rashmi Patel, CCIE #44921 Wrote:

“I’d like to thank Andy and iPexpert for their CCIE Collaboration study materials and bootcamp! I used iPexpert’s Ultimate Self-Study Bundle (for the CCIE Collaboration lab). I also attended iPexpert’s 5-Day Bootcamp in Chicago. Andy is a great instructor, he helped me understand the key technical areas within the lab blueprint.”

We Want to Hear From You!

Have you passed your CCIE lab exam and used any of iPexpert’s or Proctor Labs self-study products, or attended a CCIE Bootcamp? If so, we’d like to add you to our CCIE Wall of Fame!

Viptela SEN: Hybrid WAN Connectivity with an SDN Twist

Like many of us, Khalid Raza wasted countless hours sitting in meetings discussing hybrid WAN connectivity designs using a random combination of DMVPN, IPsec, PfR, and one or more routing protocols… and decided to try to create a better solution to the problem.

Viptela Secure Extensible Network (SEN) doesn’t try to solve every networking problem ever encountered, which is why it’s simpler to use in the use case it is designed to solve: multi-provider WAN connectivity.

[…]

Cumulus in the Campus?

Recently I’ve been idly speculating about how campus networking could be shaken up, with different cost and management models. A few recent podcasts have inspired some thoughts on how Cumulus Networks might fit into this.

In response to a PacketPushers podcast on HP Network Management, featuring yours truly, Kanat asks:

For me the benchmark of network management so far is Meraki Dashboard – stupid simple and feature rich…

Yes – it’s a niche product that only focuses on Campus scenarios, Yes – it only supports proprietary HW. But it offers pretty much everything network operator needs – detailed visibility, traffic policy engine with L7 capability, MDM and you can hit it and go full speed right away. How long will it take HP to achieve that level of simplicity/usability?

He’s right about the Meraki dashboard. It’s fantastic. Fast to get set up, easy to use, it’s what others should aspire to. But there’s a catch: It only works with Meraki hardware. Keep paying your monthly bills, and all is well. But what if you’ve got non-Meraki hardware? Or what if you decide you don’t want to pay Meraki any more? What if Meraki goes out of business (unlikely, but still […]